CalCOFI Data Management, White Paper. Karen I

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Dynamic Habitat Use of Albacore and Their Primary Prey Species in the California Current System, CalCOFI Rep., Vol

MUHLING ET AL.: DYNAMIC HABITAT USE OF ALBACORE AND THEIR PRIMARY PREY SPECIES IN THE CALIFORNIA CURRENT SYSTEM CalCOFI Rep., Vol. 60, 2019 DYNAMIC HABITAT USE OF ALBACORE AND THEIR PRIMARY PREY SPECIES IN THE CALIFORNIA CURRENT SYSTEM BARBARA MUHLING, STEPHANIE BRODIE, MICHAEL JACOX OWYN SNODGRASS, DESIREE TOMMASI NOAA Earth System Research Laboratory University of California, Santa Cruz Boulder, CO Institute for Marine Science Santa Cruz, CA CHRISTOPHER A. EDWARDS ph: (858) 546-7197 Ocean Sciences Department [email protected] University of California, Santa Cruz, CA BARBARA MUHLING, OWYN SNODGRASS, YI XU HEIDI DEWAR, DESIREE TOMMASI, JOHN CHILDERS Department of Fisheries and Oceans NOAA Southwest Fisheries Science Center Delta, British Columbia, Canada San Diego, CA STEPHANIE SNYDER STEPHANIE BRODIE, MICHAEL JACOX Thomas More University, NOAA Southwest Fisheries Science Center Crestview Hills, KY Monterey, CA ABSTRACT Juvenile north Pacific albacore (Thunnus alalunga) forage in the California Current System (CCS), supporting fisheries between Baja California and British Columbia. Within the CCS, their distribution, abundance, and foraging behaviors are strongly variable interannually. Here, we use catch logbook data and trawl survey records to investigate how juvenile albacore in the CCS use their oceanographic environment, and how their distributions overlap with the habitats of four key forage species. ... peiods, krill, and some cephalopods (Smith et al. 2011). Many of these forage species are fished commercially, but also support higher-order predators further up the food chain, such as other exploited species (e.g., tunas, billfish) and protected resources (e.g., marine mammals and seabirds) (Pikitch et al. 2004; Link and Browman 2014). Effectively managing marine ecosystems to preserve these trophic linkages, and improve robustness of management strategies to environmental variability, thus

California Sea Lion Interaction and Depredation Rates with the Commercial Passenger Fishing Vessel Fleet Near San Diego

HANAN ET AL.: SEA LIONS AND COMMERCIAL PASSENGER FISHING VESSELS CalCOFI Rep., Vol. 30, 1989 CALIFORNIA SEA LION INTERACTION AND DEPREDATION RATES WITH THE COMMERCIAL PASSENGER FISHING VESSEL FLEET NEAR SAN DIEGO DOYLE A. HANAN, LISA M. JONES, California Department of Fish and Game, c/o Southwest Fisheries Center, P.O. Box 271, La Jolla, California 92038; ROBERT B. READ, California Department of Fish and Game, 1350 Front Street, Room 2006, San Diego, California 92101 ABSTRACT California sea lions depredate sport fish caught by anglers aboard commercial passenger fishing vessels. During a statewide survey in 1978, San Diego County was identified as the area with the highest rates of interaction and depredation by sea lions. Subsequently, the California Department of Fish and Game began monitoring the rates and conducting research on reducing them. The sea lion interaction and depredation rates for San Diego County declined from 1984 to 1988. RESUMEN Los leones marinos en California depredan peces colectados por pescadores a bordo de embarca- ... anchovies, Engraulis mordax, which are thrown from the fishing boat to lure surface and mid-depth fish within casting range. Although depredation behavior has not been observed along the California coast among other marine mammals, it has become a serious problem with sea lions, and is less serious with harbor seals. The behavior is generally not appreciated by the boat operators or the anglers, even though some crew and anglers encourage it by hand-feeding the sea lions. During depredation, sea lions usually surface some distance from the boat, dive to swim under the boat, take a fish, and then reappear a safe distance away to eat the fish, tear it apart, or just throw it around on the surface of the water.

Frontmatter Final.Qxd

COMMITTEE REPORT CalCOFI Rep., Vol. 49, 2008 Part I REPORTS, REVIEW, AND PUBLICATIONS REPORT OF THE CALCOFI COMMITTEE NOAA HIGHLIGHTS CalCOFI Cruises The CalCOFI program completed its fifty-eighth year with four successful quarterly cruises. All four cruises were manned by personnel from both the NOAA Fisheries Southwest Fisheries Science Center (SWFSC) and Scripps Institution of Oceanography (SIO). The fall 2007 cruise was conducted on the SIO RV New Horizon and covered the southern lines of the CalCOFI pattern. The winter and spring 2007 cruises were conducted on the NOAA RV David Starr Jordan. The Jordan covered lines 93 to line 60 just north of San Francisco. The summer 2007 cruise was on the SIO RV New Horizon, and covered the standard CalCOFI pattern. Standard CalCOFI protocols were followed during the four quarterly cruises. ... The David Starr Jordan will conduct operations in the Southern California Bight in San Diego and stations from Point Conception north to San Francisco, California. The Miller Freeman will synoptically conduct similar operations over the northern section of the CCE, in Port Townsend, Washington, and south to San Francisco. An additional California Current Ecosystem survey will be conducted in July 2008. CalCOFI Ichthyoplankton Update The SWFSC Ichthyoplankton Ecology group made progress on a multi-year project to update larval fish identifications to current standards from 1951 to the present, which will ultimately provide taxonomic consistency throughout the CalCOFI ichthyoplankton time series. The group updated samples collected during the

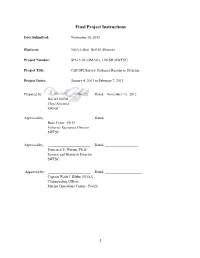

Final Project Instructions

Final Project Instructions. Date Submitted: November 16, 2012. Platform: NOAA Ship Bell M. Shimada. Project Number: SH-13-01 (OMAO), 1301SH (SWFSC). Project Title: CalCOFI Survey, Fisheries Resources Division. Project Dates: January 4, 2013 to February 7, 2013. Prepared by: David Griffith, Chief Scientist, SWFSC (dated November 16, 2012). Approved by: Russ Vetter, Ph.D., Fisheries Resources Director, SWFSC. Approved by: Francisco E. Werner, Ph.D., Science and Research Director, SWFSC. Approved by: Captain Wade J. Blake, NOAA, Commanding Officer, Marine Operations Center - Pacific. I. Overview A. Brief Summary and Project Period: Survey the distributions and abundances of pelagic fish stocks, their prey, and their biotic and abiotic environments in the area of the California Current between San Francisco, California and San Diego, California during the period of January 9 to February 2, 2013. B. Service Level Agreements: Of the 33 DAS scheduled for this project, 0 DAS are funded by the program and 33 DAS are funded by OMAO. This project is estimated to exhibit a medium Operational Tempo. C. Operating Area D. Summary of Objectives: Survey the distributions and abundances of pelagic fish stocks, their prey, and their biotic and abiotic environments in the area of the California Current between San Francisco, California and San Diego, California. The following are specific objectives for the Winter CalCOFI. I.D.1. Continuously sample pelagic fish eggs using the Continuous Underway Fish Egg Sampler (CUFES). The data will be used to estimate the distributions and abundances of spawning hake, anchovy, mackerel, and early spawning Pacific sardine.

Views Concerning Use of the Living Resources of the California Current

Calif. Mar. Res. Comm., CalCOFI Rept., 13: 91-94, 1969 VIEWS CONCERNING USE OF THE LIVING RESOURCES OF THE CALIFORNIA CURRENT GERALD V. HOWARD, Regional Director, Pacific Southwest Region, Bureau of Commercial Fisheries, Terminal Island, California I have been asked to express the views of the Bureau of Commercial Fisheries concerning the legal, economic, sociological and technological problems impeding the best use of the living resources of the California Current and how they can be resolved. The impediments are generally rather well known. It is their resolution which presents the challenge. A major objective of the programs of the Bureau of Commercial Fisheries is to seek the resolution of problems which handicap the economic well-being of the domestic fishing industry and hinder the best use of the fishery resources. Success of Bureau research and service programs, however, depends on close collaboration and cooperation with other parties, especially State agencies. ... “wetfish” fisheries which harvest mackerels, anchovies and bonito; and the “bottomfish” fisheries which harvest, though not exclusively, species taken by trawling. Californians generally do not think of the tropical tunas as a resource of the California Current, probably because they occur in its southern extension off Baja California and rarely in commercial quantities off southern California. I have included them not only because they support California’s most important fishery, but because the long-range tuna fleet’s experience in overcoming economic difficulties has been more successful than that of other elements of the California fishing fleet. I especially wish to mention

A Historical Review of Fisheries Statistics and Environmental and Societal Influences Off the Palos Verdes Peninsula, California

STULL ET AL.: PALOS VERDES SPORT AND COMMERCIAL FISHERY CalCOFI Rep., Vol. XXVIII, 1987 A HISTORICAL REVIEW OF FISHERIES STATISTICS AND ENVIRONMENTAL AND SOCIETAL INFLUENCES OFF THE PALOS VERDES PENINSULA, CALIFORNIA JANET K. STULL, KELLY A. DRYDEN, Los Angeles County Sanitation Districts, P.O. Box 4998, Whittier, California 90607; PAUL A. GREGORY, California Department of Fish and Game, 245 West Broadway, Long Beach, California 90802 ABSTRACT A synopsis of partyboat and commercial fish and invertebrate catches is presented for the Palos Verdes region. Fifty years (1936-85) of partyboat catch, in numbers of fish and angler effort, and 15 years (1969-83) of commercial landings, in pounds, are reviewed. Several hypotheses are proposed to explain fluctuations in partyboat (commercial passenger fishing vessel) and commercial fishery catches. Where possible, comparisons are drawn to relate catch information to environmental and societal influences. This report documents trends in historical resource use and fish consumption patterns, and is useful for regional fisheries management. The status of several species has improved since the early to mid-1970s. ... peración ambiental significativa y puede estar asociado con una reducción en la contaminación del medio ambiente marino costero. INTRODUCTION Our goals were to summarize long-term fish and invertebrate catch statistics gathered by the California Department of Fish and Game (CDFG) for the Palos Verdes Peninsula, to infer relative fish abundance and human consumption rates, and, where possible, to better understand influences from natural and human environmental perturbations. We examined total catches and common and economically important species, in addition to species with reported elevated body burdens of con-

Physical Forcing on Fish Abundance in the Southern California Current System

Received: 19 May 2016 | Accepted: 8 December 2017 DOI: 10.1111/fog.12267 ORIGINAL ARTICLE Physical forcing on fish abundance in the southern California Current System Lia Siegelman-Charbit1 | J. Anthony Koslow2 | Michael G. Jacox3,4 | Elliott L. Hazen3 | Steven J. Bograd3 | Eric F. Miller5 1 Université Pierre et Marie Curie, Paris, France; 2 Scripps Institution of Oceanography, University of California, San Diego, La Jolla, CA, USA; 3 National Oceanic and Atmospheric Administration, Monterey, CA, USA; 4 Institute of Marine Sciences, University of California, Santa Cruz, CA, USA; 5 MBC Applied Environmental Sciences, Costa Mesa, CA, USA. Correspondence: L. Siegelman-Charbit, Email: [email protected] Abstract The California Current System (CCS) is an eastern boundary current system with strong biological productivity largely due to seasonal wind-driven upwelling and transport of the California Current (CC). Two independent, yet complementary time series, CalCOFI ichthyoplankton surveys and sampling of southern California power plant cooling-water intakes, have indicated that an assemblage of predominantly cool-water affinity fishes spanning nearshore to oceanic environments in the southern CCS has declined dramatically from the 1970s to the 2000s. We examined potential oceanographic drivers behind this decline both within and north of the CalCOFI survey area in order to capture upstream processes as well. Empirical orthogonal function (EOF) analyses using output from a data-assimilative regional ocean model revealed significant relationships

Artificial Reefs: Nothing More Than Benthic Fish Aggregators Jeffrey J

POLOVINA: ARTIFICIAL REEFS: BENTHIC FISH AGGREGATORS CalCOFI Rep., Vol. 30, 1989 ARTIFICIAL REEFS: NOTHING MORE THAN BENTHIC FISH AGGREGATORS JEFFREY J. POLOVINA, Southwest Fisheries Center Honolulu Laboratory, National Marine Fisheries Service, NOAA, 2570 Dole Street, Honolulu, Hawaii 96822-2396 ABSTRACT The potential for artificial reefs to substantially increase standing stock of marine resources is considered. Three sources - the Japanese artificial reef program; relationships between fishery production and the area of natural habitat for several fisheries; and population dynamics - offer evidence that artificial reefs do not substantially increase the standing stock of marine resources. RESUMEN Se considera el potencial de los arrecifes artificiales para aumentar sustancialmente el stock disponible de los recursos marinos. Tres fuentes de evidencia: el programa de arrecifes artificiales japonés, la relación entre producción y área del hábi- ... lieve the real benefit of the reefs is that they aggregate wide-ranging fishes close to shore so they can be harvested by fishermen with small vessels and thus keep the fleet of small vessels economically viable. Outside of Japan, artificial reefs have not been deployed on a large enough scale to evaluate their effectiveness in increasing standing stocks. However, examining the relationship between habitat and fishery production can provide estimates of the level of fishery yield per area of habitat that might be expected from appropriately designed and sited artificial reefs. One example is penaeid shrimp: worldwide fishery yields range from 8 to 200 kg/hectare of intertidal nursery habitat (Turner 1977). In the case of artificial reefs, yields are measured in production per unit of reef volume.

End-to-End Modeling of Sardine and Anchovy in the California Current System, CalCOFI Rep., Vol

ROSE ET AL.: END-TO-END MODELING OF SARDINE AND ANCHOVY IN THE CALIFORNIA CURRENT SYSTEM CalCOFI Rep., Vol. 56, 2015 END-TO-END MODELING OF SARDINE AND ANCHOVY IN THE CALIFORNIA CURRENT SYSTEM KENNETH A. ROSE, SEAN CREEKMORE MIGUEL BERNAL DAVE CHECKLEY Department of Oceanography General Fisheries Commission Scripps Institution of Oceanography and Coastal Sciences for the Mediterranean (GFCM) University of California, San Diego Louisiana State University FAO of the United Nations 9500 Gilman Drive Baton Rouge, LA 70803 Fisheries and Aquaculture Department La Jolla, CA 92093 ph: (225) 578-6346 Palazzo Blumenstihl [email protected] fax: (225) 578-6513 Via Vittoria Colonna 1 - 00193, Rome, Italy [email protected] [email protected] TONY KOSLOW [email protected] Scripps Institution of Oceanography, CalCOFI ALAN HAYNIE, 9500 Gilman Drive, Department 0218 JEROME FIECHTER BERNARD A. MEGREY (deceased) La Jolla, CA 92093 Institute of Marine Sciences Alaska Fisheries Science Center-NOAA [email protected] University of California, Santa Cruz 7600 Sand Point Way NE Santa Cruz, CA 95064 Seattle, WA 98115 SAM MCCLATCHIE, [email protected] [email protected] FRANCISCO WERNER Southwest Fisheries Science Center-NOAA ENRIQUE N. CURCHITSER SHIN-ICHI ITO 8604 La Jolla Shores Drive Department of Environmental Sciences Atmosphere and Ocean Research Institute La Jolla, CA 92037-1508 and Institute of Marine and Coastal Sciences University of Tokyo [email protected] Rutgers University Kashiwa-city, Chiba 277-8564, Japan [email protected] 14 College Farm Road [email protected] New Brunswick, NJ 08901 ALEC MacCALL [email protected] SALVADOR LLUCH-COTA Southwest Fisheries Science Center-NOAA CIBNOR 110 Shaffer Road KATE HEDSTROM Mar Bermejo #195 Santa Cruz, CA 95060 Institute of Marine Science Col.

Distribution of Larval Squid, Loligo opalescens, in Various Nearshore Locations

RECKSIEK AND KASHIWADA: DISTRIBUTION OF NEARSHORE LOLIGO OPALESCENS CalCOFI Rep., Vol. XX, 1979 DISTRIBUTION OF LARVAL SQUID, LOLIGO OPALESCENS, IN VARIOUS NEARSHORE LOCATIONS CONRAD W. RECKSIEK AND JERRY KASHIWADA, Moss Landing Marine Laboratories, P.O. Box 223, Moss Landing, CA 95039 ABSTRACT Three distinct sampling efforts employing different plankton-collecting gears aimed at collecting California market squid, Loligo opalescens, in nearshore waters from San Diego to Monterey Bay are described. Larval Loligo opalescens occurrence in the hauls was low and patchy, corroborating the experience of past workers. Over the Monterey spawning grounds, the use of a specially designed bottom-fishing plankton net was found to be more generally effective than the other gears. INTRODUCTION The early life history of Loligo opalescens is unclear. Fields (1965) suggests that the newly hatched larvae may be carried long distances by coastal currents. ... estimated using an inclinometer and metered block. Mesh size decreased from about 6 mm at the mouth to a cod-end liner of 1 mm. This gear was fished from Moss Landing Marine Laboratories research vessels Artemia and Oconostota. Nearshore oblique tows in the upper 100 m were taken from San Diego to Morro Bay (Figure 1) in an attempt to define geographical concentrations of larvae. These tows were made with a continuously open net similar in design to that used in Monterey Bay but with slightly larger mesh. In the case of operation in shallow water, the maximum safe operation depth was shifted upward. In general, the net was allowed no lower than about 25 m from the bottom. Mesh sizes decreased from 9 mm to a cod-

Scientific Research and the Twentieth-Century Fishing Industry

McEVOY: SCIENTIFIC RESEARCH AND THE 20TH-CENTURY FISHING INDUSTRY CalCOFI Rep., Vol. XXIII, 1982 SCIENTIFIC RESEARCH AND THE TWENTIETH-CENTURY FISHING INDUSTRY ARTHUR F. McEVOY The 1981-82 California Sea Grant College Program directory includes two projects devoted to the history of public policymaking for the fisheries. This indicates, I think, a new and salutary trend in the study and management of natural resources in the public domain. Long isolated in their various specializations, scientists and scholars interested in natural resources have, since the 1960s, begun to look across disciplinary boundaries for new perspectives on problems that draw their common concern. They have also begun to examine their own role in the making of policy, analyzing the ways in which scientists and other scholars, successfully or not, have tried at various stages in our history to contribute their expertise to developing government policy for resource use. ... Among natural scientists, Ciriacy-Wantrup set a similar example in a 1952 text on resource conservation, defining conservation as a dynamic process influenced by social, economic, and political institutions as much as by the character of the resources themselves. More recently, Richard A. Walker admonished us not to forget that we do not address resource policy questions innocent of history, politics, or of “commitments to pre-existing threads of ideology passed down from those who have grappled with similar problems before.” Walker trenchantly observed that effective resource management requires the understanding and manipulation of the human processes that lead people to use nature in particular

Trends in the Southern California Sport Fishery, CalCOFI Rep., Vol

DOTSON AND CHARTER: TRENDS IN THE SOUTHERN CALIFORNIA SPORT FISHERY CalCOFI Rep., Vol. 44, 2003 TRENDS IN THE SOUTHERN CALIFORNIA SPORT FISHERY RONALD C. DOTSON, RICHARD L. CHARTER Southwest Fisheries Science Center, National Marine Fisheries Service, NOAA, 8604 La Jolla Shores Drive, La Jolla, California 92037 [email protected] ABSTRACT We constructed a database for the Southern California commercial passenger fishing vessel (CPFV) fleet for the years 1959–1998 using the daily fish reports from the Los Angeles Times. This database includes information on number of fish caught by species, landing port, landing date, and number of anglers. Comparison of this database to the logbook database maintained by the California Department of Fish and Game shows high correlation. Angler effort has been consistent throughout the time series at about 620,000 passengers per year. The annual fish catch averaged 4.25 million fish from 1963 to 1991 but has declined since 1992 to 2.5 million fish ... and the stocks on which it depends. The Los Angeles Times usually reports each day’s landings for most fishing ports, the number of CPFVs reporting landings on that day, the total catch numbers by species or species group, and the total number of fishing passengers. In 1997 Charles Mitchell (of MBC Applied Environmental Sciences) was awarded a Saltonstall-Kennedy Grant (NA76FD0050) to enter 40 years of the Los Angeles Times’ marine fish reports into a database to make this information available for fishery researchers in California. The Southwest Fisheries Science Center, National Marine Fisheries Service, assumed responsibility for updating and maintaining the database after Mitchell completed his grant project.