2013 5th International Conference on Cyber Conflict

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Statistical Structures: Fingerprinting Malware for Classification and Analysis

Statistical Structures: Fingerprinting Malware for Classification and Analysis. Daniel Bilar, Wellesley College (Wellesley, MA), Colby College (Waterville, ME), bilar <at> alum dot dartmouth dot org

Why Structural Fingerprinting?
Goal: Identifying and classifying malware.
Problem: For any single fingerprint, a balance between over-fitting (type II error) and under-fitting (type I error) is hard to achieve.
Approach: View binaries simultaneously from different structural perspectives and perform statistical analysis on these ‘structural fingerprints’.

Different Perspectives
Idea: Multiple perspectives may increase the likelihood of correct identification and classification.

| Structural perspective | Description | Statistical fingerprint | Static / dynamic? |
| --- | --- | --- | --- |
| Assembly instruction | Count different instructions | Opcode frequency distribution | Primarily static |
| Win 32 API call | Observe API calls made | API call vector | Primarily dynamic |
| System Dependence Graph | Explore graph-modeled control and data dependencies | Graph structural properties | Primarily static |

Fingerprint: Opcode frequency distribution
Synopsis: Statically disassemble the binary, tabulate the opcode frequencies, and construct a statistical fingerprint with a subset of said opcodes.
Goal: Compare opcode fingerprints across non-malicious software and malware classes for quick identification and classification purposes.
Main result: ‘Rare’ opcodes explain more data variation than common ones.

Goodware: Opcode Distribution
Procedure:
1. Inventoried PEs (EXE, DLL, etc) on XP box with Advanced Disk Catalog
2. Chose random EXE samples with MS Excel and Index your Files
3. Ran IDA with modified InstructionCounter plugin on sample PEs
4. Augmented IDA output files with PEID results (compiler) and general ‘functionality

Sample output:
---------.exe
size: 122880
totalopcodes: 10680
compiler: MS Visual C++ 6.0
class: utility (process)
0001. 002145 20.08% mov
0002. 001859 17.41% push
0003. 000760 7.12% call
0004. -
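The opcode-frequency tabulation described in this excerpt can be sketched roughly as follows. This is a minimal illustration, not the author's IDA plugin: it assumes the disassembly step has already produced a flat list of opcode mnemonics, and the function name `opcode_fingerprint`, the `top_n` parameter, and the sample data are hypothetical.

```python
from collections import Counter


def opcode_fingerprint(mnemonics, top_n=3):
    """Tabulate opcode frequencies from a list of mnemonics.

    Returns (rank, count, percent, opcode) rows for the top_n most
    frequent opcodes. The input list is assumed to come from a
    disassembler; producing it is outside the scope of this sketch.
    """
    counts = Counter(m.lower() for m in mnemonics)
    total = sum(counts.values())
    rows = []
    for rank, (opcode, count) in enumerate(counts.most_common(top_n), start=1):
        rows.append((rank, count, round(100.0 * count / total, 2), opcode))
    return rows


# Hypothetical toy input, not data from the slides.
sample = ["mov", "push", "mov", "call", "mov", "push", "ret", "mov"]
for rank, count, pct, opcode in opcode_fingerprint(sample):
    # Mirrors the "rank. count percent opcode" layout of the excerpt.
    print(f"{rank:04d}. {count:06d} {pct:5.2f}% {opcode}")
```

Comparing such frequency tables across goodware and malware classes would then be a separate statistical step, which this sketch does not attempt.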

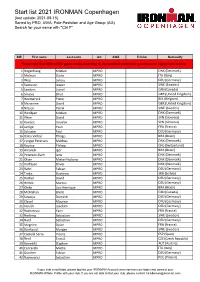

Start List 2021 IRONMAN Copenhagen (Last Update: 2021-08-15) Sorted by PRO, AWA, Pole Position and Age Group (AG) Search for Your Name with "Ctrl F"

Start list 2021 IRONMAN Copenhagen (last update: 2021-08-15) Sorted by PRO, AWA, Pole Position and Age Group (AG) Search for your name with "Ctrl F" BIB First name Last name AG AWA TriClub Nationality Please note that BIBs will be given onsite according to the swim time you select at onsite registration. 1 Hogenhaug Kristian MPRO DNK (Denmark) 2 Molinari Giulio MPRO ITA (Italy) 3 Wojt Lukasz MPRO DEU (Germany) 4 Svensson Jesper MPRO SWE (Sweden) 5 Sanders Lionel MPRO CAN (Canada) 6 Smales Elliot MPRO GBR (United Kingdom) 7 Heemeryck Pieter MPRO BEL (Belgium) 8 Mcnamee David MPRO GBR (United Kingdom) 9 Nilsson Patrik MPRO SWE (Sweden) 10 Hindkjær Kristian MPRO DNK (Denmark) 11 Plese David MPRO SVN (Slovenia) 12 Kovacic Jaroslav MPRO SVN (Slovenia) 14 Jarrige Yvan MPRO FRA (France) 15 Schuster Paul MPRO DEU (Germany) 16 Dário Vinhal Thiago MPRO BRA (Brazil) 17 Lyngsø Petersen Mathias MPRO DNK (Denmark) 18 Koutny Philipp MPRO CHE (Switzerland) 19 Amorelli Igor MPRO BRA (Brazil) 20 Petersen-Bach Jens MPRO DNK (Denmark) 21 Olsen Mikkel Hojborg MPRO DNK (Denmark) 22 Korfitsen Oliver MPRO DNK (Denmark) 23 Rahn Fabian MPRO DEU (Germany) 24 Trakic Strahinja MPRO SRB (Serbia) 25 Rother David MPRO DEU (Germany) 26 Herbst Marcus MPRO DEU (Germany) 27 Ohde Luis Henrique MPRO BRA (Brazil) 28 McMahon Brent MPRO CAN (Canada) 29 Sowieja Dominik MPRO DEU (Germany) 30 Clavel Maurice MPRO DEU (Germany) 31 Krauth Joachim MPRO DEU (Germany) 32 Rocheteau Yann MPRO FRA (France) 33 Norberg Sebastian MPRO SWE (Sweden) 34 Neef Sebastian MPRO DEU (Germany) 35 Magnien Dylan MPRO FRA (France) 36 Björkqvist Morgan MPRO SWE (Sweden) 37 Castellà Serra Vicenç MPRO ESP (Spain) 38 Řenč Tomáš MPRO CZE (Czech Republic) 39 Benedikt Stephen MPRO AUT (Austria) 40 Ceccarelli Mattia MPRO ITA (Italy) 41 Günther Fabian MPRO DEU (Germany) 42 Najmowicz Sebastian MPRO POL (Poland) If your club is not listed, please log into your IRONMAN Account (www.ironman.com/login) and connect your IRONMAN Athlete Profile with your club. -

Legacy Image

SOFTWARE ENGINEERING LABORATORY SERIES SEL-95-004 PROCEEDINGS OF THE TWENTIETH ANNUAL SOFTWARE ENGINEERING WORKSHOP DECEMBER 1995 National Aeronautics and Space Administration Goddard Space Flight Center Greenbelt, Maryland 20771 Proceedings of the Twentieth Annual Software Engineering Workshop November 29-30, 1995 GODDARD SPACE FLIGHT CENTER Greenbelt, Maryland

The Software Engineering Laboratory (SEL) is an organization sponsored by the National Aeronautics and Space Administration/Goddard Space Flight Center (NASA/GSFC) and created to investigate the effectiveness of software engineering technologies when applied to the development of applications software. The SEL was created in 1976 and has three primary organizational members: NASA/GSFC, Software Engineering Branch; The University of Maryland, Department of Computer Science; Computer Sciences Corporation, Software Engineering Operation.

The goals of the SEL are (1) to understand the software development process in the GSFC environment; (2) to measure the effects of various methodologies, tools, and models on this process; and (3) to identify and then to apply successful development practices. The activities, findings, and recommendations of the SEL are recorded in the Software Engineering Laboratory Series, a continuing series of reports that includes this document. Documents from the Software Engineering Laboratory Series can be obtained via the SEL homepage at: or by writing to: Software Engineering Branch Code 552 Goddard Space Flight Center Greenbelt, Maryland 20771

The views and findings expressed herein are those of the authors and presenters and do not necessarily represent the views, estimates, or policies of the SEL. All material herein is reprinted as submitted by authors and presenters, who are solely responsible for compliance with any relevant copyright, patent, or other proprietary restrictions. -

The Spirit of Discovery

Foundation «Интеркультура» AFS RUSSIA 2019 THE SPIRIT OF DISCOVERY This handbook belongs to ______________________ From ________________________

Contents
Welcome letter
1. General information about Russia
- Geography and Climate
- History
- Religion
- Language
2. AFS Russia
3. Your life in Russia
- Arrival
- Your host family
- Your host school
- Language course
- AFS activities
* Check yourself, part 1
4. Life in Russia
- General information about Russian Families
- General information about the School system
- Extracurricular activities
- Social life
- Holidays and parties
5. Russian peculiarities
6. Organizational matters

Exploring Corporate Decision Makers' Attitudes Towards Active Cyber

Protecting the Information Society: Exploring Corporate Decision Makers’ Attitudes towards Active Cyber Defence as an Online Deterrence Option by Patrick Neal A Dissertation Submitted to the College of Interdisciplinary Studies in Partial Fulfilment of the Requirements for the Degree of DOCTOR OF SOCIAL SCIENCES Royal Roads University Victoria, British Columbia, Canada Supervisor: Dr. Bernard Schissel February, 2019 Patrick Neal, 2019

COMMITTEE APPROVAL The members of Patrick Neal’s Dissertation Committee certify that they have read the dissertation titled Protecting the Information Society: Exploring Corporate Decision Makers’ Attitudes towards Active Cyber Defence as an Online Deterrence Option and recommend that it be accepted as fulfilling the dissertation requirements for the Degree of Doctor of Social Sciences: Dr. Bernard Schissel [signature on file] Dr. Joe Ilsever [signature on file] Ms. Bessie Pang [signature on file] Final approval and acceptance of this dissertation is contingent upon the candidate’s submission of the final copy of the dissertation to Royal Roads University. The dissertation supervisor confirms to have read this dissertation and recommends that it be accepted as fulfilling the dissertation requirements: Dr. Bernard Schissel [signature on file]

Creative Commons Statement This work is licensed under the Creative Commons Attribution-NonCommercial-ShareAlike 2.5 Canada License. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-sa/2.5/ca/ . Some material in this work is not being made available under the terms of this licence: • Third-Party material that is being used under fair dealing or with permission. • Any photographs where individuals are easily identifiable.

Contents Creative Commons Statement -

Getting to Yes with China in Cyberspace

Getting to Yes with China in Cyberspace Scott Warren Harold, Martin C. Libicki, Astrid Stuth Cevallos For more information on this publication, visit www.rand.org/t/rr1335 Library of Congress Cataloging-in-Publication Data ISBN: 978-0-8330-9249-6 Published by the RAND Corporation, Santa Monica, Calif. © Copyright 2016 RAND Corporation R® is a registered trademark Cover Image: US President Barack Obama (R) shakes hands with Chinese president Xi Jinping after a press conference in the Rose Garden of the White House September 25, 2015 in Washington, DC. President Obama is welcoming President Jinping during a state arrival ceremony. Photo by Olivier Douliery/ABACA (Sipa via AP Images). Limited Print and Electronic Distribution Rights This document and trademark(s) contained herein are protected by law. This representation of RAND intellectual property is provided for noncommercial use only. Unauthorized posting of this publication online is prohibited. Permission is given to duplicate this document for personal use only, as long as it is unaltered and complete. Permission is required from RAND to reproduce, or reuse in another form, any of its research documents for commercial use. For information on reprint and linking permissions, please visit www.rand.org/pubs/permissions.html. The RAND Corporation is a research organization that develops solutions to public policy challenges to help make communities throughout the world safer and more secure, healthier and more prosperous. RAND is nonprofit, nonpartisan, and committed to the public interest. RAND’s publications do not necessarily reflect the opinions of its research clients and sponsors. -

GQ: Practical Containment for Measuring Modern Malware Systems

GQ: Practical Containment for Measuring Modern Malware Systems. Christian Kreibich (ICSI & UC Berkeley), Nicholas Weaver (ICSI & UC Berkeley), Chris Kanich (UC San Diego), Weidong Cui (Microsoft Research), Vern Paxson (ICSI & UC Berkeley)

Abstract: Measurement and analysis of modern malware systems such as botnets relies crucially on execution of specimens in a setting that enables them to communicate with other systems across the Internet. Ethical, legal, and technical constraints however demand containment of resulting network activity in order to prevent the malware from harming others while still ensuring that it exhibits its inherent behavior. Current best practices in this space are sorely lacking: measurement researchers often treat containment superficially, sometimes ignoring it altogether. In this paper we present GQ, a malware execution “farm” that uses explicit containment prim-

... their behavior, sometimes only for seconds at a time (e.g., to understand the bootstrapping behavior of a binary, perhaps in tandem with static analysis), but potentially also for weeks on end (e.g., to conduct long-term botnet measurement via “infiltration” [13]). This need to execute malware samples in a laboratory setting exposes a dilemma. On the one hand, unconstrained execution of the malware under study will likely enable it to operate fully as intended, including embarking on a large array of possible malicious activities, such as pumping out spam, contributing to denial-of-service floods, conducting click fraud, or obscuring other attacks by proxying malicious traffic. -

How to Analyze the Cyber Threat from Drones

How to Analyze the Cyber Threat from Drones: Background, Analysis Frameworks, and Analysis Tools. Katharina Ley Best, Jon Schmid, Shane Tierney, Jalal Awan, Nahom M. Beyene, Maynard A. Holliday, Raza Khan, Karen Lee. For more information on this publication, visit www.rand.org/t/RR2972 Library of Congress Cataloging-in-Publication Data is available for this publication. ISBN: 978-1-9774-0287-5 Published by the RAND Corporation, Santa Monica, Calif. © Copyright 2020 RAND Corporation R® is a registered trademark. Cover design by Rick Penn-Kraus Cover images: drone, Kadmy - stock.adobe.com; data, Getty Images. Limited Print and Electronic Distribution Rights This document and trademark(s) contained herein are protected by law. This representation of RAND intellectual property is provided for noncommercial use only. Unauthorized posting of this publication online is prohibited. Permission is given to duplicate this document for personal use only, as long as it is unaltered and complete. Permission is required from RAND to reproduce, or reuse in another form, any of its research documents for commercial use. For information on reprint and linking permissions, please visit www.rand.org/pubs/permissions. The RAND Corporation is a research organization that develops solutions to public policy challenges to help make communities throughout the world safer and more secure, healthier and more prosperous. RAND is nonprofit, nonpartisan, and committed to the public interest. RAND’s publications do not necessarily reflect the opinions of its research clients and sponsors. Support RAND: Make a tax-deductible charitable contribution at www.rand.org/giving/contribute www.rand.org

Preface: This report explores the security implications of the rapid growth in unmanned aerial systems (UAS), focusing specifically on current and future vulnerabilities. -

Advanced Persistent Threats

Advanced Persistent Threats. September 30, 2010. By: Ali Golshan

Agenda: Current Threat Landscape; The Disconnect; The Risk; What now?

Section 1: Current Threat Landscape
• Context
• Common Targets for APTs
• Recent Attacks
• Shift in Purpose
• Repercussions

Context
Conventional Cyber Attacks
• Conventional cyber attacks use known vulnerabilities to exploit non-specific targets
• Examples include malware (viruses, worms and Trojans), and traditional hacking and cracking methods
Advanced Persistent Threats (APTs)
• There is a new breed of attacks that is being referred to as Advanced Persistent Threats
• APTs are targeted cyber-based attacks using unknown vulnerabilities, customized to extract a specific set of data from a specific organization
• APTs have the following characteristics that make them particularly dangerous:
• Persistent: The persistent nature makes them difficult to extract
• Updatable: The attacker can update the malware so that it continuously evades security solutions even as they are upgraded

APTs target specific organizations to obtain specific information
Since these are specialized attacks, they are customized for their targets, and are designed to extract very specific information based on the target. Most common targets are:
Government agencies
• Government agencies are targeted by Foreign Intelligence Services (FIS)
• APTs can be used for theft of military-level secrets and in cyber warfare for destabilization along with conventional warfare
• A 2007 McAfee report stated that approximately 120 countries are trying to create weaponized internet capabilities
• Example: The Russia-Georgia war of 2008 was the first example of an APT coinciding with conventional warfare. -

Red Teaming the Red Team: Utilizing Cyber Espionage to Combat Terrorism

Journal of Strategic Security, Volume 6, Number 5 (Volume 6, No. 3, Fall 2013 Supplement: Ninth Annual IAFIE Conference: Expanding the Frontiers of Intelligence Education), Article 3, pp. 1-9. Red Teaming the Red Team: Utilizing Cyber Espionage to Combat Terrorism. Gary Adkins, The University of Texas at El Paso.

Recommended Citation: Adkins, Gary. "Red Teaming the Red Team: Utilizing Cyber Espionage to Combat Terrorism." Journal of Strategic Security 6, no. 3 Suppl. (2013): 1-9. Available in Journal of Strategic Security: https://scholarcommons.usf.edu/jss/vol6/iss5/3

Introduction: The world has effectively exited the Industrial Age and is firmly planted in the Information Age. Global communication at the speed of light has become a great asset to both businesses and private citizens. However, there is a dark side to the age we live in as it allows terrorist groups to communicate, plan, fund, recruit, and spread their message to the world. Given the relative anonymity the Internet provides, many law enforcement and security agencies' investigations are hindered in not only locating would-be terrorists but also in disrupting their operations. -

An Email Application with Active Spoof Monitoring and Control

2016 International Conference on Computer Communication and Informatics (ICCCI-2016), Jan. 07-09, 2016, Coimbatore, INDIA. An Email Application with Active Spoof Monitoring and Control. T.P. Fowdur, Member IEEE, and L. Veerasoo, Department of Electrical and Electronic Engineering, University of Mauritius, Mauritius

Abstract: Spoofing is a serious security issue for email applications. Although several anti-email spoofing techniques have been developed, most of them do not provide users with sufficient control and information on spoof attacks. In this paper a web-based client oriented anti-spoofing email application is proposed which actively detects, monitors and controls email spoofing attacks. When the application detects a spoofed message, it triggers an alert message and sends the spoofed message into a spoof filter. Moreover, the user who has received the spoofed message is given the option of notifying the real sender of the spoofing attack. In this way an active spoof control is achieved. The application is hosted using the HTTPS protocol and uses notification messages that are sent in parallel with email messages via a channel that has been secured by the Secure Socket Layer (SSL) protocol.

... overview of some recent anti-spoofing mechanisms is now presented. In [11], the authors proposed an anti-spoofing scheme for IP packets which provides an extended inter-domain packet filter architecture along with an algorithm for filter placement. A security key is first placed in the identification field of the IP header and a border router checks the key on the source packet. If this key corresponds to the key of the target packet, the packet is considered valid, else it is flagged as a spoofed packet. A Packet Resonance Strategy (PRS) which detects different types of spoofing attacks that use up the resources of the server or commit data theft at a datacenter was proposed in -

OCLC Use by Library Clusters. Illinois Valley Library System OCLC Experimental Project Report No. 5

DOCUMENT RESUME
ED 241 063; IR 050 673
AUTHOR: Bills, Linda G.
TITLE: OCLC Use by Library Clusters. Illinois Valley Library System OCLC Experimental Project Report No. 5.
INSTITUTION: Illinois State Library, Springfield.; Illinois Valley Library System, Pekin.
PUB DATE: May 83
GRANT: LSCA-I-79-IX-C
NOTE: 67p.; For related documents, see IR 050 445 and IR 050 665.
PUB TYPE: Legal/Legislative/Regulatory Materials (090) -- Reports - Research/Technical (143)
EDRS PRICE: MF01/PC03 Plus Postage.
DESCRIPTORS: Attitudes; *Cataloging; Cost Effectiveness; Input Output Devices; *Interlibrary Loans; *Library Networks; *Online Systems; Records (Forms); Regional Cooperation; *Shared Services
IDENTIFIERS: *Illinois Valley Library System; Multitype Library Networks; *OCLC; Resource Sharing
ABSTRACT: A project was conducted from 1980 to 1982 to examine the costs and benefits of OCLC use in 29 small and medium-sized member libraries of the Illinois Valley Library System (IVLS). Academic, school, public, and special libraries participated in the project. A major project objective was to determine whether the sharing of an OCLC terminal by two to five libraries in close geographic proximity could significantly cut costs and yet provide acceptable service. This report describes specific cluster work arrangements for cataloging and interlibrary loan, including cataloging at the host library; cataloging from printouts; cataloging by the host library; conducting part of the cataloging procedure at the guest library and the rest at the host library; limiting interlibrary loan activity to lending of materials requested via OCLC; lending and borrowing through the host library; lending and borrowing by the guest library; and dial access interlibrary loan use and shared use of a portable terminal.