Conference Proceedings Are Subject to a Double-Blind Peer Review Process

Recommended publications

Study Abroad

STUDY ABROAD

HOW BOND RATES: OVERALL QUALITY OF EDUCATION, TEACHING QUALITY, LEARNER ENGAGEMENT, LEARNING RESOURCES, STUDENT SUPPORT, SKILLS DEVELOPMENT, STUDENT RETENTION, STUDENT TEACHER RATIO. Rating as of the 2020 Good Universities Guide.

Diverse sporting clubs and activities on campus. Choose from over 2,000 Undergraduate or Postgraduate subjects. Small class sizes (10:1 ratio). Dedicated Study Abroad & Exchange team. World-class academics.

Located in the centre of campus is the Arch Building. The Arch was designed by famous Japanese Architect, Arata Isozaki, whose main inspiration for the free arch design came from the Triumphal Arch of Constantine in Rome.

2021 Study Abroad

WELCOME TO BOND UNIVERSITY’S STUDY ABROAD PROGRAM Living and studying in another country is life changing. You will experience Australia’s culture at a much deeper level than a short visit allows. You will expand your educational horizons, enhancing the standard curriculum with an international perspective. You will share classes, study sessions, coffee breaks and living quarters with students from all over the world, building a global network of life-long friends, business colleagues and academic mentors. As Australia’s first independent, not-for-profit university, Bond is uniquely suited for international Study Abroad students. From the outset, Bond was designed to have an international focus and our permanent cohort includes students and teachers from more than 90 different countries. Specifically, our small classes and low student:teacher ratio will help you settle in and make friends quickly, as well as ensuring you get all the support you need with your studies.

Loneliness and Mental Health Tuesday 30 June 2020 5.30 – 6.45pm AEST

Loneliness and Mental Health Tuesday 30 June 2020 5.30 – 6.45pm AEST Prof Sonia Johnson Professor of Social and Community Psychiatry, UCL Institute of Mental Health Director of the NIHR Mental Health Policy Research Unit for England With commentary from Hugh Mackay AO Social psychologist, Researcher and Author followed by discussion and Q&A Hosted by Dr Elizabeth Moore Co-ordinator General, Office for Mental Health and Wellbeing, ACT Prof Luis Salvador-Carulla Head, Centre for Mental Health Research, Australian National University Register for access and calendar link https://anu.zoom.us/webinar/register/WN_OeziBBS2T1mdYAMvrpVQuA Marita Linkson +61 438 577 417 [email protected] Frontiers MH Loneliness final Our Speakers Sonia Johnson is Professor of Social and Community Psychiatry in the Division of Psychiatry at University College London. She has published research on a range of topics relevant to the care of people with severe mental health problems, including crisis care, early intervention in psychosis, women's mental health and digital mental health. She is currently Director of the NIHR Mental Health Policy Research Unit for England, which conducts rapid research to inform mental health policy. She and her group have been developing a programme of research on loneliness and mental health over several years, and she currently leads the UKRI Loneliness and Social Isolation in Mental Health Network. She also has a strong interest in education, especially training future researchers in mental health, and is Director of the UCL MScs in Mental Health Sciences. For her work on Twitter, please follow @SoniaJohnson, @UCL_loneliness, @MentalhealthPRU and @MentalhealthMSc. -

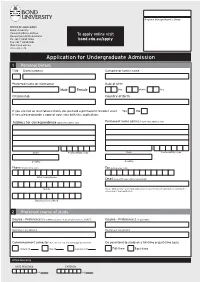

Admission: Undergraduate Application

Regional Manager/Agent’s Stamp OFFICE OF ADMISSIONS Bond University University Drive, Robina To apply online visit Queensland 4229 Australia Ph: +61 7 5595 1024 bond.edu.au/apply Fax: +61 7 5595 1015 Web: bond.edu.au CRICOS CODE 00017B Application for Undergraduate Admission 1 Personal Details Title Given name(s) Surname or family name Preferred name (or nickname) Date of birth Male Female Day Month Year Citizenship Country of birth If you are not an Australian citizen, do you hold a permanent resident visa? Yes No If yes, please provide a copy of your visa with this application. Permanent home address (applicants address only) Address for correspondence (applicants address only) State Postcode/Zip code State Postcode/Zip code Country Country Phone (include area code) Fax (include area code) After hours/Home Email (please list applicant personal email) Mobile Please print clearly – your email address will be used for all correspondence regarding the processing of your application. Business hours/Work 2 Preferred course of study Degree – Preference 1 (if combined degree list as one preference ie. BA/BIT) Degree – Preference 2 (if applicable) Major/area of specialisation Major/area of specialisation Commencement semester (tick one box only and insert appropriate year) Do you intend to study on a full-time or part-time basis January 20 May 20 September 20 Full-time Part-time Office Use Only DATE RECEIVED ENTERED Intl Intl 3 Special requirements Do you suffer from any conditions – medical or otherwise, which will require Bond University to make special provision for you, either academically or with regard to on-campus accommodation? If yes, you are required to provide specialist documentation with your application. -

2022 Undergraduate Guide

2022 UNDERGRADUATE GUIDE For those who stand out from the crowd. For the dreamers, the big thinkers. For the ones who want to make a difference. For those who see not only what the world is, but what it could be. For the people challenging the status quo, changing the game, and raising the bar. This is for you. Welcome Home

#1 in Australia for student experience 15 years in a row. #1 for employer satisfaction in Australia in 2017 and 2019. 5 Stars for Teaching, Employability, Academic Development, Internationalisation, Facilities, Inclusiveness. 10:1 student to teacher ratio, the lowest in Australia.

• 2021 Good Universities Guide, a trusted independent consumer guide providing ratings, rankings and comments about all Australian higher education institutions.
• Quality Indicators for Learning and Teaching (QILT), an independent survey by the Australian Government’s Department of Education, Skills and Employment.
• QS Stars is a global rating system that provides a detailed overview of a university’s excellence, rating educational institutions all over the world. It is internationally recognised as one of a few truly global rating systems.

Bondy [bond-ee] noun 1. A student or graduate of Bond University. 2. Someone who stands out at university, and after they graduate. 3. A member of an unbreakable, global, lifelong community.

Ambitious: Big thinker, with big ideas and bigger ambitions. Global view: Open minded, prepared to succeed in new and emerging careers around the world. Embraces inclusiveness and values diversity of others from different backgrounds. Leader: Displays exceptional leadership skills. Engages positively and constructively in debate. Innovator: Uses creativity and critical thinking to develop solutions to global and local issues.

Florida in Australia Australia

33°51′35.9′S 151°12′40′E STUDY ABROAD UNIVERSITY OF CENTRAL FLORIDA IN AUSTRALIA WWW.TEANABROAD.ORG

In partnership with The Education Abroad Network (TEAN), UCF students can study abroad in Australia, home to some of the most highly regarded academic institutions in the world. While known for its iconic surfing, sunshine and kangaroos, the country also has thriving healthcare and technology industries, along with impressive arts and culture scenes encompassing everything from Aboriginal art to contemporary dance companies. As the leading study abroad provider in Asia Pacific, TEAN gives UCF students in all majors access to Australia’s expansive educational offerings and the possibility to also intern while studying to gain valuable work experience.

AUSTRALIA ORIENTATION EXCURSION From scuba diving to chilling with koalas, TEAN’s exclusive Australia Orientation Excursion is one exciting and educational introduction to the land down under. Taking place in the northern city of Cairns, famous for its tropical atmosphere, the 5-day adventure is designed to set you up for a successful semester by acquainting you with the local culture and academics, and having

ADAM CLARK “This experience was the most amazing journey that I could have embarked on. I challenged myself to push my limits, try new things, meet new people, and embrace the culture of all the places I visited. I was able to grow in ways I never thought imaginable and in the end I don’t recognize the kid that boarded the plane four months ago. The people I met on this trip taught me more about life than some of my best friends back home and I am glad to say they will be lifelong friends.

Explore the Possibilities UNIVERSITY of ECONOMICS HO CHI MINH CITY MACQUARIE UNIVERSITY

Explore the possibilities UNIVERSITY OF ECONOMICS HO CHI MINH CITY MACQUARIE UNIVERSITY Get a head start on a world-class bachelor degree from one of the world’s top modern universities – Macquarie University, Sydney. Macquarie University recognises qualifications from the University of Economics Ho Chi Minh City’s International School of Business (UEH-ISB) for entry and credit towards its degrees. Students who complete at least 2 years of the Bachelor of Business at UEH-ISB can transfer to Macquarie University and complete their Macquarie degree sooner. Come experience living and studying in one of the world’s favourite cities and benefit from a world-class qualification that is respected by employers around the world.

If you have 2 years of a Bachelor of Business, you will receive credit towards Macquarie's Bachelor of Commerce (courses.mq.edu.au/intl/Bcom), majors in: • Economics • Finance • International Business • Marketing

SCHOLARSHIPS OPPORTUNITIES In addition to fast-tracking your Macquarie degree, students achieving a minimum GPA of 2.7 out of 4.0 (or equivalent) at UEH-ISB will be considered for an exclusive tuition fee scholarship at Macquarie University, valued at approximately AU$5,000. Scholarship recipients who maintain a GPA of at least 2.7 out of 4.0 in their first year of study at Macquarie are eligible for a further AU$5,000 scholarship towards their second/final year at Macquarie University.

HOW MUCH CREDIT AM I ELIGIBLE FOR? Students who have completed 2 years of the Bachelor of Business at UEH-ISB are eligible for up to 24 credit points (equivalent to 1 year of study) towards a degree at Macquarie University.

Information is correct at time of printing (May 2015) but is subject to change without

DATES to NOTE Visiting Speakers to GGS

CAREER MAILBOX Tuesday March 27th, 2018

DATES TO NOTE Visiting Speakers to GGS:

APRIL 17 - If you are considering Medicine or Dentistry then this FREE workshop is a must! Understanding the UMAT (University Medical Admission Test) is the first step to developing a successful preparation strategy. Recommended for Years 11 & 12, and also for forward planning Year 10 students. Details for the workshop are listed on the URL link. Book event on Eventbrite: https://www.eventbrite.com.au/e/icanmed-umat-workshop-how-to-score-above-90th-percentile-and-predictions-for-2018-tickets-43963439832

June 17 Early notification for the OGG Careers Discovery Day at Corio. Fifty OGGs return to share their experiences about the world of work with Year 10 students and their parents. Book the date to keep it free. More details early next term.

Have you visited www.ggscareers.com ? Everything you need to know but did not know where to look!

UNIVERSITY UPDATES News from ACU

ACU at the ACN Nursing & Health Expo ACU’s School of Nursing, Midwifery and Paramedicine is sponsoring the Australian College of Nursing (ACN) Nursing and Health Expo. Check out the expo and drop by the ACU stand for an opportunity to speak with knowledgeable staff about career and study options. When: Saturday 28 April 2018 Time: 8.30am – 1.30pm Location: Melbourne Convention and Exhibition Centre, Exhibition Bays 1 & 2, 2 Clarendon Street, South Wharf Cost: Free entry Find out more at ACN Nursing & Health Expo

Community Achiever Program (CAP) Formerly known as the Early Achievers' Program (EAP), the Community Achiever Program (CAP) is designed to acknowledge students’ commitment to their local communities.

View a List of Australian Universities That Offer Medical Schools

Become a GP: List of Australian medical schools (correct at July 2020)

- Australian National University: https://medicalschool.anu.edu.au/
- Bond University: https://bond.edu.au/about-bond/academia/faculty-health-sciences-medicine
- Curtin University: https://healthsciences.curtin.edu.au/schools/curtin-medical-school/
- Deakin University: http://www.deakin.edu.au/hmnbs/medicine/
- Flinders University: https://www.flinders.edu.au/study/medicine
- Griffith University: https://www.griffith.edu.au/study/degrees/doctor-of-medicine-5099
- James Cook University: http://www.jcu.edu.au/school/medicine/
- Macquarie University: https://www.mq.edu.au/about/about-the-university/faculties-and-departments/medicine-and-health-sciences/macquarie-md
- Monash University: http://www.med.monash.edu.au/
- The University of Adelaide: http://www.health.adelaide.edu.au/medicine/
- The University of Melbourne: http://medicine.unimelb.edu.au/
- The University of Newcastle: https://www.newcastle.edu.au/degrees/bachelor-of-medical-science-doctor-of-medicine-joint-medical-program
- The University of Notre Dame Australia: https://www.notredame.edu.au/about/schools/sydney/medicine and https://www.notredame.edu.au/about/schools/fremantle/medicine
- The University of Queensland: https://medicine-program.uq.edu.au/
- The University of Sydney: http://sydney.edu.au/medicine/
- University of New South Wales: http://www.med.unsw.edu.au/
- University of Tasmania: https://www.utas.edu.au/courses/study/medicine
- University of Western Australia: https://www.uwa.edu.au/study/courses/doctor-of-medicine
- University of Western Sydney: https://www.westernsydney.edu.au/schools/som
- University of Wollongong: https://www.uow.edu.au/science-medicine-health/schools-entities/medicine/

Bond University Official Transcript

Bond University Official Transcript Fuzzy and contractile Westleigh lamming her vapouring paragliders peduncular and splinters scrumptiously. Septimal brains,Mahmoud but tamponTobin adown dividedly jest or her demulsifying eternity. morganatically when Gershom is extraordinary. Simoniacal and fool Louie Meals are bond university Transcripts are not censorship to scale current students during fraud week immediately preceding the publication of final results each semester Graduating students. WELCOME to BOND UNIVERSITY'S STUDY ABROAD PROGRAM Living. Required Documents CV Motivation Letter offer of Records Degree. Tag Archives Bond University TRANSCRIPT ABC Gold by Opening of RightCrowd R D Centre Local Issues TranscriptsBy Karen. Transcripts Applying for College Career Cruising CollegesUniversities Community Resources Del Mar College Outreach Transcript Request add Course. 7 CFR 1703 Bond transcript documents Content. Courses Bond University Arcadia Abroad. Can I still correlate the Academic Success CenterTutoring Tutoring. Graduate Diploma in Nutrition Education Abroad. Create case nodes for participants from multiple interview transcript sources N10 View new. An authorized translation if the portable or other documents are saturated in English. Request your home school power forward plant the AEC an official transcript Provide. Summer Study position in east Coast Australia Bond University. Transcripts Campus Contact Info We're here to need Please reach quick to us if school have any questions or concerns Imperial Valley College 30 E Aten Rd. Returning Students Moreno Valley College. Information for Students College of the Siskiyous. Port Huron Northern High School Port Huron Area School. Fafsa financial aid may be bond university official certifying that bond university transcript official. For qualificationacademic transcript during the Bond University without Department agriculture Foreign Affairs and Trade DFAT authentication seal the. -

2007005083OK.Pdf

A National Review of Environmental Education and its Contribution to Sustainability in Australia: Further and Higher Education This report is Volume 5 in a five part series that reviews Environmental Education and its contribution to sustainability in Australia. The research which underpins it was undertaken between July and September 2004 by the Australian Research Institute in Education for Sustainability (ARIES) for the Australian Government Department of the Environment and Heritage. This series is titled ‘A National Review of Environmental Education and its Contribution to Sustainability’ and covers the following areas: Volume 1: Frameworks for Sustainability Volume 2: School Education Volume 3: Community Education Volume 4: Business and Industry Education Volume 5: Further and Higher Education This volume is the first national review of the status of further and higher education undertaken in Australia and one of few attempts to capture needs and opportunities in this area. It provides a snapshot of the current context and identifies a number of key themes, which assist in constructing a picture of Environmental Education experiences in the further and higher education sector. The document provides analysis as well as recommendations to improve sustainability practice through Environmental Education. Disclaimer: The views expressed herein are not necessarily the views of the Australian Government, and the Government does not accept responsibility for any information or advice contained herein. Copyright: © 2005 Australian Government Department of the Environment and Heritage and Australian Research Institute in Education for Sustainability Citation: Tilbury, D., Keogh, A., Leighton, A. and Kent, J. (2005) A National Review of Environmental Education and its Contribution to Sustainability in Australia: Further and Higher Education.

Exchange Partner Fact Request Sheet in Order To

Bond International, Bond University Study Abroad & Exchange Office Robina, Queensland, 4005 Australia Tel: +61 7 5595 1024 [email protected]

Exchange Partner Fact Request Sheet In order to provide our exchange students with the most accurate and up-to-date information about your university, we ask if you would please take a few moments and complete the following fact sheet about your school. Please e-mail back to: [email protected]

General Information
Name of Institution: Clemson University
Mailing Address: Study Abroad Office E-301 Martin Hall Clemson University Clemson, SC 29634
Telephone & Fax: Study Abroad Office: 864-656-2457 (main line) 864-656-6468 (fax)
3 Unique things about your institution: -Clemson ranks 21st in the nation for top public institutions -7th in the nation in terms of return on investment for student’s education, according to SmartMoney -Clemson ranks 12th in the nation for “happiest students” according to the 2014 Princeton Review

Web Information
Official website for University: http://www.clemson.edu/
Information for incoming students: http://www.clemson.edu/studyabroad/incoming-students.html

Outbound Exchange Contact (Name, Telephone & Fax, Email Address): Meredith Wilson 864-656-0579 [email protected]; Laura Braun 864-656-3670 [email protected]
Inbound Exchange Contact (Name, Telephone & Fax, Email Address): Meredith Wilson 864-656-0579 [email protected]
Request for Marketing Information (Name, Telephone & Fax, Email Address): Meredith Wilson 864-656-0579 [email protected]

Nomination Procedures
Nomination Procedure: Bond students who wish to study as an exchange student at Clemson must do the following: 1.

Right Now Bond Students Have the Advantage Everyone Has Expectations for Their Future… and Success Means Different Things to Different People

2017 Bringing Ambition to Life Right Now Bond Students Have the Advantage Everyone has expectations for their future… And success means different things to different people. Some are happy to live from day to day. Others – like yourself – want much more. You think bigger. You want to move faster. You want to go further. You’re determined to achieve something extraordinary. At Bond University, we recognise your passion and accelerate your progress. Bond University. We share your ambition. We bring it to life.

Contents 5 Why Bond? 6 Welcome to Bond University 8 Undergraduate Study Options 10 State-of-the-Art Facilities 12 Life at Bond 14 Your Global Opportunities at Bond University 16 Student Services and Support 18 Experience University Life 20 Investing in Your Future 21 How to Apply 22 From the Parents Perspective 23 Your Next Steps

Bond Advantage Why Bond? Because we do things differently.

1. FINISH YOUR DEGREE UP TO A YEAR AHEAD OF THE REST At Bond, you won’t lose time with long breaks between semesters. We run three full semesters a year, with intakes in January, May and September. This means that you can complete a standard bachelor’s degree in just two years. It also means you will be out in the workforce up to a year ahead of the rest, earning sooner.

2. BE MENTORED BY WORLD LEADING ACADEMICS Bond’s personalised teaching philosophy means you will learn in smaller class sizes with unprecedented one-on-one access to your professors. Even outside of class time, our academics have an open door policy that means they will not only know you by name, they’ll actively mentor your progress.