Ontopop Or How to Annotate Documents and Populate Ontologies from Texts

Recommended publications

The Art of Adaptation

Thesis for the M.A. degree, Literature, Culture and Media. The Art of Adaptation: The move from page to stage/screen, as seen through three films. Margrét Ann Thors, 301287-3139. Supervisor: Guðrún Björk Guðsteinsdóttir. January 2020.

Big TAKK to ÓBS, “Óskar Helps,” for being IMDB and the (very) best.

Abstract: This paper looks at the art of adaptation, specifically the move from page to screen/stage, through the lens of three films from the early aughts: Spike Jonze’s Adaptation, Alejandro González Iñárritu’s Birdman, or The Unexpected Virtue of Ignorance, and Joel and Ethan Coen’s O Brother, Where Art Thou? The analysis identifies three main adaptation-related themes woven throughout each of these films, namely, duality/the double, artistic madness/genius, and meta-commentary on the art of adaptation. Ultimately, the paper seeks to argue that contrary to common opinion, adaptations need not be viewed as derivatives of or secondary to their source text; rather, just as in nature species shift, change, and evolve over time to better suit their environment, so too do (and should) narratives change to suit new media, cultural mores, and modes of storytelling. The analysis begins with a theoretical framing that draws on T.S. Eliot’s, Linda Hutcheon’s, Kamilla Elliott’s, and Julie Sanders’s thoughts about the art of adaptation. The framing then extends to notions of duality/the double and artistic madness/genius, both of which feature prominently in the films discussed herein. Finally, the framing concludes with a discussion of postmodernism, and the basis on which these films can be situated within the postmodern artistic landscape.

Sofia Coppola and the Significance of an Appealing Aesthetic

SOFIA COPPOLA AND THE SIGNIFICANCE OF AN APPEALING AESTHETIC by LEILA OZERAN. A THESIS presented to the Department of Cinema Studies and the Robert D. Clark Honors College in partial fulfillment of the requirements for the degree of Bachelor of Arts, June 2016.

An Abstract of the Thesis of Leila Ozeran for the degree of Bachelor of Arts in the Department of Cinema Studies to be taken June 2016. Title: Sofia Coppola and the Significance of an Appealing Aesthetic. Approved: Professor Priscilla Pena Ovalle.

This thesis grew out of an interest in the films of female directors, producers, and writers and the substantially lower opportunities for such filmmakers in Hollywood and Independent film. The particular look and atmosphere which Sofia Coppola is able to compose in her five films is a point of interest and a viable course of study. This project uses her fifth and latest film, Bling Ring (2013), to showcase Coppola's merits as a filmmaker at the intersection of box office and critical appeal. I first describe the current filmmaking landscape in terms of gender, using studies by Dr. Martha Lauzen from San Diego State University and the Geena Davis Institute on Gender in Media to illustrate the statistical lack of a female presence in creative film roles and why it is important to have women represented in above-the-line positions. Then I use close readings of Bling Ring to analyze formal aspects of Sofia Coppola's filmmaking style, namely her use of distinct color palettes, provocative soundtracks, car shots, and tableaus. Third and lastly, I describe the sociocultural aspects of Coppola's interpretation of the "Bling Ring." Through the way the film explores the relationships between characters, portrays parents as absent or misguided, and, through film form, shows the pervasiveness of celebrity culture, Sofia Coppola has given Bling Ring a central message, substance, and meaning: glamorous contemporary celebrity culture can have dangerous consequences on unchecked youth.

The 21st Hamptons International Film Festival Announces Southampton

THE 21ST HAMPTONS INTERNATIONAL FILM FESTIVAL ANNOUNCES SOUTHAMPTON OPENING, SATURDAY’S CENTERPIECE FILM AUGUST: OSAGE COUNTY, SPOTLIGHT AND WORLD CINEMA FILMS INCLUDING LABOR DAY, HER, THE PAST AND MANDELA: LONG WALK TO FREEDOM

WILL FORTE TO JOIN BRUCE DERN IN “A CONVERSATION WITH…” MODERATED BY NEW YORK FILM CRITICS CIRCLE CHAIRMAN JOSHUA ROTHKOPF

Among those expected to attend the Festival are: Anna Paquin, Bruce Dern, Ralph Fiennes, Renee Zellweger, Dakota Fanning, David Duchovny, Helena Bonham Carter, Edgar Wright, Kevin Connolly, Will Forte, Timothy Hutton, Amy Ryan, Richard Curtis, Adepero Oduye, Brie Larson, Dane DeHaan, David Oyelowo, Jonathan Franzen, Paul Dano, Ralph Macchio, Scott Haze, Spike Jonze and Joe Wright.

East Hampton, NY (September 24, 2013) - The Hamptons International Film Festival (HIFF) is thrilled to announce that Director Richard Curtis' ABOUT TIME will be the Southampton opener on Friday, October 11th and that Saturday's Centerpiece Film is AUGUST: OSAGE COUNTY directed by John Wells. As previously announced, KILL YOUR DARLINGS will open the Festival on October 10th; 12 YEARS A SLAVE will close the Festival; and NEBRASKA is the Sunday Centerpiece. The Spotlight films include: BREATHE IN, FREE RIDE, HER, LABOR DAY, LOUDER THAN WORDS, MANDELA: LONG WALK TO FREEDOM, THE PAST and CAPITAL. This year the festival will pay special tribute to Oscar Award winning director Costa-Gavras before the screening of his latest film CAPITAL. The Festival is proud to have the World Premiere of AMERICAN MASTERS – MARVIN HAMLISCH: WHAT HE DID FOR LOVE as well as the U.S. Premiere of Oscar Winner Alex Gibney’s latest doc THE ARMSTRONG LIE about Lance Armstrong.

Weightless in Hollywood

Weightless in Hollywood. The Hamburg actor Ulrich Tukur stars in “Solaris” alongside superstar George Clooney.

German actors do not have much to say in Hollywood, often only two words: “Heil Hitler!” Then the brief appearance in Nazi costume is already over, and the wait for the next role begins. There are a great many actors in Hollywood who can say “Heil Hitler!” on command. The eternal border-crosser Ulrich Tukur (actor, musician and, for now, still artistic director of the Hamburger Kammerspiele) has also already played quite a few Nazis and just as many resistance fighters.

Photo caption: Tukur in “Solaris”: “You have a very talented dog.”

FILM: Premieres

From Feb. 27. About Schmidt. Directed by Alexander Payne. With Jack Nicholson, Hope Davis, Dermot Mulroney. An increasingly depressing tragicomedy about Jack Nicholson's ability to transform himself into the inconspicuous retiree Warren Schmidt while remaining Jack Nicholson at every moment, which is why Alexander Payne's Oscar-nominated tragedy about a man who sets out on a journey of self-discovery after his wife's death would more aptly be called “About Nicholson.”

Chicago. Directed by Rob Marshall. With Renée Zellweger, Catherine Zeta-Jones, Richard Gere, John C. Reilly. Chicago 1929, the world as a stage: Rob Marshall's virtuosically staged, at times rousing musical is enjoyable not although, but because, the story is predictable. Along the way the film makes clear what a load of hot air “Moulin Rouge” was. Nominated for 13 Oscars.

From March 6. Frida. Directed by Julie Taymor. With Salma Hayek, Alfred Molina, Geoffrey Rush. The life and death of Frida Kahlo, Mexico's great, passionate painter, produced, played and interpreted by Salma Hayek, Mexico's

BABEL and the GLOBAL HOLLYWOOD GAZE Deborah Shaw

BABEL AND THE GLOBAL HOLLYWOOD GAZE. Deborah Shaw.

In popular imaginaries, “world cinema” and Hollywood commercial cinema appear to be two opposing forms of filmic production obeying diverse political and aesthetic laws. However, definitions of world cinema have been vague and often contradictory, while some leftist discourses have a simplistic take on the evils of reactionary Hollywood, seeing it in rather monolithic terms. In this article, I examine some of the ways in which the term “world cinema” has been used, and I explore the film Babel (2006), the last of the three films of the collaboration between the Mexicans Alejandro González Iñárritu and screenwriter Guillermo Arriaga. Babel sets out to be a new sort of film that attempts to create a “world cinema” gaze within a commercial Hollywood framework. I examine how it approaches this and ask whether the film succeeds in this attempt. I explore the tensions between progressive and conservative political agendas, and pay particular attention to the ways “other” cultures are seen in a film with “Third World” pretensions and U.S. money behind it. I frame my analysis around a key question: does the Iñárritu-led outfit successfully create a paradigmatic “transnational world cinema” text that de-centers U.S. hegemony, or is this a utopian project doomed to failure in a film funded predominantly by major U.S. studios? I examine the ways in which the film engages with the tourist gaze and ask whether the film replaces this gaze with a world cinema gaze or merely reproduces it in new ways.

SBIFF Special Events

www.sbiff.org #sbiff

Special Events

Opening Night Film: A Bump Along The Way. Wednesday, January 15, 8:00 PM, Arlington Theatre. Presented by UGG®. A female-led, feel-good comedy drama set in Derry, Northern Ireland, about a middle-aged woman whose unexpected pregnancy after a one-night stand acts as the catalyst for her to finally take control of her life.

American Riviera Award: Renée Zellweger. Thursday, January 16, 8:00 PM, Arlington Theatre. Sponsored by Bella Vista Designs. The American Riviera Award recognizes actors who have made a significant contribution to American Cinema.

Outstanding Performers of the Year Award: Scarlett Johansson & Adam Driver. Friday, January 17, 8:00 PM, Arlington Theatre. Presented by Belvedere Vodka. The Outstanding Performer of the Year Award is given to an artist who has delivered a standout performance in a leading role.

FREE Screening of THREE KINGS, followed by a Q&A with David O. Russell. Saturday, January 18, 2:00 PM, Lobero Theatre. FREE ADMISSION. In celebration of its 20th anniversary, SBIFF will present a free screening of THREE KINGS followed by a Q&A with David O.

Montecito Award: Lupita Nyong’o. Monday, January 20, 8:00 PM, Arlington Theatre. Presented by Manitou Fund. This year we recognize Lupita Nyong’o with the Montecito Award for her impressive career and most recent

Venue map legend: 1. Arlington Theatre; 2. Will Call and Volunteer HQ at SBIFF’s Education Center; 3. Fiesta Theatre; 4. Lobero Theatre & Festival Pavilion; 5. Metro Theatre; 6. Festival Hub & Press Office at Hotel Santa Barbara. Also marked: Public Parking Lot, Public Restrooms.

Using Pop Soundtrack As Narrative Counterpoint Or Complement Margaret Dudasik Honors College, Pace University

Pace University, DigitalCommons@Pace. Honors College Theses, Pforzheimer Honors College. 5-1-2013. Genre as Bait: Using Pop Soundtrack as Narrative Counterpoint or Complement. Margaret Dudasik, Honors College, Pace University.

Follow this and additional works at: http://digitalcommons.pace.edu/honorscollege_theses. Part of the Film and Media Studies Commons.

Recommended Citation: Dudasik, Margaret, "Genre as Bait: Using Pop Soundtrack as Narrative Counterpoint or Complement" (2013). Honors College Theses. Paper 127. http://digitalcommons.pace.edu/honorscollege_theses/127

This Thesis is brought to you for free and open access by the Pforzheimer Honors College at DigitalCommons@Pace. It has been accepted for inclusion in Honors College Theses by an authorized administrator of DigitalCommons@Pace. For more information, please contact [email protected].

Genre as Bait: Using Pop Soundtrack as Narrative Counterpoint or Complement. By: Margaret Dudasik. May 15, 2013. BA Film & Screen Studies / BFA Musical Theatre. Dr. Ruth Johnston, Film & Screen Studies, Dyson College of Arts and Sciences.

Abstract: There is much argument against using pre-existing music in film, Ian Garwood noting three potential problems with the pop song: obtrusiveness, cultural relevance, and distance from the narrative (103-106). It is believed that lyrics and cultural connotations can distract from the action, but it is my belief that these elements only aid narrative. By examining the cinematic functions of the soundtracks of O Brother, Where Art Thou? (2000) and Marie Antoinette (2006), I will argue that using pre-existing music in film is actually more effective than a score composed specifically for a film. Film theorist Claudia Gorbman notes that film scores have “temporal, spatial, dramatic, structural, denotative, [and] connotative” abilities (22), and it is my belief that pop music is just as economical in forming character, conveying setting, and furthering plot.

The Pleasures of Watching an "Off-beat" Film: the Case of Being John Malkovich. Martin Barker, University of Aberystwyth, UK

The Pleasures of Watching an "Off-beat" Film: the Case of Being John Malkovich. Martin Barker, University of Aberystwyth, UK

It's a real thinking film. And you sort of ponder on a lot of things, you think ooh I wonder if that is possible, and what would you do -- 'cos it starts off as such a peculiar film with that 7½th floor and you think this is going to be really funny all the way through and it's not, it's extremely dark. And an awful lot of undercurrents to it, and quite sinister and … it's actually quite depressing if you stop and think about it. [Emma, Interview 15]

M: Last question of all. Try to put into words the kind of pleasure the film gave you overall, both at the time you were watching and now when you sit and think about it.
J: Erm. I felt free somehow and very "oof"! [sound of sharp intake of breath] and um, it felt like you know those wheels, you know in a funfair, something like that when I left, very "woah-oah" [wobbling and physical instability]. [Javita, Interview 9]

Hollywood in the 1990s was a complicated place, and source of films. As well as the tent-pole summer and Christmas blockbusters, and the array of genre or mixed-genre films, through its finance houses and distribution channels also came an important sequence of "independent" films -- films often characterised by twisted narratives of various kinds. Building in different ways on the achievements of Tarantino's Reservoir Dogs (1992) and Pulp Fiction (1994), all the following (although not all might count as "independents") were significant success stories: The Usual Suspects (1995), The Sixth Sense (1999), American Beauty (1999), Magnolia (1999), The Blair Witch Project (1999), Memento (2000), Vanilla Sky (2001), Adaptation (2002), and Eternal Sunshine of the Spotless Mind (2004).

The Daily Gamecock, MONDAY, OCTOBER 19, 2009

University of South Carolina, Scholar Commons. October 2009. 10-19-2009. The Daily Gamecock, MONDAY, OCTOBER 19, 2009. University of South Carolina, Office of Student Media.

Follow this and additional works at: https://scholarcommons.sc.edu/gamecock_2009_oct

Recommended Citation: University of South Carolina, Office of Student Media, "The Daily Gamecock, MONDAY, OCTOBER 19, 2009" (2009). October. 16. https://scholarcommons.sc.edu/gamecock_2009_oct/16

This Newspaper is brought to you by the 2009 at Scholar Commons. It has been accepted for inclusion in October by an authorized administrator of Scholar Commons. For more information, please contact [email protected].

dailygamecock.com. UNIVERSITY OF SOUTH CAROLINA. MONDAY, OCTOBER 19, 2009. VOL. 103, NO. 37. SINCE 1908.

Weather: Monday 66°/41°, Tuesday 74°/44°, Wednesday 77°/50°.

DRESS SALES BENEFIT CHARITY. Local survivors, brides-to-be leave ‘cancer at the altar’. Kristyn Winch, THE DAILY GAMECOCK.

For many young women, the idea of getting married has been a fantasy since they were children. Over the weekend, brides-to-be had the opportunity to give back to women like themselves while shopping for the

in 2006. Both women are survivors. On the store’s Web site, the owners describe their business as “a unique boutique for today’s surviving woman.” The name comes from Greek mythology where “Alala” is defined as “the female spirit of the war cry.” The owners have “declared war on traditional sales and service” in the evolving procedures of cancer treatment. First-year students Rebecca Mascaro and Erin Tingley both volunteered

Photo caption (Chad Simmons / THE DAILY GAMECOCK): USC women stand in line Friday evening for the T.A.K.E.

Shirley Henderson

www.hamiltonhodell.co.uk

Shirley Henderson

Talent Representation: Madeleine Dewhirst & Sian Smyth
Telephone: +44 (0) 20 7636 1221
Email: [email protected]
Address: Hamilton Hodell, 20 Golden Square, London, W1F 9JL, United Kingdom

Film

| Title | Role | Director | Production Company | Notes |
| --- | --- | --- | --- | --- |
| THE TROUBLE WITH JESSICA | Sarah | Matt Winn | Sarah Sullick | |
| THE SANDS OF VENUS | Rachel | Tony Grisoni | Potboiler Films | |
| GREED | Margaret | Michael Winterbottom | Revolution Films | |
| STAR WARS: EPISODE IX | Babu Frik | J.J. Abrams | Lucasfilm/Walt Disney Pictures | |
| STAN AND OLLIE | Lucille Hardy | Jon S. Baird | BBC/Fable Pictures | Nominated for the Best Actress (Film) Award, BAFTA Scotland Awards, 2019 |
| T2: TRAINSPOTTING | Gail | Danny Boyle | TriStar Pictures/Film4 | |
| OKJA | Jennifer | Bong Joon-ho | Plan B Entertainment | |
| NEVER STEADY, NEVER STILL | Judy Findlay | Kathleen Hepburn | Experimental Forest Films | |
| LOVE SONG: WOLF ALICE | Joe's Mum | Michael Winterbottom | Lorton Entertainment | |
| BRIDGET JONES'S BABY | Jude | Sharon Maguire | Universal Pictures/Working Title Films | |
| URBAN HYMN | Kate Linton | Michael Caton-Jones | Eclipse Films | |
| THE TALE OF TALES | Imma | Matteo Garrone | Archimede/Recorded Picture Company | |
| THE CARAVAN | Elaine | Simon Powell | Uprising Features | |
| SET FIRE TO THE STARS | Shirley | Andy Goddard | Mad As Birds/Masnomis | |
| FILTH | Bunty | Jon S. Baird | Steel Mill Pictures | Nominated for the Best Supporting Actress Award, British Independent Film Awards, 2013 |
| THE LOOK OF LOVE | Rusty Humphries | Michael Winterbottom | Pink Pussycat Films | |
| THÉRÈSE | Suzanne | Charlie Stratton | Liddell Entertainment | |
| ANNA KARENINA | Wife (Opera House) | Joe Wright | Working Title Films | |
| EVERYDAY | | | | |

Screenwriting: the Feature Film

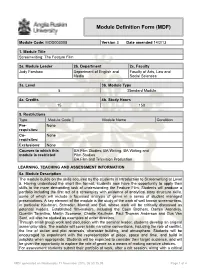

Module Definition Form (MDF)

Module Code: MOD003008. Version 3. Date amended: 14/2/13.

1. Module Title: Screenwriting: The Feature Film
2a. Module Leader: Judy Forshaw
2b. Department: Department of English and Media
2c. Faculty: Faculty of Arts, Law and Social Sciences
3a. Level: 5
3b. Module Type: Standard Module
4a. Credits: 15
4b. Study Hours: 150
5. Restrictions: Pre-requisites: None. Co-requisites: None. Exclusions: None. Courses to which this module is restricted: BA Film Studies; BA Writing; BA Writing and Film Studies; BA Film and Television Production.

LEARNING, TEACHING AND ASSESSMENT INFORMATION

6a. Module Description: The module builds on the skills acquired by the students in Introduction to Screenwriting at Level 5. Having understood the short film format, students now have the opportunity to apply their skills to the more demanding task of understanding the Feature Film. Students will produce a portfolio including the first act of a screenplay with evidence of analytical story structure skills, some of which will include a focussed analysis of genre in a series of student managed presentations. A key element of the module is the study of the work of well known screenwriters, in particular Kaufman, Schrader, Mamet and Ball, whose work will be critically discussed as potential models. Established film-makers, including the Coen Brothers, Darren Aronofsky, Quentin Tarantino, Martin Scorsese, Charlie Kaufman, Paul Thomas Anderson and Gus Van Sant, will also be studied as examples of writer directors. Through small group work and discussion with the seminar leader, students develop an original screenplay idea.

Projection and Control in the Works of Charlie Kaufman

In this careful study of the works of Charlie Kaufman, Larson sets up the problem of the mind's relationship to reality, then explores it through a series of related concepts: how we project ourselves into reality, how we try to control it, and how this limits our ability to connect to others. (Instructor: Victoria Olsen)

PLAYING WITH PEOPLE: PROJECTION AND CONTROL IN THE WORKS OF CHARLIE KAUFMAN. Audrey Larson.

John Malkovich is falling down a dark, grimy tunnel. With a crash, he finds himself in a restaurant, the camera panning up from a plate set with a perfectly starched napkin to a pair of large breasts in a red dress and a delicate wrist hanging in the air like a question mark. The camera continues panning up to the face that belongs to this body, the face of John Malkovich. Large-breasted Malkovich looks sensually at the camera and whispers, “Malkovich, Malkovich,” in a sing-song whisper. A waiter pops in, also with the face of Malkovich, and asks, “Malkovich? Malkovich, Malkovich?” The real Malkovich looks down at the menu: every item is “Malkovich.” He opens his mouth, but only “Malkovich” comes out. A look of horror dawns on his face as the camera jerks around the restaurant, focusing on different groups of people, all with Malkovich’s face. He gets up and starts to run, but stops in his tracks at the sight of a jazz singer with his face, lounging across a piano and tossing a high-heeled leg up in the air. A whimper escapes the real Malkovich.