Massively Parallel Cartesian Discrete Ordinates Method for Neutron Transport Simulation Salli Moustafa

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

ICANS XXI Dawn of High Power Neutron Sources and Science Applications

Book of Abstracts ICANS XXI Dawn of high power neutron sources and science applications 29 Sep - 3 Oct 2014, JAPAN Ibaraki Prefectural Culture Center Update : 12 Oct. 2014 Best photography in 7th Oarai Town Photo Contest. WELCOME TO ICANS XXI ICANS (International Collaboration on Advanced Neutron Sources) is a network for scientists who are involved in developing pulsed neutron sources and accelerator based spallation neutron sources. Since 1st ICANS meetings was held in 1977 at Argonne National Laboratory in the day of dawn of spallation neutron technique, ICANS has been continuously held already 20 times somewhere in the world. Now we are extremely happy to announce that the ICANS, the 21st meeting, will be held at Mito hosted by J-PARC this autumn. We have a large number of topics to be discussed, there are twelve topics, such as futuristic idea of neutron source, rapid progress in facilities, integration issues in target-moderator-development, etc. The details can be found in the agenda. The meeting has a two layered structure, one is plenary session and another is workshop. Two of them are complementary and tightly cooperate each other. In the meeting we would like to enhance "workshop style", which is an original and traditional way of ICANS. Actually 2/3 of topics will be discussed in the workshop sessions. It also will be essentially organized/ lead by the workshop chairs. Plenary session shows overall issues in a relevant workshop, whose details should be talked/discussed in the workshop. The venue for the meeting is Mito city, where the 2nd Shogun Family lived for a long period of time during Edo era from 17th to 19th century, when the Tokugawa shogunate ruled the country. -

![Φ()Edeat∆≡ Nedeavt () [ ∆ ] (2)](https://docslib.b-cdn.net/cover/7552/edeat-nedeavt-2-327552.webp)

Φ()Edeat∆≡ Nedeavt () [ ∆ ] (2)

22.54 Neutron Interactions and Applications (Spring 2002) Lecture Notes on Neutronics of Multiplying Media This is a set of notes for the four lectures on neutron interactions in a reactor system in which a neutron population can change as a result of capture and fission reactions, in addition to scattering. We will discuss the concept of criticality as a way of describing any self-sustaining chain reaction which, in principle, can be just as well chemical in nature, and focus on the fundamental understanding of neutron diffusion and slowing down, and the interplay between materials constants (expressed through cross sections) and geometric factors (system size and shape) in determining criticality. The four lectures are: Lec 14. Neutronics of Multiplying Media – criticality, moderation, leakage Lec 15. Elements of Neutron Diffusion Theory Lec 16. Neutron Slowing Down Lec.17. The Two-Group Two-Region Reactor As a start the following references are recommended for supplemental reading: J. Lemarsh, Introduction to Nuclear Reactor Theory K. M. Case, F. deHoffmann, G. Placzek, Introduction to The Theory of Neutron Diffusion, vol. 1, LASL, 1953. I. Some Basic Notions of Neutron Multiplication Consider a homogeneous beam of neutrons having a distribution of energies. Define distribution function n(E) such that n(E)dE = expected no, of neutrons with energies in dE about E per cm3 (1) Suppose we ask how many neutrons in a certain energy range will cross an area during a time interval ∆t. Call this number φ()EdEAt∆≡ nEdEAvt () [ ∆ ] (2) since all the neutrons in a certain volume Av ∆t will cross during ∆t. -

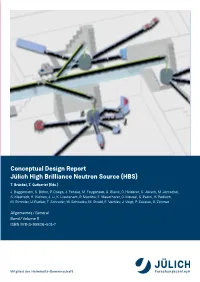

Conceptual Design Report Jülich High

General Allgemeines ual Design Report ual Design Report Concept Jülich High Brilliance Neutron Source Source Jülich High Brilliance Neutron 8 Conceptual Design Report Jülich High Brilliance Neutron Source (HBS) T. Brückel, T. Gutberlet (Eds.) J. Baggemann, S. Böhm, P. Doege, J. Fenske, M. Feygenson, A. Glavic, O. Holderer, S. Jaksch, M. Jentschel, S. Kleefisch, H. Kleines, J. Li, K. Lieutenant,P . Mastinu, E. Mauerhofer, O. Meusel, S. Pasini, H. Podlech, M. Rimmler, U. Rücker, T. Schrader, W. Schweika, M. Strobl, E. Vezhlev, J. Voigt, P. Zakalek, O. Zimmer Allgemeines / General Allgemeines / General Band / Volume 8 Band / Volume 8 ISBN 978-3-95806-501-7 ISBN 978-3-95806-501-7 T. Brückel, T. Gutberlet (Eds.) Gutberlet T. Brückel, T. Jülich High Brilliance Neutron Source (HBS) 1 100 mA proton ion source 2 70 MeV linear accelerator 5 3 Proton beam multiplexer system 5 4 Individual neutron target stations 4 5 Various instruments in the experimental halls 3 5 4 2 1 5 5 5 5 4 3 5 4 5 5 Schriften des Forschungszentrums Jülich Reihe Allgemeines / General Band / Volume 8 CONTENT I. Executive summary 7 II. Foreword 11 III. Rationale 13 1. Neutron provision 13 1.1 Reactor based fission neutron sources 14 1.2 Spallation neutron sources 15 1.3 Accelerator driven neutron sources 15 2. Neutron landscape 16 3. Baseline design 18 3.1 Comparison to existing sources 19 IV. Science case 21 1. Chemistry 24 2. Geoscience 25 3. Environment 26 4. Engineering 27 5. Information and quantum technologies 28 6. Nanotechnology 29 7. Energy technology 30 8. -

THE SPALLATION NEUTRON SOURCE (SNS) PROJECT Jose Alonso

THE SPALLATION NEUTRON SOURCE (SNS) PROJECT Jose Alonso The SNS is currently the US Department of Energy's activation and residual-radiation levels in the tunnels are largest accelerator construction project, and is being low enough to allow quick access and hands-on carried out by a partnership of five DOE National maintenance, and is driven by the very high reliability and Laboratories for building the world's most powerful availability specifications associated with the strong user- accelerator-based pulse spallation source. At its planned orientation of the SNS facility. Areas where unusable 2 MW operating level, it will produce neutron fluxes at beam is diverted (so-called "controlled" beam-loss least a factor of ten greater than Rutherford Appleton points), such as collimators, scrapers and dumps, must be Laboratory’s ISIS, currently the world’s leading spallation designed to collect these particles in a way that contribute source. The current design of the SNS, shown in Figure only minimally to background levels in the tunnels where 1, calls for a full-energy (1 GeV) linac delivering 1 ms access is required. Normal loss mechanisms must receive pulses to an Accumulator Ring which compresses these particular attention to meet this specification. This into 600 ns proton pulses that strike a liquid mercury implies very tight control over beam emittance, and target at a 60 Hz rate. Room-temperature and cryogenic emittance growth; designing for the largest-possible stay- moderators produce beams of slow neutrons suitable for clear apertures in linac, transport and ring structures; materials research with an initial suite of at least 10 understanding of space-charge and halo effects in linac and ring; ensuring highest-possible vacuum in areas neutron-scattering instruments. -

Nist Center for Neutron Research Accomplishments and Opportunities

RXC NATL INST. OF STAND & TECH WIST PUBLICATIONS A111QS 6=11773- m ON THE COVER Part of the 913-detector array of the new Disk Chopper Spectrometer (DCS) that will be available to users in 2000. To view the detectors as found in the instrument, the picture should be rotated 90°. Information about the DCS and other instruments at NCNR is available at http://www.ncnr.nist.gov/. Photo: Mark Heifer NCNR 1999 NIST CENTER FOR NEUTRON RESEARCH ACCOMPLISHMENTS AND OPPORTUNITIES NIST Special Publication 944 J. Michael Rowe, Director Ronald L. Cappelletti, Editor Linda K. Clutter, Assistant Editor January 2000 U.S. DEPARTMENT OF COMMERCE William M. Daley, Secretary Technology Administration Dr. Cheryl L. Shavers, Under Secretary of Commerce for Technology National Institute of Standards and Technology Raymond G. Kammer, Director DISCLAIMER Certain commercial equipment, instruments, or materials are identified in this report to foster understanding. Such identification does not imply recommendation or endorsement by the National Institute of Standards and Technology, nor does it imply that the materials or equipment identified are necessarily the best available for the purpose. National Institute of Standards and Technology Special Publication 944 Natl. Inst. Stand. Technol. Spec. Publ. 944, 80 pages (January 2000) CODEN: NSPUE2 U.S. GOVERNMENT PRINTING OFFICE - WASHINGTON: 2000 For Sale by the Superintendent of Documents, U.S. Government Printing Office Washington, DC 20402-9325 CONTENTS Foreword iv Introduction to the NIST Center for Neutron Research -

1 a Numerical Solution of the Time-Dependent Neutron

A NUMERICAL SOLUTION OF THE TIME-DEPENDENT NEUTRON TRANSPORT EQUATION USING THE CHARACTERISTIC METHOD. APPLICATIONS TO ICF AND TO HYBRID FISSION-FUSION SYSTEMS Dalton Ellery Girão Barroso Military Institute of Engineering Department of Nuclear Engineering Emails: [email protected], [email protected] ABSTRACT In this work we present a solution of the one-dimensional spherical symmetric time- dependent neutron transport equation (written for a moving system in lagrangian coordinates) by using the characteristic method. One of the objectives is to overcome the negative flux problem that arises when the system is very opaque and the angular neutron flux can become negative when it is extrapolated in spatial meshes as, for example, in diamond scheme adopted in many codes. Although there are recipes to overcome this problem, it can completely degrade the numerical solution if repeated many times. In most common time discretization of the time-dependent neutron transport equation, there is a term, 1/vt, summed to total (or transport) cross sections that can increase enormously the opacity of the medium. This certainly gets worse when there is a combination of lower neutron velocity (in a moderated system) and a very small time-step a very common situation in ICF (inertial confinement fusion). The solution presented here can be easily coupled to radiation-hydrodynamics equations, but it is necessary an additional term to maintain neutron conservation in a moving system in lagrangian coordinates. Energy multigroup method and a former SN method to deal with the angular variable are used, with the assumption of isotropic scattering and the transport cross sections approximation. -

Module 6: Neutron Diffusion Dr. John H. Bickel

Fundamentals of Nuclear Engineering Module 6: Neutron Diffusion Dr. John H. Bickel 1 2 Objectives: 1. Understand how Neutron Diffusion explains reactor neutron flux distribution 2. Understand origin, limitations of Neutron Diffusion from: • Boltzmann Transport Equation, • Ficke’s Law 3. Solution of One-Group Neutron Diffusion Equation for: • Cubical, • Cylindrical geometries (via separation of variables technique) 4. Identify Eigenvalues of Neutron Diffusion Equation (Buckling, Diffusion Length) related to physical properties 5. Understand refinements from Multi-Group Diffusion Model applied to Reflectors, flux depression near strong neutron absorbers, and sources 3 Boltzmann’s Transport Equation • Originates from Statistical Mechanics • Used to understand kinetic theory of gasses • Full equation expressed as integral-differential equation • In simplest terms it is a conservation equation d r N(r,v,t)d 3r = S(r,t)d 3r − N(r,v,t)vΣ (r,v,t)d 3r − J (r,v,t) • nrdA ∫ ∫ ∫∫c dt V V V 4 Boltzmann’s Transport Equation • First simplification: Apply Divergence Theorem r r r ∫ J (r,v,t) • nrdA = ∫∇ • J (r,v,t)d 3r V • Remove volume integration: d r r N(r,v,t) = S(r,t) − N(r,v,t)vΣ (r,v,t) − ∇ • J (r,v,t) dt c • Divergence of Local Neutron Current is simplified by making “Diffusion Approximation” • Alternate solution approach is via Monte Carlo Method • Diffusion Approximation is based upon Fick’s Law 5 Diffusion Approximation Assumes: • Uniform relatively infinite medium thus: Σc(r) ~ Σc • No strong “point” neutron sources in medium • Scattering -

Analytical Benchmarks for Nuclear Engineering Applications

Data Bank ISBN 978-92-64-99056-2 NEA/DB/DOC(2008)1 Analytical Benchmarks for Nuclear Engineering Applications Case Studies in Neutron Transport Theory B.D. Ganapol Department of Aerospace and Mechanical Engineering University of Arizona with Forewords by Paul F. Zweifel Richard Sanchez and Norman J. McCormick OECD © 2008 NEA No. 6292 NUCLEAR ENERGY AGENCY ORGANISATION FOR ECONOMIC CO-OPERATION AND DEVELOPMENT ORGANISATION FOR ECONOMIC CO-OPERATION AND DEVELOPMENT The OECD is a unique forum where the governments of 30 democracies work together to address the economic, social and environmental challenges of globalisation. The OECD is also at the forefront of efforts to understand and to help governments respond to new developments and concerns, such as corporate governance, the information economy and the challenges of an ageing population. The Organisation provides a setting where governments can compare policy experiences, seek answers to common problems, identify good practice and work to co-ordinate domestic and international policies. The OECD member countries are: Australia, Austria, Belgium, Canada, the Czech Republic, Denmark, Finland, France, Germany, Greece, Hungary, Iceland, Ireland, Italy, Japan, Korea, Luxembourg, Mexico, the Netherlands, New Zealand, Norway, Poland, Portugal, the Slovak Republic, Spain, Sweden, Switzerland, Turkey, the United Kingdom and the United States. The Commission of the European Communities takes part in the work of the OECD. OECD Publishing disseminates widely the results of the Organisation’s statistics gathering and research on economic, social and environmental issues, as well as the conventions, guidelines and standards agreed by its members. * * * This work is published on the responsibility of the Secretary-General of the OECD. -

Accurate Simulation of Neutrons in Less Than One Minute Pt. 2: Sandman—GPU-Accelerated Adjoint Monte-Carlo Sampled Acceptance Diagrams

Article Accurate Simulation of Neutrons in Less Than One Minute Pt. 2: Sandman—GPU-Accelerated Adjoint Monte-Carlo Sampled Acceptance Diagrams Phillip Bentley European Spallation Source ERIC, Box 176, 221 00 Lund, Sweden; [email protected] or [email protected] Received: 20 May 2020; Accepted: 12 June 2020; Published: 16 June 2020 Abstract: A computational method in the modelling of neutron beams is described that blends neutron acceptance diagrams, GPU-based Monte-Carlo sampling, and a Bayesian approach to efficiency. The resulting code reaches orders of magnitude improvement in performance relative to existing methods. For example, data rates similar to world-leading, real instruments can be achieved on a 2017 laptop, generating 106 neutrons per second at the sample position of a high-resolution small angle scattering instrument. The method is benchmarked, and is shown to be in agreement with previous work. Finally, the method is demonstrated on a mature instrument design, where a sub-second turnaround in an interactive simulation process allows the rapid exploration of a wide range of options. This results in a doubling of the design performance, at the same time as reducing the hardware cost by 40%. Keywords: GPU; adjoint; Monte-Carlo; neutron; simulation 1. Introduction Neutron scattering facilities such as the European Spallation Source (ESS) currently under construction in Lund, Sweden, and the world-leading Institut Laue Langevin (ILL) in Grenoble, France, rely increasingly upon beam simulations to steer the evolution of the design of their instruments. The credibility of instrument conceptual design is now largely driven by Monte-Carlo simulation in preference to the more traditional approach of using analytical derivations. -

Simulating Neutron Transport in Long Beamlines at a Spallation Neutron Source Using Geant4

Simulating neutron transport in long beamlines at a spallation neutron source using Geant4 Douglas D. DiJulio a;b;∗ Isak Svensson a;b Xiao Xiao Cai a;c Joakim Cederkall b and Phillip M. Bentley a a European Spallation Source ESS ERIC, SE-221 00 Lund, Sweden b Department of Physics, Lund University, SE-221 00 Lund, Sweden c DTU Nutech, Technical University of Denmark, Roskilde, Denmark Abstract. The transport of neutrons in long beamlines at spallation neutron sources presents a unique challenge for Monte- Carlo transport calculations. This is due to the need to accurately model the deep-penetration of high-energy neutrons through meters of thick dense shields close to the source and at the same time to model the transport of low- energy neutrons across distances up to around 150 m in length. Typically, such types of calculations may be carried out with MCNP-based codes or alternatively PHITS. However, in recent years there has been an increased interest in the suitability of Geant4 for such types of calculations. Therefore, we have implemented supermirror physics, a neutron chopper module and the duct-source variance reduction technique for low- energy neutron transport from the PHITS Monte-Carlo code into Geant4. In the current work, we present a series of benchmarks of these extensions with the PHITS software, which demonstrates the suitability of Geant4 for simulating long neutron beamlines at a spallation neutron source, such as the European Spallation Source, currently under construction in Lund, Sweden. Keywords: Geant4, PHITS, Spallation Source, Neutron supermirror 1. Introduction At modern high-power spallation neutron sources, a wide-range of neutron energies (below meVs up to GeVs) are generated through the bombardment of a heavy metal target with a high-energy proton beam (up to a few GeV). -

Development of High Intensity Neutron Source at the European Spallation Source

Development of High Intensity Neutron Source at the European Spallation Source V. Santoro a;b;∗,K.H. Andersen a, D. D. DiJulio a;b, E. B. Klinkby c, T. M. Miller a, D. Milstead d, G. Muhrer a, M. Strobl e, A. Takibayev a, L. Zanini a, and O. Zimmer f a European Spallation Source ERIC, P.O. Box 176, 22100 Lund, Sweden b Department of Physics, Lund University, 22100 Lund, Sweden c DTU Nutech, Technical University of Denmark, DTU Risø Campus, Frederiksborgvej 399, DK-4000, Roskilde, Denmark, d Stockholm University, SE-106 91 Stockholm, Sweden e Paul Scherrer Institut, PSI AarebrÜcke, 5232 Villigen, Switzerland f Institut Laue-Langevin - 71 avenue des Martyrs CS 20156, 38042 GRENOBLE Cedex 9 - France Abstract. The European Spallation Source being constructed in Lund, Sweden will provide the user community with a neutron source of unprecedented brightness. By 2025, a suite of 15 instruments will be served by a high-brightness moderator system placed above the spallation target. The ESS infrastructure, consisting of the proton linac, the target station, and the instrument halls, allows for implementation of a second source below the spallation target. We propose to develop a second neutron source with a high-intensity moderator able to (1) deliver a larger total cold neutron flux, (2) provide high intensities at longer wavelengths in the spectral regions of Cold (4-10 Å), Very Cold (10-40 Å), and Ultra Cold (several 100 Å) neutrons, as opposed to Thermal and Cold neutrons delivered by the top moderator. Offering both unprecedented brilliance, flux, and spectral range in a single facility, this upgrade will make ESS the most versatile neutron source in the world and will further strengthen the leadership of Europe in neutron science. -

Nuclear Data Development at the European Spallation Source

Nuclear data development at the European Spallation Source Jose Ignacio Marquez Damian a;∗, Douglas D. DiJulio a, and Günter Muhrer a, a Spallation Physics Group, European Spallation Source ERIC, Lund, Sweden Abstract. Transport calculations for neutronic design require accurate nuclear data and validated computational tools. In the Spallation Physics Group, at the European Spallation Source, we perform shielding and neutron beam calculations to help the deployment of the instrument suite for the current high brilliance (top) moderator, as well for the design of the high intensity bottom moderator, currently under study for the facility. This work includes providing the best available nuclear data in addition to improving models and tools when necessary. In this paper we present the status of these activities, which include a set of thermal scattering kernels for moderator, reflector, and structural materials, the development of new kernels for beryllium considering crystallite size effects, nanodiamonds, liquid hydrogen and deuterium based on path integral molecular dynamics, and the use of the software package NCrystal to assist the development of nuclear data in the framework of the new HighNESS project. Keywords: thermal scattering, neutron, nuclear data libraries 1. Introduction Neutronic calculations for the simulation and development of moderators and reflectors at spallation neutron sources, such as the European Spallation Source (ESS) [1], currently under construction in Lund, Sweden, require accurate models for the interaction of thermal and cold neutrons with matter in order to get the best possible estimates for the performance of the facility. For this reason, we have launched several activities at the ESS to produce updated and revised libraries for Monte-Carlo simulations.