MD²P² 2014 – Model-Driven Development Processes and Practices Workshop Proceedings Regina Hebig, Reda Bendraou, Markus Völter, and Michel Chaudron (Eds.)

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Business Process Management & Enterprise Architecture Track

BIO - Bioinformatics Track
Track Co-Chairs: Juan Manuel Corchado, University of Salamanca, Spain; Paola Lecca, University of Trento, Italy; Dan Tulpan, University of Guelph, Canada
An Insight into Biological Data Mining based on Rarity and Correlation as Constraints
Souad Bouasker, University of Tunis ElManar, Tunisia
Drug Target Discovery Using Knowledge Graph Embeddings
Sameh K. Mohamed, Insight Centre for Data Analytics, Ireland; Aayah Nounu, University of Bristol, UK; Vit Novacek, INSIGHT @ NUI Galway, Ireland
Ensemble Feature Selection for Biomarker Discovery in Mass Spectrometry-based Metabolomics
Aliasghar Shahrjooihaghighi, Hichem Frigui, Xiang Zhang, Xiaoli Wei, Biyun Shi, Craig J. McClain (all University of Louisville, USA)
Molecule Specific Normalization for Protein and Metabolite Biomarker Discovery
Ameni Trabelsi, Biyun Shi, Xiaoli Wei, Hichem Frigui, Xiang Zhang, Aliasghar Shahrjooihaghighi, Craig McClain (all University of Louisville, USA)
BPMEA - Business Process Management & Enterprise Architecture Track
Track Co-Chairs: Marco Brambilla, Politecnico di -

Collaborative Development of a Space System Simulation Model

Collaborative Development of a Space System Simulation Model
Volker Schaus*, Karsten Großekatthöfer**, Daniel Lüdtke*, Andreas Gerndt*
*German Aerospace Center (DLR), Simulation and Software Technology, Software for Space Systems and Interactive Visualization, Lilienthalplatz 7, 38108 Braunschweig, Germany. Email: volker.schaus, daniel.luedtke, [email protected]
**Astro- und Feinwerktechnik Adlershof GmbH (AFW), Albert-Einstein-Str. 12, 12489 Berlin. Email: [email protected]
Abstract: Modeling and simulation is a powerful method to evaluate the design of a space system. Simulation models represent valuable knowledge and require considerable time and effort for their development. Means for reuse should be taken into account from the beginning of model creation. This paper presents a collaborative model development process, which creates prerequisite information for successful reuse of simulation models. It introduces a knowledge model and proposes reviewed documentation at each step in the process. These pieces of documentation enable successive reuse at different levels. The modeling process was evaluated by creating a system simulation of the OOV-TET-1 satellite including three satellite subsystems, dynamics, kinematics, and space environment.
temperatures of satellite components. Widespread tools for thermal analysis are ESARAD/ESATAN or ThermalDesktop. Such examples can be named for all engineering domains involved in space system design. However, all these simulations stay within their domain. Portability and data exchange between the simulations are major issues and make the evaluation of cross-domain dependencies very difficult. Recent initiatives, such as European Space Agency's (ESA) SimVis project [4] and German Aerospace Center's (DLR) Virtual Satellite initiative [5] aim to establish overall system

Update 01/2020

update: The magazine of SICP – Software Innovation Campus Paderborn, 1 | 2020
Contents
Editorial
News
Best Poster Award at the "8th International Conference on Model-Driven Engineering and Software Development"
Open platform for connected-car scenarios: the ITEA project APPSTACLE
Artificial intelligence to develop innovative business model ideas
Bundling the cultural offerings in OWL
Prof. Dr. Eric Bodden is the new director of the "Digital Security" competence area
Promoting competencies in the field of Economy 4.0 (Wirtschaft 4.0) at technical colleges
Zukunftsmeile 2 as the home of SICP
SICP, together with itemis AG and S&N Invent, hosts the eleventh IT-Flash
Successful first Students' Day of the Software Innovation Campus Paderborn
District government hands over funding notices for the digital model project of the district and Paderborn University
Almost 80 percent of the companies surveyed store sensitive data in the public cloud
Deepfakes, multi-stage ransomware attacks, and more
From IT passion to career mission
"Legislators should ban cryptocurrencies across the board.": Prof. Dr. Eric Bodden on IT security
IT security without barriers: research project -

Table of Contents

Table of Contents
Theme: AI and Agents
BIO - Bioinformatics Track
Track Co-Chairs: Paola Lecca, University of Trento, Italy; Dan Tulpan, University of Guelph, Canada; Juan Manuel Corchado, University of Salamanca, Spain
Track Editorial
An Insight into Biological Data Mining based on Rarity and Correlation as Constraints
Souad Bouasker, University of Tunis ElManar, Tunisia; Sadok Ben Yahia, Tallinn University of Technology, Estonia; Gayo Diallo, University of Bordeaux, France
Drug Target Discovery using Knowledge Graph Embeddings
Sameh K. Mohamed, National University of Ireland Galway, Ireland; Aayah Nounu, University of Bristol, UK; Vit Nováček, National University of Ireland Galway, Ireland
Ensemble Feature Selection for Biomarker Discovery in Mass Spectrometry-based Metabolomics
AliAsghar ShahrjooiHaghighi, Hichem Frigui, Xiang Zhang, Xiaoli Wei, Biyun Shi, Craig J. McClain (all University of Louisville, USA)
Molecule Specific Normalization for Protein and Metabolite Biomarker -

Itemis AG Pressemappe

itemis AG press kit (created on 29.09.2021)
Table of contents
Company information
Company description/boilerplate
Contact
Overview of press releases
Press releases
22.07.2019 - itemis and Michael Feathers hold joint workshops
18.07.2019 - itemis is awarded the CreFoZert for the eighth time in a row
01.07.2019 - itemis takes first place as "Innovator of the Year"
20.05.2019 - Risk assessment: cyber security and the human factor
08.04.2019 - Award-winning: app development at itemis
22.03.2019 - Working effectively with legacy code: successful workshops with Michael Feathers
27.02.2019 - IT-Flash #8: short talks and networking in Paderborn

Model Driven Language Framework to Automate Command and Data Handling Code Generation

Model Driven Language Framework to Automate Command and Data Handling Code Generation
Meenakshi Deshmukh, Benjamin Weps: German Aerospace Center (DLR), Simulation and Software Technology, Lilienthalplatz 7, 38108, Braunschweig, Germany. meenakshi.deshmukh, [email protected]
Pedro Isidro: Instituto Superior Técnico, Department of Mechanical Engineering, Avenida Rovisco Pais 1, 1049-001 Lisboa, Portugal. [email protected]
Andreas Gerndt: German Aerospace Center (DLR), Simulation and Software Technology, Lilienthalplatz 7, 38108, Braunschweig, Germany. [email protected]
Abstract: On-board computer software (OBSW) is an integral part of every space mission. It has been continuously growing in size and complexity. The insufficient level of automation in the development process of such software leads to low software reusability and drives up the costs. This paper presents a generic approach to describe and model the on-board software in terms of the data that it processes. A Domain Specific Language (DSL) based framework is developed, which provides a DSL editor, a model validator, and a code generator. Using the framework, a system data model is created. C++ code is generated from it, which is then customized to implement low-level behavior. As a proof of concept, the telecommand handling functionality of the OBSW is developed to prove the feasibility of applying the solution to the whole system. Based on the analysis conducted on the source code of the TET-1 satellite of the German Aerospace Center (DLR), a DSL is designed and implemented.
Figure 1. General Structure of Spacecraft's On-Board Software System (TET-1 Image Credit [1])
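The workflow this abstract outlines (create a data model, validate it, generate C++ that is later customized by hand) can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the `Telecommand` and `Parameter` classes, the duplicate-ID check, and the shape of the emitted C++ are hypothetical and are not taken from the paper's DSL or its generator.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Parameter:
    """One telecommand argument (hypothetical model element)."""
    name: str
    cpp_type: str


@dataclass
class Telecommand:
    """One telecommand in the system data model (hypothetical)."""
    name: str
    command_id: int
    parameters: List[Parameter] = field(default_factory=list)


def validate(commands: List[Telecommand]) -> List[str]:
    """Model validation step: report telecommands that share an ID."""
    errors = []
    seen = {}
    for tc in commands:
        if tc.command_id in seen:
            errors.append(
                f"duplicate id {tc.command_id}: {seen[tc.command_id]} and {tc.name}"
            )
        else:
            seen[tc.command_id] = tc.name
    return errors


def generate_cpp(tc: Telecommand) -> str:
    """Code generation step: emit a C++ struct and a handler declaration."""
    lines = [
        "// Generated from the data model.",
        "#include <cstdint>",
        "",
        f"struct {tc.name} {{",
        f"    static constexpr unsigned int kId = {tc.command_id};",
    ]
    for p in tc.parameters:
        lines.append(f"    {p.cpp_type} {p.name};")
    lines.append("};")
    lines.append("")
    lines.append("// The handler body is written by hand (low-level behavior).")
    lines.append(f"void handle(const {tc.name}& cmd);")
    return "\n".join(lines)


if __name__ == "__main__":
    heater = Telecommand(
        "SetHeaterPower",
        17,
        [Parameter("heater_index", "uint8_t"), Parameter("power_mw", "uint16_t")],
    )
    assert validate([heater]) == []
    print(generate_cpp(heater))
```

Keeping validation separate from generation means an inconsistent model is rejected before any code is emitted, which mirrors the editor, validator, generator split named in the abstract.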

IM VISIER: RECRUITMENT 2.0 Hamburger Trendforschungs-Unternehmen Trendone 10 Weidegrund Web 2.0 Präsentieren

ALWAYS ON: Hamburg's magazine of the digital economy. www.hamburg-media.net. Issue 10, June 2009, Germany, €2.00
In focus: Recruitment 2.0. Welcome to the job market of unlimited possibilities
TRENDS: Finger on the pulse. TrendONE presents current web developments
HAMBURG@WORK: Startups wanted. The application phase for the Webfuture Award 2009 begins
PORTRAIT: The media coordinator. Karl Dietrich Seikel's work behind the scenes
EVENTS BY HAMBURG@WORK: New! Relive the highlights with the photo page
EDITORIAL | TOPTALK
CONTENTS
TOPTALK: Bundestag election campaign: Twitter instead of the marketplace; Bitkom trend topic
TRENDS: Focus: web innovations
NEWTV: Back to the future of music television; 3D: a new dimension; Trends in motion
GAMECITY: German Computer Game Award: high in the north; Hamburg at the GDC in San Francisco; Gamecity Hamburg defies the crisis
WEBCITY: Seven questions for Dr. Gottfried Neuhaus
PORTRAIT: Karl Dietrich Seikel, media coordinator of the Hanseatic City of Hamburg
Dear readers, the days when a newspaper advertisement was considered the one true way to recruit employees are long gone. Job postings on the Internet have also become standard. So what are the latest trends in recruitment? What opportunities does Web 2.0 offer HR managers, and also qualified professionals looking for a new challenge? Starting on page 10, we report on the opportunities and risks that business networks and social communities offer for recruiting and job hunting, and on the paths Hamburg companies are taking to present themselves as attractive employers.

Special Issue on Engineering Collaborative Embedded Systems

[RSSV19] B. Rumpe, I. Schaefer, B. Schlingloff, A. Vogelsang: Special issue on engineering collaborative embedded systems. In: SICS Software-Intensive Cyber-Physical Systems, 2019. www.se-rwth.de/publications/
SPECIAL ISSUE PAPER
Special issue on engineering collaborative embedded systems
Bernhard Rumpe · Ina Schaefer · Bernd-Holger Schlingloff · Andreas Vogelsang
© Springer-Verlag GmbH Germany, part of Springer Nature 2019
1 Collaborative embedded systems (CrESt): the BMBF project
Welcome to the special issue on "Collaborative Embedded Systems" (CrESt). While in the old days software in embedded systems was isolated and mainly concentrating on predefined functionalities within the overall system context, software has now become the connecting factor between cooperating systems of systems and, because of its increasing functionality, also the driving complexity factor. Cyber-physical systems are composed of several autonomous individual systems, which have to collaborate in order to achieve common objectives. Collaboration of systems is necessarily based on collaboration of connected software components that control and govern the overall cyber-physical system behaviour.
schweig, TU Kaiserslautern, TU München, and Univ. Duisburg-Essen. Their goal is to deepen the knowledge on how to develop collaborative embedded systems in the new challenging environments.
The CrESt project can be seen as a necessary extension of the projects SPES 2020 (2009–2012) and SPES_XT (2012–2015). These projects successfully developed concepts, methods and tools to master the challenges of today's complex embedded system development. The SPES 2020 methodology on model-based engineering of embedded systems, and its extension, the SPES_XT methodology, are documented in two Springer volumes and are now widely applied in industrial developments. The ambition of the CrESt project is to gain a holistic view on the additional challenges imposed by the fact that not only a single embed-

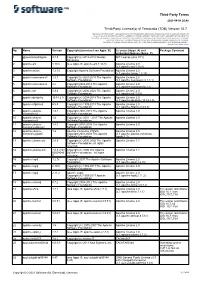

Third-Party Terms Third-Party License(S) of Terracotta (TDB

Third-Party Terms 2021-04-09 20:48
Third-Party License(s) of Terracotta (TDB) Version 10.7
VERSIONS OF THE THIRD-PARTY COMPONENTS MAY BE UTILIZED, EMBEDDED, BUNDLED OR OTHERWISE INCLUDED IN SOME OF THE PRODUCTS ("Product") YOU HAVE LICENSED PURSUANT TO A COMMERCIAL LICENSE AGREEMENT. THESE THIRD-PARTY COMPONENTS MAY BE SUBJECT TO ADDITIONAL OR DIFFERENT LICENSE RIGHTS, TERMS AND CONDITIONS AND / OR REQUIRE CERTAIN NOTICES BY THEIR THIRD-PARTY LICENSORS. SOFTWARE AG IS OBLIGED TO PASS ANY CURRENT AND FUTURE TERMS OF SUCH LICENSES THROUGH TO ITS LICENSEES. SOFTWARE AG PROVIDES SOURCE CODE OF THIRD-PARTY COMPONENTS WHERE REQUIRED BY AN OPEN SOURCE LICENSE AT https://opensource.softwareag.com/
| No. | Name | Version | Copyright (oversized see Appx. B) | Licenses (Appx. A) and embedded Notices (Appx. C) | Package Comment |
| --- | --- | --- | --- | --- | --- |
| 1 | @swimlane/dragula | 3.7.3 | Copyright (c) 2015-2016 Nicolas Bevacqua | MIT License (also X11) | |
| 2 | apache-ant | 1.10.8 | (see Appx. B: apache-ant 1.10.8) | Apache License 2.0 (c.f. apache-ant 1.10.8) | |
| 3 | apache-axiom | 1.2.13 | Copyright Apache Software Foundation | Apache License 2.0 (c.f. apache-axiom 1.2.13) | |
| 4 | apache-commons-cli | 1.3.1 | Copyright (c) 2001-2015 The Apache Software Foundation. | Apache License 2.0 (c.f. apache-commons-cli 1.3.1) | |
| 5 | apache-commons-io | 2.2 | Copyright 2002-2012 The Apache Software Foundation | Apache License 2.0 (c.f. apache-commons-io 2.2) | |
| 6 | apache-cxf | 3.3.6 | Copyright (c) 2006-2020 The Apache Software Foundation | Apache License 2.0 (c.f. - | |

HNI Annual Report 2009.Pdf

HEINZ NIXDORF INSTITUTE Annual Report 2009
HEINZ NIXDORF INSTITUTE, University of Paderborn, Interdisciplinary Research Center for Computer Science and Technology
Fuerstenallee 11, D-33102 Paderborn, Phone +49(0)5251|60 62 11, Fax +49(0)5251|60 62 12, http://www.hni.uni-paderborn.de
Members of Board of Directors
Professor Group: Prof. Dr.-Ing. habil. Wilhelm Dangelmaier (President)*, Prof. Dr.-Ing. Jürgen Gausemeier *, Prof. Dr.-Ing. Reinhard Keil *, Prof. Dr. math. Friedhelm Meyer auf der Heide, Prof. Dr. rer. nat. Burkhard Monien, Prof. Dr. phil. Volker Peckhaus *, Prof. Dr. rer. nat. Franz Josef Rammig, Prof. Dr.-Ing. Ulrich Rückert, Prof. Dr. rer. nat. Wilhelm Schäfer, Prof. Dr.-Ing. habil. Ansgar Trächtler *
Members of Executive Board
Academic Staff: Volker Brink, Dr. rer. pol. Christoph Laroque
Non-Academic Staff: Wilfried Bröckelmann
Student Group: Andreas Cord-Landwehr
Members of Curatorship
Nominated by Stiftung Westfalen: Dr.-Ing. Horst Nasko, Deputy Chairman of Stiftung Westfalen; Heinz Paus, Mayor of the City Paderborn; Prof. Dr. rer. nat. Hartwig Steusloff, Fraunhofer-Institute for Information and Data Processing
Nominated by the University: Prof. Dr. rer. nat. Thomas Lengauer Ph. D., Max-Planck-Institute for Informatics; Prof. Dr. rer. nat. Nikolaus Risch, Rector of the University of Paderborn; Prof. Dr. Holm Tetens, Freie Universität Berlin
Jointly nominated: Prof. Dr. Otto K. Ferstl, Otto Friedrich University of Bamberg; Prof. Dr. Klaus Waldschmidt, Johann Wolfgang Goethe-University Frankfurt/Main; Prof. Dr.-Ing. Prof. E.h. Dr.-Ing. E.h. Dr. h.c. mult. Engelbert Westkämper, University of Stuttgart
Statistics of the Institute 2 Dr.-Phil. -

ITS-Kongress – Nutzt Der Senat Die Chancen Der Innovationsförderung?

Parliament (Bürgerschaft) of the Free and Hanseatic City of Hamburg, Printed Matter (Drucksache) 21/16793, 21st electoral term, 16.04.19
Written minor interpellation by Members Carsten Ovens and Dennis Thering (CDU) of 08.04.19 and answer of the Senate
Re: ITS Congress: is the Senate making use of the opportunities of innovation funding?
With Drs. 21/13503, the Senate sets out the objectives of the ITS strategy: increased road safety, reduction of environmental impacts, greater efficiency of the overall system, secure distribution of information, and innovation funding. Some time has passed since June 2018, which raises the question of the current implementation status of these objectives, particularly with regard to the systemically relevant topic of innovation funding. Against this background, we ask the Senate:
As part of the ITS strategy, the Senate pursues successful innovation funding. This is shown both by the many ITS projects carried out in cooperation with companies and by the partnerships formalized in memoranda of understanding (MoU) with major companies such as Volkswagen, BMW, Daimler, Deutsche Bahn, HERE, and T-Systems. For example, on 3 April 2019, at a press conference attended by the head (Präses) of the Ministry of Economy, Transport and Innovation (BWVI), Volkswagen announced that it would carry out test drives with fully automated vehicles on the test track in Hamburg's city center (see https://www.hamburg.de/bwvi/medien/12407892/2019-04-03-bwvi-teststrecke/). With this as preface, the Senate answers the questions on the basis of information from Hamburger Hochbahn AG (HOCHBAHN) as follows:
1. To what extent does the Senate intend to position Hamburg as a model region for intelligent mobility and transport systems in the context of the ITS Congress? What has actually been done so far? Please list in detail and without reference to other printed matters.

Model-Driven Generation of Graphical Editors in Eclipse

International Journal of Computer Science & Information Technology (IJCSIT) Vol 8, No 5, October 2016
MODIGEN: MODEL-DRIVEN GENERATION OF GRAPHICAL EDITORS IN ECLIPSE
Markus Gerhart and Prof. Dr. Marko Boger, Department of Applied Computer Science, University of Applied Sciences, Konstanz
ABSTRACT
Domain-specific modeling is increasingly understood as an alternative comparable to classical software development. Textual domain-specific languages (DSLs) already have a massive impact; graphical DSLs, in contrast, still have to show their full potential. The established textual DSLs are normally generated from a domain-specific grammar or other specific textual descriptions. An advantage of textual DSLs is that they can be developed cost-efficiently. In this paper, we describe a similar approach for the creation of graphical DSLs from textual descriptions. We present a set of specially developed textual DSLs to fully describe graphical DSLs based on node and edge diagrams. These are, together with an EMF meta-model, the input for a generator that produces an Eclipse-based graphical editor. The entire project is available as open source under the name MoDiGen.
KEYWORDS
Model-Driven Software Development (MDSD), Domain-Specific Language (DSL), Xtext, Eclipse Modeling Framework (EMF), Metamodel, Model-Driven Architecture (MDA), Graphical Editor
1. INTRODUCTION
Domain-Specific Languages (DSLs) are of crucial importance in Model-Driven Engineering (MDE), also known as Model-Driven Software Development (MDSD) or Model-Driven Development (MDD), and in Model-Driven Architecture (MDA). A survey of MDE practitioners [24] shows that MDE users make use of multiple modelling languages. Nearly 40% of participants had used specially designed custom DSLs, 25% had used off-the-shelf DSLs, and only UML had been used more widely than DSLs (by 85% of participants).