Turing and Von Neumann's Brains and Their Computers

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

6. Knowledge, Information, and Entropy the Book John Von

6. Knowledge, Information, and Entropy The book John von Neumann and the Foundations of Quantum Physics contains a fascinating and informative article written by Eckehart Kohler entitled “Why von Neumann Rejected Carnap’s Dualism of Information Concept.” The topic is precisely the core issue before us: How is knowledge connected to physics? Kohler illuminates von Neumann’s views on this subject by contrasting them to those of Carnap. Rudolf Carnap was a distinguished philosopher, and member of the Vienna Circle. He was in some sense a dualist. He had studied one of the central problems of philosophy, namely the distinction between analytic statements and synthetic statements. (The former are true or false by virtue of a specified set of rules held in our minds, whereas the latter are true or false by virtue of their concordance with physical or empirical facts.) His conclusions had led him to the idea that there are two different domains of truth, one pertaining to logic and mathematics and the other to physics and the natural sciences. This led to the claim that there are “Two Concepts of Probability,” one logical, the other physical. That conclusion was in line with the fact that philosophers were then divided between two main schools as to whether probability should be understood in terms of abstract idealizations or physical sequences of outcomes of measurements. Carnap’s bifurcations implied a similar division between two different concepts of information, and of entropy. In 1952 Carnap was working at the Institute for Advanced Study in Princeton and about to publish a work on his dualistic theory of information, according to which epistemological concepts like information should be treated separately from physics.

Edsger Dijkstra: the Man Who Carried Computer Science on His Shoulders

INFERENCE / Vol. 5, No. 3 Edsger Dijkstra: The Man Who Carried Computer Science on His Shoulders. Krzysztof Apt

As it turned out, the train I had taken from Nijmegen to Eindhoven arrived late. To make matters worse, I was then unable to find the right office in the university building. When I eventually arrived for my appointment, I was more than half an hour behind schedule. The professor completely ignored my profuse apologies and proceeded to take a full hour for the meeting. It was the first time I met Edsger Wybe Dijkstra. At the time of our meeting in 1975, Dijkstra was 45 years old. The most prestigious award in computer science, the ACM Turing Award, had been conferred on him three years earlier. Almost twenty years his junior, I knew very little about the field; I had only learned what a flowchart was a couple of weeks earlier.

Edsger Dijkstra was born in Rotterdam in 1930. He described his father, at one time the president of the Dutch Chemical Society, as “an excellent chemist,” and his mother as “a brilliant mathematician who had no job.”1 In 1948, Dijkstra achieved remarkable results when he completed secondary school at the famous Erasmiaans Gymnasium in Rotterdam. His school diploma shows that he earned the highest possible grade in no less than six out of thirteen subjects. He then enrolled at the University of Leiden to study physics. In September 1951, Dijkstra’s father suggested he attend a three-week course on programming in Cambridge. It turned out to be an idea with far-reaching consequences.

The Turing Approach Vs. Lovelace Approach

Connecting the Humanities and the Sciences: Part 2. Two Schools of Thought: The Turing Approach vs. The Lovelace Approach* Walter Isaacson, The Jefferson Lecture, National Endowment for the Humanities, May 12, 2014 That brings us to another historical figure, not nearly as famous, but perhaps she should be: Ada Byron, the Countess of Lovelace, often credited with being, in the 1840s, the first computer programmer. The only legitimate child of the poet Lord Byron, Ada inherited her father’s romantic spirit, a trait that her mother tried to temper by having her tutored in math, as if it were an antidote to poetic imagination. When Ada, at age five, showed a preference for geography, Lady Byron ordered that the subject be replaced by additional arithmetic lessons, and her governess soon proudly reported, “she adds up sums of five or six rows of figures with accuracy.” Despite these efforts, Ada developed some of her father’s propensities. She had an affair as a young teenager with one of her tutors, and when they were caught and the tutor banished, Ada tried to run away from home to be with him. She was a romantic as well as a rationalist. The resulting combination produced in Ada a love for what she took to calling “poetical science,” which linked her rebellious imagination to an enchantment with numbers. For many people, including her father, the rarefied sensibilities of the Romantic Era clashed with the technological excitement of the Industrial Revolution. Lord Byron was a Luddite. Seriously. In his maiden and only speech to the House of Lords, he defended the followers of Ned Ludd who were rampaging against mechanical weaving machines that were putting artisans out of work.

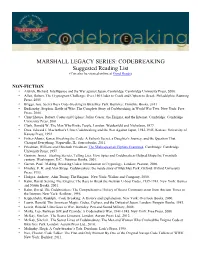

CODEBREAKING Suggested Reading List (Can Also Be Viewed Online at Good Reads)

MARSHALL LEGACY SERIES: CODEBREAKING Suggested Reading List (Can also be viewed online at Good Reads)

NON-FICTION
• Aldrich, Richard. Intelligence and the War against Japan. Cambridge: Cambridge University Press, 2000.
• Allen, Robert. The Cryptogram Challenge: Over 150 Codes to Crack and Ciphers to Break. Philadelphia: Running Press, 2005.
• Briggs, Asa. Secret Days: Code-breaking in Bletchley Park. Barnsley: Frontline Books, 2011.
• Budiansky, Stephen. Battle of Wits: The Complete Story of Codebreaking in World War Two. New York: Free Press, 2000.
• Churchhouse, Robert. Codes and Ciphers: Julius Caesar, the Enigma, and the Internet. Cambridge: Cambridge University Press, 2001.
• Clark, Ronald W. The Man Who Broke Purple. London: Weidenfeld and Nicolson, 1977.
• Drea, Edward J. MacArthur's Ultra: Codebreaking and the War Against Japan, 1942-1945. Kansas: University of Kansas Press, 1992.
• Fisher-Alaniz, Karen. Breaking the Code: A Father's Secret, a Daughter's Journey, and the Question That Changed Everything. Naperville, IL: Sourcebooks, 2011.
• Friedman, William and Elizebeth Friedman. The Shakespearian Ciphers Examined. Cambridge: Cambridge University Press, 1957.
• Gannon, James. Stealing Secrets, Telling Lies: How Spies and Codebreakers Helped Shape the Twentieth Century. Washington, D.C.: Potomac Books, 2001.
• Garrett, Paul. Making, Breaking Codes: Introduction to Cryptology. London: Pearson, 2000.
• Hinsley, F. H. and Alan Stripp. Codebreakers: The Inside Story of Bletchley Park. Oxford: Oxford University Press, 1993.
• Hodges, Andrew. Alan Turing: The Enigma. New York: Walker and Company, 2000.
• Kahn, David. Seizing the Enigma: The Race to Break the German U-boat Codes, 1939-1943. New York: Barnes and Noble Books, 2001.
• Kahn, David. The Codebreakers: The Comprehensive History of Secret Communication from Ancient Times to the Internet.

Motion and Time Study the Goals of Motion Study

Motion and Time Study

The Goals of Motion Study
• Improvement
• Planning / Scheduling (Cost)
• Safety

Know How Long to Complete Task for
• Scheduling (Sequencing)
• Efficiency (Best Way)
• Safety (Easiest Way)

How Does a Job Incumbent Spend a Day
• Value Added vs. Non-Value Added

The General Strategy of IE to Reduce and Control Cost
• Are people productive ALL of the time?
• Which parts of job are really necessary?
• Can the job be done EASIER, SAFER and FASTER?
• Is there a sense of employee involvement?

Some Techniques of Industrial Engineering
• Measure
  – Time and Motion Study
  – Work Sampling
• Control
  – Work Standards (Best Practices)
  – Accounting
  – Labor Reporting
• Improve
  – Small group activities

Time Study
• Observation
  – Stop Watch
  – Computer / Interactive
• Engineering Labor Standards (Bad Idea)
• Job Order / Labor reporting data

History
• Frederick Taylor (1900’s) studied motions of iron workers; attempted to “mechanize” motions to maximize efficiency, including proper rest, ergonomics, etc.
• Frank and Lillian Gilbreth used motion picture to study worker motions; developed 17 motions called “therbligs” that describe all possible work.
• GET (G)
• PUT (P)
• GET WEIGHT (GW)
• PUT WEIGHT (PW)
• REGRASP (R)
• APPLY PRESSURE (A)
• EYE ACTION (E)
• FOOT ACTION (F)
• STEP (S)
• BEND & ARISE (B)
• CRANK (C)

Time Study (Stopwatch Measurement)
1. List work elements
2. Discuss with worker
3. Measure with stopwatch (running vs. reset)
4. Repeat for n observations
5. Compute mean and std dev of work station time
6. Be aware of allowances/foreign element, etc.

Work Sampling
• Determine what is done over a typical day
• Random Reporting
• Periodic Reporting

Learning Curve
• For repetitive work, worker gains skill, knowledge of product/process, etc. over time
• Thus we expect output to increase over time as more units are produced; time to complete task decreases as more units are produced

[Figure: Traditional Learning Curve vs. Actual Curve (Change, Design, Process, etc.)]

Learning Curve
• Usually define learning as a percentage reduction in the time it takes to make a unit.

An Early Program Proof by Alan Turing F

An Early Program Proof by Alan Turing. F. L. MORRIS AND C. B. JONES

The paper reproduces, with typographical corrections and comments, a 1949 paper by Alan Turing that foreshadows much subsequent work in program proving.

Categories and Subject Descriptors: D.2.4 [Software Engineering]: correctness proofs; F.3.1 [Logics and Meanings of Programs]: assertions; K.2 [History of Computing]: software
General Terms: Verification
Additional Key Words and Phrases: A. M. Turing

Introduction

The standard references for work on program proofs attribute the early statement of direction to John McCarthy (e.g., McCarthy 1963); the first workable methods to Peter Naur (1966) and Robert Floyd (1967); and the provision of more formal systems to C. A. R. Hoare (1969) and Edsger Dijkstra (1976). The early papers of some of the computing pioneers, however, show an awareness of the need for proofs of program correctness and even present workable methods (e.g., Goldstine and von Neumann 1947; Turing 1949). The 1949 paper by Alan M. [...]

[...] b) have been omitted in the commentary, and ten other identifiers are written incorrectly. It would appear to be worth correcting these errors and commenting on the proof from the viewpoint of subsequent work on program proofs. Turing delivered this paper in June 1949, at the inaugural conference of the EDSAC, the computer at Cambridge University built under the direction of Maurice V. Wilkes. Turing had been writing programs for an electronic computer since the end of 1945, at first for the proposed ACE, the computer project at the

Are Information, Cognition and the Principle of Existence Intrinsically

Adv. Studies Theor. Phys., Vol. 7, 2013, no. 17, 797 - 818 HIKARI Ltd, www.m-hikari.com http://dx.doi.org/10.12988/astp.2013.3318 Are Information, Cognition and the Principle of Existence Intrinsically Structured in the Quantum Model of Reality? Elio Conte(1,2) and Orlando Todarello(2) (1)School of International Advanced Studies on Applied Theoretical and non Linear Methodologies of Physics, Bari, Italy (2)Department of Neurosciences and Sense Organs University of Bari “Aldo Moro”, Bari, Italy Copyright © 2013 Elio Conte and Orlando Todarello. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. Abstract The thesis of this paper is that Information, Cognition and a Principle of Existence are intrinsically structured in the quantum model of reality. We reach such evidence by using the Clifford algebra. We analyze quantization in some traditional cases of quantum mechanics and, in particular in quantum harmonic oscillator, orbital angular momentum and hydrogen atom. Keywords: information, quantum cognition, principle of existence, quantum mechanics, quantization, Clifford algebra. Corresponding author: [email protected] Introduction The earliest versions of quantum mechanics were formulated in the first decade of the 20th century following about the same time the basic discoveries of physics as the atomic theory and the corpuscular theory of light that was basically updated by Einstein. Early quantum theory was significantly reformulated in the mid-1920s by Werner Heisenberg, Max Born and Pascual Jordan, who created matrix mechanics, Louis de Broglie and Erwin Schrödinger who introduced wave mechanics, and Wolfgang Pauli and Satyendra Nath Bose who introduced the statistics of subatomic particles.

David Hilbert's Contributions to Logical Theory

David Hilbert’s contributions to logical theory CURTIS FRANKS 1. A mathematician’s cast of mind Charles Sanders Peirce famously declared that “no two things could be more directly opposite than the cast of mind of the logician and that of the mathematician” (Peirce 1976, p. 595), and one who would take his word for it could only ascribe to David Hilbert that mindset opposed to the thought of his contemporaries, Frege, Gentzen, Gödel, Heyting, Łukasiewicz, and Skolem. They were the logicians par excellence of a generation that saw Hilbert seated at the helm of German mathematical research. Of Hilbert’s numerous scientific achievements, not one properly belongs to the domain of logic. In fact several of the great logical discoveries of the 20th century revealed deep errors in Hilbert’s intuitions, exemplifying, one might say, Peirce’s bald generalization. Yet to Peirce’s addendum that “[i]t is almost inconceivable that a man should be great in both ways” (Ibid.), Hilbert stands as perhaps history’s principal counter-example. It is to Hilbert that we owe the fundamental ideas and goals (indeed, even the name) of proof theory, the first systematic development and application of the methods (even if the field would be named only half a century later) of model theory, and the statement of the first definitive problem in recursion theory. And he did more. Beyond giving shape to the various sub-disciplines of modern logic, Hilbert brought them each under the umbrella of mainstream mathematical activity, so that for the first time in history teams of researchers shared a common sense of logic’s open problems, key concepts, and central techniques.

Einstein and Hilbert: the Creation of General Relativity

EINSTEIN AND HILBERT: THE CREATION OF GENERAL RELATIVITY ∗ Ivan T. Todorov Institut für Theoretische Physik, Universität Göttingen, Friedrich-Hund-Platz 1 D-37077 Göttingen, Germany; e-mail: [email protected] and Institute for Nuclear Research and Nuclear Energy, Bulgarian Academy of Sciences Tsarigradsko Chaussee 72, BG-1784 Sofia, Bulgaria;∗∗ e-mail: [email protected] ABSTRACT It took eight years after Einstein announced the basic physical ideas behind the relativistic gravity theory before the proper mathematical formulation of general relativity was mastered. The efforts of the greatest physicist and of the greatest mathematician of the time were involved and reached a breathtaking concentration during the last month of the work. Recent controversy, raised by a much publicized 1997 reading of Hilbert’s proof-sheets of his article of November 1915, is also discussed. arXiv:physics/0504179v1 [physics.hist-ph] 25 Apr 2005 ∗ Expanded version of a Colloquium lecture held at the International Centre for Theoretical Physics, Trieste, 9 December 1992 and (updated) at the International University Bremen, 15 March 2005. ∗∗ Permanent address. Introduction Since the supergravity fashion and especially since the birth of superstrings a new science emerged which may be called “high energy mathematical physics”. One fad changes the other each going further away from accessible experiments and into mathematical models, ending up, at best, with the solution of an interesting problem in pure mathematics. The realization of the grand original design seems to be, decades later, nowhere in sight. For quite some time, though, the temptation for mathematical physicists (including leading mathematicians) was hard to resist.

Toward a New Science of Information

Data Science Journal, Volume 6, Supplement, 7 April 2007 TOWARD A NEW SCIENCE OF INFORMATION D Doucette1*, R Bichler 2, W Hofkirchner2, and C Raffl2 *1 The Science of Information Institute, 1737 Q Street, N.W. Washington, D.C. 20009, USA Email: [email protected] 2 ICT&S Center, University of Salzburg - Center for Advanced Studies and Research in Information and Communication Technologies & Society, Sigmund-Haffner-Gasse 18, 5020 Salzburg, Austria Email: [email protected], [email protected], [email protected] ABSTRACT The concept of information has become a crucial topic in several emerging scientific disciplines, as well as in organizations, in companies and in everyday life. Hence it is legitimate to speak of the so-called information society; but a scientific understanding of the Information Age has not had time to develop. Following this evolution we face the need of a new transdisciplinary understanding of information, encompassing many academic disciplines and new fields of interest. Therefore a Science of Information is required. The goal of this paper is to discuss the aims, the scope, and the tools of a Science of Information. Furthermore we describe the new Science of Information Institute (SOII), which will be established as an international and transdisciplinary organization that takes into consideration a larger perspective of information. Keywords: Information, Science of Information, Information Society, Transdisciplinarity, Science of Information Institute (SOII), Foundations of Information Science (FIS) 1 INTRODUCTION Information is emerging as a new and large prospective area of study. The notion of information has become a crucial topic in several emerging scientific disciplines such as Philosophy of Information, Quantum Information, Bioinformatics and Biosemiotics, Theory of Mind, Systems Theory, Internet Research, and many more.

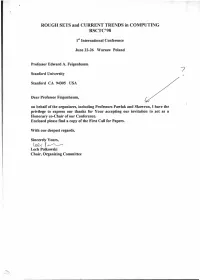

Rough Sets and Current Trends in Computing RSCTC'98

ROUGH SETS and CURRENT TRENDS in COMPUTING RSCTC'98 1st International Conference June 22-26 Warsaw Poland Professor Edward A. Feigenbaum Stanford University Stanford CA 94305 USA Dear Professor Feigenbaum, on behalf of the organizers, including Professors Pawlak and Skowron, I have the privilege to express our thanks for Your accepting our invitation to act as an Honorary co-Chair of our Conference. Enclosed please find a copy of the First Call for Papers. With our deepest regards, Sincerely Yours, Lech Polkowski Chair, Organizing Committee

Rough Set Theory, proposed first by Zdzislaw Pawlak in the early 80's, has recently reached a maturity stage. In recent years we have witnessed a rapid growth of interest in rough set theory and its applications, worldwide. Various real life applications of rough sets have shown their usefulness in many domains. It is felt useful to sum up the present state of rough set theory and its applications, outline new areas of development and, last but not least, to work out further relationships with such areas as soft computing, knowledge discovery and data mining, intelligent information systems, synthesis and analysis of complex objects and non-conventional models of computation. Motivated by this, we plan to organize the Conference in Poland, where rough set theory was initiated and originally developed. An important aim of the Conference is to bring together eminent experts from diverse fields of expertise in order to facilitate mutual understanding and cooperation and to help in cooperative work aimed at new hybrid paradigms possibly better suited to various aspects of analysis of real life phenomena. We are pleased to announce that the following experts have agreed to serve in the Committees of our Conference.

The Intellectual Journey of Hua Loo-Keng from China to the Institute for Advanced Study: His Correspondence with Hermann Weyl

ISSN 1923-8444 [Print] Studies in Mathematical Sciences ISSN 1923-8452 [Online] Vol. 6, No. 2, 2013, pp. [71-82] www.cscanada.net DOI: 10.3968/j.sms.1923845220130602.907 www.cscanada.org The Intellectual Journey of Hua Loo-keng from China to the Institute for Advanced Study: His Correspondence with Hermann Weyl Jean W. Richard[a],* and Abdramane Serme[a] [a] Department of Mathematics, The City University of New York (CUNY/BMCC), USA. * Corresponding author. Address: Department of Mathematics, The City University of New York (CUNY/BMCC), 199 Chambers Street, N-599, New York, NY 10007-1097, USA; E-mail: [email protected] Received: February 4, 2013/ Accepted: April 9, 2013/ Published: May 31, 2013 “The scientific knowledge grows by the thinking of the individual solitary scientist and the communication of ideas from man to man. Hua has been of great value in this respect to our whole group, especially to the younger members, by the stimulus which he has provided.” (Hermann Weyl in a letter about Hua Loo-keng, 1948).† Abstract: This paper explores the intellectual journey of the self-taught Chinese mathematician Hua Loo-keng (1910-1985) from Southwest Associated University (Kunming, Yunnan, China)‡ to the Institute for Advanced Study at Princeton. The paper seeks to show how during the Sino-Japanese war, a genuine cross-continental mentorship grew between the prolific German mathematician Hermann Weyl (1885-1955) and the gifted mathematician Hua Loo-keng. Their correspondence offers a profound understanding of a solidarity-building effort that can exist in the scientific community.