Analysing Dense Passage Retrieval for Multi-Hop Question Answering

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Nucleosynthesis

Nucleosynthesis is the process that creates new atomic nuclei from pre-existing nucleons, primarily protons and neutrons. The first nuclei were formed about three minutes after the Big Bang, through the process called Big Bang nucleosynthesis. Seventeen minutes later the universe had cooled to a point at which these processes ended, so only the fastest and simplest reactions occurred, leaving our universe containing about 75% hydrogen, 24% helium, and traces of other elements such as lithium and the hydrogen isotope deuterium. The universe still has approximately the same composition today. Heavier nuclei were created from these by several processes. Stars formed and began to fuse light elements into heavier ones in their cores, giving off energy in the process, known as stellar nucleosynthesis. Fusion processes create many of the lighter elements up to and including iron and nickel, and these elements are ejected into space (the interstellar medium) when smaller stars shed their outer envelopes and become white dwarfs. The remains of their ejected mass form the planetary nebulae observable throughout our galaxy. Supernova nucleosynthesis within exploding stars, by fusing carbon and oxygen, is responsible for the abundances of elements between magnesium (atomic number 12) and nickel (atomic number 28).[1] Supernova nucleosynthesis is also thought to be responsible for the creation of rarer elements heavier than iron and nickel, in the last few seconds of a type II supernova event. The synthesis of these heavier elements is endothermic: it absorbs energy produced during the supernova explosion. Some of those elements are created from the absorption of multiple neutrons (the r-process) in the period of a few seconds during the explosion.

Primordial Nucleosynthesis: from Precision Cosmology To

DSF-20/2008, FERMILAB-PUB-08-216-A, IFIC/08-37. Primordial Nucleosynthesis: from precision cosmology to fundamental physics. Fabio Iocco (a,1), Gianpiero Mangano (b), Gennaro Miele (b,c), Ofelia Pisanti (b), Pasquale D. Serpico (d,2). (a) INAF, Osservatorio Astrofisico di Arcetri, Largo E. Fermi 5, I-50125 Firenze, Italy; (b) Dip. Scienze Fisiche, Università di Napoli Federico II & INFN, Sez. di Napoli, Complesso Univ. Monte S. Angelo, Via Cintia, I-80126 Napoli, Italy; (c) Instituto de Física Corpuscular (CSIC-Universitat de València), Ed. Institutos de Investigación, Apartado de Correos 22085, E-46071 València, Spain; (d) Center for Particle Astrophysics, Fermi National Accelerator Laboratory, Batavia, IL 60510-0500, USA. Abstract: We present an up-to-date review of Big Bang Nucleosynthesis (BBN). We discuss the main improvements which have been achieved in the past two decades on the overall theoretical framework, summarize the impact of new experimental results on nuclear reaction rates, and critically re-examine the astrophysical determinations of light nuclei abundances. We then report on how BBN can be used as a powerful test of new physics, constraining a wide range of ideas and theoretical models of fundamental interactions beyond the standard model of strong and electroweak forces and Einstein’s general relativity. Key words: Primordial Nucleosynthesis; Early Universe; Physics Beyond the Standard Model. PACS: 98.80.Ft; 26.35.+c; 98.62.Ai. arXiv:0809.0631v2 [astro-ph] 16 Apr 2009. Contents: 1 Introduction; 2 Standard Cosmology. Footnote 1: Present address: Institut

Direct Measurement of the Neutron

Louisiana State University, LSU Digital Commons, LSU Doctoral Dissertations, Graduate School, 1-9-2020. Stellar Nucleosynthesis: Direct Measurement of the Neutron-Capture Cross Sections of Stable Germanium Isotopes and Design of a Next Generation Ion Trap for the Study of Beta-Delayed Neutron Emission. Alexander Laminack, Louisiana State University and Agricultural and Mechanical College. Follow this and additional works at: https://digitalcommons.lsu.edu/gradschool_dissertations Part of the Instrumentation Commons, Nuclear Commons, Physical Processes Commons, and the Stars, Interstellar Medium and the Galaxy Commons. Recommended Citation: Laminack, Alexander, "Stellar Nucleosynthesis: Direct Measurement of the Neutron-Capture Cross Sections of Stable Germanium Isotopes and Design of a Next Generation Ion Trap for the Study of Beta-Delayed Neutron Emission" (2020). LSU Doctoral Dissertations. 5131. https://digitalcommons.lsu.edu/gradschool_dissertations/5131 This Dissertation is brought to you for free and open access by the Graduate School at LSU Digital Commons. It has been accepted for inclusion in LSU Doctoral Dissertations by an authorized graduate school editor of LSU Digital Commons. For more information, please contact [email protected]. STELLAR NUCLEOSYNTHESIS: DIRECT MEASUREMENT OF THE NEUTRON-CAPTURE CROSS SECTIONS OF STABLE GERMANIUM ISOTOPES AND DESIGN OF A NEXT GENERATION ION TRAP FOR THE STUDY OF β-DELAYED NEUTRON EMISSION. A Dissertation Submitted to the Graduate Faculty of the Louisiana State University and Agricultural and Mechanical College in partial fulfillment of the requirements for the degree of Doctor of Philosophy in The Department of Physics and Astronomy, by Alexander Laminack, B. S., The University of Alabama, 2015. May 2020. To my wife and son: Kristy Allen Alexander Laminack and Daniel Allen Laminack.

Nucleosynthetic Isotope Variations of Siderophile and Chalcophile

Reviews in Mineralogy & Geochemistry, Vol. 81, pp. 107-160, 2016 3 Copyright © Mineralogical Society of America. Nucleosynthetic Isotope Variations of Siderophile and Chalcophile Elements in the Solar System. Tetsuya Yokoyama, Department of Earth and Planetary Sciences, Tokyo Institute of Technology, Ookayama, Tokyo 152-885 Japan, [email protected]; Richard J. Walker, Department of Geology, University of Maryland, College Park, MD 20742 USA, [email protected]. INTRODUCTION. Numerous investigations have been devoted to understanding how the materials that contributed to the Solar System formed, were incorporated into the precursor molecular cloud and the protoplanetary disk, and ultimately evolved into the building blocks of planetesimals and planets. Chemical and isotopic analyses of extraterrestrial materials have played a central role in decoding the signatures of individual processes that led to their formation. Among the elements studied, the siderophile and chalcophile elements are crucial for considering a range of formational and evolutionary processes. Consequently, over the past 60 years, considerable effort has been focused on the development of abundance and isotopic analyses of these elements in terrestrial and extraterrestrial materials (e.g., Shirey and Walker 1995; Birck et al. 1997; Reisberg and Meisel 2002; Meisel and Horan 2016, this volume). In this review, we consider nucleosynthetic isotopic variability of siderophile and chalcophile elements in meteorites. Chapter 4 provides a review for siderophile and chalcophile elements in planetary materials in general (Day et al. 2016, this volume). In many cases, such variability is denoted as an “isotopic anomaly”; however, the term can be ambiguous because several pre- and post-Solar System formation processes can lead to variability of isotopic compositions as recorded in meteorites.

Refueling the Magic Furnace: Kilonova 2017 Rewrites the Story of Element Origins

Barry Wood, University of Houston. Abstract: For more than half a century, we have understood element creation in the stars, described in Marcus Chown’s colorful image as “the magic furnace.” From 1958 until 2017, supernova explosions were thought to be the primary site of element creation above Iron, No. 26 on the Periodic Table. This assumption was radically overturned with the August 17, 2017, arrival of signals from a catastrophic collision of neutron stars. This paper traces the history of element-creation science leading to the striking discoveries attending “Kilonova 2017” that now call for a rewriting of the nucleosynthesis chapter of Big History. Correspondence | Barry Wood, [email protected] Citation | Wood, B. (2018) Refueling the Magic Furnace: Kilonova 2017 Rewrites the Story of Element Origins. Journal of Big History, II(3); 1 - 15. DOI | http://dx.doi.org/10.22339/jbh.v2i3.2300 Introduction: Pangea had recently broken up . . . North America and Europe were slowly drifting apart . . . the Himalayas had not yet appeared . . . tropical jungles harbored enormous predatory dinosaurs that would roam the earth for another 65 million years . . . mammals the size of housecats kept to the shadows . . . [...] Beginning at 24 Hertz, it gradually rose over 100 seconds to several hundred Hertz, indicating the inspiraling of two massive bodies about to detonate in a cataclysmic collision. Following 3,000 cycles, the GW signal came to an abrupt end at 12:41:04.4 UTC (Coordinated Universal Time). Almost immediately (1.74 seconds later) a two-second gamma-ray burst . . .

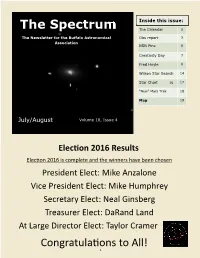

Spectrum July Aug 2016.Pub

The Spectrum: The Newsletter for the Buffalo Astronomical Association. July/August, Volume 18, Issue 4. Inside this issue: The Calendar; Obs report; NSN Pins; Creativity Day; Fred Hoyle; Wilson Star Search; Star Chart; “New” Mars Trek Map. Election 2016 Results: Election 2016 is complete and the winners have been chosen. President Elect: Mike Anzalone; Vice President Elect: Mike Humphrey; Secretary Elect: Neal Ginsberg; Treasurer Elect: DaRand Land; At Large Director Elect: Taylor Cramer. Congratulations to All! BAA Schedule of Astronomy Fun for 2016. Public Nights and Events: Public Nights are the first Saturday of the month, March through October. 2016 Tentative Schedule of Events: July 9, Wilson Star Search; July 30, BAA annual star party at BMO; Aug 6, Public Night BMO: 5pm Wild Festival, followed by bring-a-dish-to-pass picnic, then Public Night BMO; Aug 13, Wilson Star Search (think meteors!); Sep 2/3/4, Black Forest (Rain Fest) Star Party, Cherry Springs Pa.; Sep 3, Public Night BMO; Sep 9, BAA Meeting; Sept 10, Wilson Star Search; Oct 1, Public Night BMO; Oct 8, Wilson Star Search; Oct 14, BAA meeting; Nov 11, BAA meeting; Dec 9, BAA Holiday party. Observatory Report: Ah, Summer is here. The nights are short, HOT and buggy. But have no fear! Dennis B is here. He has donated a window air conditioner. Now we can enjoy cool, bug-free imaging at the Observatory. Life is good once again :-) The new cooling fans for the C-14 are installed and seem to be working.

Nuclear Physics for Astrophysics: from the Laboratory to the Stars

Nuclear Physics for Astrophysics: from the Laboratory to the Stars. Livius Trache, IFIN-HH Bucuresti-Magurele & Cyclotron Institute, Texas A&M University. Texas 2013 Symposium, Dallas, TX, Dec 9-13, 2013. Summary 1. A few contributions of nuclear physics to astrophysics and cosmology • Source of stars’ light • Origin of elements 2. Examples • Big Bang Nucleosynthesis • Confirmation thru BBNucleosynthesis • First determination of Baryon/photon ratio • Number of neutrino types • Number of quarks • Solar neutrino puzzle 3. Indirect methods in NPA with RIBs • Nuclear breakup • Beta-delayed proton decay 4. Some future. Nuclei and Nuclear Astrophysics in XX c. • Eddington – nuclear reactions at the origin of stars’ energy • Astronomy becomes astrophysics; Hubble; desc of galaxies • 1930s – Bethe – CNO cycle and the pp chain; the neutron • 1948 – αβγ primordial reactor (Apr 1, 1948: Alpher, Bethe and Gamow in Phys. Rev.). “The amazing legacy of a wrong paper” (M. Turner) is the beginning of precision cosmology • 1957: B²FH paper (Burbidge, Burbidge, Fowler and Hoyle) and Cameron (Chalk River): BBN and stellar nucleosynthesis • 60s-70s: solar neutrinos detected and solar neutrino puzzle (Pontecorvo, Alvarez… R. Davis Jr., started 1948, Nobel prize 2002) • SM and “The first three minutes” • We, the epigones! – Models – Hydrodynamics – Nuclear data. Nuclear astrophysics • Nuclear astrophysics – increasingly motivation for NP research: – Nuclei are the fuel of the stars – Origin of chemical elements: nucleosynthesis = a large series of nuclear reactions – & elemental/isotopic abundances are indelible fingerprints of cosmic processes • Big successes of NA: – BBN – quantitative, first determination of baryon/photon ratio, or – parameter free (after CMB) – nr. of neutrino types=3 – Heavier elements created in stars – Solar reactions understood (pp-chains, CNO, solar neutrinos…) – Nucleosynthesis is on-going process! – (quasi-) understand novae, XRB, neutron stars …, but not super-novae … H 73% Solar system abundances (A.G.W.

6 X 10.5 Long Title.P65

Cambridge University Press, 978-0-521-18947-7, Fred Hoyle: A Life in Science, Simon Mitton. INDEX: A for Andromeda (Hoyle), 165, 236–7; Aarseth, Sverre, 10; Academic Assistance Council, 48; Active galactic nucleus, 235, 275, 314, 315; Adams, Walter S., 70, 74, 101, 102, 104; Admiralty Signal Establishment, 75, 91, 92, 93–8; Adrian, Lord, 186; Albert, Prince, 58; Alfvén, Hannes, 174–5, 304; All Souls College, 278; Alpha particles, 48, 205, 210; Alpher, Ralph, 116, 199; Aluminium-26, 305; Amari, Sachiko, 305; American Astronomical Society, 195, 319 (Henry Norris Russell Lectureship, 227, 274); American Physical Society, 207, 209; Andromeda Breakthrough (TV series), 237; Andromeda galaxy, 105, 155, 160; Anglo-Australian telescope, 1, 8, 257, 258–9, 262, 279, 282, 285–6, 295–9; Antielectrons, see Positrons; Appleton, Edward, 91–2, 98; Archaeopteryx lithographica, 309–10; Arecibo Observatory, 268–9; Atomic theory, 39, 51, 153, 197–8, 215; Aubrey, John, 312; Australia, see also Anglo-Australian telescope (radio astronomy surveys, 172, 175–6, 184–5, 187–8, 189, 272); Australian Broadcasting Corporation, 139; Australian National University, 295; Baade, Walter, 102–5, 107, 152, 154–5, 157, 159–60, 163, 164, 174, 176, 177–8, 200, 202, 218, 232; Babbage, Charles, 1; Background microwave radiation, 76, 192, 195, 314; Baggaley, Norman, 255; Baker, Henry, 187; Baldwin, Stanley, 81; Balzan Prize, 166, 304; Barium, 213, 217; Barrow, John, 322; Batchelor, George, 239–41, 246, 249, 252, 294; BBC (A for Andromeda TV series, 236–7; birthday broadcast with Hoyle, 306; Hoyle’s radio

Fingerprints of a Local Supernova

To appear in Space Exploration Research, Editors: J. H. Denis, P. D. Aldridge, Chapter 7 (Nova Science Publishers, Inc.), ISBN: 978-1-60692-264-4. Fingerprints of a Local Supernova. Oliver Manuel (a) and Hilton Ratcliffe (b). (a) Nuclear Chemistry, University of Missouri, Rolla, MO 65401 USA; (b) Climate and Solar Science Institute, Cape Girardeau, MO 63701 USA. ABSTRACT. The results of precise analysis of elements and isotopes in meteorites, comets, the Earth, the Moon, Mars, Jupiter, the solar wind, solar flares, and the solar photosphere since 1960 reveal the fingerprints of a local supernova (SN), undiluted by interstellar material. Heterogeneous SN debris formed the planets. The Sun formed on the neutron (n) rich SN core. The ground-state masses of nuclei reveal repulsive n-n interactions that can trigger axial n-emission and a series of nuclear reactions that generate solar luminosity, the solar wind, and the measured flux of solar neutrinos. The location of the Sun's high-density core shifts relative to the solar surface as gravitational forces exerted by the major planets cause the Sun to experience abrupt acceleration and deceleration, like a yoyo on a string, in its orbit about the ever-changing centre-of-mass of the solar system. Solar cycles (surface magnetic activity, solar eruptions, and sunspots) and major climate changes arise from changes in the depth of the energetic SN core remnant in the interior of the Sun. Keywords: supernovae; stellar systems; supernova debris; supernova remnants; origin, formation, abundances of elements; planetary

A Stellar Career, by Benjamin Skuse: The Astronomer Who Taught Us We Are All Made of Stardust Will Celebrate Her 100th Birthday On

A STELLAR CAREER, by Benjamin Skuse. Happy Birthday, Margaret Burbidge. The astronomer who taught us we are all made of stardust will celebrate her 100th birthday on August 12, 2019. Photo credits: Cygnus Loop: ESA / Digitized Sky Survey / Caltech; Burbidge: S&T Archive. July 2019, Sky & Telescope. ©2019 Sky & Telescope. All rights reserved. LADY STARDUST: Margaret Burbidge, who celebrates her 100th birthday in August, had a long and stellar career in multiple fields of astrophysics. One of her most significant achievements was formulating our understanding of nucleosynthesis in stars. Among positions she held in her lifetime were director of the Royal Greenwich Observatory and president of the American Astronomical Society, both the first time that a woman filled the post. The photograph shows Burbidge in Dallas, Texas, in February 1964. “You can’t give telescope time for this junk science! Who does she think she is?” blustered a young upstart upon hearing that an elderly astronomer wanted half a night with one of the brand-new Keck telescopes to observe objects that might disprove the Big Bang theory. Observatory Director Joe Miller was quick to put the youngster in his place: “You just look up Margaret Burbidge, the Margaret Burbidge, and you’ll know who she is,” he said. [...] and for the first time the stars were clearly visible to her, and she was smitten.” This fascination, combined with a talent for mathematics, developed to the point where she was reading the books of astronomer and mathematician Sir James Jeans, a distant relative on her mother’s side, by the age of 12. She went on to study astronomy at University College London, graduat-

SUN → EARTH → CRUST → LIFE: QUANTIFYING the ELEMENTAL FRACTIONATIONS THAT LED to LIFE on EARTH. A. Chopra1 and C. H. Linew

Astrobiology Science Conference 2010 (2010) 5547.pdf. SUN → EARTH → CRUST → LIFE: QUANTIFYING THE ELEMENTAL FRACTIONATIONS THAT LED TO LIFE ON EARTH. A. Chopra (1) and C. H. Lineweaver (1), (1) Planetary Science Institute, Research School of Astronomy and Astrophysics and Research School of Earth Sciences, Australian National University, ACT, Australia, [email protected] One way of answering the question ‘What is life?’ is to look at the ingredients. Oxygen, carbon, hydrogen and nitrogen make up 96.8 ± 0.1% of the mass of life (based on humans and bacteria) [1]. Phosphorus and sulfur together make up 1.0 ± 0.3%. The remaining 2.2 ± 0.2% is dominated by potassium, sodium, calcium, magnesium and chlorine, while 0.03 ± 0.01% is attributed to trace elements such as iron, copper and zinc. Carl Sagan popularized the idea “We are all star stuff” based on the seminal B²FH paper [2]. Since then others such as Davies and Koch [3] noted similarities between the composition of humans and bacteria on one hand and the Earth's crust and oceans on the other. [Figure: abundance in humans (number of atoms, normalised to Si) plotted per element on a logarithmic axis spanning 10^-5 to 10^5.]

Supernova Nucleosynthesis

Supernova nucleosynthesis is the nucleosynthesis of chemical elements in supernova explosions. In sufficiently massive stars, the nucleosynthesis by fusion of lighter elements into heavier ones occurs during sequential hydrostatic burning processes called helium burning, carbon burning, oxygen burning, and silicon burning, in which the ashes of one nuclear fuel become, after compressional heating, the fuel for the subsequent burning stage. During hydrostatic burning these fuels synthesize overwhelmingly the alpha-nucleus (A = 2Z) products. A rapid final explosive burning[1] is caused by the sudden temperature spike owing to passage of the radially moving shock wave that was launched by the gravitational collapse of the core. W. D. Arnett and his Rice University colleagues[2][1] demonstrated that the final shock burning would synthesize the non-alpha-nucleus isotopes more effectively than hydrostatic burning was able to do,[3][4] suggesting that the expected shock-wave nucleosynthesis is an essential component of supernova nucleosynthesis. Together, shock-wave nucleosynthesis and hydrostatic-burning processes create most of the isotopes of the elements carbon (Z = 6), oxygen (Z = 8), and elements with Z = 10–28 (from neon to nickel).[4][5] As a result of the ejection of the newly synthesized isotopes of the chemical elements by supernova explosions, their abundances steadily increased within interstellar gas. That increase became evident to astronomers from the initial abundances in newly born stars exceeding those in earlier-born stars. Elements heavier than nickel are comparatively rare owing to the decline with atomic weight of their nuclear binding energies per nucleon, but they too are created in part within supernovae.