Detecting Disease Outbreaks in Mass Gatherings Using Internet Data

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


List of Available Videos

Artist Concert 3 Doors Down Live at the Download Festival 3 Doors Down Live At The Tabernacle 2014 30 Odd Foot Of Grunts Live at Soundstage 30 Seconds To Mars Live At Download Festival 2013 5 Seconds of Summer How Did We End Up Here? 6ft Hick Notes from the Underground A House Live on Stage A Thousand Horses Real Live Performances ABBA Arrival: The Ultimate Critical Review ABBA The Gold Singles Above And Beyond Acoustic AC/DC AC/DC - No Bull AC/DC In Performance AC/DC Live At River Plate\t AC/DC Live at the Circus Krone Acid Angels 101 A Concert - Band in Seattle Acid Angels And Big Sur 101 Episode - Band in Seattle Adam Jensen Live at Kiss FM Boston Adam Lambert Glam Nation Live Aerosmith Rock for the Rising Sun Aerosmith Videobiography After The Fire Live at the Greenbelt Against Me! Live at the Key Club: West Hollywood Aiden From Hell with Love Air Eating Sleeping Waiting and Playing Air Supply Air Supply Live in Toronto Air Supply Live in Hong Kong Akhenaton Live Aux Docks Des Sud Al Green Everything's Going To Be Alright Alabama and Friends Live at the Ryman Alain Souchon J'veux Du Live Part 2 Alanis Morissette Guitar Center Sessions Alanis Morissette Live at Montreux 2012 Alanis Morissette Live at Soundstage Albert Collins Live at Montreux Alberta Cross Live At The ATO Cabin Alejandro Fernández Confidencias Reales Alejandro Sanz El Alma al Aire en Concierto Ali Campbell Live at the Shepherds Bush Empire Alice Cooper AVO Session Alice Cooper Brutally Live Alice Cooper Good To See You Again Alice Cooper Live at Montreux Alice Cooper -

ANDERTON Music Festival Capitalism

Music Festival Capitalism Chris Anderton Abstract: This chapter adds to a growing subfield of music festival studies by examining the business practices and cultures of the commercial outdoor sector, with a particular focus on rock, pop and dance music events. The events of this sector require substantial financial and other capital in order to be staged and achieve success, yet the market is highly volatile, with relatively few festivals managing to attain longevity. It is argued that these events must balance their commercial needs with the socio-cultural expectations of their audiences for hedonistic, carnivalesque experiences that draw on countercultural understanding of festival culture (the countercultural carnivalesque). This balancing act has come into increased focus as corporate promoters, brand sponsors and venture capitalists have sought to dominate the market in the neoliberal era of late capitalism. The chapter examines the riskiness and volatility of the sector before examining contemporary economic strategies for risk management and audience development, and critiques of these corporatizing and mainstreaming processes. Keywords: music festival; carnivalesque; counterculture; risk management; cool capitalism A popular music festival may be defined as a live event consisting of multiple musical performances, held over one or more days (Shuker, 2017, 131), though the connotations of the word “festival” extend much further than this, as I will discuss below. For the purposes of this chapter, “popular music” is conceived as music that is produced by contemporary artists, has commercial appeal, and does not rely on public subsidies to exist, hence typically ranges from rock and pop through to rap and electronic dance music, but excludes most classical music and opera (Connolly and Krueger 2006, 667).

Organization of a Large Scale Music Event: Planning and Production

Organization of a large scale music event: planning and production. Tatiana Caciur. Thesis, MUBBA, 2012. Abstract (17.1.2012, MUBBA). Author or authors: Tatiana Caciur. Group or year of entry: 2008. Title of report: Organization of a large scale music event: planning and production. Number of pages and appendices: 54 + 4. Teacher/s or supervisor/s: Heta-Liisa Malkavaara. Live entertainment is currently one of the few vital components of the music industry as it struggles to survive in the XXI century and conform to the drastic changes on the music market. Music events occur in great numbers on a daily basis and it is important to plan and produce them in the right manner in order to stay relevant and secure one's place on the market. This report introduces various crucial matters that require attention during the event organization process, such as marketing and strategic planning, communication and promotion, risk management, event programming and capacity management, to name a few. The 5-phase project management model is applied as the base on which this thesis is built. The research is conducted by comparing the theoretical and practical approaches used in the music event business. The practical approach is based on examinations and interviews with the world market leader in live entertainment, Live Nation. An additional analysis of practices used in other large and successful music events in Europe and the USA is also conducted. As this thesis shows, the practical approach is often easier and less rigorous than the theory. Some of the steps in this process may be simply omitted due to being considered of little relevance by the management team; many others are outsourced to professional agencies depending on requirements (security, catering).

Parliamentary Debates (Hansard)

Monday 8 September 2014, Volume 585, No. 34. HOUSE OF COMMONS OFFICIAL REPORT: PARLIAMENTARY DEBATES (HANSARD). Monday 8 September 2014. £5·00. © Parliamentary Copyright House of Commons 2014. This publication may be reproduced under the terms of the Open Parliament licence, which is published at www.parliament.uk/site-information/copyright/. [Column 633] House of Commons, Monday 8 September 2014. The House met at half-past Two o’clock. PRAYERS [MR SPEAKER in the Chair] BUSINESS BEFORE QUESTIONS: DEATH OF A MEMBER. Mr Speaker: It is with great sadness that I have to announce the sudden death of our colleague Jim Dobbin, Member of Parliament for Heywood and Middleton, while on a Council of Europe trip to Poland. [Column 634] The Parliamentary Under-Secretary of State for Communities and Local Government (Penny Mordaunt): Since taking office I have had several meetings with the Fire Brigades Union, the Retained Firefighters Union, the Fire Officers Association and the women’s committee of the FBU. Further meetings are in the diary. It is only through such discussions that we will bring the dispute to an end. Jim Fitzpatrick: I welcome the Minister to her post, although I notice that she failed to answer the question - that is a great start to her ministerial career. The Minister has made positive comments during her visits to fire stations throughout the country. The Government commissioned the Williams review on the impact of medical retirements for firefighters aged between 50 and 60, and especially the impact on women. Do the Government accept the need for minimum fitness standards?

Green Events & Innovations Conference 2019

GREEN EVENTS & INNOVATIONS CONFERENCE 2019 Tuesday 5th March 2019 Royal Garden Hotel, Kensington High St, London, W8 4PT CONTENTS: Welcome; Schedule; Agenda; AGF Juicy Stats; Delegates; International AGF Awards Shortlist; Feature: Campsite waste; Note paper. Welcome to the eleventh edition of the Green Events & Innovations conference (GEI11), presented

Executive of the Week: Columbia Records Senior VP

Bulletin: YOUR DAILY ENTERTAINMENT NEWS UPDATE, JULY 9, 2021. Executive of the Week: Columbia Records Senior VP Marketing Jay Schumer. BY DAN RYS. Tyler, The Creator’s sixth album, CALL ME IF YOU GET LOST, was a smash hit upon its release: 169,000 equivalent album units, the single week highest of his career; a second straight No. 1 on the Billboard 200, and sixth straight top five; and a promotional campaign that included a performance at the BET Awards and several well-received music videos. It also was another release from the artist that came bundled with an innovative marketing plan, helped by a rollout featuring a phone number that fans could call to receive personalized messages from Tyler, which followed the VOTE IGOR campaign that Tyler [...] extensively, and how marketing has changed over the course of his career. “Fans have a lot of things they could be doing at any given moment,” he says, “so you better be giving them a really good reason to give you their attention.” Tyler, The Creator’s CALL ME IF YOU GET LOST debuted at No. 1 on the Billboard 200 this week with 169,000 equivalent album units, the highest mark of his career. What key decisions did you make to help make that happen? The success of this album comes first and foremost from having an artist like Tyler who is one-of-a-kind and has continued to grow with every release. INSIDE: • Inside Track: How the Music Business Is Preparing to Return to the Office • Health Professionals on the Mental Toll of Returning to Shows Mid-Pandemic • Shamrock Capital, After Buying Taylor Swift’s Catalog, Raises $200M to Loan to Creators • For the First Time, People of Color Dominate Recording Academy Board of Trustees: Exclusive • Taylor Swift, Jack Antonoff &

Linkin Park Videos for Download

Linkin park videos for download Numb/Encore [Live] (Clean Version) Linkin Park & Jay Z. Linkin Park - One Step Closer (Official Video) One Step Closer (Official Video) Linkin Park. Linkin Park. Tags: Linkin Park Video Songs, Linkin Park HD Mp4 Videos Download, Linkin Park Music Video Songs, Download 3gp Mp4 Linkin Park Videos, Linkin Park. Linkin Park - Numb Lyrics [HQ] [HD] Linkin Park - Numb (R.I.P Chester Bennington) HORVÁTH TAMÁS - Chester Bennington (Linkin Park) - Numb (Sztárban Sztár ). Linkin Park Heavy Video Download 3GP, MP4, HD MP4, And Watch Linkin Park Heavy Video. Watch videos & listen free to Linkin Park: In the End, Numb & more. Linkin Park is an American band from Agoura Hills, California. Formed in the band rose. This page have Linkin Park Full HD Music Videos. You can download Linkin Park p & p High Definition Blu-ray Quality Music Videos to your computer. Let's take a look back at Linkin Park's finest works as we count down their 10 Best Songs. Watch One More Light by Linkin Park online at Discover the latest music videos by Linkin Park on. hd video download, downloadable videos download music, music Numb - Linkin Park Official Video. Kiiara). Directed by Tim Mattia. Linkin Park's new album 'One More Light'. Available now: Linkin Park - Live At Download Festival In Paris, France Full Concert HD p. Video author: https. Linkin Park "Numb" off of the album METEORA. Directed by Joe Hahn. | http. Linkin Park "CASTLE OF GLASS" off of the album LIVING THINGS. "CASTLE OF I'll be spending all day. Videos. -

Using Green Man Festival As a Case Study

An Investigation to identify how festivals promotional techniques have developed over the years – using Green Man Festival as a case study Victoria Curran BA (hons) Events Management Cardiff Metropolitan University April 2018 i Declaration “I declare that this Dissertation has not already been accepted in substance for any degree and is not concurrently submitted in candidature for any degree. It is the result of my own independent research except where otherwise stated.” Name: Victoria Curran Signed: ii Abstract This research study was carried out in order to explore the different methods of marketing that Green Man Festival utilises, to discover how successful they are, and whether they have changed and developed throughout the years. The study intended to critically review the literature surrounding festivals and festival marketing theories, in order to provide conclusions supported by theory when evaluating the effectiveness of the promotional strategies. It aimed to discover how modern or digital marketing affected Green Man’s promotional techniques, to assess any identified promotional techniques and identify any connections with marketing theory, to investigate how they promote the festival towards their target market, and to finally provide recommendations for futuristic methods of promotion. The dissertation was presented coherently, consisting of five chapters. The first chapter was the introduction, providing a basic insight into the topics involved. The second contains a critical literature review where key themes were identified; the third chapter discussed the methodology used whilst the fourth chapter presents the results that were discovered, providing an analysis and discussion. The final chapter summarises the study, giving recommendations and identifying any limitations of the study. -

Section a Concept and Management

Section A: Concept and Management. 1 Introduction to Events Management. In this chapter you will cover: the historical development of events; technical definitions of events management; size of events within the sector; an events industry; value of areas of the events industry; different types of events; local authorities’ events strategies; corporate events strategies; community festivals; charity events; summary; discussion questions; case studies; further reading. This chapter provides an historical overview of the events and festivals industry, and how it has developed over time. The core theme for this chapter is to establish a dialogue between event managers and event specialists who need to have a consistent working relationship. Each strand of the chapter will be linked to industry best practice where appropriate. In addition, this chapter discusses the different types of events that exist within the events management industry. Specifically, the chapter will analyse and discuss a range of events and their implications for the events industry, including the creation of opportunities for community orientated events and festivals. The historical development of events: Events, in the form of organised acts and performances, have their origins in ancient history. Events and festivals are well documented in the historical period before the fall of the Western Roman Empire (AD 476).

59˚ 42 Issue Four

issue four 59˚ 42 14 12 03 Editor’s Letter 22 04 Disposed Media Gaming 06 Rev Says... 07 Wishlist 08 Gamer’s Diary 09 BigLime 10 News: wii 11 Freeware 12 User Created Gaming 14 Shadow Of The Colossus 16 Monkey Island 17 Game Reviews Music 19 Festivals 21 Good/Bad: The New Emo 26 22 Mogwai 23 DoorMat 24 Music Reviews Films 26 Six Feet Under 28 Silent Hill 30 U-Turn 31 Film Reviews Comics 33 Marvel’s Ultimates Line 35 Scott Pilgrim 36 Wolverine 37 Comic Reviews Gallery 33 42 Next Issue... Publisher Tim Cheesman Editors Andrew Revell/Ian Moreno-Melgar Art Director Andrew Campbell Contributors Dan Gassis/Jason Brown/Jason Robbins/Adam Parker/Robert Florence/Keith Andrew/Jamie Thomson/Danny Badger Designers Peter Wright/Rachel Wild Cover-art Melanie Caine [© Disposable Media 2006. // All images and characters are retained by original company holding.] DM4/EDITOR’S LETTER: CHANGE IS GOOD. So we’ve changed. We’ve not been around long, but we’re doing ok. We think. But we’ve changed as we promised we would in issue 1 to reflect both what you asked for, and also what we want to do. It’s all part of a long term goal for the magazine which means there’ll be more issues more often, as well as the new look and new content. You might have noticed we’ve got a new website as well; it’ll be worth checking on a very frequent basis as we update it with DM news, and opinion. We’ve also gone and done the ‘scene’ thing and set up a MySpace page.

Big Scottish Pop Quiz Questions

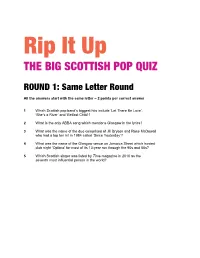

Rip It Up THE BIG SCOTTISH POP QUIZ ROUND 1: Same Letter Round All the answers start with the same letter – 2 points per correct answer 1 Which Scottish pop band’s biggest hits include ‘Let There Be Love’, ‘She’s a River’ and ‘Belfast Child’? 2 What is the only ABBA song which mentions Glasgow in the lyrics? 3 What was the name of the duo comprised of Jill Bryson and Rose McDowall who had a top ten hit in 1984 called ‘Since Yesterday’? 4 What was the name of the Glasgow venue on Jamaica Street which hosted club night ‘Optimo’ for most of its 13-year run through the 90s and 00s? 5 Which Scottish singer was listed by Time magazine in 2010 as the seventh most influential person in the world? ROUND 1: ANSWERS 1 Simple Minds 2 Super Trouper (1st line of 1st verse is “I was sick and tired of everything/ When I called you last night from Glasgow”) 3 Strawberry Switchblade 4 Sub Club (address is 22 Jamaica Street. After the fire in 1999, Optimo was temporarily based at Planet Peach and, for a while, Mas) 5 Susan Boyle (She was ranked 14 places above Barack Obama!) ROUND 6: Double or Bust 4 points per correct answer, but if you get one wrong, you get zero points for the round! 1 Which Scottish New Town was the birthplace of Indie band The Jesus and Mary Chain? a) East Kilbride b) Glenrothes c) Livingston 2 When Runrig singer Donnie Munro left the band to stand for Parliament in the late 1990s, what political party did he represent? a) Labour b) Liberal Democrat c) SNP 3 During which month of the year did T in the Park music festival usually take -

Sponsorship Packages

SPONSORSHIP PACKAGES 6 DECEMBER - TROXY, LONDON WWW.FESTIVALAWARDS.COM Annual Celebration of the UK Festival Industry THE EVENT An awards ceremony that will leave UK festival organisers feeling celebrated, indulged and inspired. A highlight of the UK Festivals Calendar, the UKFA was founded in 2002 and is now celebrating its 15th year. With over 650 festival organisers, music agents and trade suppliers in attendance, the evening brings together the UK Festival scene’s key players for a night of entertainment, networking, street food, innovative cocktails and an exclusive after party, all held at the historic Troxy in London. Recognising the festival industry’s brightest and best, previous award winners include Michael Eavis (Glastonbury Festival), Peter Gabriel (WOMAD), Download Festival, Latitude and TRNSMT. We look forward to celebrating 2018’s triumphs with this year proving to be bigger and better than ever. THE BENEFITS The Awards offer sponsors the opportunity to network with the UK’s leading festival influencers and gain extensive exposure: EXCLUSIVE NETWORKING OPPORTUNITIES With the UK festival industry’s key players in attendance, the Awards offer an unparalleled opportunity to network and engage one-on-one with decision makers. POSITION YOUR BRAND IN FRONT OF THE UK’S TOP FESTIVAL ORGANISERS Last year’s shortlisted festivals are the most influential, established and recognisable in the country. The UK’s greatest influencers will be attending the event and sponsors will have the opportunity to showcase products and services to a broad prospect base. UK FESTIVAL AWARDS 2017 SPONSORS & SUPPORTERS SPONSORSHIP OPPORTUNITIES Bespoke Sponsor Packages: If you are looking for a more unique package, contact us for details about bespoke sponsorship packages. HEADLINE PARTNER SPONSORSHIP £20,000 The Headline Partner package is designed to offer your brand