A Flexible Framework for File System Benchmarking

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

Java Implementation of the Graphical User Interface of the Octopus Distributed Operating System

Java implementation of the graphical user interface of the Octopus distributed operating system Submitted by Aram Sadogidis Advisor Prof. Spyros Lalis University of Thessaly Volos, Greece October 2012 Acknowledgements I am sincerely grateful to all the people who supported me during my University studies. Special thanks to professor Spyros Lalis, my mentor, who had a decisive influence in shaping my character as an engineer. Also many thanks to my family and friends, whose support all these years encouraged me to keep moving forward. Contents 1 Introduction 2 System software technologies 2.1 Inferno OS 2.2 JavaSE and Android framework 2.3 Synthetic file systems 2.3.1 Styx 2.3.2 Op 3 Octopus OS 3.1 UpperWare architecture 3.2 Omero, a filesystem based window system 3.3 Olive, the Omero viewer 3.4 Ox, the Octopus shell 4 Java Octopus Terminal 4.1 JOlive 4.2 Desktop version 4.2.1 Omero package 4.2.2 ui package 4.3 Android version 4.3.1 com.jolive.Omero 4.3.2 com.jolive.ui 4.3.3 Pull application's UI 5 Future perspective 5.1 GPS resources 5.2 JOp 5.3 Authentication device 5.4 Remote Voice commander 5.5 Conclusion 6 Thesis preview in Greek List of Figures 2.1 An application operates on a synthetic file as if it is a disk file, effectively communicating with a synthetic file system server. -

Spring 2015 Cybersecurity Reboot

QUARTERLY VOL. 6 NO. 4 SPRING 2015 cybersecurity:# reboot IQT Quarterly is a publication of In-Q-Tel, Inc., the strategic investment firm that serves as a bridge between the U.S. Intelligence Community and venture-backed startup firms on the leading edge of technological innovation. IQT Quarterly advances the situational awareness component of the IQT mission, serving as a platform to debut, discuss, and debate issues of innovation in the areas of overlap between commercial potential and U.S. Intelligence Community needs. For comments or questions regarding IQT or this document, please visit www.iqt.org, write to [email protected], or call 703-248-3000. The views expressed are those of the authors in their personal capacities and do not necessarily reflect the opinion of IQT, their employers, or the Government. ©2015 In-Q-Tel, Inc. This document was prepared by In-Q-Tel, Inc., with Government funding (U.S. Government Contract No. 2014-14031000011). The Government has Government Purpose License Rights in this document. Subject to those rights, the reproduction, display, or distribution of the Quarterly without prior written consent from IQT is prohibited. EDITORIAL IQT Quarterly, published by In-Q-Tel, Inc. Editor-in-Chief: Adam Dove Lead Theme Editor: Nat Puffer Contributing Theme Editors: Greg Shipley, Seth Spergel, and Justin Wilder Contributing Editors: Brittany Carambio, Carrie Sessine, and Emma Shepard Design by Lomangino Studio LLC Printed in the United States of America QUARTERLY Identify. Adapt. Deliver. TABLE OF CONTENTS On Our Radar 02 By Nat Puffer A Look Inside: Cybersecurity Reboot 05 Building Trust in Insecure Code 06 By Jeff Williams No Silent Failure: The Pursuit of Cybersecurity 10 A Q&A with Dan Geer The Return of Dragons: How the Internet of Things 14 Is Creating New, Unexplored Territories By John Matherly The Insecurity of Things 17 By Stephen A. -

Persistent 9P Sessions for Plan 9

Persistent 9P Sessions for Plan 9 Gorka Guardiola, [email protected] Russ Cox, [email protected] Eric Van Hensbergen, [email protected] ABSTRACT Traditionally, Plan 9 [5] runs mainly on local networks, where lost connections are rare. As a result, most programs, including the kernel, do not bother to plan for their file server connections to fail. These programs must be restarted when a connection does fail. If the kernel’s connection to the root file server fails, the machine must be rebooted. This approach suffices only because lost connections are rare. Across long distance networks, where connection failures are more common, it becomes woefully inadequate. To address this problem, we wrote a program called recover, which proxies a 9P session on behalf of a client and takes care of redialing the remote server and reestablishing connection state as necessary, hiding network failures from the client. This paper presents the design and implementation of recover, along with performance benchmarks on Plan 9 and on Linux. 1. Introduction Plan 9 is a distributed system developed at Bell Labs [5]. Resources in Plan 9 are presented as synthetic file systems served to clients via 9P, a simple file protocol. Unlike file protocols such as NFS, 9P is stateful: per-connection state such as which files are opened by which clients is maintained by servers. Maintaining per-connection state allows 9P to be used for resources with sophisticated access control policies, such as exclusive-use lock files and chat session multiplexers. It also makes servers easier to implement, since they can forget about file ids once a connection is lost. -

Bell Labs, the Innovation Engine of Lucent

INTERNSHIPS FOR RESEARCH ENGINEERS/COMPUTER SCIENTISTS BELL LABS DUBLIN (IRELAND) – BELL LABS STUTTGART (GERMANY) Background Bell Laboratories is a leading end-to-end systems and solutions research lab working in the areas of cloud and distributed computing, platforms for real-time big-data streaming and analytics, wireless networks, semantic data access, services-centric operations and thermal management. The lab is embedded in the great traditions of the Bell Labs systems research, internationally renowned as the birthplace of modern information theory, UNIX, the C/C++ programming languages, Awk, Plan 9 from Bell Labs, Inferno, the Spin formal verification tool, and many other systems, languages, and tools. Candidates are expected to have some experience related to the following topics: • Operating systems, virtualisation and computer architectures. • Software development skills (C/C++, Python). • Computer networks and distributed systems. • Optimisation techniques and algorithms. • Probabilistic modelling of systems performance. The following skills or interests are also desirable: • Experience with OpenStack or OpenNebula or other cloud infrastructure management software. • Kernel-level programming (drivers, scheduler,...). • Xen, KVM and Linux scripting/administration. • Parallel and distributed systems programming (MPI, OpenMP, CUDA, MapReduce, StarSs, …). We offer summer internships in our Cloud Computing team, spread across the facilities in Dublin (Ireland) and Stuttgart (Germany), focusing on research enabling future cloud -

Plan 9 from Bell Labs

Plan 9 from Bell Labs “UNIX++ Anyone?” Anant Narayanan Malaviya National Institute of Technology FOSS.IN 2007 What is it? Advanced technology transferred via mind-control from aliens in outer space Humans are not expected to understand it (Due apologies to lisperati.com) Yeah Right • More realistically, a distributed operating system • Designed by the creators of C, UNIX, AWK, UTF-8, TROFF etc. etc. • Widely acknowledged as UNIX’s true successor • Distributed under terms of the Lucent Public License, which appears on the OSI’s list of approved licenses, also considered free software by the FSF What For? “Not only is UNIX dead, it’s starting to smell really bad.” -- Rob Pike (circa 1991) • UNIX was a fantastic idea... • ...in its time - 1970’s • Designed primarily as a “time-sharing” system, before the PC era A closer look at Unix TODAY It Works! But that doesn’t mean we don’t develop superior alternates GNU/Linux • GNU’s not UNIX, but it is! • Linux was inspired by Minix, which was in turn inspired by UNIX • GNU/Linux (mostly) conforms to ANSI and POSIX requirements • GNU/Linux, on the desktop, is playing “catch-up” with Windows or Mac OS X, offering little in terms of technological innovation Ok, and... • Most of the “modern ideas” we use today were “bolted” on an ancient underlying system • Don’t believe me? A “modern” UNIX Terminal Where did it go wrong? • Early UNIX, “everything is a file” • Brilliant! • Only until people started adding “features” to the system... Why you shouldn’t be working with GNU/Linux • The Socket API • POSIX • X11 • The Bindings “rat-race” • 300 system calls and counting.. -

Security of OS-Level Virtualization Technologies: Technical Report

Security of OS-level virtualization technologies Elena Reshetova1, Janne Karhunen2, Thomas Nyman3, N. Asokan4 1 Intel OTC, Finland 2 Ericsson, Finland 3 University of Helsinki, Finland 4 Aalto University and University of Helsinki, Finland Abstract. The need for flexible, low-overhead virtualization is evident on many fronts ranging from high-density cloud servers to mobile devices. During the past decade OS-level virtualization has emerged as a new, efficient approach for virtualization, with implementations in multiple different Unix-based systems. Despite its popularity, there has been no systematic study of OS-level virtualization from the point of view of security. In this report, we conduct a comparative study of several OS-level virtualization systems, discuss their security and identify some gaps in current solutions. 1 Introduction During the past couple of decades the use of different virtualization technologies has been on a steady rise. Since IBM CP-40 [19], the first virtual machine prototype in 1966, many different types of virtualization and their uses have been actively explored both by the research community and by the industry. A relatively recent approach, which is becoming increasingly popular due to its light-weight nature, is Operating System-Level Virtualization, where a number of distinct user space instances, often referred to as containers, are run on top of a shared operating system kernel. A fundamental difference between OS-level virtualization and more established competitors, such as Xen hypervisor [24], VMWare [48] and Linux Kernel Virtual Machine [29] (KVM), is that in OS-level virtualization, the virtualized artifacts are global kernel resources, as opposed to hardware. -

Nginx Essentials.Pdf

www.it-ebooks.info Nginx Essentials Excel in Nginx quickly by learning to use its most essential features in real-life applications Valery Kholodkov BIRMINGHAM - MUMBAI www.it-ebooks.info Nginx Essentials Copyright © 2015 Packt Publishing All rights reserved. No part of this book may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, without the prior written permission of the publisher, except in the case of brief quotations embedded in critical articles or reviews. Every effort has been made in the preparation of this book to ensure the accuracy of the information presented. However, the information contained in this book is sold without warranty, either express or implied. Neither the author nor Packt Publishing, and its dealers and distributors will be held liable for any damages caused or alleged to be caused directly or indirectly by this book. Packt Publishing has endeavored to provide trademark information about all of the companies and products mentioned in this book by the appropriate use of capitals. However, Packt Publishing cannot guarantee the accuracy of this information. First published: July 2015 Production reference: 1170715 Published by Packt Publishing Ltd. Livery Place 35 Livery Street Birmingham B3 2PB, UK. ISBN 978-1-78528-953-8 www.packtpub.com www.it-ebooks.info Credits Author Project Coordinator Valery Kholodkov Vijay Kushlani Reviewers Proofreader Markus Jelsma Safis Editing Jesse Estill Lawson Daniel Parraz Indexer Priya Sane Commissioning Editor Dipika Gaonkar Production Coordinator Shantanu N. Zagade Acquisition Editor Usha Iyer Cover Work Shantanu N. Zagade Content Development Editor Nikhil Potdukhe Technical Editor Manali Gonsalves Copy Editor Roshni Banerjee www.it-ebooks.info About the Author Valery Kholodkov is a seasoned IT professional with a decade of experience in creating, building, scaling, and maintaining industrial-grade web services, web applications, and mobile application backends. -

Admin Tools for Joomla 4 Nicholas K

Admin Tools for Joomla 4 Nicholas K. Dionysopoulos Copyright © 2010-2021 Akeeba Ltd Abstract This book covers the use of the Admin Tools site security component, module and plugin bundle for sites powered by Joomla!™ 4. Both the free Admin Tools Core and the subscription-based Admin Tools Professional editions are completely covered. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.3 or any later version published by the Free Software Foundation; with no Invariant Sections, no Front-Cover Texts, and no Back-Cover Texts. A copy of the license is included in the appendix entitled "The GNU Free Documentation License". Table of Contents 1. Getting Started 1. What is Admin Tools? 1.1. Disclaimer 1.2. The philosophy 2. Server environment requirements 3. Installing Admin Tools -

Pragmaticperl-Interviews-A4.Pdf

Pragmatic Perl Interviews pragmaticperl.com 2013—2015 Editor and interviewer: Viacheslav Tykhanovskyi Covers: Marko Ivanyk Revision: 2018-03-02 11:22 © Pragmatic Perl Contents 1 Preface 2 Alexis Sukrieh (April 2013) 3 Sawyer X (May 2013) 4 Stevan Little (September 2013) 5 chromatic (October 2013) 6 Marc Lehmann (November 2013) 7 Tokuhiro Matsuno (January 2014) 8 Randal Schwartz (February 2014) 9 Christian Walde (May 2014) 10 Florian Ragwitz (rafl) (June 2014) 11 Curtis “Ovid” Poe (September 2014) 12 Leon Timmermans (October 2014) 13 Olaf Alders (December 2014) 14 Ricardo Signes (January 2015) 15 Neil Bowers (February 2015) 16 Renée Bäcker (June 2015) 17 David Golden (July 2015) 18 Philippe Bruhat (Book) (August 2015) 19 Author Preface Hello there! You have downloaded a compilation of interviews done with Perl programmers in Pragmatic Perl journal from 2013 to 2015. Since the journal itself is in Russian -

Article 15 Phoenix Telecommunications

The Cover The upper cover image is an artist’s concept of the Phoenix Lander with legs deployed and thrusters on just before landing on the surface of Mars. This rendition was created by Corby Waste of JPL in 2003. As the Mars program artist, he has created artwork for several Mars missions [1]. During lander surface operations, the Phoenix project generated many exotic images from Mars. One of these, the lower cover image [2], is a vertical projection that combines hundreds of exposures taken by the lander’s Surface Stereo Imager camera and projects them as if looking down from above. The black circle is where the camera itself is mounted on the lander, out of view in images taken by the camera. North is toward the top of the image. This view comprises more than 100 different Surface Stereo Imager pointings, with images taken through three different filters at each pointing. The images were taken in the period from the 13th Martian day, or sol, after landing to the 47th sol (June 5 through July 12, 2008). The lander's Robotic Arm appears cut off in this mosaic view because component images were taken when the arm was out of the frame. DESCANSO Design and Performance Summary Series Article 15 Phoenix Telecommunications Jim Taylor Stan Butman Chad Edwards Peter Ilott Richard Kornfeld Dennis Lee Scott Shaffer Gina Signori Jet Propulsion Laboratory California Institute of Technology Pasadena, California National Aeronautics and Space Administration Jet Propulsion Laboratory California Institute of Technology Pasadena, California August 2010 This research was carried out at the Jet Propulsion Laboratory, California Institute of Technology, under a contract with the National Aeronautics and Space Administration. -

Modern Perl, Fourth Edition

Prepared exclusively for none ofyourbusiness Early Praise for Modern Perl, Fourth Edition A dozen years ago I was sure I knew what Perl looked like: unreadable and obscure. chromatic showed me beautiful, structured expressive code then. He’s the right guy to teach Modern Perl. He was writing it before it existed. ➤ Daniel Steinberg President, DimSumThinking, Inc. A tour de force of idiomatic code, Modern Perl teaches you not just “how” but also “why.” ➤ David Farrell Editor, PerlTricks.com If I had to pick a single book to teach Perl 5, this is the one I’d choose. As I read it, I was reminded of the first time I read K&R. It will teach everything that one needs to know to write Perl 5 well. ➤ David Golden Member, Perl 5 Porters, Autopragmatic, LLC I’m about to teach a new hire Perl using the first edition of Modern Perl. I’d much rather use the updated copy! ➤ Belden Lyman Principal Software Engineer, MediaMath It’s not the Perl book you deserve. It’s the Perl book you need. ➤ Gizmo Mathboy Co-founder, Greater Lafayette Open Source Symposium (GLOSSY) We've left this page blank to make the page numbers the same in the electronic and paper books. We tried just leaving it out, but then people wrote us to ask about the missing pages. Anyway, Eddy the Gerbil wanted to say “hello.” Modern Perl, Fourth Edition chromatic The Pragmatic Bookshelf Dallas, Texas • Raleigh, North Carolina Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. -

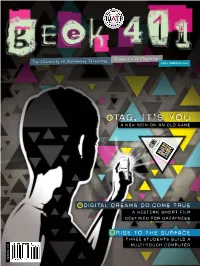

TAG, It's You! a NEW SPIN on an OLD GAME

Student Life Magazine The University of Advancing Technology Issue 5 SUMMER/FALL 2009 03 TAG, IT’S YOU A New Spin on an Old Game SNAP IT 50 DIGITAL DREAMS DO COME TRUE a Western Short FILM Destined for Greatness 24 Rise to The Surface Three Students Build a Multi-Touch Computer $6.95 SUMMER/FALL T.O.C. • • • LOOK FOR THESE MICROSOFT TAGS 04 TAG, IT'S YOU! A NEW SPIN ON AN OLD GAME TABLE OF CONTENTS GEEK 411 ISSUE 5 SUMMER/FALL 2009 ABOUT UAT 10 WE’RE TAKING OVER THE WORLD. JOIN US. 32 GET GEEKALICIOUS: T-SHIRT SALE 41 THE BRICKS (OUR AWESOME FACULTY) 49 THE MORTAR (OUR AWESOME STAFF) INSIDE THE TECH WORLD 6 BIG BRAIN EVENTS 26 DEADLY TALENTED ALUMNI 35 WHAT'S YOUR GEEK IQ? 36 GO PLAY WITH YOUR DOTS 38 WHAT’S HOT, WHAT’S NOT 42 DAYS OF FUTURE PAST 45 GADGETS & GIZMOS FEATURE STORIES 24 RISE TO THE SURFACE THREE STUDENTS BUILD A MULTI-TOUCH COMPUTER 22 GEEKILY EVER AFTER HOW TWO UAT STUDENTS FELL IN LOVE AT FIRST SHOT 50 COWBOY DREAMS UAT PROFESSOR DIRECTS FILM GEEK ESSENTIALS 12 GEEKS ON TOUR 18 DAY IN THE LIFE OF A DORM GEEK 30 LET THE TECH GAMES BEGIN 40 YOU KNOW YOU WANT THIS 46 HOW WE GOT SO AWESOME 47 WE GOT WHAT YOU NEED 54 GEEKS UNITE – CLUBS AND GROUPS STORIES ABOUT REALLY SMART PEOPLE 8 INVASION OF THE STAY PUFT BUNNY 29 RAY KURZWEIL 34 GEEK BLOGS 20 DAVID WESSMAN IS THE MAN 16 LIVING THE GEEK DREAM 33 INTRODUCING… NEW GEEKS 14 WE DO STUFF THAT MATTERS 2 | GEEK 411 | UAT STUDENT LIFE MAGAZINE 09UT A 151 © CONTENTS COPYRIGHT BY FABCOM 20092008 SNAP IT LOOK FOR THESE MICROSOFT TAGS THROUGHOUT THIS ISSUE OF GEEK 411 AND TAG THEM TO GET MORE OF THE STORY OR BONUS CONTENT.