Recommended publications

American Economic Journal: Applied Economics 2018, 10(2): 234–256 https://doi.org/10.1257/app.20150365 Education and Mortality: Evidence from a Social Experiment. By Costas Meghir, Mårten Palme, and Emilia Simeonova. We examine the effects on mortality and health due to a major Swedish educational reform that increased the years of compulsory schooling. Using the gradual phase-in of the reform between 1949 and 1962 across municipalities, we estimate insignificant effects of the reform on mortality in the affected cohort. From the confidence intervals, we can rule out effects larger than 1–1.4 months of increased life expectancy. We find no significant impacts on mortality for individuals of low socioeconomic status backgrounds, on deaths that are more likely to be affected by behavior, on hospitalizations, and consumption of prescribed drugs. (JEL H52, I12, I21, I28) The strong correlation between socioeconomic status (SES) and health is one of the most recognized and studied in the social sciences. Economists have pointed at differences in resources, preferences, and knowledge associated with different SES groups as possible explanations (see, e.g., Grossman 2006 for an overview). However, a causal link between any of these factors and later life health is hard to demonstrate, and the relative importance of different contributing factors is far from clear. A series of studies (e.g., Lleras-Muney 2005, Oreopoulos 2006, Clark and Royer 2013, Lager and Torssander 2012, and summaries in Mazumder 2008 and 2012) use regional differences in compulsory schooling laws or changes in national legislations on compulsory schooling as a source of exogenous variation in educational attainment in order to identify a causal effect of education on health.

Is the Greek Crisis One of Supply Or Demand?

YANNIS M. IOANNIDES, Tufts University; CHRISTOPHER A. PISSARIDES, London School of Economics. Is the Greek Crisis One of Supply or Demand? ABSTRACT Greece’s “supply” problems have been present since its accession to the European Union in 1981; the “demand” problems caused by austerity and wage cuts have compounded the structural problems. This paper discusses the severity of the demand contraction, examines product market reforms, many of which have not been implemented, and their potential impact on competitiveness and the economy, and labor market reforms, many of which have been implemented but due to their timing have contributed to the collapse of demand. The paper argues in favor of eurozone-wide policies that would help Greece recover and of linking reforms with debt relief. Greece joined the European Union (EU) in 1981 largely on political grounds to protect democracy after the malfunctioning political regimes that followed the civil war in 1949 and the disastrous military dictatorship of the years 1967–74. Not much attention was paid to the economy and its ability to withstand competition from economically more advanced European nations. A similar blind eye was turned to the economy when the country applied for membership in the euro area in 1999, becoming a full member in 2001. It is now blatantly obvious that the country was not in a position to compete and prosper in the European Union’s single market or in the euro area. A myriad of restrictions on free trade had been introduced piecemeal after 1949, with the pretext of protecting those who fought for democracy.

Economics 881.25 Human Capital and Economic Development

Economics 881.25 Human Capital and Economic Development. Class Meetings. Class times: Fridays 15:05 - 17:35. Classes begin: 9 January 2015. Classes end: 20 February 2015. Class location: Social Sciences 111. Instructor. Instructor: Robert Garlick. Email: [email protected]. Office: Social Sciences 204. Office hours: Wednesdays 13:30 - 15:30 for open office hours; http://robertgarlick.youcanbook.me for one-on-one meetings about research. 1 Course Overview. This is a graduate, seminar-style course studying the intersection between development economics and the economics of human capital. The course is aimed primarily at doctoral students in economics who are interested in conducting research in development economics. Much of the material will also be relevant for graduate students interested in applied microeconomic research on human capital topics. The course will focus on two dimensions of human capital: education and health. Issues around nutrition and fertility will be discussed briefly but will not be a major focus. We will focus on economic topics relevant to low- and middle-income countries and will primarily read papers using data from these countries. We will also read some papers using data from developed countries, focusing on their theoretical or methodological contributions. We will only briefly discuss some macroeconomic aspects of the relationship between human capital and economic development. We will not study the relationship between human capital and the labor market, as this will be discussed in detail in Economics 881.26. The course has three goals: 1. Prepare students to conduct applied microeconomic research at the intersection between development economics and the economics of human capital 2.

Gender and Child Health Investments in India Emily Oster NBER Working Paper No

NBER WORKING PAPER SERIES DOES INCREASED ACCESS INCREASE EQUALITY? GENDER AND CHILD HEALTH INVESTMENTS IN INDIA Emily Oster Working Paper 12743 http://www.nber.org/papers/w12743 NATIONAL BUREAU OF ECONOMIC RESEARCH 1050 Massachusetts Avenue Cambridge, MA 02138 December 2006 Gary Becker, Kerwin Charles, Steve Cicala, Amy Finkelstein, Andrew Francis, Jon Guryan, Matthew Gentzkow, Lawrence Katz, Michael Kremer, Steven Levitt, Kevin Murphy, Jesse Shapiro, Andrei Shleifer, Rebecca Thornton, and participants in seminars at Harvard University, the University of Chicago, and NBER provided helpful comments. I am grateful for funding from the Belfer Center, Kennedy School of Government. Laura Cervantes provided outstanding research assistance. The views expressed herein are those of the author(s) and do not necessarily reflect the views of the National Bureau of Economic Research. © 2006 by Emily Oster. All rights reserved. Short sections of text, not to exceed two paragraphs, may be quoted without explicit permission provided that full credit, including © notice, is given to the source. Does Increased Access Increase Equality? Gender and Child Health Investments in India Emily Oster NBER Working Paper No. 12743 December 2006 JEL No. I18,J13,J16,O12 ABSTRACT Policymakers often argue that increasing access to health care is one crucial avenue for decreasing gender inequality in the developing world. Although this is generally true in the cross section, time series evidence does not always point to the same conclusion. This paper analyzes the relationship between access to child health investments and gender inequality in those health investments in India. A simple theory of gender-biased parental investment suggests that gender inequality may actually be non-monotonically related to access to health investments.

Allied Social Science Associations Atlanta, GA January 3–5, 2010

Allied Social Science Associations Atlanta, GA January 3–5, 2010 Contract negotiations, management and meeting arrangements for ASSA meetings are conducted by the American Economic Association. Thanks to the 2010 American Economic Association Program Committee Members: Robert Hall (Chair), Pol Antras, Ravi Bansal, Christian Broda, Charles Calomiris, David Card, Raj Chetty, Jonathan Eaton, Jonathan Gruber, Eric Hanushek, Samuel Kortum, Marc Melitz, Dale Mortensen, Aviv Nevo, Valerie Ramey, Dani Rodrik, David Scharfstein, Suzanne Scotchmer, Fiona Scott-Morton, Christopher Udry, Kenneth West. Cover Art is by Tracey Ashenfelter, daughter of Orley Ashenfelter, Princeton University, former editor of the American Economic Review and President-elect of the AEA for 2010. Contents: General Information; Hotels and Meeting Rooms; Listing of Advertisers and Exhibitors; Allied Social Science Associations; Summary of Sessions by Organization; Daily Program of Events; Program of Sessions (Saturday, January 2; Sunday, January 3; Monday, January 4; Tuesday, January 5); Subject Area Index; Index of Participants. General Information. PROGRAM SCHEDULES: A listing of sessions where papers will be presented and another covering activities such as business meetings and receptions are provided in this program. Admittance is limited to those wearing badges. Each listing is arranged chronologically by date and time of the activity; the hotel and room location for each session and function are indicated. CONVENTION FACILITIES: Eighteen hotels are being used for all housing.

Parent Engagement Ca...Oddlers' Skills Development

YaleNews. Not just for play: parent engagement can boost toddlers’ skills development. By Lisa Qian, February 14, 2020 (https://news.yale.edu/2020/02/14/not-just-play-parent-engagement-can-boost-toddlers-skills-development). A study involving low-income families in central Colombia found that toddlers whose parents engaged them in play with books and toys made of discarded household materials showed significant improvements in their cognitive and socio-emotional skills. Encouraging low-income families to stimulate their toddlers with play and involve them in household activities can improve the children's cognitive and socio-emotional skills development, Yale researchers found in a new study of an early-childhood intervention designed for families in central Colombia. The study, published last month in the American Economic Review (https://www.aeaweb.org/articles?id=10.1257/aer.20150183&within%5Btitle%5D=on&within%5Babstract%5D=on&within%5Bauthor%5D=on&journal=1&q=parental+&from=j), is one of the first to develop a model for understanding how early childhood interventions among the poor change parental behavior and thus affect the cognitive development of their children. “What the results emphasize is that shifting parental behavior towards more engagement with the child is very important for children in low-income environments,” said Yale economist Costas Meghir (https://economics.yale.edu/people/faculty/costas-meghir), a co-author of the paper. He noted that the fundamental aim of the research is to identify ways to prevent poverty from being passed down from one generation to the next by ensuring children develop to their full potential.

Econ792 Reading 2020.Pdf

Economics 792: Labour Economics. Provisional Outline, Spring 2020. This course will cover a number of topics in labour economics. Guidance on readings will be given in the lectures. There will be a number of problem sets throughout the course (where group work is encouraged), presentations, referee reports, and a research paper/proposal. These, together with class participation, will determine your final grade. The research paper will be optional. Students not submitting a paper will receive a lower grade. Small group work may be permitted on the research paper with the instructor's approval, although all students must make substantive contributions to any paper. Labour Supply Facts: Richard Blundell, Antoine Bozio, and Guy Laroque. Labor supply and the extensive margin. American Economic Review, 101(3):482–86, May 2011. Richard Blundell, Antoine Bozio, and Guy Laroque. Extensive and Intensive Margins of Labour Supply: Work and Working Hours in the US, the UK and France. Fiscal Studies, 34(1):1–29, 2013. Static Labour Supply: Sören Blomquist and Whitney Newey. Nonparametric estimation with nonlinear budget sets. Econometrica, 70(6):2455–2480, 2002. Richard Blundell and Thomas MaCurdy. Labor supply: A Review of Alternative Approaches. In Orley C. Ashenfelter and David Card, editors, Handbook of Labor Economics, volume 3, Part 1 of Handbook of Labor Economics, pages 1559–1695. Elsevier, 1999. Pierre A. Cahuc and André A. Zylberberg. Labor Economics. MIT Press, 2004. John F. Cogan. Fixed Costs and Labor Supply. Econometrica, 49(4):945–963, 1981. Jerry A. Hausman. The Econometrics of Nonlinear Budget Sets. Econometrica, 53(6):1255–1282, 1985.

Do Laboratory Experiments Measuring Social Preferences Reveal About the Real World? Author(S): Steven D

American Economic Association. What Do Laboratory Experiments Measuring Social Preferences Reveal about the Real World? Author(s): Steven D. Levitt and John A. List. Source: The Journal of Economic Perspectives, Vol. 21, No. 2 (Spring, 2007), pp. 153-174. Published by: American Economic Association. Stable URL: http://www.jstor.org/stable/30033722 Economists have increasingly turned to the experimental model of the physical sciences as a method to understand human behavior. Peer-reviewed articles using the methodology of experimental economics were almost nonexistent until the mid-1960s and surpassed 50 annually for the first time in 1982; and by 1998, the number of experimental papers published per year exceeded 200 (Holt, 2006). Lab experiments allow the investigator to influence the set of prices, budget sets, information sets, and actions available to actors, and thus measure the impact of these factors on behavior within the context of the laboratory.

The Use of Structural Models in Econometrics

Journal of Economic Perspectives, Volume 31, Number 2, Spring 2017, Pages 33–58. The Use of Structural Models in Econometrics. Hamish Low and Costas Meghir. The aim of this paper is to discuss the role of structural economic models in empirical analysis and policy design. This approach offers some valuable payoffs, but also imposes some costs. Structural economic models focus on distinguishing clearly between the objective function of the economic agents and their opportunity sets as defined by the economic environment. The key features of such an approach at its best are a tight connection with a theoretical framework alongside a clear link with the data that will allow one to understand how the model is identified. The set of assumptions under which the model inferences are valid should be clear: indeed, the clarity of the assumptions is what gives value to structural models. The central payoff of a structural econometric model is that it allows an empirical researcher to go beyond the conclusions of a more conventional empirical study that provides reduced-form causal relationships. Structural models define how outcomes relate to preferences and to relevant factors in the economic environment, identifying mechanisms that determine outcomes. Beyond this, they ■ Hamish Low is Professor of Economics, Cambridge University, Cambridge, United Kingdom, and Cambridge INET and Research Fellow, Institute for Fiscal Studies, London, United Kingdom. Costas Meghir is the Douglas A. Warner III Professor of Economics, Yale University, New Haven, Connecticut; International Research Associate, Institute for Fiscal Studies, London, United Kingdom; Research Fellow, Institute of Labor Economics (IZA), Bonn, Germany; and Research Associate, National Bureau of Economic Research, Cambridge, Massachusetts.

Syllabus for Lectures by Orazio Attanasio Part I the Production Function of Human Capital in Early Years

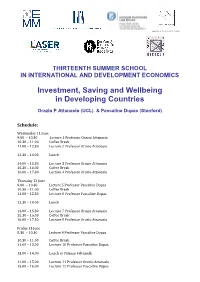

UNIVERSITA’ DEGLI STUDI DI TORINO. THIRTEENTH SUMMER SCHOOL IN INTERNATIONAL AND DEVELOPMENT ECONOMICS. Investment, Saving and Wellbeing in Developing Countries. Orazio P Attanasio (UCL) & Pascaline Dupas (Stanford)

Schedule:

Wednesday 11 June: 9.00 – 10.30 Lecture 1, Professor Orazio Attanasio; 10.30 – 11.00 Coffee Break; 11.00 – 12.30 Lecture 2, Professor Orazio Attanasio; 12.30 – 14.00 Lunch; 14.00 – 15.30 Lecture 3, Professor Orazio Attanasio; 15.30 – 16.00 Coffee Break; 16.00 – 17.30 Lecture 4, Professor Orazio Attanasio.

Thursday 12 June: 9.00 – 10.30 Lecture 5, Professor Pascaline Dupas; 10.30 – 11.00 Coffee Break; 11.00 – 12.30 Lecture 6, Professor Pascaline Dupas; 12.30 – 14.00 Lunch; 14.00 – 15.30 Lecture 7, Professor Orazio Attanasio; 15.30 – 16.00 Coffee Break; 16.00 – 17.30 Lecture 8, Professor Orazio Attanasio.

Friday 13 June: 8.30 – 10.30 Lecture 9, Professor Pascaline Dupas; 10.30 – 11.00 Coffee Break; 11.00 – 13.00 Lecture 10, Professor Pascaline Dupas; 13.00 – 14.00 Lunch at Palazzo Feltrinelli; 14.00 – 15.00 Lecture 11, Professor Orazio Attanasio; 15.00 – 16.00 Lecture 12, Professor Pascaline Dupas.

Draft Syllabus for lectures by Orazio Attanasio. Part I: The production function of human capital in early years.

Lecture 1, June 11 9:00-10:30 (90 minutes). 1.1 Evidence on human capital accumulation. 1.1.1 The Socio Economic Gap in HK in early years in developing countries. 1.1.2 Interventions: community nurseries. 1.1.3 Interventions: stimulations. 1.1.3.1 The Jamaica Study. 1.1.3.2 The Colombia Study.

Lecture 2, June 11 11:00-12:30 (90 minutes). 1.2 Models of accumulation of human capital: 1.2.1 The production function of human capital. 1.2.2 Investment in human capital. 1.2.3 Constraints to the accumulation of human capital.

Three Essays in the Economics of Education Oswald Koussihouede

Three Essays in the Economics of Education. Oswald Koussihouede. To cite this version: Oswald Koussihouede. Three Essays in the Economics of Education. Economics and Finance. Université Gaston Berger, 2015. English. tel-01150504v2. HAL Id: tel-01150504 https://hal.archives-ouvertes.fr/tel-01150504v2 Submitted on 13 May 2015. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Université Gaston Berger, Ecole Doctorale des Sciences de l’Homme et de la Société, Unité de Formation et de Recherche en Sciences Economiques et de Gestion. Three Essays in the Economics of Education. Thesis presented and publicly defended on 13 March 2015 for the degree of Doctor of Economics, by Oswald Koussihouèdé. Jury: Mouhamadou Fall, President, Université Gaston Berger, Senegal; Tanguy Bernard, Referee, Université de Bordeaux, France; Christian Monseur, Referee, Université de Liège, Belgium; Costas Meghir, Supervisor, Yale University, United States; Adama Diaw, co-Supervisor, Université Gaston Berger, Senegal. Université Gaston Berger neither approves nor disapproves of the opinions expressed in this thesis. These opinions should be regarded as the author's own. To Rachel-Sylviane, Emmanuelle and Théana. Acknowledgments: I would like to acknowledge everyone who made this project possible.

The Role of Cliometrics in History and Economics

Working Papers. “The Role of Cliometrics in History and Economics.” Authors: Claude Diebolt, Michael Haupert. Working Paper No. 2021-26, June 2021. Bureau d’Économie Théorique et Appliquée (BETA) www.beta-umr7522.fr @beta_economics Contact: [email protected] The Role of Cliometrics in History and Economics. Claude Diebolt, CNRS, University of Strasbourg, and Michael Haupert, University of Wisconsin-La Crosse. Prepared for Bloomsbury History: Theory and Method. Draft: June 10, 2021. Summary: How did cliometrics in particular, and economic history in general, arrive at this crossroads, where it is at once considered to be a dying discipline and one that is spreading through the economics discipline as a whole? To understand the current status and future prospects of economic history, it is necessary to understand its past. Keywords: Cliometrics, economic history, Robert Fogel, Douglass North, economic growth, econometrics, interdisciplinary economic history, new economic history, multidisciplinary, methodology, quantitative. JEL codes: A12, N00, N01. Introduction: In 2019 Diebolt and Haupert (2019a), in a response to the question of whether economic history had been assimilated by the economics discipline, argued that rather than assimilation, economic history resembled a ninja, and had infiltrated the discipline. That view of the current status of economic history is not universally shared. Abramitzky (2015, p. 1242) bemoaned the fact that the typical economist only cares about the past “to the extent that it sheds light on the present.” More recently, Stefano Fenoaltea (2018) mourned what he saw as the loss of respect for the field of cliometrics. Abramitzky and Fenoaltea represent contemporary scholars who identified dark shadows encroaching upon economic historians.