Handout on Higher-Order Approximation


Recommended publications


An Introduction to Mathematical Modelling

An Introduction to Mathematical Modelling. Glenn Marion, Bioinformatics and Statistics Scotland. Given 2008 by Daniel Lawson and Glenn Marion.

Contents

1 Introduction
1.1 What is mathematical modelling?
1.2 What objectives can modelling achieve?
1.3 Classifications of models
1.4 Stages of modelling

2 Building models
2.1 Getting started
2.2 Systems analysis
2.2.1 Making assumptions
2.2.2 Flow diagrams
2.3 Choosing mathematical equations
2.3.1 Equations from the literature
2.3.2 Analogies from physics
2.3.3 Data exploration
2.4 Solving equations
2.4.1 Analytically
2.4.2 Numerically

3 Studying models
3.1 Dimensionless form
3.2 Asymptotic behaviour
3.3 Sensitivity analysis
3.4 Modelling model output

4 Testing models
4.1 Testing the assumptions
4.2 Model structure
4.3 Prediction of previously unused data
4.3.1 Reasons for prediction errors
4.4 Estimating model parameters
4.5 Comparing two models for the same system


Example 10.8 Testing the Steady-State Approximation

Example 10.8 Testing the steady-state approximation. The steady-state approximation contains an apparent contradiction: we set the time derivative of the concentration of some species (a reaction intermediate) equal to zero, implying that it is a constant, and then derive a formula showing how it changes with time. Actually, there is no contradiction, since all that is required is that the rate of change of the "steady" species be small compared to the rate of reaction (as measured by the rate of disappearance of the reactant or appearance of the product). But exactly when (in a practical sense) is this approximation appropriate? It is often applied as a matter of convenience and justified ex post facto: if the resulting rate law fits the data, then the approximation is considered justified. But as this example demonstrates, such reasoning is dangerous and possibly erroneous. We examine the mechanism

$$\mathrm{A} + \mathrm{B} \xrightarrow{k_1} \mathrm{C}, \qquad \mathrm{C} \xrightarrow{k_2} \mathrm{A} + \mathrm{B}, \qquad \mathrm{C} \xrightarrow{k_3} \mathrm{P} \tag{10.12}$$

(Note that the second reaction is the reverse of the first, so we have a reversible second-order reaction followed by an irreversible first-order reaction.) The rate constants are $k_1$ for the forward reaction of the first step, $k_2$ for the reverse of the first step, and $k_3$ for the second step. This mechanism is readily solved with the steady-state approximation to give

$$\frac{d[\mathrm{A}]}{dt} = -k_e[\mathrm{A}][\mathrm{B}] \quad \text{with} \quad k_e = \frac{k_1 k_3}{k_2 + k_3} \tag{10.13}$$

(TEXT Eq. (10.38)). With initial concentrations of A and B equal, hence $[\mathrm{A}] = [\mathrm{B}]$ for all times, this equation integrates to

$$\frac{1}{[\mathrm{A}]} = \frac{1}{A_0} + k_e t \tag{10.14}$$

where $A_0$ is the initial concentration (equal to 1 in work to follow).
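One way to probe when the steady-state result is trustworthy is to integrate the full mechanism numerically and compare it with Eq. (10.14). The sketch below is only illustrative: the rate constants, the step size, and the function name `compare_steady_state` are assumptions, not values from the source. It uses a classical fourth-order Runge-Kutta step with $[\mathrm{A}] = [\mathrm{B}]$ and $A_0 = 1$. Both parameter sets give the same $k_e = 0.5$, so the steady-state curve is identical in the two runs and only the full solution changes.

```python
# Hypothetical rate constants and step size; none of these values come from the source.

def compare_steady_state(k1, k2, k3, a0=1.0, t_end=5.0, dt=1e-3):
    """Integrate A + B <-> C -> P (with [A] = [B]) by classical RK4.

    Returns (A_full, A_steady_state, max_gap), where the first two are the
    values at t_end and max_gap is the largest |A_full - A_steady_state|
    seen along the trajectory.
    """
    ke = k1 * k3 / (k2 + k3)  # effective rate constant, Eq. (10.13)

    def rhs(a, c):
        # d[A]/dt and d[C]/dt for the full mechanism, no approximation
        return -k1 * a * a + k2 * c, k1 * a * a - (k2 + k3) * c

    a, c, max_gap = a0, 0.0, 0.0
    a_ss = a0
    n_steps = int(round(t_end / dt))
    for i in range(1, n_steps + 1):
        da1, dc1 = rhs(a, c)
        da2, dc2 = rhs(a + 0.5 * dt * da1, c + 0.5 * dt * dc1)
        da3, dc3 = rhs(a + 0.5 * dt * da2, c + 0.5 * dt * dc2)
        da4, dc4 = rhs(a + dt * da3, c + dt * dc3)
        a += dt * (da1 + 2 * da2 + 2 * da3 + da4) / 6.0
        c += dt * (dc1 + 2 * dc2 + 2 * dc3 + dc4) / 6.0
        a_ss = 1.0 / (1.0 / a0 + ke * i * dt)  # steady-state prediction, Eq. (10.14)
        max_gap = max(max_gap, abs(a - a_ss))
    return a, a_ss, max_gap


# Intermediate consumed quickly (k2 + k3 much larger than k1 * A0)
fast = compare_steady_state(1.0, 50.0, 50.0)
# Intermediate consumed slowly (k2 + k3 smaller than k1 * A0)
slow = compare_steady_state(1.0, 0.1, 0.1)

print("fast intermediate: max gap =", fast[2])
print("slow intermediate: max gap =", slow[2])
```

Under these assumed values the gap should stay small when the intermediate is removed quickly and become clearly visible when it is not, even though both cases share the same effective rate constant.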

Differentiation Rules (Differential Calculus)

Differentiation Rules (Differential Calculus)

1. Notation. The derivative of a function $f$ with respect to one independent variable (usually $x$ or $t$) is a function that will be denoted by $Df$. Note that $f(x)$ and $(Df)(x)$ are the values of these functions at $x$.

2. Alternate Notations for $(Df)(x)$. For functions $f$ in one variable, $x$, alternate notations are: $D_x f(x)$, $\frac{d}{dx} f(x)$, $\frac{d f(x)}{dx}$, $\frac{df}{dx}(x)$, $f'(x)$, $f^{(1)}(x)$. The "$(x)$" part might be dropped, although technically this changes the meaning: $f$ is the name of a function, whereas $f(x)$ is the value of it at $x$. If $y = f(x)$, then $D_x y$, $\frac{dy}{dx}$, $y'$, etc. can be used. If the variable $t$ represents time, then $D_t f$ can be written $\dot{f}$. The differential, "$df$", and the change in $f$, "$\Delta f$", are related to the derivative but have special meanings and are never used to indicate ordinary differentiation.

Historical note: Newton used $\dot{y}$, while Leibniz used $\frac{dy}{dx}$. About a century later Lagrange introduced $y'$ and Arbogast introduced the operator notation $D$.

3. Domains. The domain of $Df$ is always a subset of the domain of $f$. The conventional domain of $f$, if $f(x)$ is given by an algebraic expression, is all values of $x$ for which the expression is defined and results in a real number. If $f$ has the conventional domain, then $Df$ usually, but not always, has the conventional domain. Exceptions are noted below.

PLEASANTON UNIFIED SCHOOL DISTRICT Math 8/Algebra I Course Outline Form Course Title

PLEASANTON UNIFIED SCHOOL DISTRICT Math 8/Algebra I Course Outline Form Course Title: Math 8/Algebra I Course Number/CBED Number: Grade Level: 8 Length of Course: 1 year Credit: 10 Meets Graduation Requirements: n/a Required for Graduation: Prerequisite: Math 6/7 or Math 7 Course Description: Main concepts from the CCSS 8th grade content standards include: knowing that there are numbers that are not rational, and approximate them by rational numbers; working with radicals and integer exponents; understanding the connections between proportional relationships, lines, and linear equations; analyzing and solving linear equations and pairs of simultaneous linear equations; defining, evaluating, and comparing functions; using functions to model relationships between quantities; understanding congruence and similarity using physical models, transparencies, or geometry software; understanding and applying the Pythagorean Theorem; investigating patterns of association in bivariate data. Algebra (CCSS Algebra) main concepts include: reason quantitatively and use units to solve problems; create equations that describe numbers or relationships; understanding solving equations as a process of reasoning and explain reasoning; solve equations and inequalities in one variable; solve systems of equations; represent and solve equations and inequalities graphically; extend the properties of exponents to rational exponents; use properties of rational and irrational numbers; analyze and solve linear equations and pairs of simultaneous linear equations; define, -

The Exponential Constant e

The exponential constant e (mc-bus-expconstant-2009-1)

Introduction. The letter $e$ is used in many mathematical calculations to stand for a particular number known as the exponential constant. This leaflet provides information about this important constant, and the related exponential function.

The exponential constant. The exponential constant is an important mathematical constant and is given the symbol $e$. Its value is approximately 2.718. It has been found that this value occurs so frequently when mathematics is used to model physical and economic phenomena that it is convenient to write simply $e$. It is often necessary to work out powers of this constant, such as $e^2$, $e^3$ and so on. Your scientific calculator will be programmed to do this already. You should check that you can use your calculator to do this. Look for a button marked $e^x$, and check that $e^2 = 7.389$ and $e^3 = 20.086$. In both cases we have quoted the answer to three decimal places, although your calculator will give a more accurate answer than this. You should also check that you can evaluate negative and fractional powers of $e$, such as $e^{1/2} = 1.649$ and $e^{-2} = 0.135$.

The exponential function. If we write $y = e^x$ we can calculate the value of $y$ as we vary $x$. Values obtained in this way can be placed in a table. For example:

| $x$ | −3 | −2 | −1 | 0 | 1 | 2 | 3 |
|---|---|---|---|---|---|---|---|
| $y = e^x$ | 0.050 | 0.135 | 0.368 | 1 | 2.718 | 7.389 | 20.086 |

This is a table of values of the exponential function $e^x$.
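The calculator check described in this leaflet can also be done in a few lines of Python. This is a minimal sketch using the standard-library `math.exp`; the variable names `xs`, `table`, and `sqrt_e` are illustrative choices, not part of the leaflet.

```python
import math

# Integer powers of e, rounded to three decimal places as in the leaflet
xs = [-3, -2, -1, 0, 1, 2, 3]
table = {x: round(math.exp(x), 3) for x in xs}

for x in xs:
    print(f"{x:>3}  {table[x]:.3f}")

# A fractional power: e^(1/2)
sqrt_e = round(math.exp(0.5), 3)
print("e^(1/2) =", sqrt_e)
```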

1. Antiderivatives for Exponential Functions

1. Antiderivatives for exponential functions

Recall that for $f(x) = e^{c \cdot x}$, $f'(x) = c \cdot e^{c \cdot x}$ (for any constant $c$). In particular, $e^x$ is its own derivative. So it makes sense that it is its own antiderivative as well!

Theorem 1.1 (Antiderivatives of exponential functions). Let $f(x) = e^{c \cdot x}$ for some constant $c$. Then $F(x) = \frac{1}{c} e^{c \cdot x} + D$, for any constant $D$, is an antiderivative of $f(x)$.

Proof. Consider $F(x) = \frac{1}{c} e^{c \cdot x} + D$. Then by the chain rule, $F'(x) = c \cdot \frac{1}{c} e^{c \cdot x} + 0 = e^{c \cdot x}$. So $F(x)$ is an antiderivative of $f(x)$.

Of course, the theorem does not work for $c = 0$, but then we would have that $f(x) = e^0 = 1$, which is constant. By the power rule, an antiderivative would be $F(x) = x + C$ for some constant $C$.

2. Antiderivative for $f(x) = \frac{1}{x}$

We have the power rule for antiderivatives, but it does not work for $f(x) = x^{-1}$. However, we know that the derivative of $\ln(x)$ is $\frac{1}{x}$. So it makes sense that the antiderivative of $\frac{1}{x}$ should be $\ln(x)$. Unfortunately, it is not. But it is close.

Theorem 2.1 (Antiderivative of $f(x) = \frac{1}{x}$). Let $f(x) = \frac{1}{x}$. Then the antiderivatives of $f(x)$ are of the form $F(x) = \ln(|x|) + C$.

Proof. Notice that

$$F(x) = \ln(|x|) = \begin{cases} \ln(x) & \text{for } x > 0 \\ \ln(-x) & \text{for } x < 0 \end{cases}$$

For $x > 0$, we have $[\ln(x)]' = \frac{1}{x}$.
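Both theorems can be sanity-checked numerically: differentiating the claimed antiderivative should give back the original function. The sketch below uses a central difference; the helper `central_diff`, the constant `c = 3`, the added constant `7`, and the sample points are all arbitrary illustrative choices, and a numerical check of this kind is no substitute for the proofs.

```python
import math

def central_diff(func, x, h=1e-5):
    """Approximate func'(x) with a symmetric difference quotient."""
    return (func(x + h) - func(x - h)) / (2 * h)

c = 3.0  # any nonzero constant (assumed value)

def F_exp(x):
    # Claimed antiderivative of e^(c x); the +7 plays the role of the constant D
    return math.exp(c * x) / c + 7.0

def F_log(x):
    # Claimed antiderivative of 1/x, valid for x < 0 as well as x > 0
    return math.log(abs(x))

x_pos, x_neg = 0.4, -2.0
exp_slope = central_diff(F_exp, x_pos)   # should be close to e^(c * 0.4)
log_slope = central_diff(F_log, x_neg)   # should be close to 1 / (-2)

print(exp_slope, math.exp(c * x_pos))
print(log_slope, 1.0 / x_neg)
```

The second check is the interesting one: at a negative point, `math.log(x)` would not even be defined, while $\ln(|x|)$ still has slope $1/x$.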

Antiderivatives Math 121 Calculus II D Joyce, Spring 2013

Antiderivatives. Math 121 Calculus II, D Joyce, Spring 2013

Antiderivatives and the constant of integration. We'll start out this semester talking about antiderivatives. If the derivative of a function $F$ is $f$, that is, $F' = f$, then we say $F$ is an antiderivative of $f$. Of course, antiderivatives are important in solving problems when you know a derivative but not the function, but we'll soon see that lots of questions involving areas, volumes, and other things also come down to finding antiderivatives. That connection we'll see soon when we study the Fundamental Theorem of Calculus (FTC). For now, we'll just stick to the basic concept of antiderivatives.

We can find antiderivatives of polynomials pretty easily. Suppose that we want to find an antiderivative $F(x)$ of the polynomial

$$f(x) = 4x^3 + 5x^2 - 3x + 8.$$

We can find one by finding an antiderivative of each term and adding the results together. Since the derivative of $x^4$ is $4x^3$, an antiderivative of $4x^3$ is $x^4$. It's not much harder to find an antiderivative of $5x^2$. Since the derivative of $x^3$ is $3x^2$, an antiderivative of $5x^2$ is $\frac{5}{3}x^3$. Continue on and soon you see that an antiderivative of $f(x)$ is

$$F(x) = x^4 + \tfrac{5}{3}x^3 - \tfrac{3}{2}x^2 + 8x.$$

There are, however, other antiderivatives of $f(x)$. Since the derivative of a constant is 0, we can add any constant to $F(x)$ to find another antiderivative. Thus, $x^4 + \frac{5}{3}x^3 - \frac{3}{2}x^2 + 8x + 7$ is another antiderivative of $f(x)$.
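The term-by-term procedure above is mechanical enough to sketch in code. In this minimal illustration a polynomial is stored as a list of coefficients in descending powers, and exact fractions are used so that $\frac{5}{3}$ and $\frac{3}{2}$ are not rounded. The function names and the list representation are assumptions made for the example.

```python
from fractions import Fraction

def antiderivative(coeffs, constant=0):
    """Antiderivative of a polynomial given in descending powers.

    The term a * x^(p) becomes a / (p + 1) * x^(p + 1); `constant` is the
    arbitrary constant of integration appended as the last coefficient.
    """
    n = len(coeffs)
    result = [Fraction(a, n - i) for i, a in enumerate(coeffs)]
    result.append(Fraction(constant))
    return result

def derivative(coeffs):
    """Derivative of a polynomial given in descending powers."""
    degree = len(coeffs) - 1
    return [a * (degree - i) for i, a in enumerate(coeffs[:-1])]

f = [4, 5, -3, 8]                 # 4x^3 + 5x^2 - 3x + 8
F = antiderivative(f)             # x^4 + 5/3 x^3 - 3/2 x^2 + 8x
F_plus_7 = antiderivative(f, 7)   # same, with constant 7

print(F)
print(derivative(F_plus_7))       # differentiating recovers f
```

Differentiating either result returns the original coefficients, which is the sense in which adding a constant produces "another antiderivative".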

Mathematical Constants and Sequences

Mathematical Constants and Sequences: a selection compiled by Stanislav Sýkora, Extra Byte, Castano Primo, Italy. Stan's Library, ISSN 2421-1230, Vol.II. First release March 31, 2008. Permalink via DOI: 10.3247/SL2Math08.001

This page is dedicated to my late math teacher Jaroslav Bayer who, back in 1955-8, kindled my passion for Mathematics.

This is a constant-at-a-glance list. You can also download a PDF version for off-line use. But keep coming back, the list is growing! When a value is followed by #t, it should be a proven transcendental number (but I only did my best to find out, which need not suffice). Bold dots after a value are a link to the OEIS database. This website does not use any cookies, nor does it collect any information about its visitors (not even anonymous statistics). However, we decline any legal liability for typos, editing errors, and for the content of linked-to external web pages.

| Basic math constants | Binary sequences | Constants of number-theory functions | More constants useful in Sciences |
|---|---|---|---|
| Derived from the basic ones | Combinatorial numbers, including | Riemann zeta ζ(s) | Planck's radiation law |
| ... from 0 and 1 | Binomial coefficients | Dirichlet eta η(s) | Functions sinc(z) and hsinc(z) |
| ... from i | Lah numbers | Dedekind eta η(τ) | Functions sinc(n,x) |
| ... from 1 and i | Stirling numbers | Constants related to functions in C | Ideal gas statistics |
| ... from π | Enumerations on sets | Exponential exp | Peak functions (spectral) |

...

Notes on Calculus and Optimization

Economics 101A Section Notes. GSI: David Albouy. Notes on Calculus and Optimization

1 Basic Calculus

1.1 Definition of a Derivative

Let $f(x)$ be some function of $x$; then the derivative of $f$, if it exists, is given by the following limit

$$\frac{df(x)}{dx} = \lim_{h \to 0} \frac{f(x+h) - f(x)}{h} \qquad \text{(Definition of Derivative)}$$

although often this definition is hard to apply directly. It is common to write $f'(x)$, or $\frac{df}{dx}$ to be shorter, or, if $y = f(x)$, then $\frac{dy}{dx}$ for the derivative of $y$ with respect to $x$.

1.2 Calculus Rules

Here are some handy formulas which can be derived using the definitions, where $f(x)$ and $g(x)$ are functions of $x$ and $k$ is a constant which does not depend on $x$.

$$\frac{d}{dx}\left[k f(x)\right] = k \frac{df(x)}{dx} \qquad \text{(Constant Rule)}$$

$$\frac{d}{dx}\left[f(x) + g(x)\right] = \frac{df(x)}{dx} + \frac{dg(x)}{dx} \qquad \text{(Sum Rule)}$$

$$\frac{d}{dx}\left[f(x)\, g(x)\right] = \frac{df(x)}{dx} g(x) + f(x) \frac{dg(x)}{dx} \qquad \text{(Product Rule)}$$

$$\frac{d}{dx}\left[\frac{f(x)}{g(x)}\right] = \frac{\frac{df(x)}{dx} g(x) - \frac{dg(x)}{dx} f(x)}{\left[g(x)\right]^2} \qquad \text{(Quotient Rule)}$$

$$\frac{d}{dx} f\left[g(x)\right] = \frac{d f\left[g(x)\right]}{dg} \frac{dg(x)}{dx} \qquad \text{(Chain Rule)}$$

For specific forms of $f$ the following rules are useful in economics

$$\frac{d}{dx} x^k = k x^{k-1} \qquad \text{(Power Rule)}$$

$$\frac{d}{dx} e^x = e^x \qquad \text{(Exponent Rule)}$$

$$\frac{d}{dx} \ln x = \frac{1}{x} \qquad \text{(Logarithm Rule)}$$

Finally, assuming that we can invert $y = f(x)$ by solving for $x$ in terms of $y$ so that $x = f^{-1}(y)$, then the following rule applies

$$\frac{d f^{-1}(y)}{dy} = \frac{1}{\dfrac{df\left(f^{-1}(y)\right)}{dx}} \qquad \text{(Inverse Rule)}$$

Example 1. Let $y = f(x) = e^{x/2}$; then using the exponent rule and the chain rule, where $g(x) = x/2$, we get

$$\frac{df(x)}{dx} = \frac{d}{dx} e^{x/2} = \frac{d}{d(x/2)} e^{x/2} \cdot \frac{d}{dx}\frac{x}{2} = e^{x/2} \cdot \frac{1}{2} = \frac{1}{2} e^{x/2}.$$
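The limit definition and the inverse rule can both be illustrated numerically with the function from Example 1. The sketch below is an informal check, not part of the notes: the helper names, the evaluation point `x0 = 1`, and the step sizes are arbitrary choices. It evaluates the difference quotient for shrinking $h$ and compares it with $\frac{1}{2}e^{x/2}$, then checks that the slope of the inverse $x = 2\ln y$ is the reciprocal of that value.

```python
import math

def f(x):
    return math.exp(x / 2)      # y = e^(x/2), as in Example 1

def f_inv(y):
    return 2 * math.log(y)      # solving y = e^(x/2) for x

def diff_quotient(func, x, h):
    """The quotient inside the limit definition of the derivative."""
    return (func(x + h) - func(x)) / h

x0 = 1.0
exact = 0.5 * math.exp(x0 / 2)  # chain-rule result from Example 1

# The quotient approaches the exact derivative as h shrinks
for h in (1e-1, 1e-3, 1e-6):
    print(h, diff_quotient(f, x0, h), exact)

# Inverse rule: slope of f_inv at y0 = f(x0) should be 1 / f'(x0)
y0 = f(x0)
inverse_slope = diff_quotient(f_inv, y0, 1e-6)
print(inverse_slope, 1.0 / exact)
```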

Common Core State Standards for MATHEMATICS

Common Core State Standards for Mathematics

Table of Contents

Introduction
Standards for Mathematical Practice
Standards for Mathematical Content
Kindergarten
Grade 1
Grade 2
Grade 3
Grade 4
Grade 5
Grade 6
Grade 7
Grade 8
High School: Introduction
High School: Number and Quantity
High School: Algebra
High School: Functions
High School: Modeling
High School: Geometry
High School: Statistics and Probability
Glossary
Sample of Works Consulted

Introduction

Toward greater focus and coherence

Mathematics experiences in early childhood settings should concentrate on (1) number (which includes whole number, operations, and relations) and (2) geometry, spatial relations, and measurement, with more mathematics learning time devoted to number than to other topics. Mathematical process goals should be integrated in these content areas. (Mathematics Learning in Early Childhood, National Research Council, 2009)

The composite standards [of Hong Kong, Korea and Singapore] have a number of features that can inform an international benchmarking process for the development of K–6 mathematics standards in the U.S. First, the composite standards concentrate the early learning of mathematics on the number, measurement, and geometry strands with less emphasis on data analysis and little exposure to algebra. The Hong Kong standards for grades 1–3 devote approximately half the targeted time to numbers and almost all the time remaining to geometry and measurement. (Ginsburg, Leinwand and Decker, 2009)

Because the mathematics concepts in [U.S.] textbooks are often weak, the presentation becomes more mechanical than is ideal.

Massachusetts Mathematics Curriculum Framework — 2017

Massachusetts Curriculum Framework for Mathematics – 2017. Grades Pre-Kindergarten to 12

This document was prepared by the Massachusetts Department of Elementary and Secondary Education.

Board of Elementary and Secondary Education Members:
Mr. Paul Sagan, Chair, Cambridge
Mr. James Morton, Vice Chair, Boston
Ms. Katherine Craven, Brookline
Dr. Edward Doherty, Hyde Park
Dr. Roland Fryer, Cambridge
Ms. Margaret McKenna, Boston
Mr. Michael Moriarty, Holyoke
Dr. Pendred Noyce, Boston
Mr. James Peyser, Secretary of Education, Milton
Ms. Mary Ann Stewart, Lexington
Mr. Nathan Moore, Chair, Student Advisory Council, Scituate
Mitchell D. Chester, Ed.D., Commissioner and Secretary to the Board

The Massachusetts Department of Elementary and Secondary Education, an affirmative action employer, is committed to ensuring that all of its programs and facilities are accessible to all members of the public. We do not discriminate on the basis of age, color, disability, national origin, race, religion, sex, or sexual orientation. Inquiries regarding the Department's compliance with Title IX and other civil rights laws may be directed to the Human Resources Director, 75 Pleasant St., Malden, MA, 02148, 781-338-6105.

© 2017 Massachusetts Department of Elementary and Secondary Education. Permission is hereby granted to copy any or all parts of this document for non-commercial educational purposes. Please credit the "Massachusetts Department of Elementary and Secondary Education."

Massachusetts Department of Elementary and Secondary Education, 75 Pleasant Street, Malden, MA 02148-4906. Phone 781-338-3000. TTY: N.E.T. Relay 800-439-2370. www.doe.mass.edu

Dear Colleagues, I am pleased to present to you the Massachusetts Curriculum Framework for Mathematics adopted by the Board of Elementary and Secondary Education in March 2017.

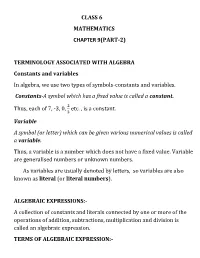

Class 6 Mathematics Chapter 9 (Part 2)

CLASS 6 MATHEMATICS, CHAPTER 9 (PART 2): TERMINOLOGY ASSOCIATED WITH ALGEBRA

CONSTANTS AND VARIABLES: In algebra, we use two types of symbols: constants and variables.

CONSTANT: A symbol which has a fixed value is called a constant. Thus, each of 7, -3, 0, etc., is a constant.

VARIABLE: A symbol (or letter) which can be given various numerical values is called a variable. Thus, a variable is a number which does not have a fixed value. Variables are generalised numbers or unknown numbers. As variables are usually denoted by letters, variables are also known as literals (or literal numbers).

ALGEBRAIC EXPRESSION: A collection of constants and literals connected by one or more of the operations of addition, subtraction, multiplication and division is called an algebraic expression.

TERMS OF AN ALGEBRAIC EXPRESSION: The various parts of an algebraic expression separated by a + or - sign are called the terms of the algebraic expression.

CONSTANT TERM: The term of an algebraic expression having no literal is called its constant term.

PRODUCT: When two or more constants or literals (or both) are multiplied, the result so obtained is called the product.

FACTORS: Each of the quantities (constant or literal) multiplied together to form a product is called a factor of the product. A constant factor is called a numerical factor and other factors are called variable (or literal) factors.

COEFFICIENTS: Any factor of a (non-constant) term of an algebraic expression is called the coefficient of the remaining factor of the term. In particular, the constant part is called the numerical coefficient, or simply the coefficient of the term, and the remaining part is called the literal coefficient of the term.