American Elections 2020 – Social Media / Conspiracy Theories

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


Trump Contracts COVID

MILITARY: National Guard designates units in Alabama, Arizona to respond to civil unrest (Page 4)
VIDEO GAMES: Spelunky 2 drops players into the role of intrepid explorer (Page 14)
COLLEGE FOOTBALL: Air Force finally taking the field for its first game as it hosts Navy (Back page)
Report: Nearly 500 service members died by suicide in 2019 » Page 3

Volume 79, No. 120A | ©SS 2020 | CONTINGENCY EDITION | SATURDAY, OCTOBER 3, 2020 | stripes.com | Free to Deployed Areas

Trump contracts COVID
White House says president experiencing ‘mild symptoms’
By Jill Colvin and Zeke Miller, Associated Press

WASHINGTON — The White House said Friday that President Donald Trump was suffering “mild symptoms” of COVID-19, making the stunning announcement after he returned from an evening fundraiser without telling the crowd he had been exposed to an aide with the disease that has killed a million people worldwide.

The announcement that the president of the United States and first lady Melania Trump had tested positive, tweeted by Trump shortly after midnight, plunged the country deeper into …

Trump has spent much of the year downplaying the threat of a virus that has killed more than 205,000 Americans.

His diagnosis was sure to have a destabilizing effect in Washington and around the world, raising questions about how far the virus has spread through the highest levels of the U.S. government. Hours before Trump announced he had contracted the virus, the White House said a top aide who had traveled with him during the week had tested positive.

“Tonight, @FLOTUS and I tested positive for COVID-19.”

June 19, 2020, Volume 4, No. 12

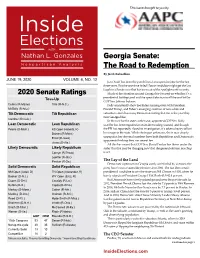

Georgia Senate: The Road to Redemption
By Jacob Rubashkin
JUNE 19, 2020 | VOLUME 4, NO. 12

Jon Ossoff has been the punchline of an expensive joke for the last three years. But the one-time failed House candidate might get the last laugh in a Senate race that has been out of the spotlight until recently.

Much of the attention around Georgia has focused on whether it’s a presidential battleground and on the special election to fill the seat left by GOP Sen. Johnny Isakson. Polls consistently show Joe Biden running even with President Donald Trump, and Biden’s emerging coalition of non-white and suburban voters has many Democrats feeling that this is the year they turn Georgia blue.

In the race for the state’s other seat, appointed GOP Sen. Kelly Loeffler has been engulfed in an insider trading scandal, and though the FBI has reportedly closed its investigation, it’s taken a heavy toll on her image in the state. While she began as an unknown, she is now deeply unpopular; her abysmal numbers have both Republican and Democratic opponents thinking they can unseat her.

All this has meant that GOP Sen. David Perdue has flown under the radar. But that may be changing now that the general election matchup is set.

[Sidebar: 2020 Senate Ratings, in Toss-Up, Tilt, Lean, and Likely (Democratic and Republican) categories; races listed include Collins (R-Maine), Tillis (R-N.C.), McSally (R-Ariz.), Gardner (R-Colo.), Peters (D-Mich.), KS Open (Roberts, R), Daines (R-Mont.), Ernst (R-Iowa), Jones (D-Ala.), and Cornyn (R-Texas).]

FEDERAL ELECTIONS 2018: Election Results for the U.S. Senate and the U.S. House of Representatives

FEDERAL ELECTIONS 2018
Election Results for the U.S. Senate and the U.S. House of Representatives

Federal Election Commission
Washington, D.C.
October 2019

Commissioners: Ellen L. Weintraub, Chair; Caroline C. Hunter, Vice Chair; Steven T. Walther; (Vacant); (Vacant); (Vacant)

Statutory Officers: Alec Palmer, Staff Director; Lisa J. Stevenson, Acting General Counsel; Christopher Skinner, Inspector General

Compiled by: Federal Election Commission, Public Disclosure and Media Relations Division, Office of Communications, 1050 First Street, N.E., Washington, D.C. 20463; 800/424-9530; 202/694-1120

Editors: Eileen J. Leamon, Deputy Assistant Staff Director for Disclosure; Jason Bucelato, Senior Public Affairs Specialist

Map Design: James Landon Jones, Multimedia Specialist

TABLE OF CONTENTS (Page)
Preface 1
Explanatory Notes 2
I. 2018 Election Results: Tables and Maps
A. Summary Tables
Table: 2018 General Election Votes Cast for U.S. Senate and House 5
Table: 2018 General Election Votes Cast by Party 6
Table: 2018 Primary and General Election Votes Cast for U.S. Congress 7
Table: 2018 Votes Cast for the U.S. Senate by Party 8
Table: 2018 Votes Cast for the U.S. House of Representatives by Party 9
B. Maps
United States Congress
Map: 2018 U.S. Senate Campaigns 11
Map: 2018 U.S. Senate Victors by Party 12
Map: 2018 U.S. Senate Victors by Popular Vote 13
Map: U.S. Senate Breakdown by Party after the 2018 General Election 14
Map: U.S. House Delegations by Party after the 2018 General Election 15
Map: U.S. House Delegations: States in Which All 2018 Incumbents Sought and Won Re-Election 16
II. …

United States Senate, WASHINGTON, DC 20510

United States Senate
WASHINGTON, DC 20510

May 3, 2019

The Honorable Henry Kerner
Special Counsel
U.S. Office of Special Counsel
1730 M Street, NW
Washington, DC 20036

Dear Special Counsel Kerner:

As you know, the Hatch Act generally prohibits certain categories of political activities for all covered employees.[1] I write today to request your assistance with a review of recent oral statements by Kellyanne Conway, Assistant to the President and Senior Counselor.

On April 27, 2019, Ms. Conway made the following statements during an interview on CNN:

"You want to revisit this the way Joe Biden wants to revisit, respectfully, because he doesn't want to be held to account for his record or lack thereof. And I found his announcement video to be unfortunate, certainly a missed opportunity. But also just very dark and spooky, in that it's taking us, he doesn't have a vision for the future."

The chyron on the bottom of the interview periodically identified Ms. Conway as "Counselor to President Trump."[2]

On April 30, 2019, Ms. Conway made the following statements in response to a question about infrastructure:

"You've got middle-class is booming now despite what Joe Biden says. I don't know exactly what country he's talking about when he says he needs to rebuild the middle class. He also just sounds like someone who wasn't vice president for eight years. He's got this whole list of grievances of what's wrong with the country as if he didn't have, as if he didn't work in this building for eight years …

The QAnon Conspiracy

THE QANON CONSPIRACY: Destroying Families, Dividing Communities, Undermining Democracy
A Contagion and Ideology Report

Presented by the Network Contagion Research Institute (NCRI) and powered by the Polarization and Extremism Research and Innovation Lab (PERIL)

Alex Goldenberg, Lead Intelligence Analyst, The Network Contagion Research Institute; Brian Hughes; Caleb Cain; Congressman Denver Riggleman; Meili Criezis; Jason Baumgartner, The Network Contagion Research Institute; Kesa White; Cynthia Miller-Idriss; Lea Marchl; Alexander Reid-Ross; Joel Finkelstein, Director, The Network Contagion Research Institute, and Senior Research Fellow, Miller Center for Community Protection and Resilience, Rutgers University

Special thanks to the PERIL QAnon Advisory Board: Jaclyn Fox, Sarah Hightower, Douglas Rushkoff, Linda Schegel

FOREWORD

“A lie doesn’t become truth, wrong doesn’t become right, and evil doesn’t become good just because it’s accepted by the majority.” –Booker T. Washington

As a GOP Congressman, I have been uniquely positioned to experience a tumultuous two years on Capitol Hill. I voted to end the longest government shutdown in history, was on the floor during impeachment, read the Mueller Report, governed during the COVID-19 pandemic, officiated a same-sex wedding (the first sitting GOP congressman to do so), and eventually became the only Republican Congressman to speak out on the floor against the encroaching and insidious digital virus of conspiracy theories related to QAnon.

Certainly, I can list the various theories that nest under the QAnon banner. Democrats participate in a deep-state cabal of Satan-worshiping pedophiles who harvest adrenochrome from children. President-Elect Joe Biden ordered the killing of SEAL Team 6.

Donald Trump Jr

EXHIBIT

Message
From: Paul Manafort
Sent: 6/8/2016 12:44:52 PM
To: Donald Trump Jr.
Subject: Re: Russia - Clinton - private and confidential

see you then. p

On 6/8/16, 12:02 PM, "Donald Trump Jr." wrote:

Meeting got moved to 4 tomorrow at my offices.
Best,
Don

Donald J. Trump Jr.
Executive Vice President of Development and Acquisitions
The Trump organization
725 Fifth Avenue | New York, NY | 10022
trump.com

-----Original Message-----
From: Rob Goldstone
Sent: Wednesday, June 08, 2016 11:18 AM
To: Donald Trump Jr.
Subject: Re: Russia - Clinton - private and confidential

They can't do today as she hasn't landed yet from Moscow 4pm is great tomorrow.
Best
Rob

This iphone speaks many languages

On Jun 8, 2016, at 11:15, Donald Trump Jr. wrote:

Yes Rob I could do that unless they wanted to do 3 today instead... just let me know and ill lock it in either way.
d

Donald J. Trump Jr.
Executive Vice President of Development and Acquisitions
The Trump organization
725 Fifth Avenue | New York, NY | 10022
trump.com

-----Original Message-----
From: Rob Goldstone
Sent: Wednesday, June 08, 2016 10:34 AM
To: Donald Trump Jr.
Subject: Re: Russia - Clinton - private and confidential

Good morning
Would it be possible to move tomorrow meeting to 4pm as the Russian attorney is in court until 3 i was just informed.
Best
Rob

This iphone speaks many languages

RELEASED BY AUTHORITY OF THE CHAIRMAN OF THE SENATE JUDICIARY COMMITTEE
CONFIDENTIAL | CONFIDENTIAL TREATMENT REQUESTED | DJTFP00011895

On Jun 7, 2016, at 18:14, Donald Trump Jr. …

Congressional Record—House H2574

H2574 CONGRESSIONAL RECORD — HOUSE May 19, 2021

[Roll-call residue: members recorded by proxy, with proxies in parentheses: Ruppersberger (Raskin); Rush (Underwood); Sewell (DelBene); Slotkin (Axne); Waters (Barragán); Wilson (FL) (Hayes); Wilson (SC) (Timmons); Young (Joyce (OH)).]

NAYS—208
Aderholt; Allen; Amodei; Armstrong; Arrington; Babin; Bacon; Baird; Balderson; Banks; Barr; Bentz; Bergman; Bice (OK); Biggs; Bilirakis; Bishop (NC); Boebert; Bost; Brady; Brooks; … Gohmert; Gonzales, Tony; Gonzalez (OH); Good (VA); Gooden (TX); Gosar; Granger; Graves (LA); Graves (MO); Green (TN); Greene (GA); Griffith; Grothman; Guest; Guthrie; Hagedorn; Harris; Harshbarger; Hartzler; Hern; Herrell; … Moolenaar; Mooney; Moore (AL); Moore (UT); Mullin; Murphy (NC); Nehls; Newhouse; Norman; Nunes; Obernolte; Owens; Palazzo; Palmer; Pence; Perry; Pfluger; Posey; Reed; Reschenthaler; Rice (SC); …

The SPEAKER pro tempore. The question is on the resolution.

The question was taken; and the Speaker pro tempore announced that the ayes appeared to have it.

Mr. RESCHENTHALER. Mr. Speaker, on that I demand the yeas and nays.

The SPEAKER pro tempore. Pursuant to section 3(s) of House Resolution 8, the yeas and nays are ordered.

The vote was taken by electronic device, and there were—yeas 216, nays 208, not voting 5, as follows:

NATIONAL COMMISSION TO INVESTIGATE THE JANUARY 6 ATTACK ON THE UNITED STATES CAPITOL COMPLEX ACT

Mr. THOMPSON of Mississippi. Mr. Speaker, pursuant to House Resolution 409, I call up the bill (H.R. 3233) to establish the National Commission to Investigate the January 6 Attack on the United States Capitol Complex, and for other purposes, and ask for its immediate consideration.


arXiv:1610.01655v2 [cs.SI] 3 Nov 2016

Trump vs. Hillary: Analyzing Viral Tweets during US Presidential Elections 2016
Walid Magdy (1) and Kareem Darwish (2)
(1) School of Informatics, The University of Edinburgh, UK
(2) Qatar Computing Research Institute, HBKU, Doha, Qatar
Twitter: @walid_magdy, @kareem2darwish

Abstract

In this paper, we provide a quantitative and qualitative analysis of the viral tweets related to the US presidential election. In our study, we focus on analyzing the 50 most retweeted tweets for every day during September and October 2016. The resulting set is composed of 3,050 viral tweets, and they were retweeted over 20.5 million times. We manually annotated the tweets as favorable to Trump, Clinton, or neither. Our quantitative study shows that tweets favoring Trump were usually retweeted more than pro-Clinton tweets, with the exception of a few days in September and two days in October, especially the day following the …

… during these two months on TweetElect. After manually tagging all the tweets in our collection for support for either candidate, we looked at: which candidate has more traction on Twitter and a more diverse support base; when a shift in the volume of supporting tweets happens; and which tweets were the most viral.

We observed that the retweet volume of pro-Trump tweets dominated the retweet volume of pro-Clinton tweets on most days during September 2016, and on almost all days during October. Notable exceptions were the day after the first presidential debate and the day after the leak of the Access Hollywood tape in which Trump used lewd language.
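The collection size is consistent with the sampling rule stated in the abstract, assuming exactly 50 tweets were kept for each of the 61 days in September (30 days) and October (31 days) 2016:

$$
(30 + 31)\ \text{days} \times 50\ \tfrac{\text{tweets}}{\text{day}} = 61 \times 50 = 3{,}050\ \text{tweets}
$$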

Analysing Journalists Written English on Twitter

Analysing Journalists Written English on Twitter
A comparative study of language used in news coverage on Twitter and conventional news sites
Douglas Askman
Department of English
Bachelor Degree Project, English Linguistics
Autumn 2020
Supervisor: Kate O’Farrell

Abstract

The English language is in constant transition; it always has been and always will be. Historically, change has been driven by colonisation and migration. Today, however, it is driven by a much more powerful instrument: the Internet. The Internet revolution has brought substantial changes to the English language and to how people communicate. Computer-Mediated Communication is arguably one of the main spaces for communication between people today, supported by the growing number and use of social media platforms. Twitter is one of the largest social media platforms in the world today, with a diverse set of users. The number of journalists on Twitter has increased in the last few years, and today they make up 25% of all verified accounts on the platform. Journalists use Twitter as a tool for marketing, research, and spreading news. The aim of this study is to investigate whether there are linguistic differences between journalists’ writing on Twitter and on their respective conventional news sites. This is done through a Discourse Analysis in which types of informal language features are specifically accounted for. In conclusion, the findings show signs of language differentiation between the two media studied, with informality on Twitter being a substantial part of the findings.

Congress of the United States, Washington, D.C.

Congress of the United States
Washington, D.C. 20515

April 29, 2020

The Honorable Nancy Pelosi
Speaker of the House
United States House of Representatives
H-232, U.S. Capitol
Washington, D.C. 20515

The Honorable Kevin McCarthy
Minority Leader
United States House of Representatives
H-204, U.S. Capitol
Washington, D.C. 20515

Dear Speaker Pelosi and Leader McCarthy:

As Congress continues to work on economic relief legislation in response to the COVID-19 pandemic, we ask that you address the challenges faced by the U.S. scientific research workforce during this crisis. While COVID-19-related research is now in overdrive, most other research has been slowed or stopped due to pandemic-induced closures of campuses and laboratories. We are deeply concerned that the people who comprise the research workforce (graduate students, postdocs, principal investigators, and technical support staff) are at risk.

While federal rules have allowed researchers to continue to receive their salaries from federal grant funding, their work has been stopped by shuttered laboratories and facilities, and many researchers are currently unable to make progress on their grants. Additionally, researchers will need supplemental funding to support an additional four months’ salary, as many campuses will remain shuttered until the fall, at the earliest. Many core research facilities, typically funded by user fees, sit idle. Still others have incurred significant costs for shutting down their labs, donating personal protective equipment (PPE) to frontline health care workers, and cancelling planned experiments.

Congress must act to preserve our current scientific workforce and ensure that the U.S. …

CONGRESSIONAL RECORD — Extensions of Remarks E871

September 22, 2020 CONGRESSIONAL RECORD — Extensions of Remarks E871

COMMENDING THE WORK OF JOHN MOLIERE AND STANDARD COMMUNICATIONS

HON. DENVER RIGGLEMAN
OF VIRGINIA
IN THE HOUSE OF REPRESENTATIVES
Tuesday, September 22, 2020

Mr. RIGGLEMAN. Madam Speaker, I rise today to recognize the work being done by 5th District residents to combat the COVID–19 pandemic. The employees of Standard Communications, Inc. have worked diligently with the Department of Veterans Affairs to install medical IT solutions. This work has included a new device to aid in the decontamination of medical equipment using ultraviolet light.

… address the dire need of diapers within the Kansas City area as I believe this will ultimately lead to improved health for families within our communities.

Madam Speaker, I proudly ask you to join me in recognizing HappyBottoms for their effort in collecting, packaging and distributing diapers to low-income families across the Kansas City metro area. I am honored to represent this wonderful organization in the United States Congress.

MATERNAL HEALTH QUALITY IMPROVEMENT ACT OF 2020

SPEECH OF
HON. ANNA G. …

… opportunities for medical professionals to provide care in rural community-based settings. I urge my colleagues to support this bill.

PERSONAL EXPLANATION

HON. MARTHA ROBY
OF ALABAMA
IN THE HOUSE OF REPRESENTATIVES
Tuesday, September 22, 2020

Mrs. ROBY. Madam Speaker, I was unable to vote on Thursday, September 17. Had I been present, I would have voted as follows: NAY on Roll Call No. 193; YEA on Roll Call No. 194; and YEA on Roll Call No. 195.

Trump Administration Key Policy Personnel, Updated: February 5, 2017 (Positions NOT Subject to Senate Confirmation in Italics)

Trump Administration Key Policy Personnel
Updated: February 5, 2017
Positions NOT subject to Senate confirmation in italics

White House Chief of Staff: Reince Priebus

Priebus is the former Chairman of the Republican National Committee (RNC). He previously worked as chairman of the Republican Party of Wisconsin. He has a long history in Republican politics as a grassroots volunteer. He worked his way up through the ranks of the Republican Party of Wisconsin as 1st Congressional District Chairman, State Party Treasurer, First Vice Chair, and eventually State Party Chairman. In 2009, he served as General Counsel to the RNC, a role in which he volunteered his time.

White House Chief Strategist and Senior Counselor: Stephen Bannon

Bannon worked as the campaign CEO for Trump’s presidential campaign. He is the Executive Chairman of Breitbart News Network, LLC and the Chief Executive Officer of American Vantage Media Corporation and Affinity Media. Mr. Bannon is also a Partner of Societe Gererale, a talent management company in the entertainment business. He has served as the Chief Executive Officer and President of Genius Products, Inc. since February 2005.

Attorney General: Senator Jeff Sessions (R-Ala.)

Sen. Sessions began his legal career as a practicing attorney in Russellville, Alabama, and then in Mobile. Following a two-year stint as Assistant United States Attorney for the Southern District of Alabama, Sessions was nominated by President Reagan in 1981 and confirmed by the Senate to serve as the United States Attorney for Alabama’s Southern District, a position he held for 12 years. Sessions was elected Alabama Attorney General in 1995, serving as the state’s chief legal officer until 1997, when he entered the United States Senate.