No Bullshit Guide to Linear Algebra Preview

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Acting Without Bullshit

Journal of Liberal Arts and Humanities (JLAH) Issue: Vol. 1; No. 3 March 2020 (pp. 7-15) ISSN 2690-070X (Print) 2690-0718 (Online) Website: www.jlahnet.com E-mail: [email protected] Acting without Bullshit Dr. Rodney Whatley 2190 White Pines Drive Pensacola, FL 32526 Abstract This article is a guide to honest acting. Included is a discussion that identifies dishonest acting and the actor training that results in it. A definition of what truth onstage consists of is contrasted with a description of impractical acting exercises that should be avoided. The consequences of acting with bullshit are revealed. The article concludes with a description of the most practical and honest acting exercise. If you are a performance artist who loves shows with no dialogue, characters without names, or an unconnected series of events in lieu of plot, this essay isn’t for you. If you are an artist who communicates with sounds instead of words, this isn’t for you. However, if you like plots with specific characters pursuing superobjectives despite conflict, that explores the human condition, this essay is for you. This is a guide to practical, straightforward and honest acting. The actor must be honest in the relationship with the audience because you can’t shit a shitter, so don’t try. How can actors be honest onstage when acting is lying? By studying with good acting teachers who teach good acting classes, and aye, there’s the rub. Bad acting teachers train actors to get lost within their own reality, to surround themselves with the “magic” of acting and “become the character.” This teaches that the goal of acting is to convince the audience that the actor is the character. -

Extrema, So I Will Be Good and Keep It Qualitative; Binaries Must Go Off Into the Sunset Without Me

e x t r e m a or, antipodes aroog khaliq dedication for Dr. Kaminski, Dr. Klayder, my liver-friends, and all who read my words with care. yours always, aroog 1 considerations hai aadmī bajā.e ḳhud ik mahshar-e-ḳhayāl ham anjuman samajhte haiñ ḳhalvat hī kyuuñ na ho a man is no individual, rather he is a pandemonium of thoughts i consider him a group even in his solitude —mirza ghalib na pūchho ahd-e-ulfat kī bas ik ḳhvāb-e-pareshāñ thā na dil ko raah par laa.e na dil kā mudda.ā samjhe do not inquire of the era of love, it was merely a scattered dream; i did not tame my heart, nor did i understand its desires. —faiz ahmed faiz 2 babble / dreams of dying 3 there are questions i can only ask in poetry because if you take them literally i can smile, guileless, none of your concern sticking to me true story—one time i wrote poetic prose for class repeated the line “i am nothing” for resonance and ended up in the counselor’s, cornered by two women determined to see me at the finish line with neither blankness nor a crazed look nor grief obscuring my vision as i sway, pulled by the wind so when i ask you “do you sometimes dream of what the side of a head looks like after it’s bashed again and again against a brick wall?” answer simply yes or no. grey matter or red blood. no clutching your pearls, no calling my mother, no bullshit. -

It Must Be Karma: the Story of Vicki Joy and Johnnymoon

University of New Orleans ScholarWorks@UNO University of New Orleans Theses and Dissertations Dissertations and Theses 5-14-2010 It Must be Karma: The Story of Vicki Joy and Johnnymoon Richard Bolner University of New Orleans Follow this and additional works at: https://scholarworks.uno.edu/td Recommended Citation Bolner, Richard, "It Must be Karma: The Story of Vicki Joy and Johnnymoon" (2010). University of New Orleans Theses and Dissertations. 1121. https://scholarworks.uno.edu/td/1121 This Thesis is protected by copyright and/or related rights. It has been brought to you by ScholarWorks@UNO with permission from the rights-holder(s). You are free to use this Thesis in any way that is permitted by the copyright and related rights legislation that applies to your use. For other uses you need to obtain permission from the rights-holder(s) directly, unless additional rights are indicated by a Creative Commons license in the record and/or on the work itself. This Thesis has been accepted for inclusion in University of New Orleans Theses and Dissertations by an authorized administrator of ScholarWorks@UNO. For more information, please contact [email protected]. It Must be Karma: The Story of Vicki Joy and Johnnymoon A Thesis Submitted to the Graduate Faculty of the University of New Orleans in partial fulfillment of the requirements for the degree Master of Fine Arts in Film, Theatre and Communication Arts Creative Writing by Rick Bolner B.S. University of New Orleans, 1982 May, 2010 Table of Contents Prologue 1 -

Bullshit Assertion Kotzee, Ben

University of Birmingham Bullshit Assertion Kotzee, Ben License: None: All rights reserved Document Version Peer reviewed version Citation for published version (Harvard): Kotzee, B 2019, Bullshit Assertion. in SG (ed.), Oxford Handbook of Assertion. Oxford University Press. Link to publication on Research at Birmingham portal General rights Unless a licence is specified above, all rights (including copyright and moral rights) in this document are retained by the authors and/or the copyright holders. The express permission of the copyright holder must be obtained for any use of this material other than for purposes permitted by law. •Users may freely distribute the URL that is used to identify this publication. •Users may download and/or print one copy of the publication from the University of Birmingham research portal for the purpose of private study or non-commercial research. •User may use extracts from the document in line with the concept of ‘fair dealing’ under the Copyright, Designs and Patents Act 1988 (?) •Users may not further distribute the material nor use it for the purposes of commercial gain. Where a licence is displayed above, please note the terms and conditions of the licence govern your use of this document. When citing, please reference the published version. Take down policy While the University of Birmingham exercises care and attention in making items available there are rare occasions when an item has been uploaded in error or has been deemed to be commercially or otherwise sensitive. If you believe that this is the case for this document, please contact [email protected] providing details and we will remove access to the work immediately and investigate. -

Make It New: Reshaping Jazz in the 21St Century

Make It New RESHAPING JAZZ IN THE 21ST CENTURY Bill Beuttler Copyright © 2019 by Bill Beuttler Lever Press (leverpress.org) is a publisher of pathbreaking scholarship. Supported by a consortium of liberal arts institutions focused on, and renowned for, excellence in both research and teaching, our press is grounded on three essential commitments: to be a digitally native press, to be a peer-reviewed, open access press that charges no fees to either authors or their institutions, and to be a press aligned with the ethos and mission of liberal arts colleges. This work is licensed under the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-nd/4.0/ or send a letter to Creative Commons, PO Box 1866, Mountain View, California, 94042, USA. DOI: https://doi.org/10.3998/mpub.11469938 Print ISBN: 978-1-64315-005-5 Open access ISBN: 978-1-64315-006-2 Library of Congress Control Number: 2019944840 Published in the United States of America by Lever Press, in partnership with Amherst College Press and Michigan Publishing Contents Member Institution Acknowledgments xi Introduction 1 1. Jason Moran 21 2. Vijay Iyer 53 3. Rudresh Mahanthappa 93 4. The Bad Plus 117 5. Miguel Zenón 155 6. Anat Cohen 181 7. Robert Glasper 203 8. Esperanza Spalding 231 Epilogue 259 Interview Sources 271 Notes 277 Acknowledgments 291 Member Institution Acknowledgments Lever Press is a joint venture. This work was made possible by the generous support of -

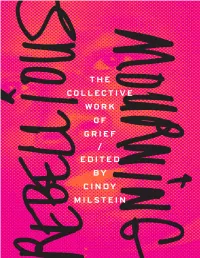

Rebellious Mourning / the Collective Work of Grief

REBELLIOUS MOURNING / THE COLLECTIVE WORK OF GRIEF EDITED BY CINDY MILSTEIN AK PRESS PRAISE FOR REBELLIOUS MOURNING In a time when so many lives are considered ungrievable (as coined by Judith Butler), grieving is a politically necessary act. This evocative collection reminds us that vulnerability and tenderness for each other and public grievability for life itself are some of the most profound acts of community resistance. —HARSHA WALIA, author of Undoing Border Imperialism Before the analysis and the theory comes the story, the tale of the suffering and the struggle. Connecting mothers demanding justice for their children killed by the police, to neighbors monitoring the effects of radiation after Fukushima, to activists bringing water to immigrants crossing the borderlands, or fighting mountaintop removal in West Virginia, or keeping the memory alive of those who died of AIDS, Rebellious Mourning uncovers the destruction of life that capitalist development leaves in its trail. But it’s also witness to the power of grief as a catalyst to collective resistance. It is a beautiful, moving book to be read over and over again. —SILVIA FEDERICI, author of Caliban and the Witch: Women, the Body, and Primitive Accumulation Our current political era is filled with mourning and loss. This powerful, intimate, beautiful book offers a transformative path toward healing and resurgence. —JORDAN FLAHERTY, author of No More Heroes: Grassroots Challenges to the Savior Mentality Rebellious Mourning offers thoughtful, artful essays by resisters on the meanings and uses of grief and mourning. This collection encourages us as people and organizers to understand the Phoenix-like power of these emotions. -

Social Media Is Bullshit

SOCIAL MEDIA IS BULLSHIT social media is bullshit. Copyright © 2012 by Earth’s Temporary Solution, LLC. For information, address St. Martin’s Press, 175 Fifth Avenue, New York, N.Y. 10011. www.stmartins.com Design by Steven Seighman ISBN 978-1-250-00295-2 (hardcover) ISBN 978-1-250-01750-5 (e-book) First Edition: September 2012 10 9 8 7 6 5 4 3 2 1 To Amanda: I think you said it best, “If only we had known sooner, we would have done nothing different.” CONTENTS AN INTRODUCTION: BULLSHIT 101 One: Our Terrible, Horrible, No Good, Very Bad Web site 3 Two: Astonishing Tales of Mediocrity 6 Three: “I Wrote This Book for Pepsi” 10 Four: Social Media Is Bullshit 15 PART I: SOCIAL MEDIA IS BULLSHIT Five: There Is Nothing New Under the Sun ... or on the Web 21 Six: Shovels and Sharecroppers 28 Seven: Yeah, That’s the Ticket! 33 Eight: And Now You Know ... the Rest of the Story 43 Nine: The Asshole-Based Economy 54 Ten: There’s No Such Thing as an Influencer 63 Eleven: Analyze This 74 PART II: MEET THE PEOPLE BEHIND THE BULLSHIT Twelve: Maybe “Social Media” Doesn’t Work So Well for Corporations, Either? 83 Thirteen: Kia and Facebook Sitting in a Tree . -

CALIFORNIA STATE UNIVERSITY, NORTHRIDGE 16 BARS a Thesis

CALIFORNIA STATE UNIVERSITY, NORTHRIDGE 16 BARS A thesis submitted in partial fulfillment of the requirements For the degree of Master of Arts in Screenwriting by John Reyes Cadiente May 2013 The thesis of John Reyes Cadiente is approved: Rappaport, Jared H, MFA Date Krasilovsky, Alexis R, MFA Date Sturgeon, Scott, MFA, Chair Date California State University, Northridge ii DEDICATION This thesis is dedicated to: My family. With their unconditional love and support, anything is possible. iii TABLE OF CONTENTS Signature Page ii Dedication iii List of Tables iv Abstract v 16 BARS 1 iv ABSTRACT 16 BARS by John Reyes Cadiente Master of Arts in Screenwriting When Jake, a witty high school student with a knack for hip-hop music, gets kicked out of his home and sent to a continuation school, he must now find his way back by surviving the school and ultimately becoming his own man. v OVER BLACK CHEERS of hundreds of rowdy teenagers uproar with excitement. HEAVY BREATHING of a boy who awaits to be brought on stage. EMINEM'S "TILL I COLLAPSE" plays. FADE IN. DREAM SEQUENCE INT. AUDITORIUM - NIGHT It's dark backstage. JAKE, 17 and short, breathes heavily and jitters behind the curtains. He wears a hoodie over his head similar to a boxer who's about to enter a ring. The song kicks it up a notch when a low rumbling of GUITARS and DRUM thumps build up. A heart-thumping rhythm develops. The crowd outside sings along with the backing vocals. YO LEFT... YO LEFT... YO LEFT, RIGHT, LEFT! Jake looks down at his SCUFFED UP NIKE'S to catch light underneath the curtains hitting it. -

Embodied Women *No Bullshit* Circle

Embodied Women *No Bullshit* Circle Let’s begin - I’ve put ‘no bullshit’ in the title because it’s so important for me to not be adding to the ‘New Age love and light’ deal – which can be pretty false, not always – but instead to curate a circle of women who are down with doing the work, wading through the shadows and asking the challenging questions of themselves and the world around them. Not airbrushing with ‘just be positive’ or ‘easy peasy manifesting’. Instead a way to develop genuine power by being truly yourself and truly embodied. Embodied, for me: In your body In control of your body Using it as a tool for your and others’ good Being truly alive Feeling, in a world that is so often numb and allows the abuse and manipulation of women’s bodies. This is work I’ve been doing for years – personally, with other teachers and with clients at The Warrior Programme, which some of you will know of. • Exploring my shitty beliefs, derived from past experiences, and turning them into something that actually works for me. • Coming out of pain in the body and learning from it. • Learning to feel again. • Learning to be more than a number on the scales or in the bank. • Moving through the chronic culture of overworking and self-abandonment. I’m still doing all of it and will be for as long as I’m here. Attending this circle is based on the following assumptions: • You don’t like bullshit! You like plain-speak, honesty and openness. -

United States of America V. Vicente Garcia

AO 91 (REV.5/85) Criminal Complaint AUSA ANDREW PORTER 312-353-5358 AUSA T. DIAMANTATOS 312-353-4317 AUSA NANCY MILLER 312-353-4224 UNITED STATES DISTRICT COURT NORTHERN DISTRICT OF ILLINOIS, EASTERN DIVISION UNITED STATES OF AMERICA CRIMINAL COMPLAINT v. CASE NUMBER VICENTE GARCIA, aka “DK” and others UNDER SEAL I, the undersigned complainant being duly sworn state the following is true and correct to the best of my knowledge and belief. Beginning in or around September 2007 and continuing to present, in Cook County, in the Northern District of Illinois, and elsewhere, defendants, knowingly and intentionally conspired to possess with intent to distribute and to distribute in excess of 500 grams of mixtures containing cocaine, a Schedule II Narcotic Drug Controlled Substance, in violation of Title 21, United States Code, Section 841(a)(1), all in violation of Title 21 United States Code, Section 846. I further state that I am a(n) Special Agent, FBI and that this complaint is based on the following facts: See Attached Affidavit. Continued on the attached sheet and made a part hereof: X Yes No Signature of Complainant Sworn to before me and subscribed in my presence, September , 2008 at Chicago, Illinois Date City and State Maria Valdez, Magistrate Judge Name & Title of Judicial Officer Signature of Judicial Officer 2. VALENTIN BAEZ, aka “Baby 24,” “Valentin Biez;” 3. ROLANDO BAUTISTA, aka "Shorty;" 4. ALPHONSO CHAVEZ, aka "Ponch;" 5. JUAN DEJESUS, aka "Baby 28;" 6. DANNY DOMINGUEZ, aka "Baby Trigger,” “Baby T;” 7. JOSE DOMINGUEZ, aka "Silent;" 8. DANIEL GALINDO, aka "Horse;" 9. -

Busta Rhymes Bottles up Album Download Zip DOWNLOAD ALBUM: Busta Rhymes – Extinction Level Event 2: the Wrath of God (The Deluxe Edition) Zip Download

busta rhymes bottles up album download zip DOWNLOAD ALBUM: Busta Rhymes – Extinction Level Event 2: The Wrath of God (The Deluxe Edition) Zip Download. Busta Rhymes – Extinction Level Event 2: The Wrath of God (The Deluxe Edition) Zip Download. Busta Rhymes – Extinction Level Event 2: The Wrath of God (The Deluxe Edition) Zip’ s new singles is one of his best performance and definitely a must listen to. Busta Rhymes – Extinction Level Event 2: The Wrath of God (The Deluxe Edition) Zip Download. 1.E.L.E. 2 Intro 2.The Purge 3.Strap Yourself Down 4.Czar 5.Outta My Mind (feat. Bell Biv DeVoe) 6.E.L.E. 2 The Wrath of God (feat. Minister Louis Farrakhan) 7.Slow Flow 8.Don’t Go 9.Boomp! 10.True Indeed 11.Master Fard Muhammad 12.YUUUU 13.Oh No 14.The Don & The Boss 15.Best I Can 16.Where I Belong (feat. Mariah Carey) 17.Deep Thought 18.The Young God Speaks 19.Look Over Your Shoulder (feat. Kendrick Lamar) 20.You Will Never Find Another Me (feat. Mary J. Blige) 21.Freedom? (feat. NIKKI GRIER) 22.Satanic 23.Blowing The Speakers 24.Who Are You 25.Hope Your Dreams Come True 26.Calm Down (feat. Eminem) 27.Czar (feat. M.O.P. & CJ) 28.Follow the Wave (feat. Flipmode Squad, Rampage, Rah Digga & Spliff Star) 29.Blow A Million Racks 30.Hey You (feat. TRILLIAN) Related Articles. Busta Rhymes – Extinction Level Event 2: The Wrath of God (The Deluxe Edition) Zip Download. The post DOWNLOAD ALBUM: Busta Rhymes – Extinction Level Event 2: The Wrath of God (The Deluxe Edition) Zip Download appeared first on 77tunes. -

Read Razorcake Issue #36 As a PDF

So Very Unsexy I thought of this in Gainesville, Florida at The Fest. I was in wonder. No fights. No bullshit. Great people. Great music. An expertly planned, large event. How’d this happen? I’m not deaf, but I do admit that ninety-eight percent of the time when I hear “Hey dude, you should _________ (fill in the blank) with the zine. That’d totally keep it from suckin’,” I’m no longer paying attention. I’m picturing this really cute, fuzzy otter and he’s floating on his back trying to crack open this clam with a rock. Man, I love that otter getting all Darwin on the shellfish, lounging in the ocean, being all cute, calm, and concentrated. And by the time the person’s done telling me what I should do—without them offering to take up the task they’re suggesting—that little otter’s opened up that shell; he smiles as he eats.

shove—but any more rules than that, and it’s only a matter of time that your brushstrokes of good intentions have you painted into a corner. Here’s the connection: Too often, the folks who have the most “expert without actually doing it” advice are the ones who want more rules enforced because they don’t feel in control of the situation; hell, they may even have the best of intentions. But, ultimately, they want to steer from the back seat—yank the wheel—want to have equal time without equal effort. They have the opportunity to walk away from a car you built if they wreck it. I’ll let you in on a couple of secrets. Razorcake’s not blindly groping along, bumping into things, and somehow a zine ploops out of heinies every two months. It’s a lot of very tedious, constant work that is so very unsexy that it’s boring to even briefly mention what