Towards Semantic Music Information Extraction from the Web Using Rule Patterns and Supervised Learning

Recommended publications

-

Places to Go, People to See Thursday, Feb

Versu Entertainment & Culture at Vanderbilt FEBRUARY 28—MARCH 12, 2008 NO. 7 RITES OF SPRING PLACES TO GO, PEOPLE TO SEE THURSDAY, FEB. 28 FRIDAY, FEB. 29 SATURDAY 3/1 Silverstein with The Devil Wears Prada — Rocketown John Davey, Rebekah McLeod and Kat Jones — Rocketown Sister Hazel — Wildhorse Saloon The Regulars Warped Tour alums and hardcore luminaries Silverstein bring their popular Indiana native John Davey just might be the solution to February blues — his unique pop/ Yes, they’re still playing together and touring. Yes, they can still rock sound to Nashville. The band teamed up with the Christian group The Devil folk sound is immediately soothing and appealing and is sure to put you in a good mood. with the best of ’em. Yes, you should go. Save all your money this THE RUTLEDGE Wears Prada for a long-winded U.S. tour. ($5, 7 p.m.) 401 Sixth Avenue South, 843-4000 week for that incredibly sweet sing-along to “All For You” (you know 410 Fourth Ave. S. 37201 ($15, 6 p.m.) 401 6th Avenue S., 843-4000 you love it). ($20-$45, 6 p.m.) 120 Second Ave. North, 902-8200 782-6858 Music in the Grand Lobby: Paula Chavis — The Frist Center for the Steep Canyon Rangers — Station Inn Red White Blue EP Release Show — The 5 Spot Visual Arts MERCY LOUNGE/CANNERY This bluegrass/honky-tonk band from North Carolina has enjoyed a rapid Soft rock has a new champion in Red White Blue. Check out their EP Nashville’s best-kept secret? The Frist hosts free live music in its lobby every Friday night. -

The Suffolk Journal

Suffolk University Digital Collections @ Suffolk Suffolk Journal Suffolk University Student Newspapers 2008 Newspaper- Suffolk Journal vol. 63, no. 3, 1/23/2008 Suffolk Journal Follow this and additional works at: https://dc.suffolk.edu/journal Recommended Citation Suffolk Journal, "Newspaper- Suffolk Journal vol. 63, no. 3, 1/23/2008" (2008). Suffolk Journal. 482. https://dc.suffolk.edu/journal/482 This Newspaper is brought to you for free and open access by the Suffolk University Student Newspapers at Digital Collections @ Suffolk. It has been accepted for inclusion in Suffolk Journal by an authorized administrator of Digital Collections @ Suffolk. For more information, please contact [email protected]. SUFFOLK UNIVERSITY • BOSTON, MASSACHUSETTS THE SUFFOLK JOURNAL VOLUME 63, NUMBER 3 WWW.SUFFOLKJOURNAL.NE I WEDNESDAY • JANUARY 23, 2008 Downtown Crossing dorm becomes Expansion Update new home for Suffolk Students Christine Adams Journal Staff Ben Paulin and Suffolk University Continuing with Journal Staff will have on each other. the recent trend of campus Construction "The vitality the students expansion, Suffolk workers, city officials, and will bring means a lot [to University filed its institutional the Suffolk community the area]." master plan alike gathered on a chilly Nucci also said that 10 notification form with night to unveil Suffolk's West is a win-win for the city of Boston on Jan. newest dormitory, 10 the neighborhood, the 11. According to Gordon West, on Wednesday Jan. 9. university, and the city King, Senior Director of The glitzy socialites of Boston saying the new Facilities Planning and of the Suffolk community, residents will be "kicking Management, "The master whose guest list off a Renaissance in this plan calls for a physical included Mayor Thomas area." expansion of the University Menino, used the time to Maureen Wark, to accommodate chitchat with one an Director of Residence • more residents, academic other over hors d'oeuvres. -

Frances the Mute Storyline

Frances the mute storyline The next song L'via l'viaquez tells the story about Frances' sister L'via that hides from the church or whatever because she watched the priest. It's a concept album of sorts, but since nothing with the Mars Volta is ever simple, Frances the Mute isn't a concept easily explained. Not that the band won't try. Frances the Mute is the second studio album by American progressive rock band The Mars In the Q & Mojo Classic Special Edition Pink Floyd & The Story of Prog Rock, the album came #18 in its list of "40 Cosmic Rock Albums" and the. Frances The Mute is easily one of the best albums I have ever heard. as the album's somewhat impossible to follow story revolves around a. frances the mute interpretation whole album He doesn't believe Cassandra, so he plays the story out in his mind and realises that it wasn't true. kind of feels like the whole story needs to be reconsidered. Frances the Mute - stop set to: ( long iTunes version); Cygnus. Frances the Mute. Vismund Cygnus"-- which is said to tell the story of an HIV-positive male prostitute and drug addict born out of rape, but. the story of Frances The Mute - posted in The Mars Volta: i was wondering, since the topic is very close to my heart, if anyone can tell me more Amputechture Would Be So Much Better If - Page 6 - The Mars. The follow up album, Frances the Mute, is maybe about a mute named. Cerpin Taxt: Protagonist of the story in the Mars Volta album, De-loused in the. -

Psaudio Copper

Issue 128 JANUARY 11TH, 2021 Things change with time, as my very close friend likes to say. Here’s my New Year’s wish: that things change for the better in 2021. A new year brings new possibilities. We’re saddened by the loss of Gerry Marsden (78) of British Invasion legends Gerry and the Pacemakers. Their first single, “How Do You Do It,” hit number one on the British charts in 1963 – before the Beatles ever accomplished that feat – and they went on to have other smash records like “Don’t Let the Sun Catch You Crying,” “You’ll Never Walk Alone,” “I Like It” and “Ferry Cross the Mersey.” These songs are woven not just into the fabric of the 1960s, but our lives. In this issue: Anne E. Johnson covers the career of John Legend and rediscovers 17th century composer Marc-Antoine Charpentier. Larry Schenbeck gets soul and takes on “what if?” Ken Sander goes Stateside with The Stranglers. J.I. Agnew locks in his lockdown music system. Tom Gibbs looks at new releases from Kraftwerk, Julia Stone and Max Richter. Adrian Wu continues his series on testing in audio. John Seetoo mines a mind-boggling mic collection. Jay Jay French remembers Mountain’s Leslie West. Dan Schwartz contemplates his streaming royalties. Steven Bryan Bieler ponders the new year. Ray Chelstowski notes that January’s a slow month for concerts – with a few monumental exceptions. Stuart Marvin examines how musicians and their audiences are adapting to the pandemic. I reminisce about hanging out with Les Paul. We round out the issue with single-minded listening, the world’s coolest multi-room audio system, mixed media, and a girl on a mission. -

The Mars Volta Is Anybody There? Mp3, Flac, Wma

The Mars Volta Is Anybody There? mp3, flac, wma DOWNLOAD LINKS (Clickable) Genre: Rock Album: Is Anybody There? Country: US Released: 2009 Style: Experimental, Prog Rock MP3 version RAR size: 1618 mb FLAC version RAR size: 1366 mb WMA version RAR size: 1429 mb Rating: 4.2 Votes: 486 Other Formats: ADX DTS DXD AU AHX RA MP3 Tracklist A1 Roulette Dares 11:55 A2 Prelude To Cicatriz 2:27 A3 Cicatriz Esp Part 1 4:01 B Cicatriz Esp Part 2 18:22 C Drunkship 10:54 D1 Eunuch Provocateur (Demo) 6:08 D2 Inertiatic Esp (Demo) 6:14 Notes Housed in a black cardboard sleeve with folded cover.Plain black labels on LP1 and plain silver labels on LP2. Pressed on brown and dark blue marbled vinyl. All on 180 grams heavyweight vinyl. Recorded live at Lowlands, Holland on August 29, 2003. Two demos also available on this release. Barcode and Other Identifiers Matrix / Runout (Side A): LFS-78292003-A Matrix / Runout (Side B): LFS-78292003-B Matrix / Runout (Side C): LFS-78292003-C Matrix / Runout (Side D): LFS-78292003-D Other versions Category Artist Title (Format) Label Category Country Year Not On Label The Mars Is Anybody There? (2xLP, none (The Mars none US 2003 Volta Promo, Unofficial, Gre) Volta) The Mars Is Anybody There? (2xLP, none Not On Label none US 2009 Volta Unofficial) The Mars Is Anybody There? (2xLP, none Not On Label none US 2009 Volta Unofficial, Yel) Related Music albums to Is Anybody There? by The Mars Volta Monstromorgue / Cicatriz / Stomachal Corrosion - 3 Way Split Tape Mars Volta, The - The Widow Mars Volta - Octahedron The Mars Volta - Inertiatic ESP Mars Volta, The - Goliath Cicatriz - Colgado Por Ti The Mars Volta - de-loused in the comatorium Mars Volta, The - Televators. -

The Mars Volta (US)

2007-11-21 13:53 CET The Mars Volta (US) February 19 - Oslo, Sentrum February 20 - Stockholm, Cirkus If your inner "good music alarm" doesn't go off when you see the names Omar Rodriguez-Lopez and Cedric Bixler-Zavala, you are in serious need of a health check. These are the two brilliant men who make up The Mars Volta, perhaps the world's most creatively accomplished band. Rodriguez-Lopez writes the music and plays guitar, and Bixler-Zavala writes the lyrics and sings in this exceptional and idiosyncratic partnership. The band released its first EP in 2002, and since then it has done all the usual things successful bands do: touring, recording, and enduring the necessary evil of media contact. Unlike other projects, however, The Mars Volta has not been hollowed out. What sets Volta apart is conceptualization. Yes, you read that right. They make concept albums, just as all those slightly pretentious bands of the 1970s loved to do. The first full-length album was a tribute to the mentor of Bixler-Zavala's and Rodriguez-Lopez's youth in El Paso, Texas. Their hero Julio Venegas was a local artist who committed suicide in 1996. The album, titled "De-Loused In The Comatorium", tells of the fictional Cerpin Taxt, who falls into a coma after a failed suicide attempt and experiences miraculous events in his dreams. On album number two, the men continued to follow their recipe for success. "Frances the Mute", from 2005, tells the story of Frances and the protagonist Cygnus in a sci-fi-tinged narrative. The story is inspired by texts from a diary, but that was only the dust that sowed the seed of Bixler-Zavala's wild imaginings. -

Colocated with ACM Recsys 2011 Chicago, IL, USA October 23, 2011 Copyright C

Colocated with ACM RecSys 2011 Chicago, IL, USA October 23, 2011 Copyright ©. These are an open-access workshop proceedings distributed under the terms of the Creative Commons Attribution License 3.0 Unported1, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited. 1http://creativecommons.org/licenses/by/3.0/ Organizing Committee Amélie Anglade, SoundCloud Òscar Celma, Gracenote Ben Fields, Musicmetric Paul Lamere, The Echo Nest Brian McFee, Computer Audition Laboratory, University of California, San Diego Program Committee Claudio Baccigalupo, RED Interactive Agency Dominikus Baur, LMU Munich Mathieu Barthet, Queen Mary, University of London Thierry Bertin-Mahieux, Columbia University Sally Jo Cunningham, the University of Waikato Zeno Gantner, University of Hildesheim Fabien Gouyon, INESC Porto Peter Knees, Johannes Kepler University Daniel Lemire, Université du Québec Mark Levy, Last.fm Markus Schedl, Johannes Kepler University Alan Said, Technische Universität Berlin Doug Turnbull, Ithaca College Preface Welcome to WOMRAD, the Workshop on Music Recommendation and Discovery being held in conjunction with ACM RecSys. WOMRAD 2011 is being held on October 23, 2011, exactly 10 years after Steve Jobs introduced the very first iPod. Since then there has been an amazing transformation in the world of music. Portable listening devices have advanced from that original iPod that allowed you to carry a thousand songs in your pocket to the latest iPhone that can put millions of songs in your pocket via music subscription services such as Rdio, Spotify or Rhapsody. Ten years ago a typical personal music collection numbered around a thousand songs. -

Noctourniquet Album Downloaded the Mars Volta: Every Album Ranked from Worst to Best

noctourniquet album downloaded The Mars Volta: Every album ranked from worst to best. Although The Mars Volta disbanded some 14 years ago, their entire discography has been released in a lavish 18-LP vinyl boxset titled La Realidad de los Sueños, which chronicles the band’s six extraordinary albums in excruciating detail. And this is just the excuse we need to reappraise every album by the El Paso experimentalists. The prog rock band, led by vocalist Cedric Bixler-Zavala and guitarist Omar Rodríguez-López, disbanded in 2013, when Cedric and Omar reformed their previous band At The Drive-In. Rumours persist, however, that The Mars Volta will reform or already have, with Cedric alluding to it on Twitter in 2019. When pushed to elaborate, the vocalist suggested: “What it’s NOT going to be is your ‘fav member line-up’ playing their ‘classic records’ in full etc. Maybe we’ll play old shit, who knows how we feel.” That was two years and a global pandemic ago, though, so who knows whether their reconnection will still go beyond the caretaking of their reissues to new material and live dates. While we wait in hope, let’s return to the task of ranking the band’s albums and a piece of small print. While we’ve explored their qualities, we haven’t delved into the respective concepts, because a) they deserve expansive word counts all to themselves, b) they’re often impenetrable, and c) they don’t have a lasting role in how good the music is – who, for instance, declares: ‘I hate the album but love the concept?’ So with that clarified, let’s dive in… 6. -

Open Content Licensing

Open Content Licensing Open Content Licensing From Theory to Practice Edited by Lucie Guibault & Christina Angelopoulos Amsterdam University Press Cover design: Kok Korpershoek bno, Amsterdam Lay-out: JAPES, Amsterdam ISBN 978 90 8964 307 0 e-ISBN 978 90 4851 408 3 NUR 820 Creative Commons License CC BY NC (http://creativecommons.org/licenses/by-nc/3.0) L. Guibault & C. Angelopoulos / Amsterdam University Press, Amsterdam, 2011 Some rights reversed. Without limiting the rights under copyright reserved above, any part of this book may be reproduced, stored in or introduced into a retrieval system, or transmitted, in any form or by any means (electronic, mechanical, photocopying, recording or otherwise). Contents 1. Open Content Licensing: From Theory to Practice – An Introduction 7 Lucie Guibault, Institute for Information Law, University of Amsterdam 2. Towards a New Social Contract: Free-Licensing into the Knowledge Commons 21 Volker Grassmuck, Humboldt University Berlin and University of Sao Paulo 3. Is Open Content a Victim of its Own Success? Some Economic Thoughts on the Standardization of Licenses 51 Gerald Spindler and Philipp Zimbehl, University of Göttingen 4. (Re)introducing Formalities in Copyright as a Strategy for the Public Domain 75 Séverine Dusollier, Centre de Recherche Informatique et Droit, Université Notre-Dame de la Paix (Namur) 5. User-Related Assets and Drawbacks of Open Content Licensing 107 Till Kreutzer, Institute for legal questions on Free and Open Source Software (ifrOSS) 6. Owning the Right to Open Up Access to Scientific Publications 137 Lucie Guibault, Institute for Information Law, University of Amsterdam 7. Friends or Foes? Creative Commons, Freedom of Information Law and the European Union Framework for Reuse of Public Sector Information 169 Mireille van Eechoud, Institute for Information Law, University of Amsterdam 8. -

Album of the Week: Antemasque

Album Of The Week: Antemasque 2014 has been a weird year and some of the music that has been released so far definitely exemplifies the theme. There have been unlikely and brilliant collaborations, incredibly unique and experimental albums and stuff that just snuck up on you. Then there’s an album that very well could be the rock record of the year and it hasn’t even been properly released yet, but it was streaming on the interwebs beginning in July until the early fall. Behold Antemasque, a new project from the dynamic duo of At The Drive-In and The Mars Volta’s Omar Rodriguez-Lopez and Cedric Bixler-Zavala. It doesn’t hurt that this musical jewel also has Flea from The Red Hot Chili Peppers on bass and Dave Elitch on drums too (both members have previously collaborated with The Mars Volta in the past). The band’s sound harkens back to the punk influences of Rodriguez-Lopez and Bixler-Zavala while having a little more polish and a whole lot of power. Recorded at The Boat in Silverlake, Calif., which was previously owned by legendary hip-hop producers The Dust Brothers and now it’s Flea’s rhythmic science lab, the album is clear and forceful. It at times can seem to be very psychedelic and at other times it’s a prog-punk riot fest. Antemasque seems to be the lovechild of Rodriguez-Lopez and Bixler-Zavala’s previous two bands; you have the raw angst of At The Drive-In combining with the complex structures of The Mars Volta to make a purely original essence. -

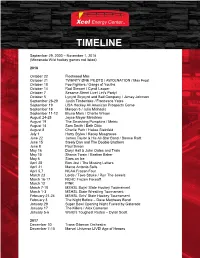

Xcel Energy Center Timeline

TIMELINE September 29, 2000 – November 1, 2018 (Minnesota Wild hockey games not listed) 2018 October 22 Fleetwood Mac October 21 TWENTY ØNE PILØTS / AWOLNATION / Max Frost October 18 Foo Fighters / Gangs of Youths October 14 Rod Stewart / Cyndi Lauper October 7 Sesame Street Live! Let’s Party! October 5 Lynyrd Skynyrd and Bad Company / Jamey Johnson September 28-29 Justin Timberlake / Francesco Yates September 19 USA Hockey All American Prospects Game September 18 Maroon 5 / Julia Michaels September 11-12 Bruno Mars / Charlie Wilson August 24-25 Joyce Meyer Ministries August 19 The Smashing Pumpkins / Metric August 14 Sam Smith / Beth Ditto August 8 Charlie Puth / Hailee Steinfeld July 1 Harry Styles / Kacey Musgraves June 22 James Taylor & His All-Star Band / Bonnie Raitt June 15 Steely Dan and The Doobie Brothers June 8 Paul Simon May 16 Daryl Hall & John Oates and Train May 15 Shania Twain / Bastian Baker May 6 Stars on Ice April 28 Bon Jovi / The Missing Letters April 21 Marco Antonio Solis April 5,7 NCAA Frozen Four March 23 Lorde / Tove Stryke / Run The Jewels March 16-17 NCHC Frozen Faceoff March 12 P!NK March 7-10 MSHSL Boys’ State Hockey Tournament March 1-3 MSHSL State Wrestling Tournament February 21-24 MSHSL Girls’ State Hockey Tournament February 3 The Night Before – Dave Matthews Band January 29 Super Bowl Opening Night Fueled by Gatorade January 17 The Killers / Alex Cameron January 5-6 World’s Toughest Rodeo – Dylan Scott 2017 December 30 Trans-Siberian Orchestra December 7-10 Marvel Universe LIVE! Age of Heroes 1 December 4 101.3 KDWB Jingle Ball - Fall Out Boy / Kesha / Charlie Puth / Halsey / Niall Horan / Camila Cabello / Liam Payne / Julia Michaels / Why Don't We December 3 U.S. -

The Mars Volta and the Symbolic Boundaries of Progressive Rock

GENRE AND/AS DISTINCTION: THE MARS VOLTA AND THE SYMBOLIC BOUNDARIES OF PROGRESSIVE ROCK R. Justin Frankeny A thesis submitted to the faculty at the University of North Carolina at Chapel Hill in partial fulfillment of the requirements for the degree of Master of Arts in Musicology in the School of Music. Chapel Hill 2020 Approved by: Aaron Harcus Michael A. Figueroa David F. Garcia © 2020 R. Justin Frankeny ALL RIGHTS RESERVED ABSTRACT R. Justin Frankeny: Genre and/as Distinction: The Mars Volta and the Symbolic Boundaries of Progressive Rock (Under the direction of Dr. Aaron Harcus) In the early 2000s, The Mars Volta’s popularity among prog(ressive) rock fandom was, in many ways, a conundrum. Unlike 1970s prog rock that drew heavily on Western classical music, TMV members Omar Rodriguez-Lopez and Cedric Bixler-Zavala routinely insisted on the integral importance of salsa music to their style and asserted an ambivalent relationship to classic prog rock. This thesis builds on existing scholarship on cultural omnivorousness to assert that an increase in omnivorous musical tastes since prog rock’s inception in the 1970s not only explains The Mars Volta’s affiliation with the genre in the early 2000s, but also explains their mixed reception within a divided prog rock fanbase. Contrary to existing scholarship that suggests that the omnivore had largely supplanted the highbrow snob, this study suggests that the snob persisted in the prog rock fanbase during progressive rock’s resurgence (ca. 1997-2012), as distinguished by their assertion of the superiority of prog rock through discourses of musical complexity adapted from classical music.