Real-Time Static Gesture Detection Using Machine Learning By

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Learn to Use Signpuddle 1.0 on The

SignPuddle Online™ www.SignBank.org/signpuddle Some Dictionaries: American Sign Language, Arabic Sign Languages, Brazilian Sign Language, British Sign Language, Colombian Sign Language, Czech Sign Language, Danish Sign Language, Finnish Sign Language, Flemish Sign Language, French Sign Language, German Sign Language, Greek Sign Language, International Sign Languages, Irish Sign Language, Italian Sign Language, Japanese Sign Language, Maltese Sign Language, Netherlands Sign Language, Nicaraguan Sign Language, Northern Ireland Sign Language, Norwegian Sign Language, Polish Sign Language, Quebec Sign Language, Spanish Sign Language, Swiss Sign Languages, Taiwan Sign Language ...and others... Come Splash in a Sign Puddle! 1. FREE on the web! 2. Search Sign Language Dictionaries. 3. Create your own signs and add them. 4. Send email in SignWriting®. 5. Translate words to SignWriting. 6. Create documents with SignWriting. 7. Have fun sharing signs on the internet! http://www.SignBank.org/signpuddle Search by Words 1. Click on the icon: Search by Words 2. In the Search field: Type a word or a letter. 3. Press the Search button. 4. All the signs that use that word will list for you in SignWriting. 5. You can then copy the sign, or drag and drop it, into other documents. http://www.SignBank.org/signpuddle Search by Signs 1. Click on the icon: Search by Signs 2. In the Search field: Type a word or a letter. 3. Press the Search button. 4. The signs will list in small size. 5. Click on the small sign you want, and a larger version will appear... http://www.SignBank.org/signpuddle Search by Symbols 1. -

Sign Language Glove

Prototyping a Portable, Affordable Sign Language Glove A Major Qualifying Project Submitted to the Faculty of Worcester Polytechnic Institute In partial fulfillment of the requirements for the Degree of Bachelor of Science Electric and Computer Engineering By Princesa Cloutier, Alex Helderman, Rigen Mehilli Date: April 27, 2016 Project Advisors: Professor Alexander Wyglinski, Advisor; Professor Alexander Emanuel, Advisor This report represents work of WPI undergraduate students submitted to the faculty as evidence of a degree requirement. WPI routinely publishes these reports on its web site without editorial or peer review. For more information about the projects program at WPI, see http://www.wpi.edu/Academics/Projects. Abstract Communication between speakers and non-speakers of American Sign Language (ASL) can be problematic, inconvenient, and expensive. This project attempts to bridge the communication gap by designing a portable glove that captures the user’s ASL gestures and outputs the translated text on a smartphone. The glove is equipped with flex sensors, contact sensors, and a gyroscope to measure the flexion of the fingers, the contact between fingers, and the rotation of the hand. The glove’s Arduino UNO microcontroller analyzes the sensor readings to identify the gesture from a library of learned gestures. The Bluetooth module transmits the gesture to a smartphone. Using this device, one day speakers of ASL may be able to communicate with others in an affordable and convenient way. Acknowledgements Without the guidance and motivation of several groups of people, this project would not have achieved as much as it did. -

Top 300 Masters 2020

TOP 300 MASTERS 2020 About Broadcom MASTERS Broadcom MASTERS® (Math, Applied Science, Technology and Engineering for Rising Stars), a program of Society for Science & the Public, is the premier middle school science and engineering fair competition, inspiring the next generation of scientists, engineers and innovators who will solve the grand challenges of the 21st century and beyond. We believe middle school is a critical time when young people identify their personal passion, and if they discover an interest in STEM, they can be inspired to follow their passion by taking STEM courses in high school. Broadcom MASTERS is the only middle school STEM competition that leverages Society-affiliated science fairs as a critical component of the STEM talent pipeline. In 2020, all 6th, 7th, and 8th grade students around the country who were registered for their local or state Broadcom MASTERS affiliated fair were eligible to compete. After submitting the online application, the Top 300 MASTERS are selected by a panel of scientists, engineers, and educators from around the nation. The Top 300 MASTERS are honored for their work with a $125 cash prize, through the Society’s partnership with the U.S. Department of Defense as a member of the Defense STEM Education Consortium (DSEC). Top 300 MASTERS also receive a prize package that includes an award ribbon, a Top 300 MASTERS certificate of accomplishment, a Broadcom MASTERS backpack, a Broadcom MASTERS decal, a one-year family digital subscription to Science News magazine, an Inventor's Notebook, courtesy of The Lemelson Foundation, a one-year subscription to Wolfram Mathematica software, courtesy of Wolfram Research, and a special prize from Jeff Glassman, CEO of Covington Capital Management. -

A Message from the President

Bulletin Toni Ziegler - Executive Secretary Volume 27, Number 1 March 2003 A Message from the President... “Now, when they come down to Canada Scarcely ‘bout half a year. .” Bob Dylan, “Canadee-i-o”, 1992 Annual Meeting in Calgary, August 2003 As usual, Dylan is right on the mark with his lyrics, since we are now fast approaching the 6-month mark to our annual meeting in Calgary, Alberta. By the time you receive this newsletter, abstracts will have been received and the scientific review will be underway by Marilyn Norconk and the rest of the program committee. This committee has already guaranteed a scientifically stimulating conference by arranging for invited presentations by Suzette Tardif, Linda Fedigan, Amy Vedder, and the recipient of the 2002 Distinguished Primatologist Award, Andy Hendrickx. The late meeting date (31 July – 2 August 2003) presumably allowed each of us to collect that last bit of crucial data needed for our abstracts! I look forward to hearing from all of you about the latest advances in primatology in disciplines ranging from anthropology to zoology, and having my students tell you about our recent research endeavors. ASP President Jeff French and Outstanding Poster Presentation winner Michael Rukstalis at the 2002 Oklahoma meetings World Politics and Primatology As I write these words in late February, the world faces an uncertain period where the risk of global instability is higher than at any other time in my 48-year lifetime. US and allied troops are gathering around Iraq, North Korea is firing missiles into the Sea of Japan, and Pakistan and India are once again restless on their shared and disputed borders. -

A Sketch of the Linguistic Geography of Signed Languages in the Caribbean

OCCASIONAL PAPER No. 38 A SKETCH OF THE LINGUISTIC GEOGRAPHY OF SIGNED LANGUAGES IN THE CARIBBEAN Ben Braithwaite The University of the West Indies, St. Augustine June 2017 SCL OCCASIONAL PAPERS PAPER NUMBER 38—JUNE 2017 Edited by Ronald Kephart (2014–2016) and Joseph T. Farquharson (2016–2018), SCL Publications Officers Copy editing by Sally J. Delgado and Ronald Kephart Proofreading by Paulson Skerritt and Sulare Telford EDITORIAL BOARD Joseph T. Farquharson The University of the West Indies, Mona (Chair) Janet L. Donnelly College of the Bahamas David Frank SIL International Ronald Kephart University of North Florida Salikoko S. Mufwene University of Chicago Ian E. Robertson The University of the West Indies, St. Augustine Geraldine Skeete The University of the West Indies, St. Augustine Donald C. Winford Ohio State University PUBLISHED BY THE SOCIETY FOR CARIBBEAN LINGUISTICS (SCL) c/o Department of Language, Linguistics and Philosophy, The University of the West Indies, Mona campus, Kingston 7, Jamaica. <www.scl-online.net> © 2017 Ben Braithwaite. All rights reserved. Not to be reproduced in any form without the written permission of the author. ISSN 1726–2496 A Sketch of the Linguistic Geography of Signed Languages in the Caribbean Ben Braithwaite The University of the West Indies, St. Augustine 1. Introduction “The Caribbean… is the location of almost every type of linguistic phenomenon, and of every type of language situation. For example, trade and contact jargons, creole languages and dialects, ethnic vernaculars, and regional and nonstandard dialects are all spoken. There are also ancestral languages used for religious purposes…, regional standards, and international standards. -

Typology of Signed Languages: Differentiation Through Kinship Terminology Erin Wilkinson

University of New Mexico UNM Digital Repository Linguistics ETDs Electronic Theses and Dissertations 7-1-2009 Typology of Signed Languages: Differentiation through Kinship Terminology Erin Wilkinson Follow this and additional works at: https://digitalrepository.unm.edu/ling_etds Recommended Citation Wilkinson, Erin. "Typology of Signed Languages: Differentiation through Kinship Terminology." (2009). https://digitalrepository.unm.edu/ling_etds/40 This Dissertation is brought to you for free and open access by the Electronic Theses and Dissertations at UNM Digital Repository. It has been accepted for inclusion in Linguistics ETDs by an authorized administrator of UNM Digital Repository. For more information, please contact [email protected]. TYPOLOGY OF SIGNED LANGUAGES: DIFFERENTIATION THROUGH KINSHIP TERMINOLOGY BY ERIN LAINE WILKINSON B.A., Language Studies, Wellesley College, 1999 M.A., Linguistics, Gallaudet University, 2001 DISSERTATION Submitted in Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy Linguistics The University of New Mexico Albuquerque, New Mexico August, 2009 ©2009, Erin Laine Wilkinson ALL RIGHTS RESERVED iii DEDICATION To my mother iv ACKNOWLEDGMENTS Many thanks to Barbara Pennacchi for kick starting me on my dissertation by giving me a room at her house, cooking me dinner, and making Italian coffee in Rome during November 2007. Your endless support, patience, and thoughtful discussions are gratefully taken into my heart, and I truly appreciate what you have done for me. I heartily acknowledge Dr. William Croft, my advisor, for continuing to encourage me through the long number of months writing and rewriting these chapters. -

Detection of Finger Motion Using Flex Sensor for Assisting Speech Impaired

ISSN (Online): 2319-8753 ISSN (Print): 2347-6710 International Journal of Innovative Research in Science, Engineering and Technology (A High Impact Factor, Monthly, Peer Reviewed Journal) Visit: www.ijirset.com Vol. 6, Issue 10, October 2017 Detection of Finger Motion using Flex Sensor for Assisting Speech Impaired Heena Joshi1, Shweta Bhati2, Komal Sharma3, Vandana Matai4 Assistant Professor, Department of Electronics and Communication Engineering, Jodhpur Institute of Engineering and Technology, Jodhpur, Rajasthan, India1,2,3,4 ABSTRACT: This paper is mainly focused on aiding the speech impaired and paralysed patients. Our work helps in improving the communication with the deaf and dumb using flex sensor technology. A system is developed that can translate different signs including Indian sign language to corresponding text. About 9 billion people in the world come into this category which is quite a large number to be ignored. Flex sensors placed on hand gloves pick up gestures and translate that to text data with the help of Analog to Digital converter and microcontrollers. The main aim of the system is to develop a cheap glove that disabled people can use for communication. When the gesture of an alphabet is made, the respective alphabet is shown on the LCD. Sign language is prevalent amongst the deaf, dumb or blind individuals. With the conventional sign language not acknowledged to the signers, it affects their interpersonal relationships in the society in an adverse manner. A sophisticated method is required as deaf-dumb people use sign language for their communication which is difficult to understand by the normal people. KEYWORDS: Sensor, Gesture, LCD I. -

Sign Language Acronyms

Sign language acronyms Throughout the Manual, the following abbreviations for sign languages are used (some of which are acronyms based on the name of the sign language used in the respective countries): ABSL Al Sayyid Bedouin Sign Language AdaSL Adamorobe Sign Language (Ghana) ASL American Sign Language Auslan Australian Sign Language BSL British Sign Language CSL Chinese Sign Language DGS German Sign Language (Deutsche Gebärdensprache) DSGS Swiss-German Sign Language (Deutsch-Schweizerische Gebärdensprache) DTS Danish Sign Language (Dansk Tegnsprog) FinSL Finnish Sign Language GSL Greek Sign Language HKSL Hong Kong Sign Language HZJ Croatian Sign Language (Hrvatski Znakovni Jezik) IPSL Indopakistani Sign Language Inuit SL Inuit Sign Language (Canada) Irish SL Irish Sign Language Israeli SL Israeli Sign Language ÍTM Icelandic Sign Language (Íslenskt táknmál) KK Sign Language of Desa Kolok, Bali (Kata Kolok) KSL Korean Sign Language LIS Italian Sign Language (Lingua dei Segni Italiana) LIU Jordanian Sign Language (Lughat il-Ishaara il-Urdunia) LSA Argentine Sign Language (Lengua de Señas Argentina) Libras Brazilian Sign Language (Língua de Sinais Brasileira) LSC Catalan Sign Language (Llengua de Signes Catalana) LSCol Colombian Sign Language (Lengua de Señas Colombiana) LSE Spanish Sign Language (Lengua de Signos Española) LSF French Sign Language (Langue des Signes Française) LSQ Quebec Sign Language (Langue des Signes Québécoise) NGT Sign Language of the Netherlands (Nederlandse Gebarentaal) NicSL Nicaraguan Sign Language NS Japanese Sign Language (Nihon Syuwa) NSL Norwegian Sign Language NZSL New Zealand Sign Language DOI 10.1515/9781501511806-003, © 2017 Josep Quer, Carlo Cecchetto, Caterina Donati, Carlo Geraci, Meltem Kelepir, Roland Pfau, and Markus Steinbach, published by De Gruyter. -

Other-Initiated Repair in Argentine Sign Language

Open Linguistics 2016; 2: 1–34 Research Article Open Access Elizabeth Manrique* Other-initiated repair in Argentine Sign Language DOI 10.1515/opli-2016-0001 Received September 29, 2014; accepted October 28, 2015 Abstract: Other-initiated repair is an essential interactional practice to secure mutual understanding in everyday interaction. This article presents evidence from a large conversational corpus of a sign language, showing that signers of Argentine Sign Language (Lengua de Señas Argentina or ‘LSA’), like users of spoken languages, use a systematic set of linguistic formats and practices to indicate troubles of signing, seeing and understanding. The general aim of this article is to provide a general overview of the different visual-gestural linguistic patterns of other-initiated repair sequences in LSA. It also describes the quantitative distribution of other-initiated repair formats based on a collection of 213 cases. It describes the multimodal components of open and restricted types of repair initiators, and reports a previously undescribed implicit practice to initiate repair in LSA in comparison to explicitly produced formats. Part of a special issue presenting repair systems across a range of languages, this article contributes to a better understanding of the phenomenon of other-initiated repair in terms of visual and gestural practices in human interaction in both signed and spoken languages. Keywords: other-initiated repair; conversation analysis; sign language The other-initiated repair system acts as the main guarantee of intersubjective understanding (Schegloff, 1992; Schegloff et al., 1977) playing a crucial role in any kind of human interaction, regardless of the semiotic system employed (Enfield, 2009a). -

Watchtower Publications List

WATCHTOWER PUBLICATIONS LIST January 2011 This booklet contains a list of items currently available in the United States. © 2011 WATCH TOWER BIBLE AND TRACT SOCIETY OF PENNSYLVANIA All Rights Reserved Watchtower Publications List English (S-15-E Us) Made in the United States INTRODUCTION This Watchtower Publications List (S-15) is a listing of publications and languages available to congregations in your branch territory. After each monthly announcement to all congregations of new publications available is received, please feel free to add the new publications to your list. This will help you to know quickly and easily what is currently available. Each item listed is preceded by a four-digit item number. To expedite and improve the handling of each congregation’s monthly literature request, please use the four-digit item number when requesting literature using the jw.org Web site or listing items on page 4 of the Literature Request Form (S-14). Special-request items, which are marked by an asterisk (*), should only be submitted when specifically requested by a publisher. Special-request items should not be stocked in anticipation of requests. Languages are listed alphabetically in the Watchtower Publications List, with the language that it is being generated in at the beginning. Items in the Watchtower Publications List are divided into appropriate categories for each language. Within each category, items are alphabetized by the first word in the title of the publication. The categories are: Annual Items Dramas Calendars Empty -

[.35 **Natural Language Processing Class Here Computational Linguistics See Manual at 006.35 Vs

006 Dewey Decimal Classification 006 [.35 **Natural language processing Class here computational linguistics See Manual at 006.35 vs. 410.285 *Use notation 019 from Table 1 as modified at 004.019 400 Dewey Decimal Classification 400 Language [400 *‡Language Class here interdisciplinary works on language and literature For literature, see 800; for rhetoric, see 808. For the language of a specific discipline or subject, see the discipline or subject, plus notation 014 from Table 1, e.g., language of science 501.4 (Option A: To give local emphasis or a shorter number to a specific language, class in 410, where full instructions appear (Option B: To give local emphasis or a shorter number to a specific language, place before 420 through use of a letter or other symbol. Full instructions appear under 420–490) SUMMARY [401–409 Standard subdivisions and bilingualism [410 Linguistics [420 English and Old English (Anglo-Saxon) [430 German and related languages [440 French and related Romance languages [450 Italian, Dalmatian, Romanian, Rhaetian, Sardinian, Corsican [460 Spanish, Portuguese, Galician [470 Latin and related Italic languages [480 Classical Greek and related Hellenic languages [490 Other languages [401 *‡Philosophy and theory See Manual at 401 vs. 121.68, 149.94, 410.1 [.3 *‡International languages Class here universal languages; general -

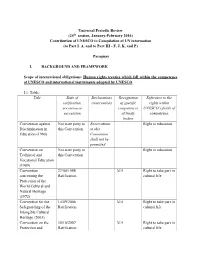

Universal Periodic Reporting

Universal Periodic Review (24th session, January-February 2016) Contribution of UNESCO to Compilation of UN information (to Part I. A. and to Part III - F, J, K, and P) Paraguay I. BACKGROUND AND FRAMEWORK Scope of international obligations: Human rights treaties which fall within the competence of UNESCO and international instruments adopted by UNESCO I.1. Table (columns: Title; Date of ratification, accession or succession; Declarations/reservations; Recognition of specific competences of treaty bodies; Reference to the rights within UNESCO’s fields of competence): Convention against Discrimination in Education (1960): Not state party to this Convention; Reservations to this Convention shall not be permitted; Right to education. Convention on Technical and Vocational Education (1989): Not state party to this Convention; Right to education. Convention concerning the Protection of the World Cultural and Natural Heritage (1972): 27/04/1988 Ratification; N/A; Right to take part in cultural life. Convention for the Safeguarding of the Intangible Cultural Heritage (2003): 14/09/2006 Ratification; N/A; Right to take part in cultural life. Convention on the Protection and Promotion of the Diversity of Cultural Expressions (2005): 30/10/2007 Ratification; N/A; Right to take part in cultural life. II. Input to Part III. Implementation of international human rights obligations, taking into account applicable international humanitarian law to items F, J, K, and P Right to education 1. NORMATIVE FRAMEWORK 1.1. Constitutional Framework: 1. The right to education is provided in the Constitution adopted on 20 June 1992. Article 73 of the Constitution defines the right to education as the “right to a comprehensive, permanent educational system, conceived as a system and a process to be realized within the cultural context of the community”.