Statistics: Challenges and Opportunities for the Twenty-First Century

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Epidemiology and Public Health 2006–2007

Bulletin of Yale University, Series 102, Number 11, August 25, 2006. Epidemiology and Public Health 2006–2007. The University is committed to basing judgments concerning the admission, education, and employment of individuals upon their qualifications and abilities and affirmatively seeks to attract to its faculty, staff, and student body qualified persons of diverse backgrounds. In accordance with this policy and as delineated by federal and Connecticut law, Yale does not discriminate in admissions, educational programs, or employment against any individual on account of that individual’s sex, race, color, religion, age, disability, status as a special disabled veteran, veteran of the Vietnam era, or other covered veteran, or national or ethnic origin; nor does Yale discriminate on the basis of sexual orientation. University policy is committed to affirmative action under law in employment of women, minority group members, individuals with disabilities, special disabled veterans, veterans of the Vietnam era, and other covered veterans. Inquiries concerning these policies may be referred to Valerie O. Bulletin of Yale University. Postmaster: Send address changes to Bulletin of Yale University, PO Box 208227, New Haven CT 06520-8227. PO Box 208230, New Haven CT 06520-8230. Periodicals postage paid at New Haven, Connecticut. Issued seventeen times a year: one time a year in May, November, and December; two times a year in June; three times a year in July and September; six times a year in August. Managing Editor: Linda Koch Lorimer -

Mathematical Economics - B.S

College of Arts and Sciences. Mathematical Economics - B.S. The mathematical economics major offers students a degree program that combines mathematics, statistics, and economics. In today’s increasingly complicated international business world, a strong preparation in the fundamentals of both economics and mathematics is crucial to success. This degree program is designed to prepare a student to go directly into the business world with skills that are in high demand, or to go on to graduate study in economics or finance. A degree in mathematical economics would, for example, prepare a student for the beginning of a career in operations research or actuarial science. College Requirements (hours): I. Foreign Language (placement exam recommended): 0-14; II. Disciplinary Requirements: a. Natural Science: 3; b. Social Science (completed by Major Requirements); c. Humanities: 3; III. Laboratory or Field Work: 1; IV. Electives: 6. College Requirement hours: 13-27. 120 hours (minimum). Any student earning a Bachelor of Science (BS) degree must complete a minimum of 60 hours in natural, -

Priorities for the National Science Foundation

December 2020. Priorities for the National Science Foundation. Founded in 1888, the American Mathematical Society (AMS) is dedicated to advancing the interests of mathematical research and scholarship and connecting the diverse global mathematical community. We do this through our book and journal publications, meetings and conferences, database of research publications that goes back to the early 1800s, professional services, advocacy, and awareness programs. The AMS has 30,000 individual members worldwide and supports mathematical scientists at every career stage. The AMS advocates for increased and sustained funding for the National Science Foundation (NSF). The NSF supports more fundamental research in the mathematical sciences (and done at colleges and universities) than any other federal agency. A significant increase in Congressional appropriations would help address the effects of years of high-quality grant proposals that go unfunded due to limited funding. Those unmet needs continue. A 2019 National Science Board report stated that in fiscal year 2018, “approximately $3.4 billion was requested for declined proposals that were rated Very Good or higher in the merit review process.” This accounts for about 5440 declined proposals at the NSF. The U.S. is leaving potentially transformative scientific research unfunded, while other countries are making significant investments. In the next section we give an overview of our two priorities. The second and final section offers a discussion of existing funding mechanisms for mathematicians. Pull quote: The applications of advances in theoretical science, including theory of mathematics, occur on a time scale that means the investment is often hard to justify in the short run. -

Mathematical and Physical Sciences

Overview of the Directorate for Mathematical and Physical Sciences. NSF Grants Conference, hosted by George Washington University, Arlington, VA, October 6-7, 2014. Bogdan Mihaila, [email protected], Program Director, Division of Physics. US Government. NSF Vision and Goals. Vision » A Nation that creates and exploits new concepts in science and engineering and provides global leadership in research and education. Mission » To promote the progress of science; to advance the national health, prosperity, and welfare; to secure the national defense. Strategic Goals » Transform the frontiers of science and engineering » Stimulate innovation and address societal needs through research & education » Excel as a Federal Science Agency. NSF in a Nutshell: Independent agency to support basic research & education. Grant mechanism in two forms: » Unsolicited, curiosity driven (the majority of the $) » Solicited, more focused. All fields of science/engineering. Merit review: Intellectual Merit & Broader Impacts. Discipline-based structure, some cross-disciplinary. Support large facilities. NSF Organization Chart (figure): National Science Board (NSB); Director and Deputy Director; Office of Diversity & Inclusion (ODI); Office of the General Counsel (OGC); Office of the NSB; Office of Inspector General (OIG); Office of International & Integrative Activities (OIIA); Office of Legislative & Public Affairs (OLPA); budget figures printed on the chart: $482M, $4.3M, $14.2M. Directorates: Biological Sciences (BIO); Computer & Information Science & Engineering (CISE); Engineering (ENG); Geosciences (GEO); Mathematical & Physical Sciences (MPS) -

Introduction to Statistically Designed Experiments, NREL

Introduction to Statistically Designed Experiments. Mike Heaney ([email protected]), ASQ Denver Section 1300 Monthly Membership Meeting, September 13, 2016, Englewood, Colorado. NREL/PR-5500-66898. Objectives: give some basic background information on statistically designed experiments (often referred to as design of experiments, DOE or DOX, or experimental design), and demonstrate the advantages of using statistically designed experiments. A Few Thoughts: Many companies and organizations do experiments for process improvement, product development, and marketing strategies. We need to plan our experiments as efficiently and effectively as possible. Statistically designed experiments have a proven track record (Mullin 2013 in Chemical & Engineering News): conclusions supported by statistical tests, and a substantial reduction in total experiments. Why are we still planning experiments like it’s the 19th century? Outline: Research Problems; The Linear Model; Key Types of Designs (Full Factorial, Fractional Factorial, Response Surface); Sequential Approach; Summary. Research Problems: Is a dependent variable (Y) affected by multiple independent variables (Xs)? Objective: increase bearing lifetime (Hellstrand 1989). Y: bearing lifetime (hours); X1: inner ring heat treatment; X2: outer ring osculation (ratio between ball diameter and outer ring raceway radius); X3: cage design. [Figure: bearing diagram labeling the outer ring, ball, cage, and inner ring.] Objective: hydrogen cyanide (HCN) removal in diesel exhaust gas (Zhao et al. 2006). Y: HCN conversion; X1: Propene -
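The full factorial design named in the outline can be illustrated with the three bearing factors from the excerpt. This is a minimal sketch, not the presenter's procedure: the excerpt does not say how many levels each factor had, so two coded levels (-1 and +1) per factor are an assumption, and the function and variable names are hypothetical.

```python
from itertools import product

# Factor names follow the bearing example (X1, X2, X3 in the excerpt).
# ASSUMPTION: each factor is run at two coded levels, -1 (low) and +1 (high).
FACTORS = ["inner_ring_heat_treatment", "outer_ring_osculation", "cage_design"]


def full_factorial(names, levels=(-1, 1)):
    """Return one dict per run, covering every combination of factor levels."""
    return [dict(zip(names, combo)) for combo in product(levels, repeat=len(names))]


runs = full_factorial(FACTORS)

for number, run in enumerate(runs, start=1):
    print(number, run)
```

With three two-level factors this enumerates 2 x 2 x 2 = 8 runs, and every factor appears at each level in exactly half of them, which is what lets the effect of each factor be estimated from the same set of experiments.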

Appendix A: Mathematical Formulas

Appendix A: Mathematical Formulas. Logarithms: $\ln ab = \ln a + \ln b$; $\ln a/b = \ln a - \ln b$; $\ln a^b = b \ln a$. Geometric Series: If $|r| < 1$, we find that $\sum_{k=0}^{\infty} a r^k = \frac{a}{1-r}$; $\sum_{k=0}^{\infty} a k r^k = \frac{a r}{(1-r)^2}$. Limits: L'Hospital's rule. Suppose that $\lim_{x \to x_0} f(x) = \lim_{x \to x_0} g(x) = 0$ or that $\lim_{x \to x_0} f(x) = \lim_{x \to x_0} g(x) = \pm\infty$. Then, under some conditions, we can write that $\lim_{x \to x_0} \frac{f(x)}{g(x)} = \lim_{x \to x_0} \frac{f'(x)}{g'(x)}$. If the functions $f'(x)$ and $g'(x)$ satisfy the same conditions as $f(x)$ and $g(x)$, we can repeat the process. Moreover, the constant $x_0$ may be equal to $\pm\infty$. Derivatives: Derivative of a quotient: $\frac{d}{dx} \frac{f(x)}{g(x)} = \frac{g(x) f'(x) - f(x) g'(x)}{g^2(x)}$ if $g(x) \neq 0$. Remark. This formula can also be obtained by differentiating the product $f(x)h(x)$, where $h(x) := 1/g(x)$. Chain rule. If $u = g(x)$, then we have: $\frac{d}{dx} f(u) = \frac{d}{du} f(u) \cdot \frac{du}{dx} = f'(u) g'(x) = f'[g(x)] g'(x)$. For example, if $u = x^2$, we calculate $\frac{d}{dx} \exp(u^2) = \exp(u^2) \, 2u \cdot 2x = 4x^3 \exp(x^4)$. Integrals: Integration by parts: $\int u \, dv = uv - \int v \, du$. Integration by substitution. If the inverse function $g^{-1}$ exists, then we can write that $\int_a^b f(x) \, dx = \int_c^d f[g(y)] g'(y) \, dy$, where $a = g(c) \Leftrightarrow c = g^{-1}(a)$ and $b = g(d) \Leftrightarrow d = g^{-1}(b)$. For example, with $y = x^{1/2}$, $\int_0^4 e^{x^{1/2}} dx = \int_0^2 2y e^y \, dy = 2y e^y \big|_0^2 - 2 \int_0^2 e^y \, dy = 2e^2 + 2$. -
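The remark on the derivative of a quotient can be checked in a few lines. The following is a sketch of that step using only the product rule and the chain rule, with $h(x) := 1/g(x)$ as in the remark and assuming $g(x) \neq 0$:

$$
\frac{d}{dx}\bigl[f(x)h(x)\bigr] = f'(x)h(x) + f(x)h'(x), \qquad h'(x) = -\frac{g'(x)}{g^2(x)},
$$

$$
\frac{d}{dx}\frac{f(x)}{g(x)} = \frac{f'(x)}{g(x)} - \frac{f(x)g'(x)}{g^2(x)} = \frac{g(x)f'(x) - f(x)g'(x)}{g^2(x)}.
$$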

Doctoral Degrees Conferred 2008–2009

EXCERPTS FROM 2009 SECOND REPORT ANNUAL SURVEY OF THE MATHEMATICAL SCIENCES (AMS-ASA-IMS-MAA-SIAM) Matteson, David, Statistical inference for multivariate Doctoral Degrees nonlinear time series. Rosenthal, Dale, W. R., Trade classification and nearly- Conferred 2008–2009 gamma random variables. Song, Minsun, Restricted parameter space models for Supplementary List testing gene-gene interactions. The following list supplements the list of thesis titles published in Zheng, Xinghua, Critical branching random walks and the February 2010 Notices, pages 281–301. spatial epidemic. Zibman, Chava, Adjusting for confounding in a semi- ALABAMA parametric Bayesian model of short term effects of air pollution on respiratory health. University of Alabama (4) INFORMATION SYSTEMS, STATISTICS AND MANAGEMENT IOWA SCIENCE University of Iowa (4) Alhammadi, Yousuf, Neural network control charts for STATISTICS AND ACTUARIAL SCIENCE Poisson processes. Ahn, Kwang Woo, Topics in statistical epidemiology. Anderson, Billie, Study of reject inference techniques. Devasher, Michael, An evaluation of optimal experimental Fang, Xiangming, Generalized additive models with designs subject to parameter uncertainty for properties correlated data. of compartmental models used in individual Hao, Xuemiao, Asymptotic tail probabilities in insurance pharmacokinetic studies. and finance. Song, Jung-Eun, Bayesian linear regression via partition. CALIFORNIA KENTUCKY California Institute of Technology (1) University of Louisville (1) BIOINFORMATICS AND BIOSTATISTICS CONTROL AND DYNAMICAL SYSTEMS Lan, Ling, Inference for multistate models. Shi, Ling, Resource optimization for networked estimator with guaranteed estimation quality. LOUISIANA University of California, Riverside (3) Tulane University (1) MATHEMATICS BIOSTATISTICS Alvarez, Vicente, A numerical computation of eigenfunctions for the Kusuoka laplacian on the Yi, Yeonjoo, Two part longitudinal models of zero heavy Sierpinski gasket. -

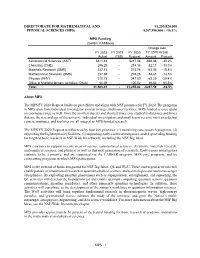

Mathematical and Physical Sciences

DIRECTORATE FOR MATHEMATICAL AND PHYSICAL SCIENCES (MPS): $1,255,820,000 (-$247,590,000 / -16.5%)

MPS Funding (Dollars in Millions)

| | FY 2018 Actual | FY 2019 (TBD) | FY 2020 Request | Change over FY 2018 Actual: Amount | Change over FY 2018 Actual: Percent |
|---|---|---|---|---|---|
| Astronomical Sciences (AST) | $311.16 | - | $217.08 | -$94.08 | -30.2% |
| Chemistry (CHE) | 246.29 | - | 214.18 | -32.11 | -13.0% |
| Materials Research (DMR) | 337.14 | - | 273.78 | -63.36 | -18.8% |
| Mathematical Sciences (DMS) | 237.69 | - | 203.26 | -34.43 | -14.5% |
| Physics (PHY) | 310.75 | - | 247.50 | -63.25 | -20.4% |
| Office of Multidisciplinary Activities (OMA) | 60.39 | - | 100.02 | 39.63 | 65.6% |
| Total | $1,503.41 | - | $1,255.82 | -$247.59 | -16.5% |

About MPS. The MPS FY 2020 Request builds on past efforts and aligns with NSF priorities for FY 2020. The programs in MPS span from individual investigator awards to large, multi-user facilities. MPS-funded science spans an enormous range as well: from the smallest objects and shortest times ever studied to distances and times that are the size and age of the universe. Individual investigators and small teams receive most awards, but centers, institutes, and facilities are all integral to MPS-funded research. The MPS FY 2020 Request is influenced by four key priorities: (1) sustaining core research programs, (2) supporting the highest priority facilities, (3) supporting early-career investigators, and (4) providing funding for targeted basic research in NSF-Wide Investments, including the NSF Big Ideas. MPS continues to support its core areas of science (astronomical sciences, chemistry, materials research, mathematical sciences, and physics) as well as the next generation of scientists. -
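The Amount and Percent change figures in the MPS funding excerpt follow from the FY 2018 Actual and FY 2020 Request figures. The short sketch below recomputes them; the dollar figures are copied from the excerpt, while the dictionary layout and the function name are illustrative choices rather than anything taken from the budget document.

```python
# (FY 2018 Actual, FY 2020 Request) in millions of dollars, copied from the excerpt.
FUNDING = {
    "AST": (311.16, 217.08),
    "CHE": (246.29, 214.18),
    "DMR": (337.14, 273.78),
    "DMS": (237.69, 203.26),
    "PHY": (310.75, 247.50),
    "OMA": (60.39, 100.02),
    "Total": (1503.41, 1255.82),
}


def change_over_actual(actual, request):
    """Return (amount, percent) change of the request relative to the actual."""
    amount = round(request - actual, 2)
    percent = round(100 * (request - actual) / actual, 1)
    return amount, percent


changes = {name: change_over_actual(*pair) for name, pair in FUNDING.items()}

for name, (amount, percent) in changes.items():
    print(f"{name:5s} {amount:+9.2f} {percent:+6.1f}%")
```

The percent change is taken relative to FY 2018 Actual, which is why the increase for OMA (from 60.39 to 100.02) shows as a positive 65.6% while every division shows a decrease.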

Brochure on Careers in Applied Mathematics

Careers in Applied Mathematics: Options for STEM Majors. Society for Industrial and Applied Mathematics. Mathematics and computational science are utilized in almost every discipline of science, engineering, industry, and technology. New application areas are constantly being discovered while established techniques are being applied in new ways and in emerging fields. Consequently, a wide variety of career opportunities are open to people with mathematical talent and training. In this guide, you will find answers to questions about careers in applied mathematics and computational science, and profiles of professionals working in a variety of environments for which a strong background in mathematics is necessary for success. WHERE CAN YOU MAKE AN IMPACT? Many different types of organizations hire mathematicians and computational scientists. You can easily search the websites of organizations and corporations that interest you to learn more about their ... WHAT KINDS OF PROBLEMS MIGHT YOU WORK ON? While careers in mathematics may differ widely by discipline and job title, one thing remains constant among them: problem solving. Some potential problems that someone with mathematical training might encounter are described below. Which of them do you find most intriguing, and why? • How can an airline use smarter scheduling to reduce costs of aircraft parking and engine maintenance? Or smarter pricing to maximize profit? • How can one design a detailed plan for a clinical trial? Building such a plan requires advanced statistical skills and sophisticated knowledge of the design of experiments. • Is ethanol a viable solution for the world’s dependence on fossil fuels? Can biofuel production be optimized to combat negative implications on the world’s economy and environment? • How do we use major advances in computing power to -

Report of the MPSAC

Report of the Subcommittee of the MPSAC to Examine the Question of Renaming the Division of Mathematical Sciences James Berger (Duke University) Emery Brown (Massachusetts Institute of Technology) Kevin Corlette (University of Chicago) Irene Fonseca (Carnegie Mellon University) Juan C. Meza (University of California – Merced) Fred Roberts (Rutgers University) (chair) Background Dr. Sastry Pantula, Director of the NSF Division of Mathematical Sciences, has proposed that the Division be renamed the Division of Mathematical and Statistical Sciences. In a letter to Dr. James Berger, chair of the MPSAC, the MPS Assistant Director Ed Seidel asked Dr. Berger to form a subcommittee to examine the question of renaming the Division of Mathematical Sciences at NSF. It included the following charge to the subcommittee: Charge The subcommittee will develop the arguments, both in favor of, and against, the suggestion of renaming the Division of Mathematical Sciences. In doing so, the scope of the subcommittee will include the following: • Seeking input from the mathematical and statistical societies within the U.S; • Seeking input from individual members of the community on this matter; and • Developing a document listing the arguments in favor of, and against, the suggestion. • And other comments relevant to this topic. In order to accept input from the public, MPS will arrange for creation of a website or email address to which the community may send its comments. Comments may be unsigned. While the subcommittee is not required to announce its meetings and hold them open to the public because it will be reporting directly to the MPSAC, any documents received by or created by the subcommittee may be subject to access by the public. -

STATISTICAL SCIENCE Volume 31, Number 4 November 2016

STATISTICAL SCIENCE Volume 31, Number 4, November 2016
Approximate Models and Robust Decisions, James Watson and Chris Holmes
Model Uncertainty First, Not Afterwards, Ingrid Glad and Nils Lid Hjort
Contextuality of Misspecification and Data-Dependent Losses, Peter Grünwald
Selection of KL Neighbourhood in Robust Bayesian Inference, Natalia A. Bochkina
Issues in Robustness Analysis, Michael Goldstein
Nonparametric Bayesian Clay for Robust Decision Bricks, Christian P. Robert and Judith Rousseau
Ambiguity Aversion and Model Misspecification: An Economic Perspective, Lars Peter Hansen and Massimo Marinacci
Rejoinder: Approximate Models and Robust Decisions, James Watson and Chris Holmes
Filtering and Tracking Survival Propensity (Reconsidering the Foundations of Reliability), Nozer D. Singpurwalla
On Software and System Reliability Growth and Testing, Frank P. A. Coolen
How About Wearing Two Hats, First Popper’s and then de Finetti’s?, Elja Arjas
What Does “Propensity” Add?, Jane Hutton
Reconciling the Subjective and Objective Aspects of Probability, Glenn Shafer
Rejoinder: Concert Unlikely, “Jugalbandi” Perhaps, Nozer D. Singpurwalla
Chaos Communication: A Case of Statistical Engineering, Anthony -

MORSE Mathematics Operational Research Statistics Economics

MORSE: Mathematics, Operational Research, Statistics, Economics. BSc Hons • MMORSE. Honours Degrees in Mathematics, Operational Research, Statistics and Economics. An integrated Single Honours Degree, designed to provide a sound basis leading to a wide variety of careers. What is MORSE? MORSE is a single honours degree in mathematics and its practical application to Business, Finance, Economics, and other topics and it is available only at the University of Warwick. Instead of pursuing the more traditional areas of Applied Mathematics, MORSE relates Pure Mathematics to the modern application areas described opposite. MORSE contains a balance of mathematical theory and practical work in order to produce high quality graduates who are mathematically equipped to deal with the practical problems of the modern world. The theory of pure mathematics is taught with relevance to applications in operational research, statistics, finance, econometrics and mathematical economics. No previous knowledge of these areas is necessary. Available as a three year BSc (UCAS CODE: GLN0) or as a four year integrated Master’s (UCAS CODE: GOL0) in which students specialise in their final two years in one of four possible streams: Actuarial and Financial Mathematics; Operational Research and Statistics; Econometrics and Mathematical Economics; or Statistics with Mathematics. Application should be made to only one of these courses. If in doubt, apply for the four year integrated Master’s because it is possible to transfer to the three year BSc at the end of the second year. Sidebar: • SCHOOL: Mathematics • UNIVERSITY: MORSE • CAREER › Professions: Actuary, Accountancy, Finance › Statistician: Industry, Civil Service › Operational Control: Management Consultancy, Modelling.