Research on Identification of Road Features from Point Cloud

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Session on Flexible Automation in Manufacturing Systems

Call for Papers a Session on Flexible Automation in Manufacturing Systems 2016 ASME/ISCIE International Symposium on Flexible Automation (ISFA2016) InterContinental Hotel & Conference Center August 1-3, 2016, Cleveland, Ohio, USA Session Technical Focus Flexible automation is rapidly becoming a predominant trait in modern manufacturing systems. There is a constant need for improved understanding of system-level interactions between various machines, operations and humans in today’s manufacturing, which is increasingly characterized by high levels of flexibility because of the need for personalized, customized products. We cordially invite you to submit a paper to share your knowledge and expertise in all areas related to Flexible Automation in Manufacturing Systems. Papers from the industrial sector are particularly encouraged. The session welcomes both theoretical and applied papers in areas including, but not limited to: Modeling, characterization and optimization of operations in manufacturing systems, including Work in Progress (WIP) dynamics, maintenance, productivity, logistics etc. Productivity optimization Quality and process control Automating optimization of additive manufacturing processes Process diagnostics and maintenance optimization in manufacturing systems Staff assignment and optimization Adaptive manufacturing systems Sustainability in flexible manufacturing systems Paper Submission The deadline for submission of your contribution to this session is February 29, 2016. Both short and long papers will be considered and reviewed. For short papers, please submit a summary of 1,000 words or less (short papers will be limited to 4 pages) and, for long papers, please submit a manuscript of no more than eight pages. All summaries and manuscripts should be submitted through the conference website at http://engineering.case.edu/conference/ISFA2016/. -

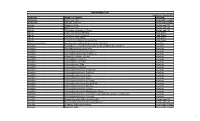

UNAI Members List August 2021

UNAI MEMBER LIST Updated 27 August 2021 COUNTRY NAME OF SCHOOL REGION Afghanistan Kateb University Asia and the Pacific Afghanistan Spinghar University Asia and the Pacific Albania Academy of Arts Europe and CIS Albania Epoka University Europe and CIS Albania Polytechnic University of Tirana Europe and CIS Algeria Centre Universitaire d'El Tarf Arab States Algeria Université 8 Mai 1945 Guelma Arab States Algeria Université Ferhat Abbas Arab States Algeria University of Mohamed Boudiaf M’Sila Arab States Antigua and Barbuda American University of Antigua College of Medicine Americas Argentina Facultad de Ciencias Económicas de la Universidad de Buenos Aires Americas Argentina Facultad Regional Buenos Aires Americas Argentina Universidad Abierta Interamericana Americas Argentina Universidad Argentina de la Empresa Americas Argentina Universidad Católica de Salta Americas Argentina Universidad de Congreso Americas Argentina Universidad de La Punta Americas Argentina Universidad del CEMA Americas Argentina Universidad del Salvador Americas Argentina Universidad Nacional de Avellaneda Americas Argentina Universidad Nacional de Cordoba Americas Argentina Universidad Nacional de Cuyo Americas Argentina Universidad Nacional de Jujuy Americas Argentina Universidad Nacional de la Pampa Americas Argentina Universidad Nacional de Mar del Plata Americas Argentina Universidad Nacional de Quilmes Americas Argentina Universidad Nacional de Rosario Americas Argentina Universidad Nacional de Santiago del Estero Americas Argentina Universidad Nacional de -

No.204 (English) [Pdf]

ISSN 2188-109X 一般社団法人 大 学 英 語 教 育 学 会 ―――――――――――――――――――――――――――――――――――――― March 2019 The Japan Association of College English Teachers No.204 ―――――――――――――――――――――――――――――――――――――― Contents Foreword (Naoko Ozeki) 1 Report from the Committee of 4 Report from Headquarters 2 Academic Affairs Reports from Chapters 5 Foreword Naoko Ozeki Vice-President of JACET Director, Committee of Academic Publication (Meiji University) To JACET members and supporters, Recently, JACET has been going through changes in terms of its evaluation of papers JACET publishes influential articles in the submitted to the JACET Journal and JACET JACET Journal, JACET International International Convention Selected Papers. One of Convention Selected Papers, and JAAL in JACET the most noticeable changes is the area of Proceedings, each of which is published annually, globalization. For example, since we have and provides an incentive for both researchers and established affiliations with various organizations instructors to do further research and improve such as JALT (Japan), AILA (international), their practice. ALAK (Korea), ETA-ROCK (Taiwan), and JACET 通信―――――――――――――――<1>―――――――――――――――――3189 RELC (Singapore), we have invited international of APA format will be a requirement for having a plenary speakers and guest speakers to our paper accepted in JACET publications. We are international conferences and summer seminars. looking forward to reading your future We have also invited these speakers to write submissions to our journals. articles about the topics they talked about at the conferences for the JACET Journals and Selected Papers. We hope that those who could not Report from the JACET Headquarters participate in the conferences or summer seminars will be able to share the main ideas and insights of Secretary General these speakers’ presentations by reading their Yukinari Shimoyama articles. -

Graduate School Overview

AY 2019 Graduate School Overview <Reference Only> Osaka City University Table of Contents Page History ・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・ 1 Enrollment Quotas ・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・ 1 Research Fields and Classes Graduate School of Business ・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・ 2 Graduate School of Economics ・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・ 4 Graduate School of Law ・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・ 5 Graduate School of Literature and Human Sciences ・・・・・・・・・・・・・・・ 7 Graduate School of Science ・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・ 12 Graduate School of Engineering ・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・ 15 Graduate School of Medicine ・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・ 19 Graduate School of Nursing ・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・ 26 Graduate School of Human Life Science ・・・・・・・・・・・・・・・・・・・・・・・・・・・28 Graduate School for Creative Cities ・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・ 31 Graduate School of Urban Management ・・・・・・・・・・・・・・・・・・・・・・・・・・・32 Degrees ・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・34 Entrance Examinations ・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・35 Alma Maters of Enrollees ・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・ 40 Graduate School Exam Schedule (tentative) ・・・・・・・・・・・・・・・・・・・・・・・・・・・42 Directions ・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・・44 History■ History Osaka City University, the foundation of this graduate school, was established using a reform of the Japanese educational system in 1949 as an opportunity to merge the former -

2. Law of Property and Obligations

146 WASEDA BULLETIN OF COMPARATIVE LAW Vol. 28 2.First Session on October 12, 2008 Reports: (5)“Standards of Judicial Review” Masaomi Kimizuka(Professor, Yokohama National University). (6)“Theories of Discretion and Theories of Human Rights” George Shishido(Professor, Hitotsubashi University). (7)“Unconstitutionality and Illegality” Tatsuya Fujii(Professor, Tsukuba University). (8)“Confirmational Litigation as ‘Fundamental Right Litigation’” Hiroyuki Munesue(Professor, Osaka University). 3. Second Session on October 7, 2007 Reports: (9)“Non-‘cases and controversies’ Litigation and Judicial Power” Keiko Yamagishi(Professor, Chukyo University). (10)“Remedies for Governmental Omission” Mitsuru Noro(Professor, Osaka University). (11)“Temporary Remedies” Ryuji Yamamoto(Professor, Tokyo University). (12)“The Activated Circumstances of Constitutional and Administrative Litigation” Norihiko Sugihara(Judge, Tokyo District Court). 2. Law of Property and Obligations The Japan Association of Private Law held its 72nd General Meeting at Nagoya University on October 12 and 13, 2008. First Day: Reports: First Section (1)“Rents and Fixtures as Security for the Mortgage Loan―From a Cash Flow Control Perspective” DEVELOPMENTS IN 2008 ― ACADEMIC SOCIETIES 147 Noriyuki Aoki(Associate Professor, Waseda University). (2)“Die Neuorientierung des Persönlichkeitsrechts” Kazunari Kimura(Lecturer, Setsunan University). (3)“Pure Economic Loss Due to Defective Buildings” Akiko Shindo(Associate Professor, Hokkaido University). (4)“‘Consideration of Relative Fault’ in the Law of Unjustified Enrichment―A suggestion from the Common Law” Akimichi Sasakawa(Associate Professor, Kobe Gakuin University). Second Section (1)“La dissuasion et la sanction dans la responsabilité civile” Masako Hiromine(Associate Professor, Kobe Gakuin University). (2)“Rücktritt und § 541 jZGB” Junkou Toyama(Associate Professor, Otaru University of Commerce).
(3)“Das Beurteilungskriterium der Teilnichtigkeit” Katsuhiro Kondo(Associate Professor, Fukushima University). -

Masayuki Hirukawa

MASAYUKI HIRUKAWA Address Faculty of Economics Phone (+81)(0)72-839-8095 Setsunan University Fax (+81)(0)72-839-8138 17-8 Ikeda Nakamachi E-mail [email protected] Neyagawa, Osaka 572-8508, Japan Web http://www.setsunan.ac.jp/~hirukawa/ Personal • Citizenship: Japan (Permanent Address: Handa, Aichi, Japan). • Date of Birth: 7 October 1965. • Marital Status: Married, 1 child. Education • Ph.D. in Economics, University of Wisconsin-Madison, Madison, WI, USA, August 2004. § Doctoral Dissertation: Ø Title: “Heteroskedasticity and Autocorrelation Consistent Covariance Matrix Estimation.” Ø Reading Committee: Bruce E. Hansen (Chair); Gautam Tripathi; Kenneth D. West. § Minor: Statistics. • M.S. in Economics, University of Wisconsin-Madison, Madison, WI, USA, December 2000. • M.S. in Industrial Administration, Carnegie Mellon University, Pittsburgh, PA, USA, May 1997. • B.A. in Economics, University of Tokyo, Tokyo, Japan, March 1989. Academic Positions • Professor, Faculty of Economics, Setsunan University, Neyagawa, Osaka, Japan, September 2010 - present. • Assistant Professor, Department of Economics, Northern Illinois University, DeKalb, IL, USA, August 2007 - May 2010. • Assistant Professor, Department of Economics, Concordia University, Montréal, QC, Canada, July 2004 - May 2007. Visiting and Adjunct Positions • Visiting Fellow, Centre Interuniversitaire de Recherche en Économie Quantitative (CIREQ), September 2008. • Short-Term Visitor, Center for International Research on the Japanese Economy (CIRJE), University of Tokyo, June 2006 and May 2008. • Adjunct Professor, Department of Economics, Concordia University, June 2007 - April 2008. • Research Fellow, Centre Interuniversitaire de Recherche en Économie Quantitative (CIREQ), April 2006 - April 2008. Professional Services • Reviewer for Grant-in-Aid for Scientific Research (Field: Economic Statistics), Japan Society for the Promotion of Science, December 2015 - November 2016 and December 2016 - November 2017. -

The Japan Association for American Studies: The 48th Annual Meeting (2014)

The Japan Association for American Studies The 48th Annual Meeting (2014) Dates: Sat. June 7 — Sun. June 8, 2014 Venue: Okinawa Convention Center (Ginowan City, Okinawa) For access, see Okinawa Convention Center HP (http://www.oki-conven.jp/en/) Registration: Main Entrance Hall, Conference Building A (Affiliations are as of April 2014. All presentations/sessions will be in Japanese except those with asterisk*.) Saturday, June 7 Independent Paper Sessions (09:15-12:00) [Conference Building B, B1~B7] (“GS” stands for “graduate student.”) Session A: Politics, Military and Diplomacy [B3, B4] Chair: Kaori TAKADA (Otsuma Women’s University) Koji ITO (GS, Osaka University) “An Analysis of the Development of America’s Insular Policies in the Late 1890s with a Special Emphasis on Hawaii and Cuba” Keita OKUHIRO (GS, University at Albany, State University of New York) “Civil-Military Relations in the Politics of National Defense: The War Department and Congress, 1939-1941” Atsuko SHIGESAWA (GS, Hiroshima City University) “America's Rearmament Plans after World War II: With a Focus on Civilian Efforts” Shunsuke SHIKATA (Kobe University) “US policies toward Taiwanese economic development and Overseas Chinese in the early Cold War” Somei KOBAYASHI (Kyung Hee University, Korea), “The ‘Secret Pact’ on the VOA Relocation Cost and Okinawa Reversion: U.S.-Japan and U.S.-Korea Negotiations on the VOA Relay Station on Okinawa” Commentator: Takuya SASAKI (Rikkyo University) Session B: Political Economy and Publicness [B2] Chair: Chitose SATO (Tsukuba University) Yuri AMANO (GS, University of Tokyo) “The Yellow Fever Epidemic of 1793 in Philadelphia and the Paradigm of Sensibility” Hirobumi ENDO (GS, University of Tokyo) “Legitimacy and Contingency: An Inquiry into the Diversified Concept of Sovereignty Focusing on the Nullification Crisis” 1 Kiwako UEDA (GS, Hitotsubashi University) “City Reconstruction and the Sexual Politics in San Francisco, 1906-13” Isao SUTO (Meiji University) “The 
International Monetary Fund in the Beginning: Did the U.S. -

1. Japanese National, Public Or Private Universities

1. Japanese National, Public or Private Universities National Universities Hokkaido University Hokkaido University of Education Muroran Institute of Technology Otaru University of Commerce Obihiro University of Agriculture and Veterinary Medicine Kitami Institute of Technology Hirosaki University Iwate University Tohoku University Miyagi University of Education Akita University Yamagata University Fukushima University Ibaraki University Utsunomiya University Gunma University Saitama University Chiba University The University of Tokyo Tokyo Medical and Dental University Tokyo University of Foreign Studies Tokyo Geijutsu Daigaku (Tokyo University of the Arts) Tokyo Institute of Technology Tokyo University of Marine Science and Technology Ochanomizu University Tokyo Gakugei University Tokyo University of Agriculture and Technology The University of Electro-Communications Hitotsubashi University Yokohama National University Niigata University University of Toyama Kanazawa University University of Fukui University of Yamanashi Shinshu University Gifu University Shizuoka University Nagoya University Nagoya Institute of Technology Aichi University of Education Mie University Shiga University Kyoto University Kyoto University of Education Kyoto Institute of Technology Osaka University Osaka Kyoiku University Kobe University Nara University of Education Nara Women's University Wakayama University Tottori University Shimane University Okayama University Hiroshima University Yamaguchi University The University of Tokushima Kagawa University Ehime -

3Rdprogram.Pdf

http://www.icu.ac.jp/img/pdf/info/icu_map2011_12.pdf ○17 Kiyoshi Togasaki Memorial Dialogue House [JR Chuo Line] Musashisakai or Mitaka Station http://www.icu.ac.jp/en/access.html Host University’s Secretaries: Yoshito Ishio, Sawa Omori General Manager: Sawa Omori (International Christian University) 12:20-12:30 Speaker: Yoshinobu Araki (President of the ASPOS, Professor of Musashino University) 12:30-13:30 “The Idea on Capitalism of Public Interests: Breaking with Market Omnipotent Thinking” Speaker: Hiroshi Iyetomi (Professor, Graduate School of Economics, University of Tokyo) Chair: Yoshinobu Araki (Musashino University) 13:40-16:10 Chair, Yoshito Ishio (International Christian University) Paper 1: “From the Perspective of Social Capital” Hiroo Harada (Senshu University) Paper 2: “Toward Construction of General Knowledge” Takuo Dome (Osaka University) Paper 3: “New Understanding of Adam Smith’s Market Theory” Naohiro Yashiro (International Christian University) Discussant: Katsumi Okui (Otemon Gakuin University), Hiroshi Taniguchi (Chuo University), Yujiro Oiwa (Tokyo International University) 16:20-18:40 Chair: Hiroyuki Arayama (Nagoya University, President of Japan Economic Policy Association) Paper 1: “New Currency Theory: We Can Pay Up National Debt!—Simulation of the Chicago Plan (Currency Reform)” Kaoru Yamaguchi (Doshisha University) Paper 2: “Constructing New Theory with Economic Physics” Hideki Takayasu (Sony Computer Science Institute) Discussants: Yasuto Ugai (Kansai University), Asahi Noguchi (Senshu University), Hiroshi -

Jams/Jaims Icbi2008 1

JAMS/JAIMS ICBI2008 JAMS/JAIMS International Conference on Business & Information 2008 Increasing Global Relationship by IT and Business 2nd September 2008, JAIMS, Honolulu, Hawaii, USA PROGRAM at a Glance 8:30 - 9:00 Reception (Cafetorium) Hideto Kono Seminar Room (214) Takeo Tachikawa (Yokohama college of commerce) 9:00 - 9:10 Opening Remarks Blair Odo (JAIMS) Keynote New Technologies and Fundamental Business Shifts: Travel Industry as Exemplar 9:15 - 10:15 Presentation 1 David Lassner (University of Hawaii) Keynote Perspective Studies for Management Information System 10:20 - 11:00 Presentation 2 Toyokazu Nose (Osaka Institute of Technology) A (206) B (208) C (210) 11:10 - 12:10 Session1 PROGRAMMING MARKETING EDUCATION 12:10 - 13:00 Lunch (Cafetorium) 13:00 - 14:00 Session2 MANUFACTURING IT(1) SIMULATION(1) 14:00 - 14:10 Coffee Break (Cafetorium) 14:10 - 15:10 Session3 PRICING MODEL URBAN DEVELOPING MANAGEMENT(1) 15:10 - 15:20 Coffee Break (Cafetorium) 15:20 - 16:20 Session4 MANAGEMENT(2) IT(2) SECURITY 16:20 - 16:30 Coffee Break (Cafetorium) 16:30 - 17:30 Session5 BUSINESS IT(3) 18:00 – 20:00 Convivial Party PROGRAM SCHEDULE 8:30 - 9:00 Reception (Cafetorium) Hideto Kono Seminar Room (214) 9:00 - 9:10 Takeo Tachikawa (Yokohama college of commerce) Opening Blair Odo (JAIMS) Remarks 9:15 - 10:15 New Technologies and Fundamental Business Shifts: Travel Industry as Exemplar, David Lassner(University of Hawaii) Keynote Facilitator : Tatsumi Shimada (Setsunan University) Presentation 1 10:20 - 11:00 Perspective Studies for Management Information -

Available to Download

2018 Conference Organizers & Staff Program Committee Gary Herrigel Sébastien Lechevalier Mary Gallagher Tobias Schulze-Cleven Local Organizing Committee Masayo Fujimoto Tadashi Yagi Sayaka Sakoda Local Organizing Staff Madoka Watanabe Asuka Nakatani Mari Bito Rieko Ikeda Zhang Tao Kensuke Takamura Xiangyu Li Hiroko Ryu SASE Paris Staff Martha Zuber (Executive Director) Jacob Bromberg Patricia Zraidi Shaun William Owen Table of Contents At-A-Glance Calendar …………………………………………………………………………….. 2 Presidential Welcome ……………………………………………………….…………………… 5 About This Program…………………………………………...……...……………………….….. 6 This Year’s Conference Theme……………………….……………………………………….. 7 Next Year’s Conference Theme…………………………………………………………..…… 8 Call for 2019 Mini-Conference Themes…………………………………………………… 9 Special Events……………………………………………………………………………………… 10 General Information for Participants…………………………………………………….. 11 Maps…………….……………………………………………………………………………………… 12 SASE Early Career Workshop Awards…………………………………………………… 15 EHESS/ Fondation France-Japon Young Researcher Travel Prizes………….. 17 EHESS/ Fondation France-Japon Best Paper Award………………………………. 18 SER Best Paper Prize……………………………………………………………………………. 19 SASE 2018 Elections…………………………………………………………………………….. 20 List of Sessions and Rooms by Network and Mini-Conference……………..…. 21 Main Schedule……………………………………………………………………………………... 36 Participant Index……..…………………………………………………………………………....89 SASE 2018: Global Reordering: Prospects for Equality, Democracy, and Justice 1 Doshisha University SASE 30th Annual Conference Doshisha University -

Detailed Program

Monday, July 14 ------------------------------- Monday, July 14: ROOM 301A ------------------------------- 13:30 p.m.-15:30 p.m.: SESSION J-8 Monitoring, Sensing, and Control in Automated Systems Co-chair Hayato Yoshioka (Tokyo Institute of Technology) Co-chair Naoki Hayashi (Osaka University) Improvement of Anticipatory Classifier System with Internal Memory Tomohiro Hayashida, Ichiro Nishizaki, Ryosuke Sakato (Hiroshima University) Distributed Event-Triggered Control for Consensus Problems with Non-Linear Output Functions Kenji Nomura, Naoki Hayashi, Shigemasa Takai (Osaka University) Correspondence Matching for Stereo Vision Based Outdoor Measurement András Rövid (Óbuda University), Takeshi Hashimoto, Shohei Takayanagi (Shizuoka University) Distributed Coordination Architecture for Cooperative Multi-Robot Task Control Using Petri Nets Gen'ichi Yasuda (Nagasaki Institute of Applied Science) PERFORMANCE ASSESSMENT OF A WEIGH FEEDER Hiroshi Tajika, Takao Sato (University of Hyogo), Yoshihiro Ohnishi (Ehime University), Toru Yamamoto (Hiroshima University), Nozomu Araki, Yasuo Konishi (University of Hyogo) 15:50 p.m.-17:30 p.m. : SESSION J-8 Monitoring, Sensing, and Control in Automated Systems : SESSION J-9 Precision Machinery System and Micro/Nano Technologies : SESSION U-4 Nanomanufacturing and Nanoinformatics Co-chair Qiang Huang (University of Southern California) Co-chair Hayato Yoshioka (Tokyo Institute of Technology) Sensorless Cutting Force Estimation in Ball-Screw-Driven Stage Using Full-Closed Control Yuki Yamada, Yasuhiro Kakinuma