Message-Passing Interface for Java Applications: Practical Aspects of Leveraging High Performance Computing to Speed and Scale up the Semantic Web

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

Distributed Algorithms with Theoretic Scalability Analysis of Radial and Looped Load flows for Power Distribution Systems

Electric Power Systems Research 65 (2003) 169–177 www.elsevier.com/locate/epsr Distributed algorithms with theoretic scalability analysis of radial and looped load flows for power distribution systems Fangxing Li *, Robert P. Broadwater ECE Department Virginia Tech, Blacksburg, VA 24060, USA Received 15 April 2002; received in revised form 14 December 2002; accepted 16 December 2002 Abstract This paper presents distributed algorithms for both radial and looped load flows for unbalanced, multi-phase power distribution systems. The distributed algorithms are developed from tree-based sequential algorithms. Formulas of scalability for the distributed algorithms are presented. It is shown that computation time dominates communication time in the distributed computing model. This provides benefits to real-time load flow calculations, network reconfigurations, and optimization studies that rely on load flow calculations. Also, test results match the predictions of derived formulas. This shows the formulas can be used to predict the computation time when additional processors are involved. © 2003 Elsevier Science B.V. All rights reserved. Keywords: Distributed computing; Scalability analysis; Radial load flow; Looped load flow; Power distribution systems 1. Introduction Parallel and distributed computing has been applied to many scientific and engineering computations such as weather forecasting and nuclear simulations [1,2]. It also has been applied to power system analysis calculations [3–14]. [...] Also, the method presented in Ref. [10] was tested in radial distribution systems with no more than 528 buses. More recent works [11–14] presented distributed implementations for power flows or power flow based algorithms like optimizations and contingency analysis. These works also targeted power transmission systems. -

Scalability and Performance Management of Internet Applications in the Cloud

Hasso-Plattner-Institut University of Potsdam Internet Technology and Systems Group Scalability and Performance Management of Internet Applications in the Cloud A thesis submitted for the degree of "Doktors der Ingenieurwissenschaften" (Dr.-Ing.) in IT Systems Engineering Faculty of Mathematics and Natural Sciences University of Potsdam By: Wesam Dawoud Supervisor: Prof. Dr. Christoph Meinel Potsdam, Germany, March 2013 This work is licensed under a Creative Commons License: Attribution – Noncommercial – No Derivative Works 3.0 Germany To view a copy of this license visit http://creativecommons.org/licenses/by-nc-nd/3.0/de/ Published online at the Institutional Repository of the University of Potsdam: URL http://opus.kobv.de/ubp/volltexte/2013/6818/ URN urn:nbn:de:kobv:517-opus-68187 http://nbn-resolving.de/urn:nbn:de:kobv:517-opus-68187 To my lovely parents To my lovely wife Safaa To my lovely kids Shatha and Yazan Acknowledgements At Hasso Plattner Institute (HPI), I had the opportunity to meet many wonderful people. It is my pleasure to thank those who supported me to make this thesis possible. First and foremost, I would like to thank my Ph.D. supervisor, Prof. Dr. Christoph Meinel, for his continues support. In spite of his tight schedule, he always found the time to discuss, guide, and motivate my research ideas. The thanks are extended to Dr. Karin-Irene Eiermann for assisting me even before moving to Germany. I am also grateful for Michaela Schmitz. She managed everything well to make everyones life easier. I owe a thanks to Dr. Nemeth Sharon for helping me to improve my English writing skills. -

Scalability and Optimization Strategies for GPU Enhanced Neural Networks (GeNN)

Scalability and Optimization Strategies for GPU Enhanced Neural Networks (GeNN) Naresh Balaji1, Esin Yavuz2, Thomas Nowotny2 {T.Nowotny, E.Yavuz}@sussex.ac.uk, [email protected] 1National Institute of Technology, Tiruchirappalli, India 2School of Engineering and Informatics, University of Sussex, Brighton, UK Abstract: Simulation of spiking neural networks has been traditionally done on high-performance supercomputers or large-scale clusters. Utilizing the parallel nature of neural network computation algorithms, GeNN (GPU Enhanced Neural Network) provides a simulation environment that performs on General Purpose NVIDIA GPUs with a code generation based approach. GeNN allows the users to design and simulate neural networks by specifying the populations of neurons at different stages, their synapse connection densities and the model of individual neurons. In this report we describe work on how to scale synaptic weights based on the configuration of the user-defined network to ensure sufficient spiking and subsequent effective learning. We also discuss optimization strategies particular to GPU computing: sparse representation of synapse connections and occupancy based block-size determination. Keywords: Spiking Neural Network, GPGPU, CUDA, code-generation, occupancy, optimization 1. Introduction The computational performance of traditional single-core CPUs has steadily increased since its arrival, primarily due to the combination of increased clock frequency, process technology advancements and compiler optimizations. The clock frequency was pushed higher and higher, from 5 MHz to 3 GHz in the years from 1983 to 2002, until it reached a stall a few years ago [1] when the power consumption and dissipation of the transistors reached peaks that could not compensated by its cost [2]. -

Cluster, Grid and Cloud Computing: a Detailed Comparison

The 6th International Conference on Computer Science & Education (ICCSE 2011) August 3-5, 2011. SuperStar Virgo, Singapore ThC 3.33 Cluster, Grid and Cloud Computing: A Detailed Comparison Naidila Sadashiv, Dept. of Computer Science and Engineering, Acharya Institute of Technology, Bangalore, India, [email protected]; S. M Dilip Kumar, Dept. of Computer Science and Engineering, University Visvesvaraya College of Engineering (UVCE), Bangalore, India, [email protected] Abstract—Cloud computing is rapidly growing as an alternative to conventional computing. However, it is based on models like cluster computing, distributed computing, utility computing and grid computing in general. This paper presents an end-to-end comparison between Cluster Computing, Grid Computing and Cloud Computing, along with the challenges they face. This could help in better understanding these models and to know how they differ from its related concepts, all in one go. It also discusses the ongoing projects and different applications that use these computing models as a platform for execution. An insight into some of the tools which can be used in the three computing models to design and develop applications is given. [...] with out any prior reservation and hence eliminates over-provisioning and improves resource utilization. To the best of our knowledge, in the literature, only a few comparisons have been appeared in the field of computing. In this paper we bring out a complete comparison of the three computing models. Rest of the paper is organized as follows. The cluster computing, grid computing and cloud computing models are briefly explained in Section II. Issues and challenges related to these computing models are listed -

Grid Computing: What Is It, and Why Do I Care?*

Grid Computing: What Is It, and Why Do I Care?* Ken MacInnis <[email protected]> * Or, “Mi caja es su caja!” (c) Ken MacInnis 2004. Outline: Introduction and Motivation; Examples; Architecture, Components, Tools; Lessons Learned and The Future; Questions? What is “grid computing”? Many different definitions: Utility computing (cycles for sale); Distributed computing (distributed.net RC5, SETI@Home); High-performance resource sharing (clusters, storage, visualization, networking). “We will probably see the spread of ‘computer utilities’, which, like present electric and telephone utilities, will service individual homes and offices across the country.” Len Kleinrock (1969) The word “grid” doesn’t equal Grid Computing: Sun Grid Engine is a mere scheduler! Better definitions: Common protocols allowing large problems to be solved in a distributed multi-resource multi-user environment. “A computational grid is a hardware and software infrastructure that provides dependable, consistent, pervasive, and inexpensive access to high-end computational capabilities.” Kesselman & Foster (1998) “…coordinated resource sharing and problem solving in dynamic, multi-institutional virtual organizations.” Kesselman, Foster, Tuecke (2000) New Challenges for Computing: Grid computing evolved out of a need to share resources. Flexible, ever-changing “virtual organizations”. High-energy physics, astronomy, more. Differing site policies with common needs. Disparate computing needs -

Cloud Computing Over Cluster, Grid Computing: a Comparative Analysis

Journal of Grid and Distributed Computing Volume 1, Issue 1, 2011, pp-01-04 Available online at: http://www.bioinfo.in/contents.php?id=92 Cloud Computing Over Cluster, Grid Computing: a Comparative Analysis 1Indu Gandotra, 2Pawanesh Abrol, 3Pooja Gupta, 3Rohit Uppal and 3Sandeep Singh 1Department of MCA, MIET, Jammu 2Department of Computer Science & IT, Jammu Univ, Jammu 3Department of MCA, MIET, Jammu e-mail: [email protected], [email protected], [email protected], [email protected], [email protected] Abstract—There are dozens of definitions for cloud computing and through each definition we can get the different idea about what a cloud computing exacting is? Cloud computing is not a very new concept because it is connected to grid computing paradigm whose concept came into existence thirteen years ago. Cloud computing is not only related to Grid Computing but also to Utility computing as well as Cluster computing. Cloud computing is a computing platform for sharing resources that include software’s, business process, infrastructures and applications. Cloud computing also relies on the technology of virtualization. In this paper, we will discuss about Grid computing, Cluster computing and Cloud computing i.e. [...] Virtualization is a technology that enables sharing of cloud resources. Cloud computing platform can become more flexible, extensible and reusable by adopting the concept of service oriented architecture [5]. We will not need to unwrap the shrink wrapped software and install. The cloud is really very easier, just to install single software in the centralized facility and cover all the requirements of the company’s users [1]. II. CLUSTER COMPUTING -

“Grid Computing”

VISHVESHWARAIAH TECHNOLOGICAL UNIVERSITY S.D.M COLLEGE OF ENGINEERING AND TECHNOLOGY A seminar report on “Grid Computing” Submitted by Nagaraj Baddi (2SD07CS402) 8th semester DEPARTMENT OF COMPUTER SCIENCE ENGINEERING 2009-10 CERTIFICATE Certified that the seminar work entitled “Grid Computing” is a bonafide work presented by Mr. Nagaraj.M.Baddi, bearing USN 2SD07CS402 in a partial fulfillment for the award of degree of Bachelor of Engineering in Computer Science Engineering of the Vishveshwaraiah Technological University Belgaum, during the year 2009-10. The seminar report has been approved as it satisfies the academic requirements with respect to seminar work presented for the Bachelor of Engineering Degree. Staff in charge (S. L. DESHPANDE) H.O.D CSE (S. M. JOSHI) Name: Nagaraj M. Baddi USN: 2SD07CS402 INDEX 1. Introduction 2. History 3. How Grid Computing Works 4. Related technologies 4.1 Cluster computing 4.2 Peer-to-peer computing 4.3 Internet computing 5. Grid Computing Logical Levels 5.1 Cluster Grid 5.2 Campus Grid 5.3 Global Grid 6. Grid Architecture 6.1 Grid fabric 6.2 Core Grid middleware 6.3 User-level Grid middleware 6.4 Grid applications and portals. 7. Grid Applications 7.1 Distributed supercomputing 7.2 High-throughput computing 7.3 On-demand computing 7.4 Data-intensive computing 7.5 Collaborative computing 8. Difference: Grid Computing vs Cloud Computing 9. -

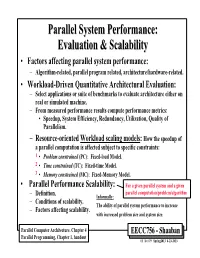

Parallel System Performance: Evaluation & Scalability

Parallel System Performance: Evaluation & Scalability • Factors affecting parallel system performance: – Algorithm-related, parallel program related, architecture/hardware-related. • Workload-Driven Quantitative Architectural Evaluation: – Select applications or suite of benchmarks to evaluate architecture either on real or simulated machine. – From measured performance results compute performance metrics: • Speedup, System Efficiency, Redundancy, Utilization, Quality of Parallelism. – Resource-oriented Workload scaling models: How the speedup of a parallel computation is affected subject to specific constraints: 1. Problem constrained (PC): Fixed-load Model. 2. Time constrained (TC): Fixed-time Model. 3. Memory constrained (MC): Fixed-Memory Model. • Parallel Performance Scalability (for a given parallel system and a given parallel computation/problem/algorithm): – Definition. Informally: the ability of parallel system performance to increase with increased problem size and system size. – Conditions of scalability. – Factors affecting scalability. (Parallel Computer Architecture, Chapter 4; Parallel Programming, Chapter 1, handout #1) EECC756 - Shaaban, lec # 9, Spring 2013, 4-23-2013 Parallel Program Performance • Parallel processing goal is to maximize speedup: $\text{Speedup} = \frac{\text{Time}(1)}{\text{Time}(p)} < \frac{\text{Sequential Work}}{\max(\text{Work} + \text{Synch Wait Time} + \text{Comm Cost} + \text{Extra Work})}$ (slide annotations: Fixed Problem Size Speedup; Max for any processor; Parallelizing Overheads) • By: 1. Balancing computations/overheads (workload) on processors -

Multiprocessing and Scalability

Multiprocessing and Scalability A.R. Hurson Computer Science and Engineering The Pennsylvania State University Fall 2004 Large-scale multiprocessor systems have long held the promise of substantially higher performance than traditional uni-processor systems. However, due to a number of difficult problems, the potential of these machines has been difficult to realize. This is because of the: Advances in technology: rate of increase in performance of uni-processor. Complexity of multiprocessor system design: this drastically affected the cost and implementation cycle. Programmability of multiprocessor system: design complexity of parallel algorithms and parallel programs. Programming a parallel machine is more difficult than a sequential one. In addition, it takes much effort to port an existing sequential program to a parallel machine than to a newly developed sequential machine. Lack of good parallel programming environments and standard parallel languages also has further aggravated this issue. As a result, absolute performance of many early concurrent machines was not significantly better than available or soon-to-be available uni-processors. Recently, there has been an increased interest in large-scale or massively parallel processing systems. This interest stems from many factors, including: Advances in integrated technology. Very -

Computer Systems Architecture

CS 352H: Computer Systems Architecture Topic 14: Multicores, Multiprocessors, and Clusters University of Texas at Austin CS352H - Computer Systems Architecture Fall 2009 Don Fussell Introduction: Goal: connecting multiple computers to get higher performance (multiprocessors; scalability, availability, power efficiency). Job-level (process-level) parallelism: high throughput for independent jobs. Parallel processing program: single program run on multiple processors. Multicore microprocessors: chips with multiple processors (cores). Hardware and Software: Hardware: serial, e.g., Pentium 4; parallel, e.g., quad-core Xeon e5345. Software: sequential, e.g., matrix multiplication; concurrent, e.g., operating system. Sequential/concurrent software can run on serial/parallel hardware. Challenge: making effective use of parallel hardware. What We’ve Already Covered: §2.11: Parallelism and Instructions: Synchronization; §3.6: Parallelism and Computer Arithmetic: Associativity; §4.10: Parallelism and Advanced Instruction-Level Parallelism; §5.8: Parallelism and Memory Hierarchies: Cache Coherence; §6.9: Parallelism and I/O: Redundant Arrays of Inexpensive Disks. Parallel Programming: Parallel software is the problem. Need to get significant performance improvement. Otherwise, just use a faster uniprocessor, -

Strategies for Managing Business Disruption Due to Grid Computing

Strategies for managing business disruption due to Grid Computing by Vidyadhar Phalke Ph.D. Computer Science, Rutgers University, New Jersey, 1995 M.S. Computer Science, Rutgers University, New Jersey, 1992 B.Tech. Computer Science, Indian Institute of Technology, Delhi, 1989 Submitted to the MIT Sloan School of Management in Partial Fulfillment of the Requirements for the Degree of Master of Science in the Management of Technology at the Massachusetts Institute of Technology June 2003 © 2003 Vidyadhar Phalke All Rights Reserved The author hereby grants to MIT permission to reproduce and to distribute publicly paper and electronic copies of this thesis document in whole or in part Signature of Author: MIT Sloan School of Management 9 May 2003 Certified By: Starling D. Hunter III Theodore T. Miller Career Development Assistant Professor Thesis Supervisor Accepted By: David A. Weber Director, Management of Technology Program 2 Strategies for managing business disruption due to Grid Computing by Vidyadhar Phalke Submitted to the MIT Sloan School of Management on May 9 2003 in partial fulfillment of the requirements for the degree of Master of Science in the Management of Technology ABSTRACT In the technology centric businesses disruptive technologies displace incumbents time and again, sometimes to the extent that incumbents go bankrupt. In this thesis we would address the issue of what strategies are essential to prepare for and to manage disruptions for the affected businesses and industries. Specifically we will look at grid computing that is poised to disrupt (1) certain Enterprise IT departments, and (2) the software industry in the high-performance and web services space. -

Pascal Viewer: a Tool for the Visualization of Parallel Scalability Trends

PaScal Viewer: a Tool for the Visualization of Parallel Scalability Trends 1st Anderson B. N. da Silva, Pró-Reitoria de Pesquisa, Inovação e Pós-Graduação, Instituto Federal da Paraiba, Joao Pessoa, Brazil, [email protected]; 2nd Daniel A. M. Cunha, Dept. de Eng. de Comp. e Automação, Univ. Federal do Rio Grande do Norte, Natal, Brazil, [email protected]; 3rd Vitor R. G. Silva, Dept. de Eng. de Comp. e Automação, Univ. Federal do Rio Grande do Norte, Natal, Brazil, [email protected]; 4th Alex F. de A. Furtunato, Diretoria de Tecnologia da Informação, Instituto Federal do Rio Grande do Norte, Natal, Brazil, [email protected]; 5th Samuel Xavier de Souza, Dept. de Eng. de Comp. e Automação, Univ. Federal do Rio Grande do Norte, Natal, Brazil, [email protected] Abstract—Taking advantage of the growing number of cores in supercomputers to increase the scalability of parallel programs is an increasing challenge. Many advanced profiling tools have been developed to assist programmers in the process of analyzing data related to the execution of their program. Programmers can act upon the information generated by these data and make their programs reach higher performance levels. However, the information provided by profiling tools is generally designed [...] particular environment. The configuration of this environment includes the number of cores, their operating frequency, and the size of the input data or the problem size. Among the various collected information, the elapsed time in each function of the code, the number of function calls, and the memory consumption of the program can be cited to name just a few [2].