Sound, Embodiment, and the Experience Of

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


The First but Hopefully Not the Last: How the Last of Us Redefines the Survival Horror Video Game Genre

The College of Wooster Open Works Senior Independent Study Theses 2018 The First But Hopefully Not the Last: How The Last Of Us Redefines the Survival Horror Video Game Genre Joseph T. Gonzales The College of Wooster, [email protected] Follow this and additional works at: https://openworks.wooster.edu/independentstudy Part of the Other Arts and Humanities Commons, and the Other Film and Media Studies Commons Recommended Citation Gonzales, Joseph T., "The First But Hopefully Not the Last: How The Last Of Us Redefines the Survival Horror Video Game Genre" (2018). Senior Independent Study Theses. Paper 8219. This Senior Independent Study Thesis Exemplar is brought to you by Open Works, a service of The College of Wooster Libraries. It has been accepted for inclusion in Senior Independent Study Theses by an authorized administrator of Open Works. For more information, please contact [email protected]. © Copyright 2018 Joseph T. Gonzales THE FIRST BUT HOPEFULLY NOT THE LAST: HOW THE LAST OF US REDEFINES THE SURVIVAL HORROR VIDEO GAME GENRE by Joseph Gonzales An Independent Study Thesis Presented in Partial Fulfillment of the Course Requirements for Senior Independent Study: The Department of Communication March 7, 2018 Advisor: Dr. Ahmet Atay ABSTRACT For this study, I applied generic criticism, which looks at how a text subverts and adheres to patterns and formats in its respective genre, to analyze how The Last of Us redefined the survival horror video game genre through its narrative. Although some tropes are present in the game and are necessary to stay tonally consistent to the genre, I argued that much of the focus of the game is shifted from the typical situational horror of the monsters and violence to the overall narrative, effective dialogue, strategic use of cinematic elements, and character development throughout the course of the game. -

Sound, Embodiment, and the Experience of Interactivity in Video Games & Virtual

Sound, Embodiment, and the Experience of Interactivity in Video Games & Virtual Environments (DRAFT: citations incomplete!) Lori Landay, Professor of Cultural Studies, Berklee College of Music This presentation is part of a larger project that explores perception and imagination in interactive media, and how they are created by sound, image, and action. It marks an early stage in extending my previous work on the virtual kinoeye in virtual worlds and video games to consider aural, haptic, and kinetic aspects of embodiment and interactivity. SLIDE 2 In particular, the argument is that innovative sound has the potential to bridge the gaps in experiencing embodiment caused by disconnections between the perceived body in physical, representational, and imagined contexts. To explore this, I draw on Walter Murch’s spectrum of encoded and embodied sound, interviews with sound designers and composers, ways of thinking about the body from phenomenology, video game studies scholarship, and examples of the relationship between sound effects and music in video games. Let’s start with a question philosopher Don Ihde poses when he asks his students to imagine then describe an activity they have never done. Often students choose jumping out of an airplane with a parachute, and as he works through the distinctions between the possible senses of one’s own body as the physical body, a first-person perspective of the perceiving body, which he calls the here-body, or the objectified over-there-body, the third-person view of one’s own body, he asks, “Where does one feel the wind?” Ihde argues the full multidimensional sensory experience is in the embodied perspective. -

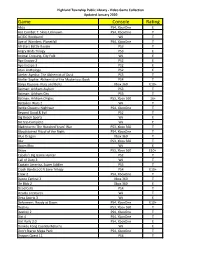

Game Console Rating

Highland Township Public Library - Video Game Collection Updated January 2020 Game Console Rating Abzu PS4, XboxOne E Ace Combat 7: Skies Unknown PS4, XboxOne T AC/DC Rockband Wii T Age of Wonders: Planetfall PS4, XboxOne T All-Stars Battle Royale PS3 T Angry Birds Trilogy PS3 E Animal Crossing, City Folk Wii E Ape Escape 2 PS2 E Ape Escape 3 PS2 E Atari Anthology PS2 E Atelier Ayesha: The Alchemist of Dusk PS3 T Atelier Sophie: Alchemist of the Mysterious Book PS4 T Banjo Kazooie- Nuts and Bolts Xbox 360 E10+ Batman: Arkham Asylum PS3 T Batman: Arkham City PS3 T Batman: Arkham Origins PS3, Xbox 360 16+ Battalion Wars 2 Wii T Battle Chasers: Nightwar PS4, XboxOne T Beyond Good & Evil PS2 T Big Beach Sports Wii E Bit Trip Complete Wii E Bladestorm: The Hundred Years' War PS3, Xbox 360 T Bloodstained Ritual of the Night PS4, XboxOne T Blue Dragon Xbox 360 T Blur PS3, Xbox 360 T Boom Blox Wii E Brave PS3, Xbox 360 E10+ Cabela's Big Game Hunter PS2 T Call of Duty 3 Wii T Captain America, Super Soldier PS3 T Crash Bandicoot N Sane Trilogy PS4 E10+ Crew 2 PS4, XboxOne T Dance Central 3 Xbox 360 T De Blob 2 Xbox 360 E Dead Cells PS4 T Deadly Creatures Wii T Deca Sports 3 Wii E Deformers: Ready at Dawn PS4, XboxOne E10+ Destiny PS3, Xbox 360 T Destiny 2 PS4, XboxOne T Dirt 4 PS4, XboxOne T Dirt Rally 2.0 PS4, XboxOne E Donkey Kong Country Returns Wii E Don't Starve Mega Pack PS4, XboxOne T Dragon Quest 11 PS4 T Highland Township Public Library - Video Game Collection Updated January 2020 Game Console Rating Dragon Quest Builders PS4 E10+ Dragon -

Intersomatic Awareness in Game Design

The London School of Economics and Political Science Intersomatic Awareness in Game Design Siobhán Thomas A thesis submitted to the Department of Management of the London School of Economics for the degree of Doctor of Philosophy. London, June 2015 1 Declaration I certify that the thesis I have presented for examination for the PhD degree of the London School of Economics and Political Science is solely my own work. The copyright of this thesis rests with the author. Quotation from it is permitted, provided that full acknowledgement is made. This thesis may not be reproduced without my prior written consent. I warrant that this authorisation does not, to the best of my belief, infringe the rights of any third party. I declare that my thesis consists of 66,515 words. 2 Abstract The aim of this qualitative research study was to develop an understanding of the lived experiences of game designers from the particular vantage point of intersomatic awareness. Intersomatic awareness is an interbodily awareness based on the premise that the body of another is always understood through the body of the self. While the term intersomatics is related to intersubjectivity, intercoordination, and intercorporeality it has a specific focus on somatic relationships between lived bodies. This research examined game designers’ body-oriented design practices, finding that within design work the body is a ground of experiential knowledge which is largely untapped. To access this knowledge a hermeneutic methodology was employed. The thesis presents a functional model of intersomatic awareness comprised of four dimensions: sensory ordering, sensory intensification, somatic imprinting, and somatic marking. -

The Last Guardian Free Download

THE LAST GUARDIAN FREE DOWNLOAD Eoin Colfer | 328 pages | 10 Jul 2012 | Hyperion | 9781423161615 | English | New York, United States The Last Guardian His own development process? August 6th, - Fumito Ueda says the new project has a long way to go before finishing development. Also, Trico's size prevents it from entering small spaces, although not from lack of trying. The The Last Guardian is regurgitated, now suddenly covered in the tattoo-like markings. There are very few games that become legendary for a single moment, a single unforgettable The Last Guardian, but Ico is certainly one of them. While initially stunned by the talismans, Trico overcomes its fear and rescues the Boy, using its partially-healed wings to glide to safety. Inside, they discover the Master of the Valleyan evil being resembling an orb of both darkness and pale light, who controls the Knights and the other Trico-beasts. It upsets our deeply learned patterns of spatial reasoning in video games. Refuses to commit to a release. Retrieved 17 February The Last Guardian distribution:. The Last Guardian received "generally favorable" reviews, according to video game review aggregator Metacritic. Retrieved 13 June The game is so beautiful and amazing with a fascinating story. One including a strange tower that Trico does not dare go The Last Guardian. August 4th, - Shuhei Yoshida: "Team Ico has something really, really good on the way. When it first encounters the Boy, it is hostile, as he is under the direction of the Master. Start a Wiki. There were a hundred games released this year that are more fluid The Last Guardian fun to play minute-to-minute, and dozens that perform with a silky smooth frame rate, yet I'll remember this adventure with Trico long after I've forgotten those. -

Data Protection Rights: What the Public Want and What the Public Want from Data Protection Authorities

Data protection rights: What the public want and what the public want from Data Protection Authorities Executive Summary 1. In order to be effective Data Protection Authorities (DPAs) need to understand what the public are concerned about, how they understand their data protection rights, what they expect DPAs to do to uphold their rights and how they would like to be empowered by DPAs to use them. 2. There is of course no ‘one size fits all’ view on what the public are concerned about, at more granular level there are much more nuanced views on sharing their personal data and how this should be used by organisations and what they would like to see from DPAs. There are however a number of themes which appear from the views of the public across Europe. 3. The commonly recurring themes of what the public want from data protection are; • Control over their personal data; • Transparency – they want to know what organisations will do with their personal data; • To understand the different purposes and benefits of data sharing; • Security of their personal data; and • Specific rights of access, deletion and portable personal data. 1 Data Protection Rights: What the public want and what the public want from Data Protection Authorities Prepared by the ICO for the European conference of Data Protection Authorities, Manchester - May 2015 4. The themes of what the public want from DPAs are; • Independence – DPAs free from outside influence; • Consistency – where possible a consistent approach to data protection across the EU; • Visibility – DPAs making themselves known, providing clear help and guidance to them and also to organisations; • Privacy certification, seals and trust marks – giving them confidence in the organisations who are processing their personal data; • Responsive to new technologies – DPAs that understand the privacy implications of the new technologies they encounter in their daily lives; and • Enforcement – appropriate remedies that are used effectively by DPAs to ensure that organisations comply with data protection rules. -

The Dynamics of the Player Narrative How Choice

THE DYNAMICS OF THE PLAYER NARRATIVE HOW CHOICE SHAPES VIDEOGAME LITERATURE A Thesis Presented to The Academic Faculty by Jeffrey Scott Bryan In Partial Fulfillment of the Requirements for the Degree Master’s of Science in Digital Media in the School of Literature, Media, and Communication Georgia Institute of Technology May, 2013 Copyright 2013 by Jeffrey Bryan THE DYNAMICS OF THE PLAYER NARRATIVE HOW CHOICE SHAPES VIDEOGAME LITERATURE Approved by: Dr. Ian Bogost, Advisor Dr. Jay Bolter School of Literature, Media, and School of Literature, Media, and Communication Communication Georgia Institute of Technology Georgia Institute of Technology Dr. Janet Murray Dr. Todd Petersen School of Literature, Media, and College of Humanities & Social Communication Sciences Georgia Institute of Technology Southern Utah University Date Approved: 3 April 2013 To my wife, Cora, for her unyielding faith in me. To Fallon, Quinn, and Silas, who inspire me every day to be more than the sum of my parts. To my mom for buying my first Super Nintendo. ACKNOWLEDGEMENTS I wish to thank my committee for the advice given and the time taken to help keep my analysis on course. I am grateful for the wealth of minds around me and the opportunities I have had to share my many disparate ideas. I would like to thank Dr. Kathleen Bieke, professor emeritus of English at Northwest College. When everyone else saw a high school dropout, she saw an English major with a wonderful dream of studying videogames as literature. I would also like to thank my grandparents, Captain E.K. Walling and Grace Walling, without whom I would never have been able to conceptualize a world in which I could possibly be attending graduate school. -

Web Content Guidelines for Playstation®4

Web Content Guidelines for PlayStation®4 Version 9.00 © 2021 Sony Interactive Entertainment Inc. [Copyright and Trademarks] "PlayStation" and "DUALSHOCK" are registered trademarks or trademarks of Sony Interactive Entertainment Inc. Oracle and Java are registered trademarks of Oracle and/or its affiliates. "Mozilla" is a registered trademark of the Mozilla Foundation. The Bluetooth® word mark and logos are registered trademarks owned by the Bluetooth SIG, Inc. and any use of such marks by Sony Interactive Entertainment Inc. is under license. Other trademarks and trade names are those of their respective owners. Safari is a trademark of Apple Inc., registered in the U.S. and other countries. DigiCert is a trademark of DigiCert, Inc. and is protected under the laws of the United States and possibly other countries. Symantec and GeoTrust are trademarks or registered trademarks of Symantec Corporation or its affiliates in the U.S. and other countries. Other names may be trademarks of their respective owners. VeriSign is a trademark of VeriSign, Inc. All other company, product, and service names on this guideline are trade names, trademarks, or registered trademarks of their respective owners. [Terms and Conditions] All rights (including, but not limited to, copyright) pertaining to this Guideline are managed, owned, or used with permission, by SIE. Except for personal, non-commercial, internal use, you are prohibited from using (including, but not limited to, copying, modifying, reproducing in whole or in part, uploading, transmitting, distributing, licensing, selling and publishing) any of this Guideline, without obtaining SIE’s prior written permission. 
SIE AND/OR ANY OF ITS AFFILIATES MAKE NO REPRESENTATION AND WARRANTY, EXPRESS OR IMPLIED, STATUTORY OR OTHERWISE, INCLUDING WARRANTIES OR REPRESENTATIONS WITH RESPECT TO THE ACCURACY, RELIABILITY, COMPLETENESS, FITNESS FOR PARTICULAR PURPOSE, NON-INFRINGEMENT OF THIRD PARTIES RIGHTS AND/OR SAFETY OF THE CONTENTS OF THIS GUIDELINE, AND ANY REPRESENTATIONS AND WARRANTIES RELATING THERETO ARE EXPRESSLY DISCLAIMED. -

WHITE PAPER VERSION 1.03 the ICO Public Sale

WHITE PAPER VERSION 1.03 The ICO public sale: 2018 Q4 The Mobile Computational Network Page 1 PREFACE We exist in a computer age where there is a struggle to cope with a scarcity of computational resources. A vast digital influx of critical data emerges daily, yet our reach to such capacity is challenged to keep afloat. It all stems from “Big Data” commercial applications, and exponential growth of ambitious scientific research. We seek to understand everything and everyone - from subatomic particles, to analysis of DNA genomes, social behavior and the outer limits of astrophysics and cosmology. From the “micro” to the “macro”, our insatiable computational needs kept spiraling out of control. The NATEE project is one of computational conservation. NATEE is a word in the Thai language which translates as “minute”. We can envision the millions of smartphone conversations unravelling “per minute” across the internet helps to illuminate the company’s name, and untapped potential of “mobile grid computing” Page 2 Table of Contents 1 Introduction 4 2 Understanding grid computing 7 3 Market situation 8 4 Problem statement 9 5 Solution outline of the vision 11 6 NATEE’s applications for big data 12 7 Business model 13 8 NATEE company and research 17 9 Key success factors 18 10 NATEE ecosystem 20 11 NATEE architecture 21 12 NATEE platform 25 13 Grid management 27 14 Incentive Mechanism for Mobile Decentralized Computing Systems 30 15 NATEE Research collaboration with NTU 32 16 Tokenomics the NATEE token 33 17 Crowd sale NATEE ico plans 35 18 Team NATEE 39 19 References 42 Page 3 INTRODUCTION “Connecting passive usages of personal device to some passive income” 1 Global computing power is a critically important resource of our time. -

The Last Guardian Narrating Through Mechanics and Empathy

The Last Guardian Narrating through Mechanics and Empathy Beat Suter In my dream I was flying. Flying through the darkness … I awoke to find myself in a strange cave. I noticed with a start that I was not alone. Beside me lay a great man-eating beast. (The Last Guardian 2016) Thus an older man begins with a deep voice from offstage and tells in retrospect his story, which he experienced as a boy. The poetic beginning is quickly replaced by the presence of the boy and the beast that have to get familiar with each other and find their way in a foreign environment. Together they learn the mechanics of interaction and progress. The narrative mechanics of the game The Last Guardian couldn’t be easier at first. A boy and an animal find themselves in a chasm, which looks like a dungeon. The large beast is chained and injured. The boy wakes up from unconsciousness and does not know where he is. It quickly becomes clear that the boy and the animal are in mutual dependence to each other. One cannot move forward without the other. EMPATHY AND SMALL NARRATIVE INTERPERSONAL INTERACTIONS It takes time for them to get to know each other and develop mutual support and cooperation. It is a slow approach. The boy tries to win the trust of the animal, which is much more powerful than him. This is the only way he can free the animal from the chain and pull the spears from its wounds. -

Synchronization by Distal Dendrite-Targeting Interneurons

BRAIN INSTITUTE Federal University of Rio Grande do Norte Synchronization by Distal Dendrite-targeting Interneurons - phenomenon, concept and reality - THESIS for the degree of Doctor of Philosophy in Neuroscience submitted by Markus Michael Hilscher Matriculation: 2013116277 at the Brain Institute of the Federal University of Rio Grande do Norte Advisor: Dr. Emelie Katarina Svahn Leão Brain Institute, Federal University of Rio Grande do Norte, Brazil Co-Advisor: Dr. Richardson Naves Leão Brain Institute, Federal University of Rio Grande do Norte, Brazil Co-Advisor: Dr. Klas Kullander Department of Neuroscience, Uppsala University, Sweden Opponent: Dr. Christopher Kushmerick Institute of Biological Sciences, Federal University of Minas Gerais, Brazil Opponent: Dr. Gilad Silberberg Department of Neuroscience, Karolinska Institute, Sweden Natal, 01.04.2017 Federal University of Rio Grande do Norte 59056-450 Natal Av. Nascimento de Castro, 2155 www.neuro.ufrn.br BRAIN INSTITUTE Federal University of Rio Grande do Norte Sincronização através de interneurônios que inervam dendritos distais - fenômeno, conceito e realidade - TESE para a obtenção do título de Doutor em Neurociências apresentada por Markus Michael Hilscher Matrícula 2013116277 no Instituto do Cérebro da Universidade Federal do Rio Grande do Norte Orientadora: Dra. Emelie Katarina Svahn Leão Instituto do Cérebro, Universidade Federal do Rio Grande do Norte, Brasil Co-Orientador: Dr. Richardson Naves Leão Instituto do Cérebro, Universidade Federal do Rio Grande do Norte, Brasil Co-Orientador: Dr. Klas Kullander Departamento de Neurociências, Universidade de Uppsala, Suécia Avaliador: Dr. Christopher Kushmerick Instituto de Ciências Biológicas, Universidade Federal de Minas Gerais, Brasil Avaliador: Dr. Gilad Silberberg Departamento de Neurociências, Instituto Karolinska, Suécia Natal, 01.04.2017 Federal University of Rio Grande do Norte 59056-450 Natal Av. -

Involvement and Presence in Four Different PC-Games

Adaptation into a Game: Involvement and Presence in Four Different PC-Games Jari Takatalo*, Jukka Häkkinen#, Jyrki Kaistinen*, Jeppe Komulainen*, Heikki Särkelä* & Göte Nyman* *University of Helsinki, P.O. Box 9, 00014 Helsinki, Finland [email protected] #Nokia Research Center, P.O. Box 45, 00045 Helsinki, Finland [email protected] ABSTRACT This study examines psychological adaptation in four different game-worlds. The concept of adaptation is used to describe the way the players willingly form a relationship with the physical and social reality provided by the game. It is measured by using a model consisting of two psychologically relevant constructs: involvement and presence. These constructs and the seven measurement scales measuring them have been factor-analytically extracted in a large (n=2182 subjects) data collected both via the Internet and in the laboratory. Several background variables were also studied to further understand the differences in players’ adaptation. The results showed different adaptation profiles among the four studied games. Gaming Experience Realness Computer and video games are said to be engaging because they are capable of providing visually realistic and life-like 3D environments for action [19]. Realistic high-quality graphics are also found to be one of the most important game characters in empirical studies [37]. Besides graphics, also sound and setting are considered essential to form a high degree of realism in games [29, 37]. In addition to perceptual realness also functional realness or physical consistency in the game-world is required for impressive gaming experience [6]. Inconsistencies in the game-