Fair Leader Election for Rational Agents in Asynchronous Rings and Networks

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

SoK: Tools for Game Theoretic Models of Security for Cryptocurrencies

SoK: Tools for Game Theoretic Models of Security for Cryptocurrencies Sarah Azouvi Alexander Hicks Protocol Labs University College London University College London Abstract: Cryptocurrencies have garnered much attention in recent years, both from the academic community and industry. One interesting aspect of cryptocurrencies is their explicit consideration of incentives at the protocol level, which has motivated a large body of work, yet many open problems still exist and current systems rarely deal with incentive related problems well. This issue arises due to the gap between Cryptography and Distributed Systems security, which deals with traditional security problems that ignore the explicit consideration of incentives, and Game Theory, which deals best with situations involving incentives. With this work, we offer a systematization of the work that relates to this problem, considering papers that blend Game Theory with Cryptography or Distributed systems. This gives an overview of the available tools, and we look at their (potential) use in practice, in the context of existing blockchain based systems that have been proposed or implemented. […] form of mining rewards, suggesting that they could be properly aligned and avoid traditional failures. Unfortunately, many attacks related to incentives have nonetheless been found for many cryptocurrencies [45, 46, 103], due to the use of lacking models. While many papers aim to consider both standard security and game theoretic guarantees, the vast majority end up considering them separately despite their relation in practice. Here, we consider the ways in which models in Cryptography and Distributed Systems (DS) can explicitly consider game theoretic properties and be incorporated into a system, looking at requirements based on existing cryptocurrencies. Methodology: As we are covering a topic that incorporates many different fields, coming up with an extensive list of papers would have -

Chicago Journal of Theoretical Computer Science the MIT Press

Chicago Journal of Theoretical Computer Science. The MIT Press. Volume 1997, Article 2. 3 June 1997. ISSN 1073–0486. MIT Press Journals, Five Cambridge Center, Cambridge, MA 02142-1493 USA; (617)253-2889; [email protected], [email protected]. Published one article at a time in LaTeX source form on the Internet. Pagination varies from copy to copy. For more information and other articles see: http://www-mitpress.mit.edu/jrnls-catalog/chicago.html; http://www.cs.uchicago.edu/publications/cjtcs/; ftp://mitpress.mit.edu/pub/CJTCS; ftp://cs.uchicago.edu/pub/publications/cjtcs. Kann et al., Hardness of Approximating Max k-Cut (Info). The Chicago Journal of Theoretical Computer Science is abstracted or indexed in Research Alert®, SciSearch®, Current Contents®/Engineering Computing & Technology, and CompuMath Citation Index®. © 1997 The Massachusetts Institute of Technology. Subscribers are licensed to use journal articles in a variety of ways, limited only as required to insure fair attribution to authors and the journal, and to prohibit use in a competing commercial product. See the journal’s World Wide Web site for further details. Address inquiries to the Subsidiary Rights Manager, MIT Press Journals; (617)253-2864; [email protected]. The Chicago Journal of Theoretical Computer Science is a peer-reviewed scholarly journal in theoretical computer science. The journal is committed to providing a forum for significant results on theoretical aspects of all topics in computer science. Editor in chief: Janos Simon Consulting -

Download This PDF File

The Gödel Prize 2012: Call for Nominations. Deadline: December 31, 2011. The Gödel Prize for outstanding papers in the area of theoretical computer science is sponsored jointly by the European Association for Theoretical Computer Science (EATCS) and the Association for Computing Machinery, Special Interest Group on Algorithms and Computation Theory (ACM-SIGACT). The award is presented annually, with the presentation taking place alternately at the International Colloquium on Automata, Languages, and Programming (ICALP) and the ACM Symposium on Theory of Computing (STOC). The 20th prize will be awarded at the 39th International Colloquium on Automata, Languages, and Programming to be held at the University of Warwick, UK, in July 2012. The Prize is named in honor of Kurt Gödel in recognition of his major contributions to mathematical logic and of his interest, discovered in a letter he wrote to John von Neumann shortly before von Neumann’s death, in what has become the famous P versus NP question. The Prize includes an award of USD 5000. AWARD COMMITTEE: The winner of the Prize is selected by a committee of six members. The EATCS President and the SIGACT Chair each appoint three members to the committee, to serve staggered three-year terms. The committee is chaired alternately by representatives of EATCS and SIGACT. The 2012 Award Committee consists of Sanjeev Arora (Princeton University), Josep Díaz (Universitat Politècnica de Catalunya), Giuseppe Italiano (Università di Roma Tor Vergata), Mogens Nielsen (University of Aarhus), Daniel Spielman (Yale University), and Eli Upfal (Brown University). ELIGIBILITY: The rule for the 2011 Prize is given below and supersedes any different interpretation of the parametric rule to be found on websites on both SIGACT and EATCS. -

Chicago Journal of Theoretical Computer Science the MIT Press

Chicago Journal of Theoretical Computer Science. The MIT Press. Volume 1998, Article 2. 16 March 1998. ISSN 1073–0486. MIT Press Journals, Five Cambridge Center, Cambridge, MA 02142-1493 USA; (617)253-2889; [email protected], [email protected]. Published one article at a time in LaTeX source form on the Internet. Pagination varies from copy to copy. For more information and other articles see: http://mitpress.mit.edu/CJTCS/; http://www.cs.uchicago.edu/publications/cjtcs/; ftp://mitpress.mit.edu/pub/CJTCS; ftp://cs.uchicago.edu/pub/publications/cjtcs. Kupferman and Vardi, Verification of Systems (Info). The Chicago Journal of Theoretical Computer Science is abstracted or indexed in Research Alert®, SciSearch®, Current Contents®/Engineering Computing & Technology, and CompuMath Citation Index®. © 1998 The Massachusetts Institute of Technology. Subscribers are licensed to use journal articles in a variety of ways, limited only as required to insure fair attribution to authors and the journal, and to prohibit use in a competing commercial product. See the journal’s World Wide Web site for further details. Address inquiries to the Subsidiary Rights Manager, MIT Press Journals; (617)253-2864; [email protected]. The Chicago Journal of Theoretical Computer Science is a peer-reviewed scholarly journal in theoretical computer science. The journal is committed to providing a forum for significant results on theoretical aspects of all topics in computer science. Editor in chief: Janos Simon Consulting editors: Joseph Halpern, -

SIGACT Viability

SIGACT Name and Mission: SIGACT: SIGACT Algorithms & Computation Theory The primary mission of ACM SIGACT (Association for Computing Machinery Special Interest Group on Algorithms and Computation Theory) is to foster and promote the discovery and dissemination of high quality research in the domain of theoretical computer science. The field of theoretical computer science is interpreted broadly so as to include algorithms, data structures, complexity theory, distributed computation, parallel computation, VLSI, machine learning, computational biology, computational geometry, information theory, cryptography, quantum computation, computational number theory and algebra, program semantics and verification, automata theory, and the study of randomness. Work in this field is often distinguished by its emphasis on mathematical technique and rigor.

Newsletters:

| Year | Volume | Issue | Issue Date | Number of Pages | Actually Mailed |
|------|--------|-------|----------------|-----------------|-----------------|
| 2014 | 45 | 01 | March 2014 | N/A | |
| 2013 | 44 | 04 | December 2013 | 104 | 27-Dec-13 |
| 2013 | 44 | 03 | September 2013 | 96 | 30-Sep-13 |
| 2013 | 44 | 02 | June 2013 | 148 | 13-Jun-13 |
| 2013 | 44 | 01 | March 2013 | 116 | 18-Mar-13 |
| 2012 | 43 | 04 | December 2012 | 140 | 29-Jan-13 |
| 2012 | 43 | 03 | September 2012 | 120 | 06-Sep-12 |
| 2012 | 43 | 02 | June 2012 | 144 | 25-Jun-12 |
| 2012 | 43 | 01 | March 2012 | 100 | 20-Mar-12 |
| 2011 | 42 | 04 | December 2011 | 104 | 29-Dec-11 |
| 2011 | 42 | 03 | September 2011 | 108 | 29-Sep-11 |
| 2011 | 42 | 02 | June 2011 | 104 | N/A |
| 2011 | 42 | 01 | March 2011 | 140 | 23-Mar-11 |
| 2010 | 41 | 04 | December 2010 | 128 | 15-Dec-10 |
| 2010 | 41 | 03 | September 2010 | 128 | 13-Sep-10 |
| 2010 | 41 | 02 | June 2010 | 92 | 17-Jun-10 |
| 2010 | 41 | 01 | March 2010 | 132 | 17-Mar-10 |

Membership: based on fiscal year dates 1st Year 2 + Years Total Year Professional -

3Rd International Workshop on Strategic Reasoning

Proceedings of the 3rd International Workshop on Strategic Reasoning Oxford, United Kingdom, September 21-22, 2015 Edited by: Julian Gutierrez, Fabio Mogavero, Aniello Murano, Michael Wooldridge Preface This volume contains the papers presented at SR 2015: the 3rd International Workshop on Strategic Reasoning held on September 20-21, 2015 in Oxford. Strategic reasoning is one of the most active research areas in the multi-agent system domain. The literature in this field is extensive and provides a plethora of logics for modelling strategic ability. Theoretical results are now being used in many exciting domains, including software tools for information system security, robot teams with sophisticated adaptive strategies, and automatic players capable of beating expert human adversary, just to cite a few. All these examples share the challenge of developing novel theories, tools, and techniques for agent-based reasoning that take into account the likely behaviour of adversaries. The SR international workshop aims to bring together researchers working on different aspects of strategic reasoning in computer science and artificial intelligence, both from a theoretical and a practical point of view. This year SR has four invited talks: 1. Coalgebraic Analysis of Equilibria in Infinite Games. Samson Abramsky (University of Oxford). 2. In Between High and Low Rationality. Johan van Benthem (University of Amsterdam and Stanford University). 3. Language-based Games. Joseph Halpern (Cornell University). 4. A Revisionist History of Algorithmic Game Theory. Moshe Vardi (Rice University). We also have four invited tool presentations and eleven contributed papers. Each submission to SR 2015 was evaluated by three reviewers for quality and relevance to the topics of the workshop. -

Mark Rogers Tuttle

Mark Rogers Tuttle Intel Corporation 8 Melanie Lane 77 Reed Road Arlington, MA 02474-2619 Hudson, MA 01749 (781) 648-1106 (978) 553-3305 [email protected] [email protected] www.markrtuttle.com Research Interests Distributed computation (design, analysis, and verification of distributed algorithms; models and semantics of distributed computation; formal methods for specification and verification of algorithms), sequential and parallel algorithms, general theory of computation. Education MASSACHUSETTS INSTITUTE OF TECHNOLOGY Cambridge, MA Ph.D. in Computer Science, September 1989. Thesis Title: Knowledge and Distributed Computation. Thesis Advisor: Nancy A. Lynch. First place on computer algorithms Ph.D. qualifying exam; letter of commendation for exceptional performance in Computer System Architecture under Professor Arvind; IBM Graduate Fellowship; GTE Graduate Fellowship; Mortar Board National Foundation Fellowship; NSF Graduate Fellowship Competition, honorable mention; member, Sigma Xi. MASSACHUSETTS INSTITUTE OF TECHNOLOGY Cambridge, MA M.S. in Computer Science, May 1987. Thesis Title: Hierarchical Correctness Proofs for Distributed Algorithms. Thesis Advisor: Nancy A. Lynch. UNIVERSITY OF NEBRASKA–LINCOLN Lincoln, NE B.S. in Mathematics and Computer Science, June 1984. With highest distinction. Ranked first in graduating class; member Phi Beta Kappa and Mortar Board; designated “Notable Person of the University,” Chancellor’s Scholar, Regents’ Scholar, and Joel Stebbins Scholar. Recognition Best paper award at Formal Methods in -

Contents

Contents: ACM Awards Reception and Banquet, June 2018; Introduction; A.M. Turing Award; ACM Prize in Computing; ACM Charles P. “Chuck” Thacker Breakthrough in Computing Award; ACM – AAAI Allen Newell Award; Software System Award; Grace Murray Hopper Award; Paris Kanellakis Theory and Practice Award; Karl V. Karlstrom Outstanding Educator Award; Eugene L. Lawler Award for Humanitarian Contributions within Computer Science and Informatics; Distinguished Service Award; ACM Athena Lecturer Award; Outstanding Contribution -

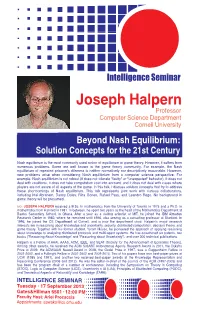

Joseph Halpern Professor Computer Science Department Cornell University Beyond Nash Equilibrium: Solution Concepts for the 21st Century

Intelligence Seminar. Joseph Halpern, Professor, Computer Science Department, Cornell University. Beyond Nash Equilibrium: Solution Concepts for the 21st Century. Nash equilibrium is the most commonly used notion of equilibrium in game theory. However, it suffers from numerous problems. Some are well known in the game theory community. For example, the Nash equilibrium of repeated prisoner's dilemma is neither normatively nor descriptively reasonable. However, new problems arise when considering Nash equilibrium from a computer science perspective. For example, Nash equilibrium is not robust (it does not tolerate "faulty" or "unexpected" behavior), it does not deal with coalitions, it does not take computation cost into account, and it does not deal with cases where players are not aware of all aspects of the game. In this talk, I discuss solution concepts that try to address these shortcomings of Nash equilibrium. This talk represents joint work with various collaborators, including Ittai Abraham, Danny Dolev, Rica Gonen, Rafael Pass, and Leandro Rego. No background in game theory will be presumed. BIO: JOSEPH HALPERN received a B.Sc. in mathematics from the University of Toronto in 1975 and a Ph.D. in mathematics from Harvard in 1981. In between, he spent two years as the head of the Mathematics Department at Bawku Secondary School, in Ghana. After a year as a visiting scientist at MIT, he joined the IBM Almaden Research Center in 1982, where he remained until 1996, also serving as a consulting professor at Stanford. In 1996, he joined the CS Department at Cornell, and is now the department chair. -

Mark Rogers Tuttle

Mark Rogers Tuttle HP Labs Cambridge 8 Melanie Lane One Cambridge Center Arlington, MA 02474-2619 Cambridge, MA 02142-1612 (781) 648-1106 [email protected] (617) 551-7635 Research Interests Distributed computation (design, analysis, and verification of distributed algorithms; models and semantics of distributed computation; formal methods for specification and verification of algorithms), sequential and parallel algorithms, general theory of computation. Education Massachusetts Institute of Technology Cambridge, MA Ph.D. in Computer Science, September 1989. Thesis Title: Knowledge and Distributed Computation. Thesis Advisor: Nancy A. Lynch. First place on computer algorithms Ph.D. qualifying exam; letter of commendation for exceptional performance in Computer System Architecture under Professor Arvind; IBM Graduate Fellowship; GTE Graduate Fellowship; Mortar Board National Foundation Fellowship; NSF Graduate Fellowship Competition, honorable mention; member, Sigma Xi. Massachusetts Institute of Technology Cambridge, MA M.S. in Computer Science, May 1987. Thesis Title: Hierarchical Correctness Proofs for Distributed Algorithms. Thesis Advisor: Nancy A. Lynch. University of Nebraska–Lincoln Lincoln, NE B.S. in Mathematics and Computer Science, June 1984. With highest distinction. Ranked first in graduating class; member Phi Beta Kappa and Mortar Board; designated “Notable Person of the University,” Chancellor’s Scholar, Regents’ Scholar, and Joel Stebbins Scholar. Awards Young Alumni Achievement Award, University of Nebraska–Lincoln, May 1997 Certificate of Recognition, Digital Equipment Corporation, Wildfire Verification Project, April 1998 National champion, ACM National Scholastic Programming Competition, UNL team, 1983 Employment Hewlett Packard Company (2002–present) Cambridge, MA Principal Member of Technical Staff, HP Labs Cambridge, under director Richard Zippel. Compaq Computer Corporation (1999–2002) Cambridge, MA Promoted to Principal Member of Technical Staff, Cambridge Research Lab, under directors R. -

Cornell Booklet

Cornell-VinUniversity Project. INTRODUCTION. “The goal of VinUniversity is to be a university of the highest international standards in research, teaching and training for students both at the undergraduate and graduate levels, serving as a magnet for the most talented faculty and students from all over the world.” Rohit Verma, Founding Provost, VinUniversity; Protem Committee Member, VinUniversity Project; Former Dean of External Relations, Cornell SC Johnson College of Business. On November 27, 2017, Cornell University, on behalf of the Cornell SC Johnson College of Business, entered into a multi-year academic consulting contract with Vingroup, a large conglomerate located in Hanoi, Vietnam. The goal of this project is to support Vingroup in its aspiration to create a new, world-class private university: VinUniversity. The new university will include Colleges of Business, Engineering, and Health Sciences. Cornell is involved with every aspect of VinUniversity's development, spanning infrastructure, governance, faculty hiring, and curriculum development. Cornell is leading the consultive collaboration for the Business and Engineering Colleges. The University of Pennsylvania is advising on the Health Sciences College. Public engagement is central to Cornell's land-grant mission, and this unique opportunity extends that mission internationally. Situated within the Cornell SC Johnson College of Business, The Cornell-VinUniversity Project Team will manage this collaboration. Cornell’s primary role will be to build its faculty and leadership teams to validate and assure the quality of curriculum developed by VinUniversity faculty, and to guide VinUniversity on a path of international accreditation. -

Chicago Journal of Theoretical Computer Science MIT Press

Chicago Journal of Theoretical Computer Science. MIT Press. Volume 1995, Article 2. 21 July, 1995. ISSN 1073–0486. MIT Press Journals, 55 Hayward St., Cambridge, MA 02142; (617)253-2889; [email protected], [email protected]. Published one article at a time in LaTeX source form on the Internet. Pagination varies from copy to copy. For more information and other articles see: http://www-mitpress.mit.edu/jrnls-catalog/chicago.html/; http://www.cs.uchicago.edu/publications/cjtcs/; gopher.mit.edu; gopher.cs.uchicago.edu; anonymous ftp at mitpress.mit.edu; anonymous ftp at cs.uchicago.edu. Grolmusz, Weak mod m Representation of Boolean Functions (Info). © 1995 by Massachusetts Institute of Technology. Subscribers are licensed to use journal articles in a variety of ways, limited only as required to insure fair attribution to authors and the journal, and to prohibit use in a competing commercial product. See the journal’s World Wide Web site for further details. Address inquiries to the Subsidiary Rights Manager, MIT Press Journals; (617)253-2864; [email protected]. The Chicago Journal of Theoretical Computer Science is a peer-reviewed scholarly journal in theoretical computer science. The journal is committed to providing a forum for significant results on theoretical aspects of all topics in Computer Science. Editor in chief: Janos Simon. Consulting editors: Joseph Halpern, Stuart A. Kurtz, Raimund Seidel. Editors: Martin Abadi, Pankaj Agarwal, Eric Allender, Tetsuo Asano, László Babai, Eric Bach, Stephen Brookes, Jin-Yi Cai, Anne Condon, Cynthia Dwork, David Eppstein, Ronald Fagin, Lance Fortnow, Steven Fortune, Greg Frederickson, Andrew Goldberg, Georg Gottlob, Vassos Hadzilacos, Juris Hartmanis, Maurice Herlihy, Stephen Homer, Neil Immerman, Paris Kanellakis, Howard Karloff, Philip Klein, Phokion Kolaitis, Stephen Mahaney, Michael Merritt, John Mitchell, Ketan Mulmuley, Gil Neiger, David Peleg, Andrew Pitts, James Royer, Alan Selman, Nir Shavit, Eva Tardos, Sam Toueg, Moshe Vardi, Jennifer Welch, Pierre Wolper. Managing editor: Michael J.