Identification and Characterization of Events in Social Media

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

The Dark Side User Manual

USER MANUAL 1.0.2 EASTWEST | THE DARK SIDE IMPORTANT COMPATIBILITY NOTE! Our Revolutionary New Opus Software Engine Our brand new Opus software engine has been years in development, and replaces the Play engine. All EastWest Libraries (with the exception of the original Hollywood Orchestra, the original Hollywood Solo Instruments, and the MIDI Guitar Series) are supported in Opus, allowing them to take advantage of a faster, more powerful, more flexible, and better looking software engine. Opus comes with some incredible new features such as individual instrument downloads, customized key-switches, new effects for the mixer page, scalable retina user interface upgrades for legacy products, a powerful new script language, and many more features that allow you to completely customize the sound of each instrument. It’s one of the most exciting developments in the history of our company and will be the launching pad for many exciting new products in the future. Using Opus and Play Together Opus and Play are two separate software products, anything you have saved in your projects will still load up inside the saved Play version of the plugins. You can update your current/existing projects to Opus if you so choose, or leave them saved within Play. After purchasing or upgrading to Opus you do not need to use Play, but it may be more convenient to make small adjustments to an older composition in your DAW loading the instruments saved in Play instead of replacing them with Opus. For any new composition, just use Opus. A Note About User Manuals All EastWest Libraries have their own user manuals (like this one) that refer to instruments and controls that are specific to their respective libraries, as well as referencing the Play User Manual for controls that are common to all EastWest Libraries.

Calendar Filled with Holes by Adrienne Belz Girl’s Soccer Game Against Bryan

The Bagpipe, Vol. 57 No. 5, October 7, 2010, bagpipeonline.com
The Parking Lottery: Exploring Covenant’s parking vices and virtues
ANNIE HUNTINGTON
by Karin Olson [email protected]
For those driving cars at Covenant College, finding a parking spot can quickly spiral into a moment of anxiety and frustration. “You’re lucky to find a parking spot the first few lots you try,” said senior Alison Gore, who lives off … late to class looking for one.”
Greg Ford, Safety and Security Coordinator, believes frustration with parking anywhere is inevitable. “I often feel that Covenant gets a ‘bad rap’ when it comes to parking simply because parking is a sensitive issue anywhere you go,” he said. … plaints have been from off-campus students.
There are an estimated 834 parking spaces on Covenant’s campus where students may park. This year, the school sold about 680 permits. Ford said the lots closer to campus are clogged because people are refusing to park in Scotland …
However, many students find parking at Scotland Yard to be inconvenient. “I didn’t pay $100 for an off-campus sticker so I could park in Scotland Yard. By the time I get to school, I don’t have time for a long walk up from one of the satellite parking lots,” said senior Lisa Cow… parking.
“They should either not let freshmen have cars or they need to invest more money in off-campus parking for students,” said Gore.
Parking permits at Covenant are $100 for main campus parking (off-campus parking permit free of charge), $75 for Scotland …

Record Collector Magazine

The Year of the HORSE
That Mark Linkous of Sparklehorse once briefly died is probably the least interesting thing about him. In 1997, the American musician collapsed in the en suite bathroom of a London hotel with his legs pinned beneath him for 14 hours. He was eventually found by a maid and taken to St. Mary's Hospital, where, for two minutes, his heart stopped beating. It reads like a meta rock'n'roll story for a man who - like kindred spirits Grandaddy - has tried to circumvent rock'n'roll cliché. Nearly dying isn't cool.
… priated lyrical inspiration from south Virginian novelists, Ohio-born cinematographers and film-makers, 16th Century English dramatists, esoteric American troubadours, a French poet and music critic and a deadpan American songwriter. He's also sung about: pigs, horses, butchered cows, "painbirds" and "pianobirds", rabbits, spiders, insects, leeches, hummingbirds, "hundreds of sparrows", wolves, crows, snails, dogs, tigers and "more yellow birds". Mark Linkous and his more or less anonymous co-conspirators crush guitars, tape loops, organs and frequently distort…
… Waits, Grandaddy and Mercury Rev, Sparklehorse cut through their off-kilter music with almost perverse experimentation. They are as likely to create vigorous, feedback-enfettered rock songs as they are drowsy instrumentals; as likely to forge fragile, debilitated ballads as esoteric, sample-heavy interludes. For the most part, this music is poignant, melancholic and touching. On their first album, Vivadixiesubmarinetransmissionplot, Linkous even sang about rescuing an injured hawk: "I chased him round, tried to pick him up." Linkous has explained, "but he kept biting …

“David Lynch to Show New Works at Solo Gallery Exhibition.” Los Angeles Times

Ng, David. “David Lynch to show new works at solo gallery exhibition.” Los Angeles Times. 14 November 2013. Web. David Lynch to show new works at solo gallery exhibition David Lynch will be opening a show this month at Kayne Griffin Corcoran. It will run Nov. 23 through Jan. 4. David Lynch hasn't released a new feature since "Inland Empire" in 2006, but the director has been creating visual art and exhibiting surreal creations in gallery shows. Lynch held solo exhibitions in Los Angeles in 2009 and 2011, and will be opening yet another show this month at Kayne Griffin Corcoran. The exhibition, which is being curated by Brett Littman, executive director of the Drawing Center in New York, will focus on how Lynch uses "naming" in film, photography, drawings and other works dating from 1968 to the present. Kayne Griffin Corcoran said the show will run from Nov. 23 to Jan. 4, and will feature a group of new paintings, drawings and watercolors by Lynch. The filmmaker, whose credits include "Blue Velvet," "Mulholland Dr." and the "Twin Peaks" television series, has previously exhibited his art at William Griffin Gallery in Santa Monica. In 2009, he showed original artwork for the album "Dark Night of the Soul," featuring music by Danger Mouse and Sparklehorse, at L.A.'s Michael Kohn Gallery. Next year, Lynch's work will be the subject of a retrospective show at the Pennsylvania Academy of Fine Arts in Philadelphia, where the director studied in his youth. The show will feature approximately 75 paintings and drawings created by Lynch since 1965.

Championsip Celebrating the Creators of Jobs, Innovation, Safety, and Access

April 23, 2014, U.S. Chamber of Commerce, Washington, D.C.
2014 CHAMPIONSIP: Celebrating the Creators of Jobs, Innovation, Safety, and Access
2014 IP Champions Agenda. April 23, 2014. Hall of Flags | U.S. Chamber of Commerce
Time | Details
8:30 AM | Registration
9:00 AM | Opening Remarks • David Hirschmann, President & CEO, Global Intellectual Property Center
9:05 AM | Business Innovations Panel. Moderator: Mark Crowell, Executive Director, U.Va. Innovations • Reza Monazami, Ph.D, University of Virginia • Jalali Hartman, Founder & Chief Human, ROBAUTO, Inc.
9:55 AM | Presentation of Awards to Business Innovators • Mark Crowell, Executive Director, U.Va. Innovations • Reza Monazami, Ph.D, University of Virginia • Jalali Hartman, Founder & Chief Human, ROBAUTO, Inc.
10:00 AM | Consumer Awareness Campaigns - Panel Discussion • Marjorie Clifton, Executive Director, Center for Safe Internet Pharmacies • Libby Baney, Executive Director, Alliance for Safe Online Pharmacies • Alun Jones, Chief of Communication and Advocacy, United Nations Office of Drugs and Crime • Chuck Westfall, Technical Advisor, Professional Engineering & Solutions Division, Canon U.S.A.
10:55 AM | Presentation of Awards to Consumer Awareness Campaigns • “Be Safe. Buy Smart.” - CSIP • “Counterfeit: Don’t buy into organized crime.” - UNODC • “Anti-Counterfeit Consumer Awareness Campaign” - Canon U.S.A.
11:00 AM | Consumer Awareness Speech • Introduction by: Sandra Aistars, Chief Executive Officer, Copyright Alliance • David Lowery, Musician and Guest Lecturer, Terry College of Business, University of Georgia
11:20 AM | Presentation of Award to Consumer Awareness Speaker • David Lowery, Musician and Guest Lecturer, Terry College of Business, University of Georgia
11:25 AM | Presentation of Awards to Public Officials. Introduction by: William Reid, Vice President, Global Anti-Counterfeiting Operations, Eli Lilly • Mark Cohen, Senior Counsel, China, U.

Just Announced

JUST ANNOUNCED Aldous Harding Wed 30 & Thu 31 Mar 2022, Barbican Hall, 8pm Tickets £20 – £25 New Zealand singer-songwriter Aldous Harding, alongside her band, makes her Barbican debut in March 2022. The two shows will feature material from her three studio albums to date. Harding, whose music has been described as ‘disquietingly beautiful and unsettling as her image’ by the Guardian, drew critical acclaim for the gothic indie folk style on her self-titled 2014 debut album. Her two follow-up albums – 2017’s Party and 2019’s Designer – were released on British independent label 4AD. Both records were co-produced by John Parish (PJ Harvey, Sparklehorse). John Parish also produced Harding’s track Old Peel – known and loved by fans as the set-closer at her most recent live shows – which will be digitally released on 15 June and as a limited 7” on 9 July 2021. Aldous Harding was awarded a prestigious Silver Scroll Award for The Barrel (the New Zealand equivalent of the Ivor Novellos) and was nominated in the Solo Artist category in 2020’s Q Awards. Designer also made the top 5 in Mojo’s 2019 Best Albums of the Year. Touring extensively pre-pandemic, Harding is renowned for the captivating state of possession she occupies in live performances, winning over audiences across the world. Produced by the Barbican in association with Bird On The Wire On sale to Barbican patrons and members on Wed 16 Jun 2021 On general sale on Fri 18 Jun 2021 Find out more FURTHER EVENT DETAILS ANNOUNCED George the Poet: Live from the Barbican Thu 1 Jul 2021, Barbican Hall, 8pm Tickets £20 – 30 & £12.50 (livestream) George the Poet will bring his innovative brand of musical poetry to Live from the Barbican this summer.

Ghosts in the Photographs: Oxford’s Post-Rock Sound Explorers Talk Terrifying Auditions, Music from Outer Space and Playing in the Dark

NIGHTSHIFT: Oxford’s Music Magazine. Issue 277, August 2018. Free every month. [email protected] @NightshiftMag NightshiftMag nightshiftmag.co.uk
photo: Helen Messenger
“The great thing about not having a vocalist is the listener has to think for themselves: they take away whatever the music has made them feel, not what someone has told them to feel.”
Ghosts in the Photographs: Oxford’s post-rock sound explorers talk terrifying auditions, music from outer space and playing in the dark.
Also in this issue: THE CELLAR under threat again. ALPHABET BACKWARDS return. Introducing THE DOLLYMOPS. CORNBURY reviewed. Plus all your Oxford music news, reviews and gigs for August.
NIGHTSHIFT: PO Box 312, Kidlington, OX5 1ZU. Phone: 01865 372255 email: [email protected] Online: nightshiftmag.co.uk
NEWS
THE CELLAR faces another fight for its survival following a severe reduction of its capacity last month. 15,000 signed a petition to save the venue from closure last year when the building’s owners, the St Michael’s and All Saints charities put in an application to change the use of the building to retail storage. The plans, which would have ended live music at The Cellar after 40 years, were thrown out by Oxford City Council planning officers.
… old self. “This past week Joe has been under general anaesthetic twice: first for neurosurgery and second for his leg. The neurosurgery consisted of reshaping part of his skull that had collapsed and been compressed into the brain by the weight of the truck’s trailer. The operation was successful, but the …

CMJ Directory SPARKLEHORSE: Back in the Saddle Again Story by Colin Helms

CMJ Directory SPARKLEHORSE: Back In The Saddle Again Story by Colin Helms With his latest, Sparklehorse mainstay Mark Linkous finds gentle beauty on the other side of pain. Five years ago, Mark Linkous nearly died. He then spent over 12 months in a wheelchair, struggling with chronic depression and a crippling reliance on painkillers, and subsequently found, or so the story goes, that he couldn't remember how to write songs anymore. This year he's found the means - credit spiritual rebirth or the blackest sense of ironic humor - to not only create his most affecting Sparklehorse recording to date, but to brazenly dub the album It's A Wonderful Life. "If I've learned nothing else, it's that I should at least try to notice one little thing every day," he offers. "Whether it's not getting eaten by a bear, or seeing a little kid smile at you on the street. Before I go to bed I say 'I'm glad I didn't wake up dead today.'" The infamous day Linkous nearly woke up dead - the aftermath of a night in a London hotel room during which too many Valium and antidepressants were consumed - may have put a damper on Sparklehorse's output in the time since, but it seems to have changed Linkous's life (and life-work) for the better. Wonderful Life's scarred and cracked sonic façade strongly resembles that of 1995's Vivadixiesubmarinetransmissionplot and 1999's Good Morning Spider, but it's a gentler, somehow lighter collection, populated by horse heads and tiger hearts, fat babies and yellow birds.

Music Globally Protected Marks List (GPML) Music Brands & Music Artists

Music Globally Protected Marks List (GPML) Music Brands & Music Artists
© 2012 - DotMusic Limited (.MUSIC™). All Rights Reserved. DotMusic reserves the right to modify this Document. This Document cannot be distributed, modified or reproduced in whole or in part without the prior expressed permission of DotMusic. Visit www.music.us
Disclaimer: This GPML Document is subject to change. Only artists exceeding 1 million units in sales of global digital and physical units are eligible for inclusion in the GPML. Brands are eligible if they are globally-recognized and have been mentioned in established music trade publications. Please provide DotMusic with evidence that such criteria are met at [email protected] if you would like your artist name or brand name to be included in the DotMusic GPML.
GLOBALLY PROTECTED MARKS LIST (GPML) - MUSIC ARTISTS DOTMUSIC (.MUSIC)
? and the Mysterians
10 Years
10,000 Maniacs
10cc
12 Stones
13th Floor Elevators
1910 Fruitgum Co.
2 Unlimited
3 Doors Down
30 Seconds to Mars

1 Arcade Fire

All Songs Considered Listeners Pick The Best Music Of 2010: The Top 100
1 Arcade Fire: The Suburbs
2 The Black Keys: Brothers
3 The National: High Violet
4 Mumford & Sons: Sigh No More
5 Broken Bells: Broken Bells
6 LCD Soundsystem: This Is Happening
7 Vampire Weekend: Contra
8 Sufjan Stevens: The Age Of Adz
9 Beach House: Teen Dream
10 Kanye West: My Beautiful Dark Twisted Fantasy
11 Gorillaz: Plastic Beach
12 Sleigh Bells: Treats
13 Jonsi: Go
14 Local Natives: Gorilla Manor
15 Band Of Horses: Infinite Arms
16 The Tallest Man On Earth: The Wild Hunt
17 Deerhunter: Halcyon Digest
18 Spoon: Transference
19 Janelle Monae: The ArchAndroid
20 Yeasayer: Odd Blood
21 Ray LaMontagne and The Pariah Dogs: God Willin' and the Creek Don't Rise
22 New Pornographers: Together
23 Broken Social Scene: Forgiveness Rock Record
24 Joanna Newsom: Have One On Me
25 Cee Lo: The Ladykiller
26 MGMT: Congratulations
27 Sharon Jones And The Dap Kings: I Learned The Hard Way
28 Massive Attack: Heligoland
29 Josh Ritter: So Runs The World Away
30 Girl Talk: All Day
31 She And Him: Volume II
32 Bruce Springsteen: The Promise
33 Belle And Sebastian: Write About Love
34 Big Boi: Sir Lucious Left Foot: The Son Of Dusty Chico
35 Best Coast: Crazy For You
36 The White Stripes: Under Great White Northern Lights
37 Danger Mouse And Sparklehorse: Dark Night Of The Soul
38 Caribou: Swim
39 The Roots: How I Got Over
40 Kings Of Leon: Come Around Sundown
41 Frightened Rabbit: The Winter Of Mixed Drinks
42 Neil Young: Le Noise
43 Drive-By Truckers: The Big To-Do
44 Flying Lotus: Cosmogramma
45 Carolina Chocolate Drops: Genuine Negro Jig
46 Dead Weather: Sea of Cowards
47 Laura Veirs: July Flame
48 The Walkmen: Lisbon
49 Dr.

L & W Program Final 2

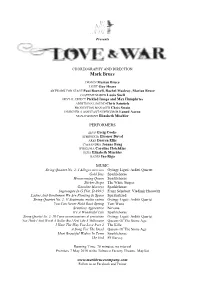

Presents
CHOREOGRAPHY AND DIRECTION: Mark Bruce
DESIGN: Marian Bruce
LIGHT: Guy Hoare
ARTWORK FOR STAGE: Paul Boswell, Rachel Macleay, Marian Bruce
COSTUME MAKER: Lucia Snell
SPECIAL EFFECT: Pickled Image and Max Humphries
ADDITIONAL SOUND: Chris Samuels
PRODUCTION MANAGER: Chris Swain
DESIGNER’S ASSISTANT/SUPERVISOR: Luned Aaron
MANAGEMENT: Elizabeth Mischler
PERFORMERS
ZEUS: Greig Cooke
APHRODITE: Eleanor Duval
ARES: Darren Ellis
CASSANDRA: Joanne Fong
IPHIGENIA: Caroline Hotchkiss
HERA: Elizabeth Mischler
HADES: Ino Riga
MUSIC
String Quartet No. 2: I Allegro nervoso - György Ligeti: Arditti Quartet
Gold Day - Sparklehorse
Homecoming Queen - Sparklehorse
Slicker Drips - The White Stripes
Gasoline Horseys - Sparklehorse
Impromptu In G Flat, D 899/3 - Franz Schubert: Vladimir Horowitz
Ladies And Gentlemen We Are Floating In Space - Spiritualized
String Quartet No. 2: II Sostenuto, molto calmo - György Ligeti: Arditti Quartet
You Can Never Hold Back Spring - Tom Waits
Scentless Apprentice - Nirvana
It's A Wonderful Life - Sparklehorse
String Quartet No. 2: III Come un meccanismo di precisione - György Ligeti: Arditti Quartet
You Think I Ain't Worth A Dollar But I Feel Like A Millionaire - Queens Of The Stone Age
I Hate The Way You Love Part 2 - The Kills
A Song For The Dead - Queens Of The Stone Age
Most Beautiful Widow In Town - Sparklehorse
The End - PJ Harvey
Running Time: 70 minutes, no interval
Premiere 7 May 2010 at the Tobacco Factory Theatre, Mayfest
www.markbrucecompany.com Follow us on Facebook and Twitter
Mark Bruce has choreographed and danced professionally in the UK and abroad, working with Rosas, Introdans, Extemporary Dance Theatre and … and made Romeo Error and Good to Go (for Evolution at The Place) and Tempt My Better Angel and No More Ghosts (for Richard Alston Dance Company).

Dark Night of the Soul

Danger Mouse And Sparklehorse - Dark Night Of The Soul Uncut has reviewed plenty of obscure albums in its time but this may be a first: an album that doesn’t officially exist. As a result of some undisclosed contractual snafu, EMI put the mockers on Dark Night Of The Soul just as it was being readied for release. Undeterred, Danger Mouse pressed ahead anyway, offering Dark Night… as a limited edition photo book featuring David Lynch’s “visual narrative” for the project, accompanied by a blank, recordable CD and the not-so-cryptic instruction to “use it as you will”. Though careful not to say as much, Danger Mouse was essentially encouraging us to bootleg his own LP, which by this point had mysteriously surfaced on file-sharing networks. Danger Mouse has form in this area, of course. The Grey Album, his inventive mash-up of Jay-Z and The Beatles, also aroused the wrath of EMI, who held the rights to The White Album. It made his reputation, if not his fortune, so he knows the value of peer-to-peer propaganda. On the other hand, Danger Mouse’s clever feint here may just have succeeded in adding a layer of intrigue to a project which, despite its impressive cast list, is not as fascinating as its creators probably hoped. Take Gorillaz’s Demon Days, substitute Sparklehorse’s Mark Linkous for Damon Albarn, and David Lynch’s murky snapshots of sinister smalltown America for Jamie Hewlett’s anarchic cartoons, and you’ve got the basic idea of Dark Night… There are some big-name cameos – Iggy Pop, Frank Black, a surprisingly compelling Suzanne Vega – but it’s questionable whether a collaborative free-for-all was the best way to approach a concept album about loss of faith; dark nights of the soul, by their very nature, usually demand to be suffered in isolation.