Clustering and Cartographic Simplification of Point Data Set

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

UT Newsletter

Managing Director & Editor-in-Chief: Abbas Ghanbari Baghestan (PhD) Compiler(s): Soghra Davarifard, Mansoureh Asbari, and Zohreh Ramezani Doustkouhi Translator: Mona Jafari Translation Supervisor: Dr. Maryam Soltan Beyad Photographer(s): Farshad Zohali, Akbar Pourbagher Moghadam, and Abolfazl Rajabian Graphic Designer(s): Mehraveh Taghizadeh and Mohammad Reza Gharghani Compositor and Typesetter: L. Eskandarpour Publisher: Office of Public Relations, University of Tehran (UT), May 2021 Address: UT Central Administration, 16 Azar St. Tehran, Iran. Tell: 61113417, 66419831, E-mail: [email protected], Website: www.ut.ac.ir/en Preface the constituent parts of a network of science and knowledge repositories comprised of human, The establishment of the University of Tehran in infrastructural, and technological resources. A 1313 (1934 AD), as the successor to Amir Kabir’s survey of the university’s major historical events Dar ul-Funun (1851 AD), represented a watershed since its establishment, an examination of the in early 14th-century Iran (according to the Solar lives and activities of key players in maintaining Hijri calendar). From the outset, the University of the leading position of the University of Tehran in Tehran bore the title of “Iran’s largest Academic the national scientific, research, and technological Institution”, and now, after about 90 years since arenas, and a study of the contexts and factors its establishment, the University of Tehran still making for the powerful and productive presence gleams like a gemstone aloft in the firmament of of the University of Tehran in the regional and Iran’s scientific knowledge, holding such noble international scientific, research, and technological titles as the “First Modern University in Iran”, arenas all serve to substantiate such a claim. -

EURAS-Brochure-2021.Pdf

EURASIAN UNIVERSITIES UNION THE UNION WHERE CONTINENTS COME TOGETHER ACCESS TO A UNIQUE NETWORK OF HIGHER EDUCATION www.euras-edu.org REACH OUT A WIDE NETWORK OUR UNION EURAS – Eurasian Universities Union has been Through getting involved in EURAS you growing the higher education network via Europe can expand your network that might allow and Asia since 2008. The members that are the you to run new partnerships with brilliant major institutions of their countries have been opportunities. Keep yourself informed sharing the knowledge and experiences in order to about higher education in the region by achieve the highest quality. connecting with the prestigious members. EURAS aims to build awareness regarding the value and importance of the Eurasian region as per 50+ countries from various parts of its role in terms of world history and civilization. Europe and Asia This shall lead all the political, economical and social aspects of the Eurasian continent to a perfectionist identity by using the power of education. 120+ universities with steadily increasing number of members and partners VISION Our vision is to promote sustainable peace and advanced technology worldwide through the development of culture and new educational EURAS Members News systems. Our vision for the future is that of a society of self-aware and highlyqualified individuals benefiting from global education and mobility services. EURAS aims to open the borders of EURAS Monthly e-newsletter education to the public and to favor the exchange of knowledge and best practices among higher education institutions from the entire Eurasian region. In order to accomplish these goals, We believe that connecting universities from EURAS Journals ( EJOH, EJEAS, EJOSS), diverse backgrounds can make the dierence in a Multi-disciplinary, peer-reviewed guaranteeing real equality and accessibility to International Journals excellence in educational standards. -

University of Tehran the Symbol of Iranian Higher Education University of Tehran, Dynamic, Ethical and Creative University of Tehran at a Glance

University of Tehran The Symbol of Iranian Higher Education University of Tehran, Dynamic, Ethical and Creative University of Tehran at a Glance • Founded: 1934 (In modern phase and name) • University of Tehran enjoys an old tradition of education dating back to Dar- al-Fonoon about 160 years ago, to the seminaries of 700 years ago, and to Jondishapour in Sassanid period (224- 651 A.D.), • University of Tehran is a comprehensive university offering variety of disciplines. UT in the Horizon of 2020 • University of Tehran has been breaking the scientific frontiers at national and regional levels, trains faithful and learned humans who enjoy the freedom of thought and expression. • UT is a pioneer in developing the inspiring scientific concepts and basic researches, as well as a promoter of developmental and applied research. • UT produces capable graduates and establishes knowledge-based enterprises at world standard to form models of national development based on Islamic values. University of Tehran’s Statistics 1 Basic Statistics 2 Faculty Statistics 3 Student Statistics 4 Graduate Statistics 5 Staff Statistics 6 University Ranking 7 Paper Statistics 8 Library Statistics Basic Statistics: Number of Campuses: 8 • Main campus: •Tehran • Off campuses: • Abouraihan (Pakdasht) • Agriculture and Natural Resources (Alborz Province) • Caspian & Fouman Campus • Farabi (Qom Province) • Alborz International Campus • Aras International Campus • Kish International Campus •Science & Technology Park Number of Colleges: 9 Number of Faculties/Schools: -

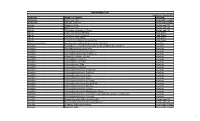

Unai Members List August 2021

UNAI MEMBER LIST Updated 27 August 2021 COUNTRY NAME OF SCHOOL REGION Afghanistan Kateb University Asia and the Pacific Afghanistan Spinghar University Asia and the Pacific Albania Academy of Arts Europe and CIS Albania Epoka University Europe and CIS Albania Polytechnic University of Tirana Europe and CIS Algeria Centre Universitaire d'El Tarf Arab States Algeria Université 8 Mai 1945 Guelma Arab States Algeria Université Ferhat Abbas Arab States Algeria University of Mohamed Boudiaf M’Sila Arab States Antigua and Barbuda American University of Antigua College of Medicine Americas Argentina Facultad de Ciencias Económicas de la Universidad de Buenos Aires Americas Argentina Facultad Regional Buenos Aires Americas Argentina Universidad Abierta Interamericana Americas Argentina Universidad Argentina de la Empresa Americas Argentina Universidad Católica de Salta Americas Argentina Universidad de Congreso Americas Argentina Universidad de La Punta Americas Argentina Universidad del CEMA Americas Argentina Universidad del Salvador Americas Argentina Universidad Nacional de Avellaneda Americas Argentina Universidad Nacional de Cordoba Americas Argentina Universidad Nacional de Cuyo Americas Argentina Universidad Nacional de Jujuy Americas Argentina Universidad Nacional de la Pampa Americas Argentina Universidad Nacional de Mar del Plata Americas Argentina Universidad Nacional de Quilmes Americas Argentina Universidad Nacional de Rosario Americas Argentina Universidad Nacional de Santiago del Estero Americas Argentina Universidad Nacional de -

The IUGG Electronic Journal

UNION GEODESIQUE ET GEOPHYSIQUE INTERNATIONALE INTERNATIONAL UNION OF GEODESY AND GEOPHYSICS The IUGG Electronic Journal Volume 8 No. 8 (1 August 2008) This short, informal newsletter is intended to keep IUGG Member National Committees informed about the activities of the IUGG Associations, and actions of the IUGG Secretariat. Past issues are posted on the IUGG Web site (http://www.iugg.org/publications/ejournals/). Please forward this message to those who will benefit from the information. Your comments are welcome. Contents 1. News from the International Council for Science (ICSU). 2. Fiftieth Anniversary of the Iranian Member Adhering Organization. 3. Report on the IUGG-ESOF Symposium “The Planet Earth”. 4. Report on the 12th International Symposium on Equatorial Aeronomy. 5. World Scientific Publishing offers a book discount to IUGG Members. 6. IUGG-related meetings occurring during August – October 2008. 1. News from the International Council for Science (ICSU) ICSU endorses the International Year of Astronomy (IYA 2009) The Executive Board of ICSU at its last meeting endorsed the International Year of Astronomy 2009. The International Astronomical Union (IAU), a Member of ICSU, launched 2009 as the International Year of Astronomy (IYA2009) under the theme "The Universe, yours to discover". Research and knowledge systems ICSU is represented on the coordinating committee for the UNESCO Forum on Higher Education, Research and Knowledge, which is organising a global research seminar in Paris on 28-30 November, 2008. This will bring together researchers actively involved in studying research and knowledge systems in key fields such as higher education, science and technology, innovation, social sciences, health and agriculture. -

Robert Reza Asaadi Curriculum Vitae September 2019

1 Robert Reza Asaadi Curriculum Vitae September 2019 Department of Political Science Email: [email protected] Mark O. Hatfield School of Government URL: http://www.pdx.edu/hatfieldschool/robert-asaadi Portland State University Urban Center Building 506 SW Mill Street, Suite 650 Portland, OR 97201 ACADEMIC APPOINTMENTS Instructor, Portland State University (2018-2020) Department of Political Science and Department of International & Global Studies Affiliated Faculty Member, Middle East Studies Center (MESC) Adjunct Assistant Professor, Portland State University (2015-2018) Department of Political Science and Department of International & Global Studies Affiliated Faculty Member, Middle East Studies Center (MESC) Adjunct Faculty, Portland Community College (spring-summer 2018) Social Sciences Department Instructor, University of Minnesota (summer 2015) Department of Political Science Teaching Assistant, University of Minnesota (2010-2014) Department of Political Science EDUCATION Ph.D., Political Science (2016), University of Minnesota, Minneapolis, MN Dissertation: Colonies, Clients, and Rogues: Power and the Production of Order in International Politics [http://search.proquest.com/docview/1817634377/] Subfields: International Relations, Comparative Politics Focus: Iranian politics, comparative historical analysis, International Relations theory Committee: Raymond Duvall (Advisor), Martin Sampson III, Kathleen Collins, Ron Aminzade M.A., Political Science (2012), University of Minnesota, Minneapolis, MN B.S., Political Science (2008), summa -

ICSUROAP Annualreport2016

ICSU REGIONAL OFFICE FOR ASIA AND THE PACIFIC 2016 ANNUAL REPORT VISION The long-term ICSU vision is for a world where science is used for the benefit of all, excellence in science is valued and scientific knowledge is effectively linked to policy-making. In such a world, universal and equitable access to high quality scientific data and information is a reality and all countries have the scientific capacity to use these and to contribute to generating the new knowledge that is necessary to establish their own development pathways in a sustainable manner. MISSION ICSU mobilizes knowledge and resources of the international science community for the benefit of society, to: • Identify and address major issues of importance to science and society • Facilitate interaction amongst scientists across all disciplines and from all countries • Promote the participation of all scientists in the international scientific endeavour, regardless of race, citizenship, language, political stance and gender • Provide independent, authoritative advice to stimulate constructive dialogue between the scientific community and governments, civil society and the private sector CONTENTS ICSU VISION AND MISSION 2 MESSAGE FROM THE DIRECTOR 3 MESSAGE FROM THE CHAIR OF THE REGIONAL COMMITTEE 4 EVENTS IN 2016 6 PLANNING, Coordinating AND PROMOTING RESEARCH 11 Health and Wellbeing in the Changing Urban Environment 11 • 1st Meeting of Science Planning Group on Epigenetics 11 11 Natural Hazards and Risk • 5th Meeting of Steering Group on Natural Hazards and Risk 13 • United -

Dr. Ir. Sk. Mustak

Dr. ir. Sk. Mustak Assistant Professor, Department of Geography, School of Environment & Earth Science, Central University of Punjab, Bathinda, Punjab (India) +91 7047680305 [email protected]/[email protected] Education: Degree/ University /Board Year Subject/ Specialization Certificate MS by ITC, University of Twente, Netherlands 2018 Geoinformation Science and Research Earth Observation, Urban and regional planning, Urban Geoinformatics and Geo-intelligence PhD Pt. Ravishankar Shukla University, Raipur 2016 Urban geography, Urban Geoinformatics and Geo-intelligence M.Phil. Pt. Ravishankar Shukla University, Raipur 2008 Agricultural Geography M.A. Pt. Ravishankar Shukla University, Raipur 2007 Geography Experience: Position University/Institution Period Assistant Professor Central University of Punjab, Bathinda, Punjab 2020 to till (India) date Coordinator(Research) Ecoinformatics division, Ashoka Trust for 2018 to Research in Ecology and Environment (ATREE), 2020 Bangalore Research Associate Ecoinformatics lab, Ashoka Trust for Research 2018 to in Ecology and Environment (ATREE), 2018 Bangalore Teaching Assistant School of Studies in Geography, Pt. Ravishankar 2009 to Shukla University, Raipur, Chhattisgarh 2015 Citations of Research Publications: My research publications have been cited 185 times with h-index of 5 and i10-index of 3 (Source: Google Scholar October 6, 2020). Total Impact Factor = 7.58. Google scholar : https://scholar.google.co.in/citations?user=NA1S68UAAAAJ&hl=en Research gate: https://www.researchgate.net/profile/Sk_Mustak -

Elections 2015.Pages

1 ELECTIONS: Thanks for making our Seventh OFFICE OF SECRETARY ! CANDIDATE’S BIOS ! ! CANDIDATE ! OFFICE OF VICE-PRESIDENT Ghazzal Dabiri holds a Ph.D. in Iranian Studies ! in the Department of CANDIDATE Near Eastern Languages ! a n d C u l t u r e s f r o m Rudi Matthee received UCLA. She is currently a his Ph.D. in Islamic post-doctoral fellow at S t u d i e s f r o m t h e Ghent University and has University of California, taught at Columbia Los Angles. Since 1993 University, where she was also Persian Studies he has been teaching at program coordinator, UCLA, and CSUF. Her the University of research focuses on the development of and Delaware, where he is cross-sections between Iranian historiography Distinguished Professor of Middle Eastern and Persian epics as well as on the social History. Matthee has published widely on history of early Islamic Iran. Based on this, she Safavid and Qajar Iran as well as Egypt. He has published the following articles: “Visions authored The Politics of Trade in Safavid of Heaven and Hell from Late Antiquity in the Iran: Silk for Silver, 1600-1730 (1999), Near East” (2009); “The Shahnama: Between recipient of the prize for best non-Persian the Samanids and Ghaznavids” (2010), and language book on Iranian history awarded by “Historiography and the Sho’ubiya Movement” the Iranian Ministry of Culture; honorable (2013). mention for British-Kuwaiti Friendship Prize; The Pursuit of Pleasure: Drugs and ! Other publications include: “The Mother Stimulants in Iranian History, 1500-1900 Tongue: An Introduction to the Persian (2005), recipient of the Albert Hourani Book Language.” PBS Frontline: Tehran Bureau Prize and the Saidi Sirjani Prize; Persia in and ;“Shiraz Nights.” PBS Frontline: Tehran Crisis: Safavid Decline and the Fall of Isfahan Bureau, August 10, 2009. -

1St ICA European Symposium on Cartography

Proceedings of the 1st ICA European Symposium on Cartography Georg Gartner and Haosheng Huang (Editors) Vienna, Austria November 10–12, 2015 Editors Georg Gartner, [email protected] Haosheng Huang, [email protected] Research Group Cartography Vienna University of Technology This document contains the online proceedings of the 1st ICA European Symposium on Cartography (EuroCarto 2015), held on November 10-12, 2015 in Vienna, Austria. The symposium was organized by the Research Group Cartography, Vienna University of Technology, and endorsed by International Cartographic Association (ICA) and Österreichischen Kartographischen Kommission (ÖKK). Type setting of the chapters by the authors, processed by Manuela Schmidt, Haosheng Huang and Wangshu Wang. Think before you print! ISBN 978-1-907075-03-2 © 2015 Research Group Cartography, Vienna University of Technology. The copyright of each paper within the proceedings is with its authors. All rights reserved. ii Table of Contents Section I: Cartographic Modelling and Design Jari Korpi and Paula Ahonen-Rainio Design Guidelines for Pictographic Symbols: Evidence from Symbols Designed by Students ................................. 1 Andrea Nass and Stephan van Gasselt Dynamic Cartography: Map-Animation Concepts for Point Features ................................ 20 Sarah Tauscher and Karl Neumann A Displacement Method for Maps Showing Dense Sets of Points of Interest ......................................................... 22 Jakub Straka, Marta Sojčáková and Róbert Fencík Model of Dynamic Labelling of Populated Places in Slovakia ... 24 Wasim A. M. Shoman and Fatih Gülgen A Research in Cartographic Labeling to Predict the Suitable Amount of Labeling in Multi-Resolution Maps ............. 26 Jana Moser and Sebastian Koslitz Pins or Points? Cartographically Appealing Webmaps and Technical Challenges: the Example of “Landschaften in Deutschland Online” ............................................................................ -

Preface, Committee and Sponsors

Preface Dear Distinguished Delegates and Guests, The Organizing Committee warmly welcomes our distinguished delegates and guests to the 10th edition of the IACSIT/IACT/UASTRO International Conference on Aerospace, Robotics, Manufacturing Systems, Mechanical Engineering and Bioengineering (OPTIROB, ICAEM, ICREB 2015), held on June 27-30, 2015, Jupiter, ROMANIA (www.optirob.com , www.icaem.org, www.icreb.org). The IACSIT/IACT/UASTRO International Conferences are organized by the departments of University POLITEHNICA of Bucharest (Aerospace, Machines and Manufacturing Systems, Mechanical Engineering, Mechanisms and Robotics departments), Romania, under the higher tutelage of the Romanian Academy of Technical Sciences- Mechanical Branch in cooperation with: Academy of Scientists from Romania, University Medicine and Pharmacy of Bucharest, Elias University Emergency Hospital of Bucharest, “Henri Coanda” Air Force Academy, Pro Optica Romanian Society, INCDMTM, Land Forces Academy, Military Equipment and Technologies Research Agency and Solid Mechanics Institute of the Romanian Academy. The conference will be sustained by International Association of Computer Science and Information Technology of Singapore (IACSIT), International Academy of Computer Technology of USA (IACT), International University Association for Science and Technology of Romania (UASTRO) and Science, Human Excellency and University Sports Society (SSEUSU). The main objective of the conference is to bring together leading researchers, engineers and scientists in the fields of interest from Romania and from around the world in order to: provide a platform for researchers, engineers, academicians as well as industrial professionals to present their latest experiences and developments activities in the field of Robotics, Aerospace, Mechanical Engineering, Manufacturing Systems and Bioengineering; provide opportunities for attendees to exchange new ideas and application experiences face to face, to establish business or research relations and to find global partners for future collaboration. -

ICSU-ROAP-Annual-Report-2011

Regional Office for Asia and the Pacific Annual Report 2011 ICSU ROAP Office ICSU Regional Office for Asia & Pacific, 902-4, Jalan Tun Ismail, 50480 Kuala Lumpur, MALAYSIA, Tel : +603 26984192 Fax : +603 26917961 Email: [email protected] Strengthening international science www.icsu.org/asia-pacific for the benefit of society ICSU Regional Office for Asia & the Pacific Annual Report 2011 p.I ICSU Vision Founded in 1931, the International Council for Science (ICSU) is a non-governmental organization The long-term ICSU vision is for a world where science is used for the benet of all, excellence in science representing a global membership that includes both national scientic bodies (120 National Members is valued and scientic knowledge is effectively linked to policy-making. In such a world, universal and representing 140 countries) and International Scientic Unions (30 Members). The ICSU ‘family’ also equitable access to high quality scientic data and information is a reality and all countries have the includes upwards of 20 Interdisciplinary Bodies - international scientic networks established to address scientic capacity to use these and to contribute to generating the new knowledge that is necessary to specic areas of investigation. Through this international network, ICSU coordinates interdisciplinary establish their own development pathways in a sustainable manner. research to address major issues of relevance to both science and society. In addition, the Council actively advocates for freedom in the conduct of science, promotes equitable access to scientic data and information, and facilitates science education and capacity building. Mission ICSU mobilizes knowledge and resources of the international science community for the benet of society, ICSU ROAP to: The ICSU Regional Ofce for Asia and the Pacic (ROAP) was inaugurated on 19 September 2006 by the t JEFOUJGZBOEBEESFTTNBKPSJTTVFTPGJNQPSUBODFUPTDJFODFBOETPDJFUZ then Deputy Prime Minister of Malaysia, Y.A.B.