Introduction to AMD GPU Programming With

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

GLSL 4.50 Spec

The OpenGL® Shading Language Language Version: 4.50 Document Revision: 7 09-May-2017 Editor: John Kessenich, Google Version 1.1 Authors: John Kessenich, Dave Baldwin, Randi Rost Copyright (c) 2008-2017 The Khronos Group Inc. All Rights Reserved. This specification is protected by copyright laws and contains material proprietary to the Khronos Group, Inc. It or any components may not be reproduced, republished, distributed, transmitted, displayed, broadcast, or otherwise exploited in any manner without the express prior written permission of Khronos Group. You may use this specification for implementing the functionality therein, without altering or removing any trademark, copyright or other notice from the specification, but the receipt or possession of this specification does not convey any rights to reproduce, disclose, or distribute its contents, or to manufacture, use, or sell anything that it may describe, in whole or in part. Khronos Group grants express permission to any current Promoter, Contributor or Adopter member of Khronos to copy and redistribute UNMODIFIED versions of this specification in any fashion, provided that NO CHARGE is made for the specification and the latest available update of the specification for any version of the API is used whenever possible. Such distributed specification may be reformatted AS LONG AS the contents of the specification are not changed in any way. The specification may be incorporated into a product that is sold as long as such product includes significant independent work developed by the seller. A link to the current version of this specification on the Khronos Group website should be included whenever possible with specification distributions. -

Implementing FPGA Design with the OpenCL Standard

Implementing FPGA Design with the OpenCL Standard. WP-01173-3.0, White Paper. Utilizing the Khronos Group’s OpenCL™ standard on an FPGA may offer significantly higher performance at much lower power than is available today from hardware architectures such as CPUs, graphics processing units (GPUs), and digital signal processing (DSP) units. In addition, an FPGA-based heterogeneous system (CPU + FPGA) using the OpenCL standard has a significant time-to-market advantage compared to traditional FPGA development using lower level hardware description languages (HDLs) such as Verilog or VHDL. OpenCL and the OpenCL logo are trademarks of Apple Inc. used by permission by Khronos. Introduction: The initial era of programmable technologies contained two different extremes of programmability. As illustrated in Figure 1, one extreme was represented by single core CPU and digital signal processing (DSP) units. These devices were programmable using software consisting of a list of instructions to be executed. These instructions were created in a manner that was conceptually sequential to the programmer, although an advanced processor could reorder instructions to extract instruction-level parallelism from these sequential programs at run time. In contrast, the other extreme of programmable technology was represented by the FPGA. These devices are programmed by creating configurable hardware circuits, which execute completely in parallel. A designer using an FPGA is essentially creating a massively fine-grained parallel application. For many years, these extremes coexisted with each type of programmability being applied to different application domains. However, recent trends in technology scaling have favored technologies that are both programmable and parallel. Figure 1.

The AMD Linux Graphics Stack – 2018 Edition, Nicolai Hähnle, FOSDEM 2018

The AMD Linux Graphics Stack – 2018 Edition. Nicolai Hähnle, FOSDEM 2018. Slide footer: February 2018 | AMD Linux Graphics Stack | Confidential. Graphics stack, one diagram shown in four views: kernel / user-space / X server; open-source / closed-source; support for GCN / pre-GCN hardware; phasing out “legacy” components. [Diagram labels: Mesa OpenGL & Multimedia (radeonsi, r600); Vulkan (radv, AMDVLK); OpenGL Pro/Workstation; X Server (radeon, amdgpu); LLVM; SCPC; libdrm (radeon, amdgpu).] Notes on the GCN view: roughly, GCN = new GPUs of the last 5 years; (*) LLVM has pre-GCN support only for compute. Major milestones of 2017: upstreaming the DC display driver; open-sourcing the AMDVLK Vulkan driver; unified driver delivery; OpenGL 4.5 conformance in the open-source Mesa driver; zero-day open-source support for new hardware. Kernel: amdgpu and radeon hardware support. Hardware generations listed against the radeon and amdgpu drivers: Pre-GCN; GCN 1st gen (Southern Islands, SI, gfx6); GCN 2nd gen (Sea Islands, CI(K), gfx7); GCN 3rd gen (Volcanic Islands, VI, gfx8); GCN 4th gen (Polaris, RX 4xx, RX 5xx); GCN 5th gen (RX Vega, Ryzen Mobile, gfx9). Kernel: amdgpu vs.

Khronos Template 2015

Ecosystem Overview. Neil Trevett | Khronos President, NVIDIA Vice President Developer Ecosystem. [email protected] | @neilt3d. © Copyright Khronos Group 2016. Khronos Mission (software, silicon): Khronos is an industry consortium of over 100 companies creating royalty-free, open standard APIs to enable software to access hardware acceleration for graphics, parallel compute and vision. http://accelerateyourworld.org/ Vision Pipeline Challenges and Opportunities: Growing Camera Diversity (flexible sensor and camera control to GENERATE an image stream); Diverse Vision Processors (use efficient acceleration to PROCESS the image stream); Sensor Proliferation (combine vision output with other sensor data on device). OpenVX – Low Power Vision Acceleration: • Higher level abstraction API - Targeted at real-time mobile and embedded platforms • Performance portability across diverse architectures - Multi-core CPUs, GPUs, DSPs and DSP arrays, ISPs, Dedicated hardware… • Extends portable vision acceleration to very low power domains - Doesn’t require high-power CPU/GPU Complex - Lower precision requirements than OpenCL - Low-power host can setup and manage frame-rate graph. [Figure: power efficiency (X1, X10, X100) versus computation flexibility; labels: CPU, Multi-core, GPU Compute, Vision DSPs, Dedicated Hardware, Accelerator, Vision Processing, Vision Engine, Middleware, Application.] OpenVX Graphs

Khronos Native Platform Graphics Interface (EGL Version 1.4 - April 6, 2011)

Khronos Native Platform Graphics Interface (EGL Version 1.4 - April 6, 2011) Editor: Jon Leech. Copyright (c) 2002-2011 The Khronos Group Inc. All Rights Reserved. This specification is protected by copyright laws and contains material proprietary to the Khronos Group, Inc. It or any components may not be reproduced, republished, distributed, transmitted, displayed, broadcast or otherwise exploited in any manner without the express prior written permission of Khronos Group. You may use this specification for implementing the functionality therein, without altering or removing any trademark, copyright or other notice from the specification, but the receipt or possession of this specification does not convey any rights to reproduce, disclose, or distribute its contents, or to manufacture, use, or sell anything that it may describe, in whole or in part. Khronos Group grants express permission to any current Promoter, Contributor or Adopter member of Khronos to copy and redistribute UNMODIFIED versions of this specification in any fashion, provided that NO CHARGE is made for the specification and the latest available update of the specification for any version of the API is used whenever possible. Such distributed specification may be reformatted AS LONG AS the contents of the specification are not changed in any way. The specification may be incorporated into a product that is sold as long as such product includes significant independent work developed by the seller. A link to the current version of this specification on the Khronos Group web-site should be included whenever possible with specification distributions. Khronos Group makes no, and expressly disclaims any, representations or warranties, express or implied, regarding this specification, including, without limitation, any implied warranties of merchantability or fitness for a particular purpose or non-infringement of any intellectual property.

The OpenVX™ Specification

The OpenVX™ Specification Version 1.0.1 Document Revision: r31169 Generated on Wed May 13 2015 08:41:43 Khronos Vision Working Group Editor: Susheel Gautam Editor: Erik Rainey Copyright ©2014 The Khronos Group Inc. All Rights Reserved. This specification is protected by copyright laws and contains material proprietary to the Khronos Group, Inc. It or any components may not be reproduced, republished, distributed, transmitted, displayed, broadcast or otherwise exploited in any manner without the express prior written permission of Khronos Group. You may use this specification for implementing the functionality therein, without altering or removing any trademark, copyright or other notice from the specification, but the receipt or possession of this specification does not convey any rights to reproduce, disclose, or distribute its contents, or to manufacture, use, or sell anything that it may describe, in whole or in part. Khronos Group grants express permission to any current Promoter, Contributor or Adopter member of Khronos to copy and redistribute UNMODIFIED versions of this specification in any fashion, provided that NO CHARGE is made for the specification and the latest available update of the specification for any version of the API is used whenever possible. Such distributed specification may be re-formatted AS LONG AS the contents of the specification are not changed in any way. The specification may be incorporated into a product that is sold as long as such product includes significant independent work developed by the seller. A link to the current version of this specification on the Khronos Group web-site should be included whenever possible with specification distributions.

SYCL – Introduction and Hands-On

SYCL – Introduction and Hands-on. Thomas Applencourt + people on next slide - [email protected]. Argonne Leadership Computing Facility, Argonne National Laboratory, 9700 S. Cass Ave, Argonne, IL 60439. Book Keeping: Get the example and the presentation: `git clone https://github.com/alcf-perfengr/sycltrain`, then `cd sycltrain/presentation/2020_07_30_ATPESC`. People who are here to help: • Collen Bertoni - [email protected] • Brian Homerding - [email protected] • Nevin ”:)” Liber - [email protected] • Ben Odom - [email protected] • Dan Petre - [email protected]. Table of contents: 1. Introduction 2. Theory 3. Hands-on 4. Conclusion. Introduction: What programming model to use to target GPUs? • OpenMP (pragma based) • CUDA (proprietary) • HIP (low level) • OpenCL (low level) • Kokkos, RAJA, OCCA (high level, abstraction layer, academic projects). What is SYCL™? (Footnote: SYCL doesn’t mean ”Someone You Couldn’t Love”. Sadly.) 1. Targets C++ programmers (template, lambda) 1.1 No language extension 1.2 No pragmas 1.3 No attribute 2. Borrows a lot of concepts from battle-tested OpenCL (platform, device, work-group, range) 3. Single source (two compilation passes) 4. High-level data transfer 5. SYCL is a specification developed by the Khronos Group (OpenCL, SPIR, Vulkan, OpenGL). SYCL Implementations (credit: Khronos Group, https://www.khronos.org/sycl/). Goal of this presentation: 1. Give you a feel for SYCL 2. Go through code examples (and make you do some homework) 3. Teach you enough so that you can search for the rest if you are interested 4. Questions are welcome! (Please just talk, or use Slack.) Theory: A picture is worth a thousand words (and this is a UML diagram, so maybe more!). Memory management: SYCL innovation 1.

OpenCL on The

Introduction to OpenCL. Cliff Woolley, NVIDIA Developer Technology Group. Welcome to the OpenCL Tutorial! Agenda: OpenCL Platform Model; OpenCL Execution Model; Mapping the Execution Model onto the Platform Model; Introduction to OpenCL Programming; Additional Information and Resources. OpenCL is a trademark of Apple, Inc. Design Goals of OpenCL: use all computational resources in the system (CPUs, GPUs and other processors as peers); efficient parallel programming model (based on C99; data- and task-parallel computational model; abstract the specifics of underlying hardware; specify accuracy of floating-point computations); Desktop and Handheld Profiles. © Copyright Khronos Group, 2010. OpenCL Platform Model. It’s a Heterogeneous World: a modern platform includes one or more CPUs, one or more GPUs, and optional accelerators (e.g., DSPs). [Diagram: CPU, CPU, GPU, GMCH, DRAM, ICH; GMCH = graphics memory control hub, ICH = input/output control hub.] OpenCL Platform Model, Computational Resources: [Diagram labels: Host, Compute Device, Compute Unit, Processing Element.] OpenCL Platform Model on CUDA Compute Architecture: [Diagram: Host = CPU; Compute Device = CUDA-Enabled GPU; Compute Unit = CUDA Streaming Multiprocessor; Processing Element = CUDA Streaming Processor.] Anatomy of an OpenCL Application: an OpenCL application consists of host code (written in C/C++, executes on the host) and device code (written in OpenCL C, executes on the device). Host code sends commands to the devices: to transfer data between host memory and device memories, and to execute device code. Serial code executes in a Host (CPU) thread. Parallel code executes in many Device (GPU) threads across multiple processing elements. [Diagram: an OpenCL application alternating between serial code on the host (CPU) and parallel code on the device (GPU).] …

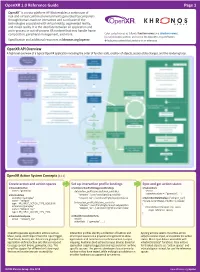

OpenXR 1.0 Reference Guide Page 1

OpenXR 1.0 Reference Guide Page 1 OpenXR™ is a cross-platform API that enables a continuum of real-and-virtual combined environments generated by computers through human-machine interaction and is inclusive of the technologies associated with virtual reality, augmented reality, and mixed reality. It is the interface between an application and an in-process or out-of-process XR runtime that may handle frame composition, peripheral management, and more. Color-coded names as follows: function names and structure names. [n.n.n] Indicates sections and text in the OpenXR 1.0 specification. Specification and additional resources at khronos.org/openxr £ Indicates content that pertains to an extension. OpenXR API Overview A high level overview of a typical OpenXR application including the order of function calls, creation of objects, session state changes, and the rendering loop. OpenXR Action System Concepts [11.1] Create action and action spaces Set up interaction profile bindings Sync and get action states xrCreateActionSet xrSetInteractionProfileSuggestedBindings xrSyncActions name = "gameplay" /interaction_profiles/oculus/touch_controller session activeActionSets = { "gameplay", ...} xrCreateAction "teleport": /user/hand/right/input/a/click actionSet="gameplay" "teleport_ray": /user/hand/right/input/aim/pose xrGetActionStateBoolean ("teleport_ray") name = “teleport” if (state.currentState) // button is pressed /interaction_profiles/htc/vive_controller type = XR_INPUT_ACTION_TYPE_BOOLEAN { actionSet="gameplay" "teleport": /user/hand/right/input/trackpad/click -

AMD Linux Driver 2021.10 Release Notes

[AMD Official Use Only - Internal Distribution Only] AMD Linux Driver 2021.10 Release Notes. 1. Overview: AMD’s Linux® Driver includes the open source graphics driver for AMD’s embedded platforms and other peripheral devices on selected development platforms. New features supported in this release: 1. New LTS kernel 5.10.5. 2. Bug fixes and driver updates. 2. Linux® Kernel Support: 1. 5.10.5 LTS. 3. Linux Distribution Support: 1. Ubuntu 20.04.1. 4. Component Versions: The following table shows git commit details of the sources and binaries used in the package. The patches present in the patches folder of this release package have to be applied on top of the git commits listed in the table below to get the full sources corresponding to this driver release. The sources directory in this package contains patches pre-applied to these commit IDs.

| Component Name | Version | Commit ID | Source Link for git clone |
| --- | --- | --- | --- |
| Kernel | 5.10.5 | f5247949c0a9304ae43a895f29216a9d876f3919 | https://git.kernel.org/pub/scm/linux/kernel/git/stable/linux.git |
| Libdrm | 2.4.103 | 5dea8f56ee620e9a3ace34a99ebf0175efb57b11 | https://github.com/freedesktop/mesa-drm.git |
| Mesa | 21.1.0-dev | 38f012e0238f145f4c83bf7abf59afceee333397 | https://github.com/mesa3d/mesa.git |
| Ddx | 19.1.0 | 6234a1b2652f469071c0c9b0d8b0f4a8079efe74 | https://github.com/freedesktop/xorg-xf86-video-amdgpu.git |
| Gstomx | 1.0.0.1 | 5c4bff4a433dff1c5d005edfceaf727b6214bb74 | git://people.freedesktop.org/~leoliu/gstomx |
| Wayland | 1.15.0 | ea09c2fde7fcfc7e24a19ae5c5977981e9bef… | … |

The OpenGL ES Shading Language

The OpenGL ES® Shading Language Language Version: 3.20 Document Revision: 12 246 JuneAugust 2015 Editor: Robert J. Simpson, Qualcomm OpenGL GLSL editor: John Kessenich, LunarG GLSL version 1.1 Authors: John Kessenich, Dave Baldwin, Randi Rost 1 Copyright (c) 2013-2015 The Khronos Group Inc. All Rights Reserved. This specification is protected by copyright laws and contains material proprietary to the Khronos Group, Inc. It or any components may not be reproduced, republished, distributed, transmitted, displayed, broadcast, or otherwise exploited in any manner without the express prior written permission of Khronos Group. You may use this specification for implementing the functionality therein, without altering or removing any trademark, copyright or other notice from the specification, but the receipt or possession of this specification does not convey any rights to reproduce, disclose, or distribute its contents, or to manufacture, use, or sell anything that it may describe, in whole or in part. Khronos Group grants express permission to any current Promoter, Contributor or Adopter member of Khronos to copy and redistribute UNMODIFIED versions of this specification in any fashion, provided that NO CHARGE is made for the specification and the latest available update of the specification for any version of the API is used whenever possible. Such distributed specification may be reformatted AS LONG AS the contents of the specification are not changed in any way. The specification may be incorporated into a product that is sold as long as such product includes significant independent work developed by the seller. A link to the current version of this specification on the Khronos Group website should be included whenever possible with specification distributions. -

Pny-Nvidia-Quadro-P2200.Pdf

UNMATCHED POWER. UNMATCHED CREATIVE FREEDOM. NVIDIA® QUADRO® P2200. Power and Performance in a Compact Form Factor. The Quadro P2200 is the perfect balance of performance, compelling features, and compact form factor delivering incredible creative experience and productivity across a variety of professional 3D applications. It features a Pascal GPU with 1280 CUDA cores, large 5 GB GDDR5X on-board memory, and the power to drive up to four 5K (5120x2880 @ 60Hz) displays natively. Accelerate product development and content creation workflows with a GPU that delivers the fluid interactivity you need to work with large scenes and models. Quadro cards are certified with a broad range of sophisticated professional applications, tested by leading workstation manufacturers, and backed by a global team of support specialists. This gives you the peace of mind to focus on doing your best work. Whether you’re developing revolutionary products or telling spectacularly vivid visual stories, Quadro gives you the performance to do it brilliantly. FEATURES: > Four DisplayPort 1.4 Connectors (footnote 1) > DisplayPort with Audio > NVIDIA nView™ Desktop Management Software > HDCP 2.2 Support > NVIDIA Mosaic (footnote 2) > NVIDIA Iray and MentalRay Support. PACKAGE CONTENTS: > NVIDIA Quadro P2200 Professional Graphics board. WARRANTY AND SUPPORT: > 3-Year Warranty > Pre- and Post-Sales Technical Support. PNY PART NUMBER: VCQP2200-SB. SPECIFICATIONS: GPU Memory: 5 GB GDDR5X; Memory Interface: 160-bit; Memory Bandwidth: Up to 200 GB/s; NVIDIA CUDA® Cores: 1280; System Interface: PCI Express 3.0 x16; Max Power Consumption: 75 W; Thermal Solution: Active; Form Factor: 4.4” H x 7.9” L, Single Slot; Display Connectors: 4x DP 1.4.