MIMBCD-UI Information Systems and Computer Engineering

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


Investigating the Practical Use of Computational Fluid Dynamics Simulation of Airflow in the Nasal Cavity and Paranasal Sinuses NOSE Version 1.0 2018-08-30

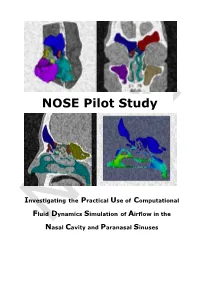

NOSE Pilot Study: Investigating the Practical Use of Computational Fluid Dynamics Simulation of Airflow in the Nasal Cavity and Paranasal Sinuses. Version 1.0, 2018-08-30.

Project Number: 1000043433
Project Title: NOSE Pilot Study
Document Reference Title: Investigating the Practical Use of Computational Fluid Dynamics Simulation of Airflow in the Nasal Cavity and Paranasal Sinuses
Date: 2018-08-30
Document Name: NOSE_PS_FINAL_v1
Version: Draft, draft final
Restrictions: public, internal, restricted
Distribution: Steirische Forschungsförderung
Authors: Koch Walter, Koch Gerda, Vitiello Massimo, Ortiz Ramiro, Stockklauser Jutta, Benda Odo

Abstract: The NOSE Pilot study evaluated the technical and scientific environment required for establishing a service portfolio that includes CFD simulation and 3D visualization services for ENT specialists. For this purpose the state of the art of these technologies and their use for upper airways diagnostics were analysed.

Keywords: Rhinology, Computational Fluid Dynamics, 3D Visualization, Clinical Pathways, Service Center, Knowledge Base

Document Revisions: Version 1.0, 2018-08-30, Koch Walter, Koch Gerda, Vitiello Massimo, Ortiz Ramiro, Stockklauser Jutta, Benda Odo: Final Version.

Copyright © AIT ForschungsgesmbH

Table of Contents: Acknowledgments

Evaluation of DICOM Viewer Software for Workflow Integration in Clinical Trials

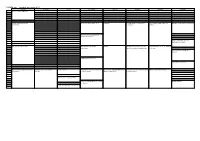

Evaluation of DICOM Viewer Software for Workflow Integration in Clinical Trials. Daniel Haak, Charles-E. Page, Klaus Kabino, Thomas M. Deserno. Department of Medical Informatics, Uniklinik RWTH Aachen, 52057 Aachen, Germany.

ABSTRACT: The digital imaging and communications in medicine (DICOM) protocol is nowadays the leading standard for capture, exchange and storage of image data in medical applications. A broad range of commercial, free, and open source software tools supporting a variety of DICOM functionality exists. However, unlike in patient care in hospitals, DICOM has not yet arrived in electronic data capture systems (EDCS) for clinical trials. Due to missing integration, even just the visualization of a patient's image data in electronic case report forms (eCRFs) is impossible. Four increasing levels for integration of DICOM components into EDCS are conceivable, raising functionality but also demands on interfaces with each level. Hence, in this paper, a comprehensive evaluation of 27 DICOM viewer software projects is performed, investigating viewing functionality as well as interfaces for integration. Concerning general, integration, and viewing requirements, the survey involves the criteria (i) license, (ii) support, (iii) platform, (iv) interfaces, (v) two-dimensional (2D) and (vi) three-dimensional (3D) image viewing functionality. Optimal viewers are suggested for applications in clinical trials for 3D imaging, hospital communication, and workflow. Focusing on open source solutions, the viewers ImageJ and MicroView are superior for 3D visualization, whereas GingkoCADx is advantageous for hospital integration. Concerning workflow optimization in multi-centered clinical trials, we suggest the open source viewer Weasis. Covering most use cases, an EDCS and PACS interconnection with Weasis is suggested.

Impacting the Bioscience Progress by Backporting Software for Bio-Linux

Impacting the bioscience progress by backporting software for Bio-Linux. Sasa Paporovic, v0.9.

What is Bio-Linux, what is it good for, and what are its drawbacks? When someone says they use or have "a Linux", this is correct but imprecise. Linux does not exist as a fully functional operating system by itself. The term Linux originally meant the operating system core [1], the so-called kernel, or, in the case of a Linux operating system, the Linux kernel. It was originally designed and programmed by Linus Torvalds, who is still the chief developer today or, in his own words, the "alpha-male" of all developers [2]. What we have today are distributions [3], and it has become common to call them simply "a Linux". Behind them are organizations, mostly private, some funded and some commercial, that gather everything needed to build a fully functional operating system around the Linux kernel. This mostly concerns software, but also web and service infrastructure. Some have a history nearly as long as that of the Linux kernel itself, like Debian. Others are younger, like Ubuntu, and some are very young, like Bio-Linux [4]. This last one, Bio-Linux, and especially its latest version, Bio-Linux 7, is our focus here [5]. Bio-Linux originated in 2006 through the work of Tim Booth [42] and his team [6]; it provided, and still provides, an operating system specialized in offering a bioinformatics-specific software environment for the working needs of this corner of bioscience.

A Case Study from the OpenMRS Open-Source Radiology Information System

Journal of Digital Imaging (2018) 31:361–370. https://doi.org/10.1007/s10278-018-0088-5

A Platform for Innovation and Standards Evaluation: a Case Study from the OpenMRS Open-Source Radiology Information System. Judy W. Gichoya, Marc Kohli, Larry Ivange, Teri S. Schmidt, Saptarshi Purkayastha. Published online: 10 May 2018. © The Author(s) 2018.

Abstract: Open-source development can provide a platform for innovation by seeking feedback from community members as well as providing tools and infrastructure to test new standards. Vendors of proprietary systems may delay adoption of new standards until there are sufficient incentives such as legal mandates or financial incentives to encourage/mandate adoption. Moreover, open-source systems in healthcare have been widely adopted in low- and middle-income countries and can be used to bridge gaps that exist in global health radiology. Since 2011, the authors, along with a community of open-source contributors, have worked on developing an open-source radiology information system (RIS) across two communities, OpenMRS and LibreHealth. The main purpose of the RIS is to implement core radiology workflows, on which others can build and test new radiology standards. This work has resulted in three major releases of the system, with current architectural changes driven by changing technology, development of new standards in health and imaging informatics, and changing user needs. At their core, both these communities are focused on building general-purpose EHR systems, but based on user contributions from the fringes, we have been able to create an innovative system that has been used by hospitals and clinics in four different countries.

FOSDEM 2013 Schedule

FOSDEM 2013 schedule grid, Saturday 2013-02-02 (sheets 1/9 and 2/9), time slots from 10:30 to 13:45.

Rooms: Janson, K.1.105, Ferrer, Chavanne, Lameere, H.1301, H.1302, H.1308, H.1309, H.2213, H.2214, AW1.120, AW1.121, AW1.125, AW1.126, Guillissen.

Talks listed include: Welcome to FOSDEM 2013 (10:30); QEMU USB status report 2012; Wayland for Application Developers; CRIU: Checkpoint and Restore (mostly) In Userspace; Ubuntu Online Accounts for application developers; The Devil is in the Details; Better software through user research; Bringing Xen to CentOS-6; Sketching interactions; Porting Fedora to 64-bit ARM; Spoiling and Counter-spoiling; oVirt Live Storage Migration - Under the Hood; Modern CMake; ZONE: towards a better news feed; Metaphor and BDD; XMPP 101.

And Contribute to Debian Med

Stay home ... and contribute to Debian Med. Andreas Tille (Debian). Online, 29 August 2020.

Outline: 1 What is Debian Med? 2 COVID-19 hackathons 3 Single contributions to the first hackathon 4 Summary

Back in 2001 at DebConf 1, the first talk about the concept was prepared.

Debian

Role of Blends to attract specific users

Med-bio task of Debian Med

Packages in selected tasks of Debian Med (chart, 2002 to 2020; package counts from 0 to 800; tasks: imaging-dev, imaging, his, epi, bio-dev)

Orthanc: Free Ecosystem for Medical Imaging

Free ecosystem for medical imaging

Worldwide explosion of medical images. Belgium (2013): 33 million imaging studies for 11 million people (CT + MRI + PET-CT). Reason: multimodal and longitudinal imaging (oncology, cardiovascular diseases, surgery, neurology...).

The hype: AI for medical imaging. The reality: It's still Jurassic Park!

Sharing images (basic need) is painful: inside hospitals, hospitals to patients, between hospitals, among skilled workers.

Some terminology: Imaging flows for radiology. Everything is driven by software through the DICOM standard!

The real-world difficulties

Pain 1: Interoperability and lock-in
Good news: a single worldwide standard in open access!
• The DICOM standard is very complex, both for users and developers.
• Many specialized vendors, with costly, proprietary and monolithic ecosystems ⇒ high risk of lock-in, little agility.
• Interoperability is checked in "Connectathons" where vendors meet (N² complexity) ⇒ no reference implementation.
• Not every PACS comes with teleradiology (remote expertise) ⇒ need to combine vendors.
• Little IT expertise in hospitals about imaging ⇒ need to share knowledge.
Heterogeneous modalities ▶ very problematic in emerging economies!

Pain 2: The PACS is focused on radiology
• Hard to retrieve and access the raw DICOM files.
• The PACS must be interfaced with specialized software (nuclear medicine, radiotherapy, dentistry, neurosurgery…).
• Multitude of files, as images are split slice-by-slice (one typical 3D image =

PDF Download

© 2017 IMIA and Schattauer GmbH

The Role of Free/Libre and Open Source Software in Learning Health Systems. C. Paton (Group Head for Global Health Informatics, Centre for Tropical Medicine and Global Health, University of Oxford, UK), T. Karopka (Chair of IMIA OS WG, Chair of EFMI LIFOSS WG, Project Manager, BioCon Valley GmbH, Greifswald, Germany).

Summary
Objective: To give an overview of the role of Free/Libre and Open Source Software (FLOSS) in the context of secondary use of patient data to enable Learning Health Systems (LHSs).
Methods: We conducted an environmental scan of the academic and grey literature utilising the MedFLOSS database of open source systems in healthcare to inform a discussion of the role of open source in developing LHSs that reuse patient data for research and quality improvement.
Results: A wide range of FLOSS is identified that contributes to the information technology (IT) infrastructure of LHSs including ...

Introduction
As more patient data are collected electronically through Electronic Health Records (EHRs) and other information systems used in healthcare organisations, the opportunity to reuse the data these systems collect for research and quality improvement becomes more apparent. There is now a wide range of large-scale research projects [1, 2] that are reusing routinely collected data for analysis and the concept of a Learning Health System ...

... LHSs, examining the academic literature, the MedFLOSS database that catalogues FLOSS in use in healthcare [6], and the grey literature from websites, reports, and personal communications with experts in the area of FLOSS adoption in healthcare. Although some EHRs and research systems are fully open source, such as the Veterans Information Systems and Technology Architecture (VistA) [7], modern healthcare infrastructure is often a combination of open source and proprietary systems.

Master Thesis: A GNU Health Monitoring Module

MASTER THESIS. Thesis submitted in partial fulfillment of the requirements for the degree of Master of Science in Engineering at the University of Applied Sciences Technikum Wien, Degree Program Biomedical Engineering Sciences.

A GNU Health Monitoring Module. By: Barbora Veselá, BSc. Student Number: 51867978. Supervisors: FH-Prof. Dipl-Ing. Dr. Stefan Sauermann, Dipl-Ing. Dr. Johannes Kropf. Vienna, May 20, 2019.

Abstract: This thesis focuses on the development of a GNU Health module for electrocardiogram monitoring and the development of an application providing a fundamental electrocardiogram analysis. The theoretical part contains a brief introduction to hospital information systems, including electronic patient records and healthcare data standards, followed by a description of the GNU Health application and the implementation of the electrocardiogram analysis, written in the Python programming language. The practical part deals with the development of the GNU Health Monitoring module and the external application for signal analysis. The results, discussion and conclusion follow.

Keywords: Hospital Information System, GNU Health, Tryton, Monitoring Module, Python ECG Analysis

Kurzfassung (German abstract, translated): This thesis deals with the development of a GNU Health module for electrocardiogram monitoring and the development of an application that provides a basic electrocardiogram analysis. The theoretical part contains a brief introduction to hospital information systems, including information on patient records and

Orthanc – a Lightweight, RESTful DICOM Server for Healthcare and Medical Research

ORTHANC – A LIGHTWEIGHT, RESTFUL DICOM SERVER FOR HEALTHCARE AND MEDICAL RESEARCH. S. Jodogne, C. Bernard, M. Devillers, E. Lenaerts and P. Coucke. Department of Medical Physics, University Hospital of Liège, 4000 Liège, Belgium.

ABSTRACT: In this paper, the Orthanc open-source software is introduced. Orthanc is a lightweight, yet powerful standalone DICOM store for healthcare and medical research. Multiple instances of Orthanc can easily be deployed in the hospital network or even in the same computer, which simplifies the interconnection between the DICOM modalities and the data management of medical images. Orthanc is unique with respect to the fact that it provides a modern RESTful API: Orthanc can be driven from any computer language to automate clinical processes. Finally, Orthanc comes bundled with an embedded Web interface that allows the end-users to browse and interact with the content of the DICOM store.

The DICOM protocol was initially developed with a point-to-point approach in mind. In practice, this means that any change to the DICOM topology of the hospital (such as the adding, the removal or the renaming of one DICOM modality) impacts all the DICOM modalities that are connected to the updated modality. This makes the computer network of the hospital tedious to manage, since the various DICOM modalities are typically supported by separate private companies: It is difficult to synchronize even a single intervention. For this reason, the DICOM topology is often implemented as a star-shaped network: The PACS (Picture Archiving and Communication System) of the hospital serves as a central hub that dispatches the images between the various
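The abstract's remark that Orthanc "can be driven from any computer language" through its RESTful API can be illustrated with a minimal Python sketch. It assumes an Orthanc server reachable on its default HTTP port 8042 without authentication, and it only uses the `GET /patients` route, which returns a JSON list of Orthanc patient identifiers. The helper names (`resource_url`, `get_json`, `list_patients`) are hypothetical and not part of Orthanc or of the paper.

```python
import json
import urllib.request

# Assumption: a local Orthanc server on its default HTTP port, no authentication.
ORTHANC_URL = "http://localhost:8042"


def resource_url(base_url, *parts):
    """Join a base URL and path segments into a REST resource URL."""
    return "/".join([base_url.rstrip("/"), *parts])


def get_json(url, timeout=5.0):
    """Issue an HTTP GET and decode the JSON body of the response."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def list_patients(base_url=ORTHANC_URL, fetch=get_json):
    """Return the Orthanc identifiers of all stored patients (GET /patients).

    `fetch` is injectable (a hypothetical convenience) so the function can be
    exercised without a running server.
    """
    return fetch(resource_url(base_url, "patients"))
```

With a server running, `list_patients()` would return whatever identifiers that server holds; a deployment with authentication enabled would additionally need credentials on the request, which this sketch leaves out.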

List of Open-Source Health Software

List of open-source health software. From Wikipedia, the free encyclopedia (retrieved 10/05/2017).

This is an old revision of this page, as edited by G-eight at 13:04, 22 December 2015 (Added Bahmni as one of the available Open Source hospital systems with links to its website, and to referenced pages from OpenMRS.). The present address (URL) is a permanent link to this revision, which may differ significantly from the current revision (https://en.wikipedia.org/wiki/List_of_open-source_health_software).

The following is a list of software packages and applications licensed under an open-source license or in the public domain for use in the health care industry.

Contents
1 Public health and biosurveillance
2 Dental management and patient record
3 Electronic health or medical record
4 Medical practice management software
5 Health system management
6 Logistics & Supply Chain
7 Imaging/visualization
8 Medical information systems
9 Research
10 Mobile devices
11 Out-of-the-box distributions
12 Interoperability testing
13 See also
14 References

Public health and biosurveillance
Epi Info is public domain statistical software for epidemiology developed by Centers for Disease Control and Prevention.
Spatiotemporal Epidemiological Modeler is a tool, originally developed at IBM Research, for modeling and visualizing the spread of infectious diseases.

Dental management and patient record
Open Dental is the first open-source dental management package with very broad capabilities on record management, patient scheduling and dental office management.

Electronic health or medical record
Bahmni (http://www.bahmni.org) is an open source hospital system designed for low resource settings.

Bioinformatik Und Medizinische Bildverarbeitung

Bioinformatics and Medical Image Processing: Solving tasks in bioinformatics and medical image processing with Debian Med. Andreas Tille, Debian. Chemnitz, 12 March 2017.

Outline: 1 History 2 Debian Pure Blends 3 Testing for scientific use 4 Support 5 Bioinformatics 6 Medical image processing 7 Outlook

Bordeaux 2001, DebConf 1: preparing the first talk about Debian Med.

Lesson from DebConf 15, Heidelberg:
I: After 15 years people are starting to use what I'm working on.
John: Only 15 years? You young guys should be more patient. I should hurry up since after 15 years I might be dead but you have so much time.
Image by Olaf Kosinsky, CC BY-SA 3.0