Artificial Intelligence and Robotics

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Current Advances in Cognitive Robotics Amir Aly, Sascha Griffiths, Francesca Stramandinoli

Towards Intelligent Social Robots: Current Advances in Cognitive Robotics. Amir Aly, Sascha Griffiths, Francesca Stramandinoli. To cite this version: Amir Aly, Sascha Griffiths, Francesca Stramandinoli. Towards Intelligent Social Robots: Current Advances in Cognitive Robotics. France. 2015. hal-01673866. HAL Id: hal-01673866, https://hal.archives-ouvertes.fr/hal-01673866. Submitted on 1 Jan 2018. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.
Proceedings of the Full Day Workshop "Towards Intelligent Social Robots: Current Advances in Cognitive Robotics", in conjunction with Humanoids 2015, South Korea, November 3, 2015. Amir Aly (1), Sascha Griffiths (2), Francesca Stramandinoli (3). 1: ENSTA ParisTech, France. 2: Queen Mary University, England. 3: Italian Institute of Technology, Italy.
Towards Emerging Multimodal Cognitive Representations from Neural Self-Organization. German I. Parisi, Cornelius Weber and Stefan Wermter, Knowledge Technology Institute, Department of Informatics, University of Hamburg, Germany. fparisi,weber,[email protected] http://www.informatik.uni-hamburg.de/WTM/
Abstract: The integration of multisensory information plays a crucial role in autonomous robotics. In this work, we investigate how robust multimodal representations can naturally develop in a self-organized manner from co-occurring multisensory inputs.
… processing of a huge amount of visual information to learn inherent spatiotemporal dependencies in the data. To tackle this issue, learning-based mechanisms have been typically used …

2017 Colorado Tech

BizWest | 2017 Colorado TECH | $100
CHEERS, CHALLENGES FOR COLORADO’S TECH INDUSTRY
• Colorado companies help to propel fledgling drone industry forward
• UCAR, NREL to co-anchor The Innovation Corridor at WTC Denver
• Startup creating platform for patents, crowdfunding, idea protection
• Symmetry Storage plans to grow its app for storage solutions ‘city by city’
• Two-man company in Fort Collins innovating backup cameras for autos
The Directory: info on 2,400+ Colorado Tech Firms. Presented by: BizWest
LET’S GET DOWN TO BUSINESS. CenturyLink products and services are designed to help you with your changing business needs, so you can focus on growing your business. Now that’s helpful, seriously.
GO FAST WITH FIBER: Stay productive with Fiber Internet’s upload and download speeds up to 1 Gig. (Some speeds may not be available in your area.)
BE MORE EFFICIENT WITH MANAGED OFFICE: Spend less time managing your technology and more on your business.
STAY CONNECTED WITH HOSTED VOIP: Automatically reroute calls from your desk phone to any phone you want.
GET PREDICTABLE PRICING WITH A BUSINESS BUNDLE: Keep costs low with a two-year price lock. After that? Your monthly rate stays low.
Find out how we can help at centurylink.com/helpful or call 303.992.3765
Services not available everywhere. © 2017 CenturyLink. All Rights Reserved. Listed broadband speeds vary due to conditions outside of network control, including customer location and equipment, and are not guaranteed. Price Lock – Applies only to the monthly recurring charges for the required 24-month term of qualifying services; excludes all taxes, fees and surcharges, monthly recurring fees for modem/router and professional installation, and shipping and handling fee for customer’s modem or router. Offer requires customer to remain in good standing and terminates if customer changes their account in any manner including any change to the required CenturyLink services (canceled, upgraded, downgraded), telephone number change, or change of physical location of any installed service (including customer moves from location of installed services).

Humanoid Robots – from Fiction to Reality?

KI-Zeitschrift, 4/08, pp. 5-9, December 2008. Humanoid Robots – From Fiction to Reality? Sven Behnke. Humanoid robots have been fascinating people ever since the invention of robots. They are the embodiment of artificial intelligence. While in science fiction, human-like robots act autonomously in complex human-populated environments, in reality, the capabilities of humanoid robots are quite limited. This article reviews the history of humanoid robots, discusses the state-of-the-art and speculates about future developments in the field.
1 Introduction. Humanoid robots, robots with an anthropomorphic body plan and human-like senses, are enjoying increasing popularity as a research tool. More and more groups worldwide work on issues like bipedal locomotion, dexterous manipulation, audio-visual perception, human-robot interaction, adaptive control, and learning, targeted for the application in humanoid robots. These efforts are motivated by the vision to create a new kind of tool: robots that work in close cooperation with humans in the same environment that we designed to suit our …
… 1495 Leonardo da Vinci designed and possibly built a mechanical device that looked like an armored knight. It was designed to sit up, wave its arms, and move its head via a flexible neck while opening and closing its jaw. By the eighteenth century, elaborate mechanical dolls were able to write short phrases, play musical instruments, and perform other simple, life-like acts. In 1921 the word robot was coined by Karel Capek in his theatre play R.U.R. (Rossum's Universal Robots). The mechanical servant in the play had a humanoid appearance. The first humanoid robot to appear in the movies was Maria in the film Metropolis (Fritz Lang, 1926).

Robotic Pets in the Lives of Preschool Children

Robotic pets in the lives of preschool children Peter H. Kahn, Jr., Batya Friedman, Deanne R. Pérez-Granados and Nathan G. Freier University of Washington / Stanford University / University of Washington This study examined preschool children’s reasoning about and behavioral interactions with one of the most advanced robotic pets currently on the retail market, Sony’s robotic dog AIBO. Eighty children, equally divided between two age groups, 34–50 months and 58–74 months, participated in individual sessions with two artifacts: AIBO and a stuffed dog. Evaluation and justification results showed similarities in children’s reasoning across artifacts. In contrast, children engaged more often in apprehensive behavior and attempts at reciprocity with AIBO, and more often mistreated the stuffed dog and endowed it with animation. Discussion focuses on how robotic pets, as representative of an emerging technological genre, may be (a) blurring foundational ontological categories, and (b) impacting children’s social and moral development. Keywords: AIBO, children, human–computer interaction, human–robot interaction, moral development, social development, Value Sensitive Design Animals have long been an important part of children’s lives, offering comfort and companionship, and promoting the development of moral reciprocity and responsibility (Beck & Katcher, 1996; Kahn, 1999; Melson, 2001). Yet in recent years there has been a movement to create robotic pets that mimic aspects of their biological counterparts. In turn, researchers have begun to ask important questions. Can robotic pets, compared to biological pets, provide children with similar developmental outcomes (Druin & Hendler, 2000; Pérez-Granados, 2002; Turkle, 2000)? How do children conceive of this genre of robots? 
It is a genre that some researchers have begun to refer to as “social robots” (Bartneck & Forlizzi, 2004; Breazeal, 2003): robots that, to varying degrees, have some constellation of being personified, embodied, adaptive, and autonomous; and Interaction Studies 7:3 (2006), 405–436.

Robotic Pets in Human Lives: Implications for the Human–Animal Bond and for Human Relationships with Personified Technologies ∗ Gail F

Journal of Social Issues, Vol. 65, No. 3, 2009, pp. 545–567. Robotic Pets in Human Lives: Implications for the Human–Animal Bond and for Human Relationships with Personified Technologies ∗ Gail F. Melson, Purdue University; Peter H. Kahn, Jr., University of Washington; Alan Beck, Purdue University; Batya Friedman, University of Washington. Robotic “pets” are being marketed as social companions and are used in the emerging field of robot-assisted activities, including robot-assisted therapy (RAA). However, the limits to and potential of robotic analogues of living animals as social and therapeutic partners remain unclear. Do children and adults view robotic pets as “animal-like,” “machine-like,” or some combination of both? How do social behaviors differ toward a robotic versus living dog? To address these issues, we synthesized data from three studies of the robotic dog AIBO: (1) a content analysis of 6,438 Internet postings by 182 adult AIBO owners; (2) observations …
∗ Correspondence concerning this article should be addressed to Gail F. Melson, Department of CDFS, 101 Gates Road, Purdue University, West Lafayette, IN 47907-20202 [e-mail: [email protected]]. We thank Brian Gill for assistance with statistical analyses. We also thank the following individuals (in alphabetical order) for assistance with data collection, transcript preparation, and coding: Jocelyne Albert, Nathan Freier, Erik Garrett, Oana Georgescu, Brian Gilbert, Jennifer Hagman, Migume Inoue, and Trace Roberts. This material is based on work supported by the National Science Foundation under Grant No. IIS-0102558 and IIS-0325035. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the National Science Foundation.

Neuroprosthetic Interfaces – the Reality Behind Bionics and Cyborgs

Gerald E. Loeb. NEUROPROSTHETIC INTERFACES – THE REALITY BEHIND BIONICS AND CYBORGS. History and Definitions. The invisible and seemingly magical powers of electricity were employed as early as the ancient Greeks and Romans, who used shocks from electric fish to treat pain. More technological forms of electricity became a favorite of quack medical practitioners in the Victorian era (McNeal, 1977), an unfortunate tradition that persists to this day. In the 20th century the elucidation of neural signaling and the orderly design of electrophysiological instrumentation provided a theoretical foundation for the real therapeutic advances discussed herein (Hambrecht, 1992). Nevertheless, neural prosthetics continues to be haunted by projects that fall along the spectrum from blind empiricism to outright fraud. That problem has been exacerbated by two historical circumstances. The first was the rejection by »rigorous scientists« of early attempts at clinical treatment that then turned out much better than they had predicted (Sterling et al., 1971). This tended to encourage the empiricists to ignore the science even when it was helpful or necessary. The second was the inevitable fictionalization by the entertainment industry, which invented and adopted terminology and projects in ways that the lay public often did not distinguish from reality. The terms defined below are increasingly encountered in both scientific and lay discussions. I have selected the usages most relevant to this discussion; other usages occur: – Neural Control – The use of electronic interfaces with the nervous system both to study normal function and to repair dysfunction (as used by Dr.

A Politico-Social History of Algol (With a Chronology in the Form of a Log Book)

A Politico-Social History of Algol (With a Chronology in the Form of a Log Book). R. W. BEMER. Introduction. This is an admittedly fragmentary chronicle of events in the development of the algorithmic language ALGOL. Nevertheless, it seems pertinent, while we await the advent of a technical and conceptual history, to outline the matrix of forces which shaped that history in a political and social sense. Perhaps the author's role is only that of recorder of visible events, rather than the complex interplay of ideas which have made ALGOL the force it is in the computational world. It is true, as Professor Ershov stated in his review of a draft of the present work, that "the reading of this history, rich in curious details, nevertheless does not enable the beginner to understand why ALGOL, with a history that would seem more disappointing than triumphant, changed the face of current programming". I can only state that the time scale and my own lesser competence do not allow the tracing of conceptual development in requisite detail. Books are sure to follow in this area, particularly one by Knuth. A further defect in the present work is the relatively lesser availability of European input to the log, although I could claim better access than many in the U.S.A. This is regrettable in view of the relatively stronger support given to ALGOL in Europe. Perhaps this calmer acceptance had the effect of reducing the number of significant entries for a log such as this. Following a brief view of the pattern of events come the entries of the chronology, or log, numbered for reference in the text.

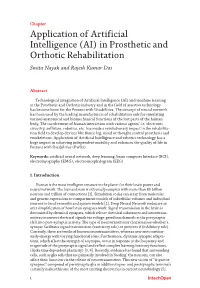

Robotics Section Guide for Web.Indd

ROBOTICS GUIDE
Columns: The Robot | Product Code | Description | The Brand | Assembly Required | Soldering Required | Tools That May Be Helpful | Devices Req | Software | Teachers Notes Available | Early Years | KS1 | KS2 | KS3 | KS4 | Higher Education | Hobby/Home School
Ready to Go Robot
VEX 123! | 70-6250 | CLICK HERE | VEX | No | ✘ | None | No | ✔ ✔ ✔ ✔
Dot | 70-1101 | CLICK HERE | Wonder Workshop | No | ✘ | iOS or Android phones/tablets. Apps are also available for Kindle. | Free Apps to download | ✔ ✔ ✔ ✔
Dash | 70-1100 | CLICK HERE | Wonder Workshop | No | ✘ | iOS or Android phones/tablets. Apps are also available for Kindle. | Free Apps to download | ✔ ✔ ✔ ✔ ✔
Cue | 70-1108 | CLICK HERE | Wonder Workshop | No | ✘ | iOS or Android phones/tablets. Apps are also available for Kindle. | Free Apps to download | ✔ ✔ ✔ ✔
Codey Rocky | 75-0516 | CLICK HERE | Makeblock | No | ✘ | PC, Laptop | Free downloadable Mblock Software | ✔ ✔ ✔ ✔
Ozobot | 70-8200 | CLICK HERE | Ozobot | No | ✘ | PC, Laptop | Free downloads | ✔ ✔ ✔ ✔
Ohbot | 76-0000 | CLICK HERE | Ohbot | No | ✘ | PC, Laptop | Free downloads for Windows or Pi | ✔ ✔ ✔ ✔
Humanoid Robots
NAO | 70-8893 | CLICK HERE | SoftBank Robotics | No | ✘ | PC, Laptop or Ipad | Choregraph - free to download | ✔ ✔ ✔ ✔ ✔ ✔ ✔
Pepper | 70-8870 | CLICK HERE | SoftBank Robotics | No | ✘ | PC, Laptop or Ipad | Choregraph - free to download | ✔ ✔ ✔ ✔ ✔ ✔ ✔
Ohbot | 76-0001 | CLICK HERE | Ohbot | Assembly is part of the fun & learning! | ✘ | PC, Laptop | Free downloads for Windows or Pi | ✔ ✔ ✔ ✔
FABLE | 00-0408 | CLICK HERE | Shape Robotics | Assembly is part of the fun & learning! | ✘ | PC, Laptop or Ipad | Free Downloadable | ✔ ✔ ✔ ✔
VEX GO! | 70-6311 | CLICK HERE | VEX | Assembly is part of the fun & learning! | ✘ | Windows, Mac,

Security Applications of Formal Language Theory

Dartmouth College Dartmouth Digital Commons Computer Science Technical Reports Computer Science 11-25-2011 Security Applications of Formal Language Theory Len Sassaman Dartmouth College Meredith L. Patterson Dartmouth College Sergey Bratus Dartmouth College Michael E. Locasto Dartmouth College Anna Shubina Dartmouth College Follow this and additional works at: https://digitalcommons.dartmouth.edu/cs_tr Part of the Computer Sciences Commons Dartmouth Digital Commons Citation Sassaman, Len; Patterson, Meredith L.; Bratus, Sergey; Locasto, Michael E.; and Shubina, Anna, "Security Applications of Formal Language Theory" (2011). Computer Science Technical Report TR2011-709. https://digitalcommons.dartmouth.edu/cs_tr/335 This Technical Report is brought to you for free and open access by the Computer Science at Dartmouth Digital Commons. It has been accepted for inclusion in Computer Science Technical Reports by an authorized administrator of Dartmouth Digital Commons. For more information, please contact [email protected]. Security Applications of Formal Language Theory Dartmouth Computer Science Technical Report TR2011-709 Len Sassaman, Meredith L. Patterson, Sergey Bratus, Michael E. Locasto, Anna Shubina November 25, 2011 Abstract We present an approach to improving the security of complex, composed systems based on formal language theory, and show how this approach leads to advances in input validation, security modeling, attack surface reduction, and ultimately, software design and programming methodology. We cite examples based on real-world security flaws in common protocols representing different classes of protocol complexity. We also introduce a formalization of an exploit development technique, the parse tree differential attack, made possible by our conception of the role of formal grammars in security. 
These insights make possible future advances in software auditing techniques applicable to static and dynamic binary analysis, fuzzing, and general reverse-engineering and exploit development.

Application of Artificial Intelligence (AI) in Prosthetic and Orthotic Rehabilitation Smita Nayak and Rajesh Kumar Das

Chapter: Application of Artificial Intelligence (AI) in Prosthetic and Orthotic Rehabilitation. Smita Nayak and Rajesh Kumar Das. Abstract: Technological integration of Artificial Intelligence (AI) and machine learning in the prosthetic and orthotic industry and in the field of assistive technology has become a boon for Persons with Disabilities. The concept of the neural network has been used by the leading manufacturers of rehabilitation aids for simulating various anatomical and biomechanical functions of the lost parts of the human body. The interaction of humans with various agents, i.e. electronic circuitry, software, robotics, etc., has made a revolutionary impact in the rehabilitation field, leading to devices such as the bionic leg, mind- or thought-controlled prostheses, and exoskeletons. The application of Artificial Intelligence and robotics technology has a huge impact on achieving independent mobility and enhances the quality of life of Persons with Disabilities (PwDs). Keywords: artificial neural network, deep learning, brain computer interface (BCI), electromyography (EMG), electroencephalogram (EEG). 1. Introduction. Humans are the most intelligent creatures on the planet owing to their brain power and neural networks. The human brain is extremely complex, with more than 80 billion neurons and trillions of connections [1]. Simulation scales can range from molecular and genetic expressions to compartment models of subcellular volumes and individual neurons to local networks and system models [2]. Deep neural network nodes are an oversimplification of how brain synapses work. Signal transmission in the brain is dominated by chemical synapses, which release chemical substances and neurotransmitters to convert electrical signals via voltage-gated ion channels at the presynaptic cleft into post-synaptic activity.

Fetishism and the Culture of the Automobile

FETISHISM AND THE CULTURE OF THE AUTOMOBILE. James Duncan Mackintosh, B.A. (Hons.), Simon Fraser University, 1985. THESIS SUBMITTED IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF MASTER OF ARTS in the Department of Communication. © James Mackintosh 1990. SIMON FRASER UNIVERSITY, August 1990. All rights reserved. This work may not be reproduced in whole or in part, by photocopy or other means, without permission of the author.
APPROVAL. NAME: James Duncan Mackintosh. DEGREE: Master of Arts (Communication). TITLE OF THESIS: Fetishism and the Culture of the Automobile. EXAMINING COMMITTEE: Chairman: Dr. William D. Richards, Jr.; Dr. Martin Laba, Associate Professor, Senior Supervisor; Dr. Alison C.M. Beale, Assistant Professor; Dr. Jerry Zaslove, Associate Professor, Department of English, External Examiner. DATE APPROVED: 20 August 1990.
PARTIAL COPYRIGHT LICENCE. I hereby grant to Simon Fraser University the right to lend my thesis or dissertation (the title of which is shown below) to users of the Simon Fraser University Library, and to make partial or single copies only for such users or in response to a request from the library of any other university, or other educational institution, on its own behalf or for one of its users. I further agree that permission for multiple copying of this thesis for scholarly purposes may be granted by me or the Dean of Graduate Studies. It is understood that copying or publication of this thesis for financial gain shall not be allowed without my written permission. Title of Thesis/Dissertation: Fetishism and the Culture of the Automobile. Author: James Duncan Mackintosh (name), 20 August 1990 (date).
ABSTRACT. This thesis explores the notion of fetishism as an appropriate instrument of cultural criticism to investigate the rites and rituals surrounding the automobile.

Warren Mcculloch and the British Cyberneticians

Warren McCulloch and the British cyberneticians Article (Accepted Version) Husbands, Phil and Holland, Owen (2012) Warren McCulloch and the British cyberneticians. Interdisciplinary Science Reviews, 37 (3). pp. 237-253. ISSN 0308-0188 This version is available from Sussex Research Online: http://sro.sussex.ac.uk/id/eprint/43089/ This document is made available in accordance with publisher policies and may differ from the published version or from the version of record. If you wish to cite this item you are advised to consult the publisher’s version. Please see the URL above for details on accessing the published version. Copyright and reuse: Sussex Research Online is a digital repository of the research output of the University. Copyright and all moral rights to the version of the paper presented here belong to the individual author(s) and/or other copyright owners. To the extent reasonable and practicable, the material made available in SRO has been checked for eligibility before being made available. Copies of full text items generally can be reproduced, displayed or performed and given to third parties in any format or medium for personal research or study, educational, or not-for-profit purposes without prior permission or charge, provided that the authors, title and full bibliographic details are credited, a hyperlink and/or URL is given for the original metadata page and the content is not changed in any way. http://sro.sussex.ac.uk Warren McCulloch and the British Cyberneticians1 Phil Husbands and Owen Holland Dept. Informatics, University of Sussex Abstract Warren McCulloch was a significant influence on a number of British cyberneticians, as some British pioneers in this area were on him.