THE WORLD in 30 MINUTES I've Been Processing Text for a Living

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Doctor's Thesis Studies on Multilingual Information Processing

NAIST-IS-DT9761021 Doctor’s Thesis Studies on Multilingual Information Processing on the Internet Akira Maeda September 18, 2000 Department of Information Systems Graduate School of Information Science Nara Institute of Science and Technology Doctor’s Thesis submitted to Graduate School of Information Science, Nara Institute of Science and Technology in partial fulfillment of the requirements for the degree of DOCTOR of ENGINEERING Akira Maeda Thesis committee: Shunsuke Uemura, Professor Yuji Matsumoto, Professor Minoru Ito, Professor Masatoshi Yoshikawa, Associate Professor Studies on Multilingual Information Processing on the Internet ∗ Akira Maeda Abstract With the increasing popularity of the Internet in various part of the world, the languages used for Web documents are expanded from English to various languages. However, there are many unsolved problems in order to realize an information system which can handle such multilingual documents in a unified manner. From the user’s point of view, three most fundamental text processing functions for the general use of the World Wide Web are display, input, and retrieval of the text. However, for languages such as Japanese, Chinese, and Korean, character fonts and input methods that are necessary for displaying and inputting texts, are not always installed on the client side. From the system’s point of view, one of the most troublesome problems is that, many Web documents do not have meta information of the character coding system and the language used for the document itself, although character coding systems used for Web documents vary according to the language. It may result in troubles such as incorrect display on Web browsers, and inaccurate indexing on Web search engines. -

Alphabetization† †† Wendy Korwin*, Haakon Lund** *119 W

Knowl. Org. 46(2019)No.3 209 W. Korwin and H. Lund. Alphabetization Alphabetization† †† Wendy Korwin*, Haakon Lund** *119 W. Dunedin Rd., Columbus, OH 43214, USA, <[email protected]> **University of Copenhagen, Department of Information Studies, DK-2300 Copenhagen S Denmark, <[email protected]> Wendy Korwin received her PhD in American studies from the College of William and Mary in 2017 with a dissertation entitled Material Literacy: Alphabets, Bodies, and Consumer Culture. She has worked as both a librarian and an archivist, and is currently based in Columbus, Ohio, United States. Haakon Lund is Associate Professor at the University of Copenhagen, Department of Information Studies in Denmark. He is educated as a librarian (MLSc) from the Royal School of Library and Information Science, and his research includes research data management, system usability and users, and gaze interaction. He has pre- sented his research at international conferences and published several journal articles. Korwin, Wendy and Haakon Lund. 2019. “Alphabetization.” Knowledge Organization 46(3): 209-222. 62 references. DOI:10.5771/0943-7444-2019-3-209. Abstract: The article provides definitions of alphabetization and related concepts and traces its historical devel- opment and challenges, covering analog as well as digital media. It introduces basic principles as well as standards, norms, and guidelines. The function of alphabetization is considered and related to alternatives such as system- atic arrangement or classification. Received: 18 February 2019; Revised: 15 March 2019; Accepted: 21 March 2019 Keywords: order, orders, lettering, alphabetization, arrangement † Derived from the article of similar title in the ISKO Encyclopedia of Knowledge Organization Version 1.0; published 2019-01-10. -

Frequently Asked Questions and Selected Resources on Cyrillic Multilingual Computing

Frequently Asked Questions and Selected Resources on Cyrillic Multilingual Computing Kevin S. Hawkins SUMMARY. The persistence of multiple standards, both de jure and de facto, for handling text on the computer is perhaps the most perplexing problem facing those who wish to use a computer in more than one lan- guage, or even to provide access to data across more than one operating system and application platform. Using Cyrillic as an example, this ar- ticle attempts to answer questions commonly asked by non-expert computer users who wish to work in more than one language. [Article copies available for a fee from The Haworth Document Delivery Service: 1-800-HAWORTH. E-mail address: <[email protected]> Website: <http://www.HaworthPress.com> © 2005 by The Haworth Press, Inc. All rights reserved.] Kevin S. Hawkins, MS, is Electronic Publishing Librarian, Scholarly Publishing Office, University Library, University of Michigan. Address correspondence to: Kevin S. Hawkins, 300 Hatcher Graduate Library North, 920 North University Avenue, Ann Arbor, MI 48109-1205 USA (E-mail: kshawkin@ umich.edu). The author wishes to thank David Dubin and the other members of the GSLIS Re- search Writing Group at the University of Illinois at Urbana-Champaign for their com- prehensive advice, and Troy Williams, Benjamin Rifkin, Michael Brewer, David Dubin, Tatiana Poliakevitch, Irina Roskin, Peter Houtzagers, and Uladzimir Katkouski for their specific corrections and suggestions. [Haworth co-indexing entry note]: “Frequently Asked Questions and Selected Resources on Cyrillic Multilingual Computing.” Hawkins, Kevin S. Co-published simultaneously in Slavic & East European Infor- mation Resources (The Haworth Information Press, an imprint of The Haworth Press, Inc.) Vol. -

Sample Chapter 3

108_GILLAM.ch03.fm Page 61 Monday, August 19, 2002 1:58 PM 3 Architecture: Not Just a Pile of Code Charts f you’re used to working with ASCII or other similar encodings designed I for European languages, you’ll find Unicode noticeably different from those other standards. You’ll also find that when you’re dealing with Unicode text, various assumptions you may have made in the past about how you deal with text don’t hold. If you’ve worked with encodings for other languages, at least some characteristics of Unicode will be familiar to you, but even then, some pieces of Unicode will be unfamiliar. Unicode is more than just a big pile of code charts. To be sure, it includes a big pile of code charts, but Unicode goes much further. It doesn’t just take a bunch of character forms and assign numbers to them; it adds a wealth of infor- mation on what those characters mean and how they are used. Unlike virtually all other character encoding standards, Unicode isn’t de- signed for the encoding of a single language or a family of closely related lan- guages. Rather, Unicode is designed for the encoding of all written languages. The current version doesn’t give you a way to encode all written languages (and in fact, this concept is such a slippery thing to define that it probably never will), but it does provide a way to encode an extremely wide variety of lan- guages. The languages vary tremendously in how they are written, so Unicode must be flexible enough to accommodate all of them. -

Surface Or Essence: Beyond the Coded Character Set Model

Surface or Essence: Beyond the Coded Character Set Model. Shigeki Moro1) Abstract For almost all users, the coded character set model is the only way to use characters with their computers. Although there have been frequent arguments about the many problems of coded character sets, until now, there was almost nothing on the philosophical consideration on a character in the field of Computer science. In this paper, the similarity between the coded character set model and Aristotle’s Essentialism and the consequent problems derived from it, is discussed. Then the importance of the surface of the character is pointed out using the ´ecrituretheory of Jacques Derrida. Lastly, the Chaon model of the CHISE project is introduced as one of the solutions to this problem. Keywords: Unicode, Aristotle’s Essentialism, Derrida’s Theory of ´ecriture,Chaon model “Depth must be hidden. Where? On the surface.” other local and super character code sets are still —Hugo von Hofmannsthal (1874-1929) being developed, and the repertoires of the existing character sets are increasing even now. What users 1 Introduction. can only do is to choose and follow these character sets. Writing, is not only considered as one of the most The main reason for this is that there are both fundamental mediums of intellectual activities, but sides: Writing is not only dependent on a context, also a frequently used one, which is not restricted but that it is transmitted exceeding the context (it to the use of computers alone. Needless to say that is contrastive with oral language being indivisible the coded character set model (abbreviation being from a context). -

Section 18.1, Han

The Unicode® Standard Version 13.0 – Core Specification To learn about the latest version of the Unicode Standard, see http://www.unicode.org/versions/latest/. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and the publisher was aware of a trade- mark claim, the designations have been printed with initial capital letters or in all capitals. Unicode and the Unicode Logo are registered trademarks of Unicode, Inc., in the United States and other countries. The authors and publisher have taken care in the preparation of this specification, but make no expressed or implied warranty of any kind and assume no responsibility for errors or omissions. No liability is assumed for incidental or consequential damages in connection with or arising out of the use of the information or programs contained herein. The Unicode Character Database and other files are provided as-is by Unicode, Inc. No claims are made as to fitness for any particular purpose. No warranties of any kind are expressed or implied. The recipient agrees to determine applicability of information provided. © 2020 Unicode, Inc. All rights reserved. This publication is protected by copyright, and permission must be obtained from the publisher prior to any prohibited reproduction. For information regarding permissions, inquire at http://www.unicode.org/reporting.html. For information about the Unicode terms of use, please see http://www.unicode.org/copyright.html. The Unicode Standard / the Unicode Consortium; edited by the Unicode Consortium. — Version 13.0. Includes index. ISBN 978-1-936213-26-9 (http://www.unicode.org/versions/Unicode13.0.0/) 1. -

Electronic Document Preparation Pocket Primer

Electronic Document Preparation Pocket Primer Vít Novotný December 4, 2018 Creative Commons Attribution 3.0 Unported (cc by 3.0) Contents Introduction 1 1 Writing 3 1.1 Text Processing 4 1.1.1 Character Encoding 4 1.1.2 Text Input 12 1.1.3 Text Editors 13 1.1.4 Interactive Document Preparation Systems 13 1.1.5 Regular Expressions 14 1.2 Version Control 17 2 Markup 21 2.1 Meta Markup Languages 22 2.1.1 The General Markup Language 22 2.1.2 The Extensible Markup Language 23 2.2 Markup on the World Wide Web 28 2.2.1 The Hypertext Markup Language 28 2.2.2 The Extensible Hypertext Markup Language 29 2.2.3 The Semantic Web and Linked Data 31 2.3 Document Preparation Systems 32 2.3.1 Batch-oriented Systems 35 2.3.2 Interactive Systems 36 2.4 Lightweight Markup Languages 39 3 Design 41 3.1 Fonts 41 3.2 Structural Elements 42 3.2.1 Paragraphs and Stanzas 42 iv CONTENTS 3.2.2 Headings 45 3.2.3 Tables and Lists 46 3.2.4 Notes 46 3.2.5 Quotations 47 3.3 Page Layout 48 3.4 Color 48 3.4.1 Theory 48 3.4.2 Schemes 51 Bibliography 53 Acronyms 61 Index 65 Introduction With the advent of the digital age, typesetting has become available to virtually anyone equipped with a personal computer. Beautiful text documents can now be crafted using free and consumer-grade software, which often obviates the need for the involvement of a professional designer and typesetter. -

Automated Malware Analysis Report for Set-Up.Exe

ID: 355727 Sample Name: Set-up.exe Cookbook: default.jbs Time: 13:38:24 Date: 21/02/2021 Version: 31.0.0 Emerald Table of Contents Table of Contents 2 Analysis Report Set-up.exe 4 Overview 4 General Information 4 Detection 4 Signatures 4 Classification 4 Startup 4 Malware Configuration 4 Yara Overview 4 Sigma Overview 4 Signature Overview 4 Compliance: 5 Mitre Att&ck Matrix 5 Behavior Graph 5 Screenshots 6 Thumbnails 6 Antivirus, Machine Learning and Genetic Malware Detection 7 Initial Sample 7 Dropped Files 7 Unpacked PE Files 7 Domains 7 URLs 7 Domains and IPs 8 Contacted Domains 8 URLs from Memory and Binaries 8 Contacted IPs 8 General Information 8 Simulations 9 Behavior and APIs 9 Joe Sandbox View / Context 9 IPs 9 Domains 9 ASN 9 JA3 Fingerprints 9 Dropped Files 9 Created / dropped Files 10 Static File Info 10 General 10 File Icon 10 Static PE Info 10 General 10 Authenticode Signature 11 Entrypoint Preview 11 Rich Headers 12 Data Directories 12 Sections 13 Resources 13 Imports 14 Version Infos 16 Possible Origin 16 Network Behavior 16 Code Manipulations 16 Statistics 16 System Behavior 16 Analysis Process: Set-up.exe PID: 3976 Parent PID: 5896 16 Copyright null 2021 Page 2 of 21 General 16 File Activities 17 File Created 17 File Written 17 File Read 21 Registry Activities 21 Key Value Created 21 Disassembly 21 Code Analysis 21 Copyright null 2021 Page 3 of 21 Analysis Report Set-up.exe Overview General Information Detection Signatures Classification Sample Set-up.exe Name: CCoonntttaaiiinnss fffuunncctttiiioonnaallliiitttyy tttoo -

I18n, M17n, Unicode, and All That

I18N, M17N, UNICODE, AND ALL THAT Tim Bray General-Purpose Web Geek Sun Microsystems /[a-zA-Z]+/ This is probably a bug. The Problems We Have To Solve Identifying characters Storage Byte⇔character mapping Transfer Good string API Published in 1996; it has 74 major sections, most of which discuss whole families of writing systems. www.w3.org/TR/charmod Identifying Characters 1,1 17 “Planes”14,1 each with 64k code points: U+0000 – U+10FFFF BMP 12 Unicode Code Points 0 0000 1 0000 Basic Multilingual Plane 2 0000 Dead Languages & Math 3 0000 Han Characters 4 0000 5 0000 Non-BMP 6 0000 7 0000 99,024 characters defined in Unicode 5.0 “Astral” Planes 8 0000 9 0000 A 0000 B 0000 C 0000 D 0000 E 0000 Language F 0000 10 0000 Private Use T ags The Basic Multilingual Plane (BMP) U+0000 – U+FFFF 0000 Alphabets 1000 2000 3000 Punctuation 4000 Asian-language Support 5000 Han Characters 6000 7000 8000 9000 A000 Y B000 i Hangul C000 D000 E000 (*: Legacy-Compatibility junk)Surrogates F000 Private Use * Unicode Character Database 00C8;LATIN CAPITAL LETTER E WITH GRAVE;Lu;0;L;0045 0300;;;;N;LATIN CAPITAL LETTER E GRAVE;;;00E8; “Character #200 is LATIN CAPITAL LETTER E WITH GRAVE, a lower-case letter, combining class 0, renders L-to-R, can be composed by U+0045/U+0300, had a differentÈ name in Unicode 1, isn’t a number, lowercase is U+00E8.” www.unicode.org/Public/Unidata $ U+0024 DOLLAR SIGN Ž U+017D LATIN CAPITAL LETTER Z WITH CARON ® U+00AE REGISTERED SIGN ή U+03AE GREEK SMALL LETTER ETA WITH TONOS Ж U+0416 CYRILLIC CAPITAL LETTER ZHE א U+05D0 HEBREW LETTER -

Omega — Why Bother with Unicode?

Omega — why Bother with Unicode? Robin Fairbairns University of Cambridge Computer Laboratory Pembroke Street Cambridge CB23QG UK Email: [email protected] Abstract Yannis Haralambous’ and John Plaice’s Omega system employs Unicode as its coding system. This short note (which previously appeared in the UKTUG mag- azine Baskerville volume 5, number 3) considers the rationale behind Unicode itself and behind its adoption for Omega. Introduction and written by Yannis Haralambous (Lille) and John Plaice (Universit´e Laval, Montr´eal). It follows on As almost all T X users who ‘listen to the networks’ E quite naturally from Yannis’ work on exotic lan- at all will know, the Francophone T X users’ group, E guages (see, among many examples, Haralambous, GUTenberg, arranged a meeting in March at CERN 1990; 1991; 1993; 1994), which have always seemed (Geneva) to ‘launch’ Ω. The UKTUG responded to to me to be bedevilled by problems of text encoding. GUTenberg’s plea for support to enable T Xusers E Simply, Ω (the program) is able to read scripts from impoverished countries to attend, by making that are encoded in Unicode (or in some other code the first disbursement from the UKTUG’s newly- that is readily transformable to Unicode), and then established Cathy Booth fund. The meeting was to process them in the same way that T Xdoes. well attended, with representatives from both East- E Parallel work has defined formats for fonts and other ern and Western Europe (including me; I also at- necessary files to deal with the demands arising from tended with UKTUG money), and one representa- AFONT Unicode input, and upgraded versions of MET , tive from Australia (though he is presently resident the virtual font utilities, and so on, have been writ- in Europe, too). -

The Unicode Standard, Version 4.0--Online Edition

This PDF file is an excerpt from The Unicode Standard, Version 4.0, issued by the Unicode Consor- tium and published by Addison-Wesley. The material has been modified slightly for this online edi- tion, however the PDF files have not been modified to reflect the corrections found on the Updates and Errata page (http://www.unicode.org/errata/). For information on more recent versions of the standard, see http://www.unicode.org/standard/versions/enumeratedversions.html. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and Addison-Wesley was aware of a trademark claim, the designations have been printed in initial capital letters. However, not all words in initial capital letters are trademark designations. The Unicode® Consortium is a registered trademark, and Unicode™ is a trademark of Unicode, Inc. The Unicode logo is a trademark of Unicode, Inc., and may be registered in some jurisdictions. The authors and publisher have taken care in preparation of this book, but make no expressed or implied warranty of any kind and assume no responsibility for errors or omissions. No liability is assumed for incidental or consequential damages in connection with or arising out of the use of the information or programs contained herein. The Unicode Character Database and other files are provided as-is by Unicode®, Inc. No claims are made as to fitness for any particular purpose. No warranties of any kind are expressed or implied. The recipient agrees to determine applicability of information provided. Dai Kan-Wa Jiten used as the source of reference Kanji codes was written by Tetsuji Morohashi and published by Taishukan Shoten. -

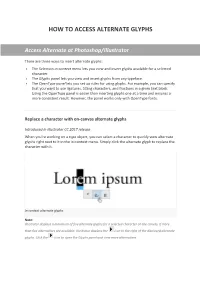

How to Access Alternate Glyphs

HOW TO ACCESS ALTERNATE GLYPHS Access Alternate at Photoshop/Illustrator There are three ways to insert alternate glyphs: • The Selection in-context menu lets you view and insert glyphs available for a selected character. • The Glyphs panel lets you view and insert glyphs from any typeface. • The OpenType panel lets you set up rules for using glyphs. For example, you can specify that you want to use ligatures, titling characters, and fractions in a given text block. Using the OpenType panel is easier than inserting glyphs one at a time and ensures a more consistent result. However, the panel works only with OpenType fonts. Replace a character with on-canvas alternate glyphs Introduced in Illustrator CC 2017 release When you're working on a type object, you can select a character to quickly view alternate glyphs right next to it in the in-context menu. Simply click the alternate glyph to replace the character with it. In-context alternate glyphs Note: Illustrator displays a maximum of five alternate glyphs for a selected character on the canvas. If more than five alternatives are available, Illustrator displays the icon to the right of the displayed alternate glyphs. Click the icon to open the Glyphs panel and view more alternatives. Glyphs panel overview You use the Glyphs panel (Window > Type > Glyphs) to view the glyphs in a font and insert specific glyphs in your document. By default, the Glyphs panel displays all the glyphs for the currently selected font. You can change the font by selecting a different font family and style at the bottom of the panel.