Parallelism for IA and IA for Parallelism

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Intel® Quick Sync Video Technology Guide

WP80 Superguide 3: THE CLOUD VIDEO SUPERGUIDE, JANUARY/FEBRUARY 2015. SPONSORED CONTENT. Intel® Quick Sync Video Technology and Intel® Xeon® Processor Based Servers: Flexible Transcode Performance and Quality

Video transcoding involves converting one compressed video format to another. In the past this process has been a compute-intensive task which demanded a large amount of precious CPU resources. Intel® Quick Sync Video (QSV) can enable hardware-accelerated transcoding to deliver better performance than transcoding on the CPU without sacrificing quality. First introduced in 2011, Intel Quick Sync technology is available in the Intel® Xeon® Processor E3-1200 v3 with Intel HD Graphics P4600/4700 and Iris™ Pro P5200. (From here on, we’ll simply refer to …

… provides enterprise-quality HEVC and audio codecs, Intel® VTune™ Amplifier XE performance analysis tools and Video Quality Caliper stream quality analyzer. Additionally, product family members, Intel® Video Pro Analyzer and Intel® Stress Bitstreams and Encoder bundles enable production-scale validation and debug of encode, transcode, and decode and playback applications. Intel Media Server Studio SDK implements many codec and tools components initially in software, and later as hybrid (software and hardware) or entirely in hardware.

… and boost image quality. Some key improvements include the following:
• Additional JPEG/MJPEG decode in the multi-format codec engine. This support is on top of existing energy-efficient, high-performance AVC encode/decode that sustains multiple 4K and Ultra HD video streams.
• A dedicated new video quality engine to provide extensive video processing at low power consumption
• Programmable and media-optimized EU (execution units)/samplers for high quality

GPU Developments 2018

GPU Developments 2018. © Copyright Jon Peddie Research 2019. All rights reserved. Reproduction in whole or in part is prohibited without written permission from Jon Peddie Research. This report is the property of Jon Peddie Research (JPR) and made available to a restricted number of clients only upon these terms and conditions. Agreement not to copy or disclose. This report and all future reports or other materials provided by JPR pursuant to this subscription (collectively, “Reports”) are protected by: (i) federal copyright, pursuant to the Copyright Act of 1976; and (ii) the nondisclosure provisions set forth immediately following. License, exclusive use, and agreement not to disclose. Reports are the trade secret property exclusively of JPR and are made available to a restricted number of clients, for their exclusive use and only upon the following terms and conditions. JPR grants site-wide license to read and utilize the information in the Reports, exclusively to the initial subscriber to the Reports, its subsidiaries, divisions, and employees (collectively, “Subscriber”). The Reports shall, at all times, be treated by Subscriber as proprietary and confidential documents, for internal use only. Subscriber agrees that it will not reproduce for or share any of the material in the Reports (“Material”) with any entity or individual other than Subscriber (“Shared Third Party”) (collectively, “Share” or “Sharing”), without the advance written permission of JPR. Subscriber shall be liable for any breach of this agreement and shall be subject to cancellation of its subscription to Reports. Without limiting this liability, Subscriber shall be liable for any damages suffered by JPR as a result of any Sharing of any Material, without advance written permission of JPR.

Keepixo’s Genova Live:Speed Is the Latest Addition to Genova Virtualizable Software Family of Products

High Density Video Transcoding. Keepixo’s Genova Live:Speed is the latest addition to Genova Virtualizable Software family of products. It runs on the Kontron SYMKLOUD platform and leverages Intel’s Quick Sync Video (QSV) technology to dramatically increase transcoding density; minimize power consumption while meeting professional service grade levels.

Media Processing Acceleration. The explosive growth of Internet video traffic has put pressure on video transcoding infrastructures to optimize bandwidth, power consumption and cost of operations. High density transcoding solutions such as Intel’s Quick Sync Video technology have emerged to address these challenges. They introduce various levels of HW acceleration to off-load computationally intensive tasks. Initially targeted at consumer applications, high density transcoding has become a viable option for professional transcoding infrastructures when included in suitable packages that address the requirements of professional applications. Intel Quick Sync Video (QSV) is available on a range of Intel i7 and Xeon E3 processors fitted with on-chip graphics.

Genova Live:Speed. Keepixo worked with Kontron to leverage the Kontron SYMKLOUD Converged Infrastructure platform and developed a comprehensive package that optimizes the performance of QSV and allows smooth deployment of highly dense transcoding infrastructures comprising of hundreds of services. Keepixo selected the Kontron SymKloud MS2910 platform to implement its high density transcoding solution. The MS2910 platform comes in a 2U (21” depth) chassis, dual hot-swappable 10GbE switches, and can accommodate up to 9 modular compute servers, each hosting 2 independent CPUs for a total of up to 18 CPUs per chassis. The SymKloud compute nodes can be of different mix-and-match processor

Rethinking Visual Cloud Workload Distribution

WHITE PAPER. Media and Communications: Content Creation and Distribution. Rethinking Visual Cloud Workload Distribution

Creating a New Model. With visual computing workloads growing at an accelerating pace, cloud service providers (CSPs), communications service providers (CoSPs), and enterprises are rethinking the physical and virtual distribution of compute resources to more effectively balance cost and deployment efficiency while achieving exceptional performance. Visual cloud deployments accommodate a diverse range of streaming workloads, encompassing media processing and delivery, cloud graphics, cloud gaming, media analytics, and immersive media. Contending with the onslaught of new visual workloads will require more nimble, scalable, virtualized infrastructures; the capability of shifting workloads to the network edge when appropriate; and a collection of tools, software, and hardware components to support individual use cases fluidly. Advanced network technologies and cloud architectures are essential for agile distribution of visual cloud workloads.

A 2017 report, Cisco Visual Networking Index: Forecast and Methodology, 2016–2021, projected strong growth in all Internet and managed IP video-related sectors. Compound annual growth rate (CAGR) figures during this time span, calculated in petabytes per month, included these predictions:
• Content delivery network (CDN) traffic: 44 percent increase globally
• Consumer-managed IP video traffic: 19,619 petabytes per month (14 percent increase) by 2021
• Consumer Internet video: 27 percent increase for fixed, 55 percent increase for mobile

The impact of this media growth on cloud-based data centers will produce a burden on those CSPs, CoSPs, and enterprises that are not equipped to deal with large-scale media workloads dynamically. Solutions to this challenge include:
• Increasing flexibility and optimizing processing: Virtualization and software-defined infrastructure (SDI) make it easier to balance workloads on available resources.
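The CAGR figures quoted in this excerpt can be read with a short compounding sketch. It assumes that each quoted percentage is a constant annual rate applied over the five years from 2016 to 2021; the function names are hypothetical and are not taken from the Cisco report or the white paper.

```python
def project_traffic(baseline_pb_per_month: float, cagr: float, years: int) -> float:
    """Compound a monthly-traffic baseline forward by `years` at annual rate `cagr`."""
    return baseline_pb_per_month * (1.0 + cagr) ** years


def implied_baseline(target_pb_per_month: float, cagr: float, years: int) -> float:
    """Invert the compounding: the starting value that reaches the target."""
    return target_pb_per_month / (1.0 + cagr) ** years


if __name__ == "__main__":
    # Assumption: 14 percent is the CAGR and 2016-2021 spans 5 compounding years.
    start = implied_baseline(19_619, 0.14, 5)
    print(f"Implied 2016 level: {start:.0f} PB/month")
```

Under those assumptions, `implied_baseline(19_619, 0.14, 5)` gives the 2016 level that the 2021 projection implies; it is a derived estimate, not a figure from the report.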

Intel® Quick Sync Video and FFmpeg Installation and Validation Guide

White paper. Intel® Quick Sync Video and FFmpeg Installation and Validation Guide

Introduction. Intel® Quick Sync Video technology on Intel® Iris™ Pro Graphics and Intel® HD graphics provides transcode acceleration on Linux* systems in FFmpeg* 2.8 and later editions. This paper is a detailed step-by-step guide to enabling h264_qsv, mpeg2_qsv, and hevc_qsv hardware accelerated codecs in the FFmpeg framework. For a quicker overview, please see this article.

Performance note: The *_qsv implementations are intended to provide easy access to Intel hardware capabilities for FFmpeg users, but are less efficient than custom applications optimized for Intel® Media Server Studio.

Document note: Monospace type = command line inputs/outputs. Pink = highlights to call special attention to important command line I/O details.

Getting Started
1. Install Intel Media Server Studio for Linux. Download from software.intel.com/intel-media-server-studio. This is a prerequisite for the *_qsv codecs as it provides the foundation for encode acceleration. See the next chapter for more info on edition choices. Note: Professional edition install is required for hevc_qsv.
2. Get the latest FFmpeg source from https://www.FFmpeg.org/download.html. Intel Quick Sync Video support is available in FFmpeg 2.8 and later editions. The install steps outlined below were verified with ffmpeg release 3.2.2.
3. Configure FFmpeg with “--enable-libmfx --enable-nonfree”, build, and install. This requires copying include files to /opt/intel/mediasdk/include/mfx and adding a libmfx.pc file. More details below.
4. Test transcode with an accelerated codec such as “-vcodec h264_qsv” on the FFmpeg command line.
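The test transcode in step 4 can be sketched as a small helper that assembles the FFmpeg command line. This is a minimal illustration, not the guide's own procedure: the helper name and the file names `input.mp4` and `output.mp4` are hypothetical, and the command only works on a system where FFmpeg was built with Quick Sync support as described in steps 1 to 3.

```python
import shlex

# Hardware-accelerated codecs named in the guide's introduction.
QSV_CODECS = ("h264_qsv", "mpeg2_qsv", "hevc_qsv")


def build_qsv_transcode_cmd(src: str, dst: str, codec: str = "h264_qsv") -> list:
    """Return an ffmpeg argument list that transcodes `src` to `dst` with a *_qsv codec."""
    if codec not in QSV_CODECS:
        raise ValueError(f"unsupported codec {codec!r}; expected one of {QSV_CODECS}")
    # -i selects the input file; -vcodec selects the video encoder.
    return ["ffmpeg", "-i", src, "-vcodec", codec, dst]


if __name__ == "__main__":
    # Hypothetical file names, printed as a shell-safe command line.
    print(shlex.join(build_qsv_transcode_cmd("input.mp4", "output.mp4")))
```

Building the arguments as a list keeps quoting explicit, so the same list can be passed to `subprocess.run` once a QSV-enabled FFmpeg build is installed.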

System Design for Telecommunication Gateways

P1: OTE/OTE/SPH P2: OTE FM BLBK307-Bachmutsky August 30, 2010 15:13 Printer Name: Yet to Come SYSTEM DESIGN FOR TELECOMMUNICATION GATEWAYS Alexander Bachmutsky Nokia Siemens Networks, USA A John Wiley and Sons, Ltd., Publication This edition first published 2011 © 2011 John Wiley & Sons, Ltd Registered office John Wiley & Sons Ltd, The Atrium, Southern Gate, Chichester, West Sussex, PO19 8SQ, United Kingdom For details of our global editorial offices, for customer services and for information about how to apply for permission to reuse the copyright material in this book please see our website at www.wiley.com. The right of the author to be identified as the author of this work has been asserted in accordance with the Copyright, Designs and Patents Act 1988. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording or otherwise, except as permitted by the UK Copyright, Designs and Patents Act 1988, without the prior permission of the publisher.

Intel® Quick Sync Video Technology on Intel® Iris™ Graphics

WHITE PAPER. Intel Quick Sync Video. Intel® Quick Sync Video Technology on Intel® Iris™ Graphics and Intel® HD Graphics family: Flexible Transcode Performance and Quality

The 4th generation Intel® Core™ Processor introduces Intel® Iris™ Pro Graphics, Intel® Iris™ Graphics and Intel® HD Graphics (4200+ Series), featuring the newest iteration of Intel® Quick Sync Video technology. In addition, Intel Quick Sync technology is also available in the Intel Xeon® Processor E3-1200 v3 product family with Intel HD Graphics P4600/P4700. For the purposes of this whitepaper, we will refer simply to Intel Quick Sync technology. This advanced graphics solution sets a milestone in performance by delivering a blazingly fast transcode experience, and balances image quality and performance through a series of optimized user targets for easy adoption. This paper explains the changes in Intel Quick Sync Video transcode target usages, describes the new high-performance hardware acceleration engine, and sets performance and quality expectations for each target usage.

Introduction. Video transcoding involves converting one compressed video format to another. The process can apply changes to the format, such as moving from MPEG2 to H.264, or transcoding can change the properties of a given format, like bit rate or resolution. Conversions cannot generally happen in a single step; rather, a series of transitions …

In the past, transcoding has been a compute-intensive task that demanded a large amount of precious CPU resources. With multiple cores and more powerful processors driving today’s systems, transcoding happens faster, and is commonly used to support the format requirements of a whole range of video consumption devices.

Processing Multimedia Workloads on Heterogeneous Multicore Architectures

Doctoral Dissertation. Processing Multimedia Workloads on Heterogeneous Multicore Architectures. Håkon Kvale Stensland, February 2015. Submitted to the Faculty of Mathematics and Natural Sciences at the University of Oslo in partial fulfilment of the requirements for the degree of Philosophiae Doctor. © Håkon Kvale Stensland, 2015. Series of dissertations submitted to the Faculty of Mathematics and Natural Sciences, University of Oslo No. 1601 ISSN 1501-7710. All rights reserved. No part of this publication may be reproduced or transmitted, in any form or by any means, without permission. Cover: Hanne Baadsgaard Utigard. Printed in Norway: AIT Oslo AS. Produced in co-operation with Akademika Publishing. The thesis is produced by Akademika Publishing merely in connection with the thesis defence. Kindly direct all inquiries regarding the thesis to the copyright holder or the unit which grants the doctorate.

Abstract. Processor architectures have been evolving quickly since the introduction of the central processing unit. For a very long time, one of the important means of increasing performance was to increase the clock frequency. However, in the last decade, processor manufacturers have hit the so-called power wall, with high heat dissipation. To overcome this problem, processors were designed with reduced clock frequencies but with multiple cores and, later, heterogeneous processing elements. This shift introduced a new challenge for programmers: Legacy applications, written without parallelization in mind, gain no benefits from moving to multicore and heterogeneous architectures. Another challenge for the programmers is that heterogeneous architecture designs are very different with respect to caches, memory types, execution unit organization, and so forth and, in the worst case, a programmer must completely rewrite the application to obtain the best performance on the new architecture.

Extracting and Mapping Industry 4.0 Technologies Using Wikipedia

Computers in Industry 100 (2018) 244–257. Contents lists available at ScienceDirect. Computers in Industry, journal homepage: www.elsevier.com/locate/compind

Extracting and mapping industry 4.0 technologies using wikipedia

Filippo Chiarello (a, ⁎), Leonello Trivelli (b), Andrea Bonaccorsi (a), Gualtiero Fantoni (c)
(a) Department of Energy, Systems, Territory and Construction Engineering, University of Pisa, Largo Lucio Lazzarino, 2, 56126 Pisa, Italy
(b) Department of Economics and Management, University of Pisa, Via Cosimo Ridolfi, 10, 56124 Pisa, Italy
(c) Department of Mechanical, Nuclear and Production Engineering, University of Pisa, Largo Lucio Lazzarino, 2, 56126 Pisa, Italy

Keywords: Industry 4.0; Digital industry; Industrial IoT; Big data; Digital currency; Programming languages; Computing; Embedded systems; IoT

ABSTRACT. The explosion of the interest in the industry 4.0 generated a hype on both academia and business: the former is attracted for the opportunities given by the emergence of such a new field, the latter is pulled by incentives and national investment plans. The Industry 4.0 technological field is not new but it is highly heterogeneous (actually it is the aggregation point of more than 30 different fields of the technology). For this reason, many stakeholders feel uncomfortable since they do not master the whole set of technologies, they manifested a lack of knowledge and problems of communication with other domains. Actually such problem is twofold, on one side a common vocabulary that helps domain experts to have a mutual understanding is missing Riel et al. [1], on the other side, an overall standardization effort would be beneficial to integrate existing terminologies in a reference architecture for the Industry 4.0 paradigm Smit et al.

SAE WCX Digital Summit

SAE WCX Digital Summit Technical Session Schedule. As of April 15, 2021 19:40:33 PM

Wednesday, March 17. Live Pre Event: Women's Panel - Moving Innovation Forward. Session Code WP100, Room 1, Session 12:30 p.m.

Time, Paper No., Title:
12:15 p.m. ORAL ONLY Meet and Greet with Fellow Attendees
12:30 p.m. ORAL ONLY Welcome and Opening Remarks. Terry Barclay, Inforum
12:35 p.m. Panel Roundtable Discussion - Moving Innovation Forward. With the evolution of automation, MaaS, connectivity, smart infrastructure and vehicle electrification based upon the economic climate, managers within the mobility industry are having to look at new development and implementation strategies for innovations. Hear this group of expert panelists talk about the impact of this new normal on leading teams to create innovative products based upon consumer demand and a need for safer, more efficient vehicles. Moderators - Kristin Slanina, TrueCar Inc. Panelists - Jacquelyn Birdsall, Toyota; Karen Folger, VP Automations, Bosch USA; Raelyn Holmes, R.L. Holmes Consulting LLC; Desi Ujkashevic, Director of Engineering, Ford Motor Company.
1:40 p.m. ORAL ONLY Closing Remarks. Carla Bailo, Center For Automotive Research

Tuesday, March 30. SAE Sits Down with DTE Energy and talks EVs. Session Code WC100, Room TBD, Session 11:30 a.m.

With recent OEM strategy announcements and the CA 2035 mandate, EVs are poised to make a critical market impact over the next few years, but how ready is the grid? Come hear Sean Gouda, Manager, electrification

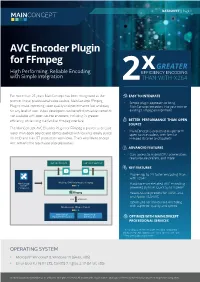

AVC Encoder Plugin for FFmpeg: High Performing, Reliable Encoding with Simple Integration

DATASHEET. AVC Encoder Plugin for FFmpeg: High Performing, Reliable Encoding with Simple Integration

For more than 25 years MainConcept has been recognized as the premier line of professional video codecs. MainConcept FFmpeg Plugins make improving video quality and performance fast and easy for any level of user. Video developers can benefit from advancements not available with open source encoders, including 2x greater efficiency, while using the familiar FFmpeg interface. The MainConcept AVC Encoder Plugin for FFmpeg is proven to encode faster than open source and comes packed with features ideally suited for VOD and live OTT production workflows. That’s why MainConcept AVC remains the top choice of professionals.

EASY TO INTEGRATE
• Simple plugin approach to bring MainConcept encoders into your new or existing FFmpeg environment

BETTER PERFORMANCE THAN OPEN SOURCE
• MainConcept is proven to outperform open source codecs, with familiar FFmpeg libraries and toolsets

ADVANCED FEATURES
• Gain access to Hybrid GPU acceleration, ready-to-use presets, and more

[Figure: LIVE / FILE INPUT; OMX INTERFACE; MainConcept FFmpeg Plugins (MainConcept Hybrid HEVC Encoder, MainConcept AVC Encoder); LIVE / FILE OUTPUT]

KEY FEATURES
• Proven up to 2X faster encoding than with x264 (1)
• Hardware-accelerated AVC encoding powered by Intel Quick Sync Video (2)
• Ready-to-use presets for DASH-264 and Apple HLS-AVC
• Optimized for low bitrate encoding with superior quality and speed

OPTIMIZE WITH MAINCONCEPT PROFESSIONAL SERVICES

(1) According to recent encoder test data comparing MainConcept AVC against x264 for a specific use case
(2) Requires additional license

OPERATING SYSTEM
• Microsoft® Windows® 8, Windows 10 (64-bit, x86)
• Linux Ubuntu 16.04 LTS, CentOS 7.4 glibc 2.17 (64-bit, x86)

© 2020 MainConcept GmbH or its affiliates.

GPU Developments 2017

GPU Developments 2017. © Copyright Jon Peddie Research 2018. All rights reserved. Reproduction in whole or in part is prohibited without written permission from Jon Peddie Research. This report is the property of Jon Peddie Research (JPR) and made available to a restricted number of clients only upon these terms and conditions. Agreement not to copy or disclose. This report and all future reports or other materials provided by JPR pursuant to this subscription (collectively, “Reports”) are protected by: (i) federal copyright, pursuant to the Copyright Act of 1976; and (ii) the nondisclosure provisions set forth immediately following. License, exclusive use, and agreement not to disclose. Reports are the trade secret property exclusively of JPR and are made available to a restricted number of clients, for their exclusive use and only upon the following terms and conditions. JPR grants site-wide license to read and utilize the information in the Reports, exclusively to the initial subscriber to the Reports, its subsidiaries, divisions, and employees (collectively, “Subscriber”). The Reports shall, at all times, be treated by Subscriber as proprietary and confidential documents, for internal use only. Subscriber agrees that it will not reproduce for or share any of the material in the Reports (“Material”) with any entity or individual other than Subscriber (“Shared Third Party”) (collectively, “Share” or “Sharing”), without the advance written permission of JPR. Subscriber shall be liable for any breach of this agreement and shall be subject to cancellation of its subscription to Reports. Without limiting this liability, Subscriber shall be liable for any damages suffered by JPR as a result of any Sharing of any Material, without advance written permission of JPR.