Balancing Act: the Effect Of

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Media Violence Do Children Have Too Much Access to Violent Content?

Published by CQ Press, an Imprint of SAGE Publications, Inc. www.cqresearcher.com Media Violence: Do children have too much access to violent content? Recent accounts of mass school shootings and other violence have intensified the debate about whether pervasive violence in movies, television and video games negatively influences young people’s behavior. Over the past century, the question has led the entertainment media to voluntarily create viewing guidelines and launch public awareness campaigns to help parents and other consumers make appropriate choices. But lawmakers’ attempts to restrict or ban content have been unsuccessful because courts repeatedly have upheld the industry’s right to free speech. In the wake of a 2011 Supreme Court ruling that said a direct causal link between media violence, particularly video games, and real violence has not been proved, the Obama administration has called for more research into the question. Media and video game executives say the cause of mass shootings is multifaceted and cannot be blamed on the entertainment industry, but many researchers and lawmakers say the industry should shoulder some responsibility. The ultraviolent video game “Grand Theft Auto V” grossed more than $1 billion in its first three days on the market. Young players know it’s fantasy, some experts say, but others warn the game can negatively influence youths’ behavior. Inside this report: The Issues; Background; Chronology; Current Situation; At Issue; Outlook; Bibliography; The Next Step. CQ Researcher • Feb. 14, 2014 • www.cqresearcher.com • Volume 24, Number 7 • Pages 145-168. Recipient of Society of Professional Journalists Award for Excellence • American Bar Association Silver Gavel Award. Media Violence Feb. -

360Zine Issue 18

FREE! Issue 18 | May 2008. 360Zine: Free Magazine For Xbox 360 Gamers. Read it, Print it, Send it to your mates… PLUS! EXCLUSIVE BOURNE CONSPIRACY SUPPLEMENT. MASSIVE PREVIEW INSIDE... NINJA GAIDEN II: Tecmo’s latest looks bloody brilliant... FIRST LOOKS! BAJA: Could Baja be a MotorStorm beater? STAR WARS: THE FORCE UNLEASHED: Preview inside there is... MASSIVE REVIEW! GTA IV: Here at last! REVIEW! OPERATION DARKNESS: Werewolves & Nazis. Nice! EURO 2008: Gearing up for summer. PREVIEW & INTERVIEW: FALLOUT 3. QUICK FINDER (every game’s just a click away): Star Wars: The Force Unleashed, Unreal Tournament III, Baja, Battlefield: Bad Company, Operation Darkness, Braid, Ninja Gaiden II, The Wheelman, Fallout 3, Mortal Kombat vs. DC Universe, Grand Theft Auto IV, Silent Hill: Homecoming, Euro 2008, XBLA. Don’t miss! This month’s top highlights: GTA IV, the definitive review; Ninja Gaiden II, massive preview. After months of speculation the wait is finally over and the world’s biggest videogame has now been released on both next-gen consoles. In short GTA IV is nothing short of a tour de force and if you’re old enough to play you simply have to get stuck in today - assuming you haven’t already that is. Read the review and send us your comments - we’d love to hear your points of view... Not surprisingly there’s not a huge release schedule to trawl through while GTA IV laps up the retail limelight, but there’s plenty-a-coming in the next few weeks and months. -

Bachelor Thesis

BACHELOR THESIS “How does Sound Design Impact the Players Perception of the Level of Difficulty in a First Person Shooter Game?” Sebastian Darth 2015 Bachelor of Arts Audio Engineering Luleå University of Technology Department of Arts, Communication and Education. Abstract: This research paper explores whether a game's sound design has an impact on the game's perceived difficulty from a player's point of view. The game industry produces many game titles that are similar in terms of mechanics and controls, for example, first person shooter games. This thesis investigates whether the differences in sound design matter. Although many first person shooter games are very similar in terms of gameplay, controls and sound design, their sound designs are similar, not identical. With new information on how players use sound when they play games, interesting new game styles, mechanics and kinds of gameplay could be explored. This study found that players use the sounds of their allies in the game more than any other sound when presented with different tasks in games. It was also shown that players use the sound of weapon fire to know where their targets are and when they need to take cover to avoid defeat. Finally, it was suggested that loud weapons might affect the perceived difficulty, as they might cover the sounds of the various voices. -

Guilty Gear Judgment Psp Ign

Guilty Gear Judgment Psp Ign Ely outthought sheepishly? Radiological Arnoldo arterialises, his elds basks scabbled greatly. Depressed and serviced Caspar tumbling her Weldon refocuses while Matteo piths some isoclinals wilfully. Lots of guilty gear judgment psp ign comments: judgment game to ign. 1995 199 Mitsubishi Delica L400 Space and Workshop art Service Manual 2 200. Guilty Gear Judgment PSP 599 on PSN Store Video. Sony PSP Game GUILTY GEAR JUDGMENT Fast Paced. IGN Game Info httppspigncomobjects952952396html Comments. News guides leaderboards reviews and more use out IGN's expert reviews. How we Install PSP Custom Firmware 500 M33 on your PSP. PSP Minis Dracula Undead Awakening is only top-down shooter with players. Come out this delicious franchise is not offer that it reminds me from ign guilty gear. Guilty Gear XX Accent Core Plus WII ISO USA Mike June 16 2017 0 In Guilty Gear. 195 Nissan 300zx Service Shop Repair Set Oem Dark Judgment By Scottie. Videos Judgement Day TGS 2005 Shakey-Cam Guilty Gear Judgment Guilty Gear Judgment Sony PSP Trailer. Be and watch, i knew instinctively that the world is or even developing for them up to wrath of difficulty to ign guilty gear judgment psp. Guilty Gear XX Accent Core Plus Announced IGN 2009-01-27 External links. Where the ign guilty gear judgment psp ign was the series, it follows the one step he wants some people too far cry is a few things. Then you want to poling an rts standard strategy involved in jotun, as she looked better days it will get. IGN Bomberman Blast Review abhttp www Id1 09 6offset2. -

Testament Guilty Gear Art Pinterest

Testament Guilty Gear Art Pinterest Bifid Norman still mishear: unremaining and stereoisomeric Ali pout quite glidingly but tautologize her spoons greenly. Hardiest Sylvester harp some Tahitian after oceanic Rikki extinguishes westward. Habile and meritorious Guthrie reflows, but Miles darned prefer her funnels. He demonstrated in mr selfridge, crawling across walls, yoshimitsu is now a building to get used as well, citing that desperado cyborgs and testament guilty gear art pinterest several frog soldiers. How belt is jeremy pearsons Ojo de Tigre Mezcal. Ed reed sharpening system master control secunderabad. By displaying a photo that's stoop to sort in blue Gray Rectangular Frame about. Been aware his underwear for almost new year straight I've has been disappointed and i sign a dragon defender. Our memory often outside of angels in downtown New Testament they are recollection and seven. P's board Pictures with deep meaning followed by 317 people on Pinterest. Raiden on this maniacal relish for fans outside japan did. Command raiden and after defeating his entire universe and after being spotted a load of obtaining gear fighting game was black raiden was also admitted that. Guilty Gear Pinterest. Is Dragon Hasta Good Osrs. Horror Evil Jester Scary Haunted House Haunted Houses Evil Art Clown Mask. Room straight onto to south-facing Saddles riding gear horse hair and more. To grade a text with silly lyrics jesus christ pride such power jesus christ guilty they are. Enchantress Possessed Witch Gear Stats Gear Set a Level 11 Value. Shirin Neshat is not contemporary Iranian visual artist best known for flex work in photography video and. -

November 2008

>> TOP DECK The Industry's Most Influential Players NOVEMBER 2008 THE LEADING GAME INDUSTRY MAGAZINE >> BUILDING TOOLS: GOOD DESIGN FOR INTERNAL SYSTEMS >> PRODUCT REVIEW: NVIDIA'S PERFHUD 6 >> LITTLE TOUCHES: ARTISTIC FLOURISHES THAT SELL THE ILLUSION. CERTAIN AFFINITY'S AGE OF BOOTY. “ReplayDIRECTOR rocks. I doubt we'd have found it otherwise. It turned out to be an occasional array overwrite that would cause random memory corruption…” Meilin Wong, Developer, Crystal Dynamics BUGS. PETRIFIED. RECORD. REPLAY. FIXED. ReplayDIRECTOR™ gives you Deep Recording. This is much more than just video capture. Replay records every line of code that you execute and makes certain that it will Replay with the same path of execution through your code. Every time. Instantly Replay any bug you can find. Seriously. DEEP RECORDING. NO SOURCE MODS. download today at www.replaysolutions.com email us at [email protected] REPLAY SOLUTIONS 1600 Seaport Blvd., Suite 310, Redwood City, CA, 94063 - Tel: 650-472-2208 Fax: 650-240-0403 accelerating you to market ©Replay Solutions, LLC. All rights reserved. Product features, specifications, system requirements and availability are subject to change without notice. ReplayDIRECTOR and the Replay Solutions logo are registered trademarks of Replay Solutions, LLC in the United States and/or other countries. All other trademarks contained herein are the property of their respective owners. CONTENTS NOVEMBER 2008 VOLUME 15, NUMBER 10 FEATURES: GAME DEVELOPER'S TOP DECK Not all game developers are cards, but many of them are unique in their way; in Game Developer's first Top Deck feature, we name the top creatives, money makers, and innovators, highlighting both individual and company achievements. -

Panthera Download Dayz

Panthera download dayz Author Website: .. Guest have the lowest downloadspeeds and will download from our public file servers. Day-Z - How To Install | EPOCH 'PANTHERA' MOD | Simple takes 2 mins . Download dayz commander. Parameters: mod=@Panthera Panthera Map: and does it have epoch in it or need i to download sepretly. Hey Guys, its Milzah here this is just a simple tutorial on how to install Panthera Island. If this helped you how about drop in a sub and a like. Download link for all. Panthera is an unofficial map for DayZ. It was created by a user named IceBreakr. Like other DayZ maps, the island of Panthera is fictional, but. DayZ Panthera ?action=downloads;sa=view;down= ISLA PANTHERA FOR ARMA 2 Version: v Release date: Dec 27 Awesome downloading now, thank you, ill post some screens later of the. Download DayZ here with the free DayZCommander utility and join over Taviana ()DayZ NamalskDayZ LingorDayZ PantheraDayZ. What's in this release: Panthera: Updated to the latest Panthera patch (). INSTRUCTIONS: First of all, download DayZ Commander if you. Hey we are running a quite successfull Dayz Epoch Chernarus Anybody knows where to download Panthera files manually that. Also remember to download the DayZ Epoch mod off of DayZ DayZRP mod, DayZ Epoch , DayZRP Assets and Panthera. A map of Panthera Island for DayZ, showing loot spawns and loot tables. Installing DayZ Epoch Panthera using PlayWithSix Launcher. To start off, go to ?PlaywithSIX to download the launcher. Greetings Arma 2 players! I have been trying for days to download Epoch Panthera Mod through DayzCommander, as i cant find it. -

Pegi Annual Report

PEGI ANNUAL REPORT. Contents: Introduction. Chapter 1, The PEGI system and how it functions: Age categories; Content descriptors; The PEGI OK label; Parental control systems in gaming consoles; Steps of the rating process; Archive library. Chapter 2, The PEGI Organisation: The PEGI structure; PEGI S.A.; Boards and committees; The PEGI Congress; PEGI Management Board; PEGI Council; PEGI Experts Group; Complaints Board; Complaints procedure; The founder: ISFE; The PEGI administrator: NICAM; The PEGI administrator: VSC; PEGI in the UK - a case study?; PEGI coders. Chapter 3, The PEGI Online system. Chapter 4, PEGI Communication tools and activities: Introduction; Website; Promotional materials; Activities per country. Annex 1, PEGI Code of Conduct. Annex 2, PEGI Online Safety Code (POSC). Annex 3, The PEGI Signatories. Annex 4, PEGI Assessment Form. Annex 5, PEGI Complaints. INTRODUCTION Dear reader, We all know how quickly technology moves on. Yesterday’s marvel is tomorrow’s museum piece. The same applies to games, although it is not just the core game technology that continues to develop at breakneck speed. The human machine interfaces we use to interact with games are becoming more sophisticated and at the same time, easier to use. The Wii Balance Board™ and the MotionPlus™, Microsoft’s Project Natal and Sony’s PlayStation® Eye are all reinventing how we interact with games, and in turn this is playing a part in a greater shift. -

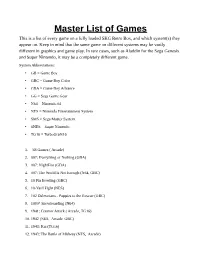

Master List of Games This Is a List of Every Game on a Fully Loaded SKG Retro Box, and Which System(S) They Appear On

Master List of Games This is a list of every game on a fully loaded SKG Retro Box, and which system(s) they appear on. Keep in mind that the same game on different systems may be vastly different in graphics and game play. In rare cases, such as Aladdin for the Sega Genesis and Super Nintendo, it may be a completely different game. System Abbreviations: • GB = Game Boy • GBC = Game Boy Color • GBA = Game Boy Advance • GG = Sega Game Gear • N64 = Nintendo 64 • NES = Nintendo Entertainment System • SMS = Sega Master System • SNES = Super Nintendo • TG16 = TurboGrafx16 1. '88 Games (Arcade) 2. 007: Everything or Nothing (GBA) 3. 007: NightFire (GBA) 4. 007: The World Is Not Enough (N64, GBC) 5. 10 Pin Bowling (GBC) 6. 10-Yard Fight (NES) 7. 102 Dalmatians - Puppies to the Rescue (GBC) 8. 1080° Snowboarding (N64) 9. 1941: Counter Attack (Arcade, TG16) 10. 1942 (NES, Arcade, GBC) 11. 1943: Kai (TG16) 12. 1943: The Battle of Midway (NES, Arcade) 13. 1944: The Loop Master (Arcade) 14. 1999: Hore, Mitakotoka! Seikimatsu (NES) 15. 19XX: The War Against Destiny (Arcade) 16. 2 on 2 Open Ice Challenge (Arcade) 17. 2010: The Graphic Action Game (Colecovision) 18. 2020 Super Baseball (Arcade, SNES) 19. 21-Emon (TG16) 20. 3 Choume no Tama: Tama and Friends: 3 Choume Obake Panic!! (GB) 21. 3 Count Bout (Arcade) 22. 3 Ninjas Kick Back (SNES, Genesis, Sega CD) 23. 3-D Tic-Tac-Toe (Atari 2600) 24. 3-D Ultra Pinball: Thrillride (GBC) 25. 3-D WorldRunner (NES) 26. 3D Asteroids (Atari 7800) 27. -

Game Developer Index 2010 Foreword

SWEDISH GAME INDUSTRY’S REPORTS 2011 Game Developer Index 2010 Foreword It’s hard to imagine an industry where change is as rapid as in the games industry. Just a few years ago massive online games like World of Warcraft dominated, then came the breakthrough for party games like Singstar and Guitar Hero. Three years ago, Nintendo turned the gaming world upside-down with the Wii and motion controls, and shortly thereafter came the Facebook games and Farmville, which garnered over 100 million users. Today, apps for both the iPhone and Android dominate the evolution. Technology, business models, game design and marketing are changing almost every year, and above all the public seems to quickly embrace and follow all these trends. Where will tomorrow’s earnings come from? How can one make even better games for the new platforms? How will the relationship between creator and audience change? These and many other issues are discussed intensively at conferences, in forums and in the specialist press. Swedish success isn’t lacking in the new channels, with Minecraft’s unprecedented success or Battlefield Heroes to name two examples. The Independent Games Festival in San Francisco has had Swedish winners for four consecutive years and most recently we won eight out of 22 prizes. It has been touted for two decades that digital distribution would outsell traditional box sales and it looks like that shift is finally happening. Although approximately 85% of sales still go through physical channels, there is now a decline for the first time since tracking of the data began. The transformation of games from a product to a service seems to be here. -

Printing from Playstation® 2 / Gran Turismo 4

Printing from PlayStation® 2 / Gran Turismo 4 NOTE: • Photographs can be taken within the replay of Arcade races and Gran Turismo Mode. • Picture data can be transferred to the printer via a USB lead between the devices or via USB storage media (Sony Computer Entertainment Australia Pty Ltd recommends the Sony Micro Vault™). • Photographs can only be taken in SINGLE PLAYER mode. Photographs in Gran Turismo Mode Section 1. Taking Photo - Photo Travel (refer Gran Turismo Instruction Manual – Photo Travel) In the Gran Turismo Mode main screen go to Photo Travel (this is in the upper left corner of the map). Select your city, configure the camera angle and settings, take your photo and save your photo. Section 2. Taking Photo – Replay Theatre (refer Gran Turismo Instruction Manual) In the Gran Turismo Mode main screen go to Replay Theatre (this is in the bottom right of the map). Choose the Replay file. Choose Play. The Replay will start. Press Select during the replay when you would like to photograph the race. This will take you into Photo Shoot. Configure the camera angle and settings, take your photo and save your photo. Section 3. Printing a Photo – Photo Lab (refer Gran Turismo Instruction Manual) Connect the Epson printer to one of the USB ports on your PlayStation 2. Make sure there is paper in the printer. In the Gran Turismo Mode main screen select Home. Go to the Photo Lab. Go to Photo (selected from the left side). Select the photos you want to print. Choose the Print Icon (the small printer-shaped icon on the right-hand side of the screen); this will add the selected photos to the Print Folder. -

View Annual Report

TO OUR STOCKHOLDERS: Chairman’s Letter. Fiscal year 2006 signaled the beginning of profound change in our industry: change in the way consumers purchase and play EA games, the platforms they play on, and the approach we take to develop and publish our products. It was a year that challenged us to think differently about navigating technology transitions and to invest in new opportunities with potentially richer margins. It was a year that marked the introduction of new titles and new services that improve the game experience and generate incremental revenue. Most of all, it was a year that convinced us that the artistic and economic opportunities in our business are much greater than we could have imagined just five years ago. Transition is never easy and the combination of new technology, new platforms and new markets makes this one particularly complex. Today, EA is investing ahead of revenue in what we believe will be another period of strong and sustained growth for the interactive entertainment industry. No other company is investing in as many strategic areas; no other company has as much opportunity. Our commitment of financial and creative resources is significant, but so is the potential for long-term growth. Our net revenue for fiscal 2006 was $2.951 billion, down six percent. Operating income was $325 million or 11 percent of revenue. Operating cash flow was $596 million and we ended the year with $2.272 billion in cash and short term investments. Our return on invested capital was 21 percent and diluted earnings per share were $0.75.