Introduction to the ZFS Filesystem

Recommended publications

Elastic Storage for Linux on System z, IBM Research & Development Germany

Peter Münch, T/L Test and Development – Elastic Storage for Linux on System z, IBM Research & Development Germany. Elastic Storage for Linux on IBM System z. © Copyright IBM Corporation 2014. Session objectives: This presentation introduces Elastic Storage, based on General Parallel File System technology, which will be available for Linux on IBM System z. Understand the concepts of Elastic Storage and which functions will be available for Linux on System z. Learn how you can integrate and benefit from Elastic Storage in a Linux on System z environment. Finally, get your first impression in a live demo of Elastic Storage. Trademarks: The following are trademarks of the International Business Machines Corporation in the United States and/or other countries: AIX*, DB2*, DS8000*, ECKD, FlashSystem, IBM*, IBM (logo)*, MQSeries*, Storwize*, System p*, System x*, System z*, Tivoli*, WebSphere*, XIV*, z/VM* (* Registered trademarks of IBM Corporation). The following are trademarks or registered trademarks of other companies. Adobe, the Adobe logo, PostScript, and the PostScript logo are either registered trademarks or trademarks of Adobe Systems Incorporated in the United States, and/or other countries. Cell Broadband Engine is a trademark of Sony Computer Entertainment, Inc. in the United States, other countries, or both and is used under license therefrom. Intel, Intel logo, Intel Inside, Intel Inside logo, Intel Centrino, Intel Centrino logo, Celeron, Intel Xeon, Intel SpeedStep, Itanium, and Pentium are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries.

FreeNAS® 11.0 User Guide

FreeNAS® 11.0 User Guide, June 2017 Edition. FreeNAS® is © 2011-2017 iXsystems. FreeNAS® and the FreeNAS® logo are registered trademarks of iXsystems. FreeBSD® is a registered trademark of the FreeBSD Foundation. Written by users of the FreeNAS® network-attached storage operating system. Version 11.0. Copyright © 2011-2017 iXsystems (https://www.ixsystems.com/). Contents: Welcome; Typographic Conventions; 1 Introduction; 1.1 New Features in 11.0; 1.2 Hardware Recommendations; 1.2.1 RAM; 1.2.2 The Operating System Device; 1.2.3 Storage Disks and Controllers; 1.2.4 Network Interfaces; 1.3 Getting Started with ZFS; 2 Installing and Upgrading; 2.1 Getting FreeNAS®; 2.2 Preparing the Media; 2.2.1 On FreeBSD or Linux; 2.2.2 On Windows; 2.2.3 On OS X; 2.3 Performing the Installation; 2.4 Installation Troubleshooting; 2.5 Upgrading; 2.5.1 Caveats:

Protocols: 0-9, A

Protocols: 0-9, A • 3COM-AMP3, on page 4 • 3COM-TSMUX, on page 5 • 3PC, on page 6 • 4CHAN, on page 7 • 58-CITY, on page 8 • 914C G, on page 9 • 9PFS, on page 10 • ABC-NEWS, on page 11 • ACAP, on page 12 • ACAS, on page 13 • ACCESSBUILDER, on page 14 • ACCESSNETWORK, on page 15 • ACCUWEATHER, on page 16 • ACP, on page 17 • ACR-NEMA, on page 18 • ACTIVE-DIRECTORY, on page 19 • ACTIVESYNC, on page 20 • ADCASH, on page 21 • ADDTHIS, on page 22 • ADOBE-CONNECT, on page 23 • ADWEEK, on page 24 • AED-512, on page 25 • AFPOVERTCP, on page 26 • AGENTX, on page 27 • AIRBNB, on page 28 • AIRPLAY, on page 29 • ALIWANGWANG, on page 30 • ALLRECIPES, on page 31 • ALPES, on page 32 • AMANDA, on page 33 • AMAZON, on page 34 • AMEBA, on page 35 • AMAZON-INSTANT-VIDEO, on page 36 • AMAZON-WEB-SERVICES, on page 37 • AMERICAN-EXPRESS, on page 38 • AMINET, on page 39 • AN, on page 40 • ANCESTRY-COM, on page 41 • ANDROID-UPDATES, on page 42 • ANET, on page 43 • ANSANOTIFY, on page 44 • ANSATRADER, on page 45 • ANY-HOST-INTERNAL, on page 46 • AODV, on page 47 • AOL-MESSENGER, on page 48 • AOL-MESSENGER-AUDIO, on page 49 • AOL-MESSENGER-FT, on page 50 • AOL-MESSENGER-VIDEO, on page 51 • AOL-PROTOCOL, on page 52 • APC-POWERCHUTE, on page 53 • APERTUS-LDP, on page 54 • APPLEJUICE, on page 55 • APPLE-APP-STORE, on page 56 • APPLE-IOS-UPDATES, on page 57 • APPLE-REMOTE-DESKTOP, on page 58 • APPLE-SERVICES, on page 59 • APPLE-TV-UPDATES, on page 60 • APPLEQTC, on page 61 • APPLEQTCSRVR, on page 62 • APPLIX, on page 63 • ARCISDMS,

Persistent 9P Sessions for Plan 9

Persistent 9P Sessions for Plan 9. Gorka Guardiola, [email protected]; Russ Cox, [email protected]; Eric Van Hensbergen, [email protected]. ABSTRACT: Traditionally, Plan 9 [5] runs mainly on local networks, where lost connections are rare. As a result, most programs, including the kernel, do not bother to plan for their file server connections to fail. These programs must be restarted when a connection does fail. If the kernel’s connection to the root file server fails, the machine must be rebooted. This approach suffices only because lost connections are rare. Across long distance networks, where connection failures are more common, it becomes woefully inadequate. To address this problem, we wrote a program called recover, which proxies a 9P session on behalf of a client and takes care of redialing the remote server and reestablishing connection state as necessary, hiding network failures from the client. This paper presents the design and implementation of recover, along with performance benchmarks on Plan 9 and on Linux. 1. Introduction: Plan 9 is a distributed system developed at Bell Labs [5]. Resources in Plan 9 are presented as synthetic file systems served to clients via 9P, a simple file protocol. Unlike file protocols such as NFS, 9P is stateful: per-connection state such as which files are opened by which clients is maintained by servers. Maintaining per-connection state allows 9P to be used for resources with sophisticated access control policies, such as exclusive-use lock files and chat session multiplexers. It also makes servers easier to implement, since they can forget about file ids once a connection is lost.

IBM Spectrum Scale CSI Driver for Container Persistent Storage

IBM Spectrum Scale CSI Driver for Container Persistent Storage. Abhishek Jain, Andrew Beattie, Daniel de Souza Casali, Deepak Ghuge, Harald Seipp, Kedar Karmarkar, Muthu Muthiah, Pravin P. Kudav, Sandeep R. Patil, Smita Raut, Yadavendra Yadav. IBM Redbooks Redpaper, April 2020, REDP-5589-00. Note: Before using this information and the product it supports, read the information in “Notices” on page ix. First Edition (April 2020). This edition applies to Version 5, Release 0, Modification 4 of IBM Spectrum Scale. © Copyright International Business Machines Corporation 2020. Note to U.S. Government Users Restricted Rights -- Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp. Contents: Notices; Trademarks; Preface; Authors; Now you can become a published author, too; Comments welcome; Stay connected to IBM Redbooks; Chapter 1. IBM Spectrum Scale and Containers Introduction; 1.1 Abstract; 1.2 Assumptions; 1.3 Key concepts and terminology; 1.3.1 IBM Spectrum Scale; 1.3.2 Container runtime; 1.3.3 Container Orchestration System; 1.4 Introduction to persistent storage for containers Flex volumes; 1.4.1 Static provisioning; 1.4.2 Dynamic provisioning; 1.4.3 Container Storage Interface (CSI); 1.4.4 Advantages of using IBM Spectrum Scale storage for containers; Chapter 2. Architecture of IBM Spectrum Scale CSI Driver; 2.1 CSI Component Overview; 2.2 IBM Spectrum Scale CSI driver architecture.

FOSDEM 2017 Schedule

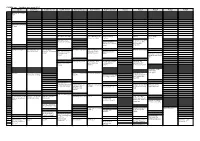

FOSDEM 2017 - Saturday 2017-02-04 (1/9) Janson K.1.105 (La H.2215 (Ferrer) H.1301 (Cornil) H.1302 (Depage) H.1308 (Rolin) H.1309 (Van Rijn) H.2111 H.2213 H.2214 H.3227 H.3228 Fontaine)… 09:30 Welcome to FOSDEM 2017 09:45 10:00 Kubernetes on the road to GIFEE 10:15 10:30 Welcome to the Legal Python Winding Itself MySQL & Friends Opening Intro to Graph … Around Datacubes Devroom databases Free/open source Portability of containers software and drones Optimizing MySQL across diverse HPC 10:45 without SQL or touching resources with my.cnf Singularity Welcome! 11:00 Software Heritage The Veripeditus AR Let's talk about The State of OpenJDK MSS - Software for The birth of HPC Cuba Game Framework hardware: The POWER Make your Corporate planning research Applying profilers to of open. CLA easy to use, aircraft missions MySQL Using graph databases please! 11:15 in popular open source CMSs 11:30 Jockeying the Jigsaw The power of duck Instrumenting plugins Optimized and Mixed License FOSS typing and linear for Performance reproducible HPC Projects algrebra Schema Software deployment 11:45 Incremental Graph Queries with 12:00 CloudABI LoRaWAN for exploring Open J9 - The Next Free It's time for datetime Reproducible HPC openCypher the Internet of Things Java VM sysbench 1.0: teaching Software Installation on an old dog new tricks Cray Systems with EasyBuild 12:15 Making License 12:30 Compliance Easy: Step Diagnosing Issues in Webpush notifications Putting Your Jobs Under Twitter Streaming by Open Source Step. Java Apps using for Kinto Introducing gh-ost the Microscope using Graph with Gephi Thermostat and OGRT Byteman. -

Advanced Services for Oracle Hierarchical Storage Manager

ORACLE DATA SHEET: Advanced Services for Oracle Hierarchical Storage Manager. The complex challenge of managing the data lifecycle is simply about putting the right data, on the right storage tier, at the right time. Oracle Hierarchical Storage Manager software enables you to reduce the cost of managing data and storing vast data repositories by providing a powerful, easily managed, cost-effective way to access, retain, and protect data over its entire lifecycle. However, your organization must ensure that your archive software is configured and optimized to meet strategic business needs and regulatory demands. Oracle Advanced Services for Oracle Hierarchical Storage Manager delivers the configuration expertise, focused reviews, and proactive guidance to help optimize the effectiveness of your solution, all delivered by Oracle Advanced Support Engineers. KEY BENEFITS • Preproduction Readiness Services including critical patches and updates, using proven methodologies and recommended practices • Production Optimization Services including configuration reviews and performance reviews to analyze existing. Simplify Storage Management: Putting the right information on the appropriate tier can reduce storage costs and maximize return on investment (ROI) over time. Oracle Hierarchical Storage Manager software actively manages data between storage tiers to let companies exploit the substantial acquisition and operational cost differences between high-end disk drives, SATA drives, and tape devices. Oracle Hierarchical Storage Manager software provides

Installing Oracle GoldenGate

Oracle® Fusion Middleware Installing Oracle GoldenGate 12c (12.3.0.1) E85215-07 November 2018. Copyright © 2017, 2018, Oracle and/or its affiliates. All rights reserved. This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing. If this is software or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, then the following notice is applicable: U.S. GOVERNMENT END USERS: Oracle programs, including any operating system, integrated software, any programs installed on the hardware, and/or documentation, delivered to U.S. Government end users are "commercial computer software" pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations. As such, use, duplication, disclosure, modification, and adaptation of the programs, including any operating system, integrated software, any programs installed on the hardware, and/or documentation, shall be subject to license terms and license restrictions applicable to the programs.

Why Did We Choose FreeBSD?

Why Did We Choose FreeBSD? Index: Why FreeBSD in General? Why FreeBSD Rather than Linux? Why FreeBSD Rather than Windows? Why Did We Choose FreeBSD in General? We are using FreeBSD version 6.1. Here are some more specific features which make it appropriate for use in an ISP environment: Very stable, especially under load, as shown by long-term use in large service providers. FreeBSD is a community-supported project which you can be confident is not going to 'go commercial' or start charging any license fees. A single source tree which contains both the kernel and all the rest of the code needed to build a complete base system. Contrast this with Linux, which has one kernel but hundreds of distributions to choose from, which may come and go over time. Scalability features as standard: e.g. pwd.db (indexed password database), which gives you much better performance and scales well for very large sites. Superior TCP/IP stack that responds well to extremely heavy load. Multiple firewall packages built in to the base system (IPF, IPFW, PF). High-end debugging and tracing tools, including the recently announced port of the Sun Dynamic Tracing tool, DTrace, to FreeBSD. Ability to gather fine-grained statistics on system performance using many included utilities like systat, gstat, iostat, di, swapinfo, disklabel, etc. Items such as software RAID are supported using multiple utilities (ata, ccd, vinum, geom). RAID-1 using GEOM Mirror (see gmirror) supports identical disk sets, or identical disk slices. Take a look at the most stable web sites according to NetCraft (http://news.netcraft.com/archives/2006/06/06/six_hosting_companies_most_reliable_hoster_in_may.html).

TrueNAS® 11.3-U5 User Guide

TrueNAS® 11.3-U5 User Guide. Note: Starting with version 12.0, FreeNAS and TrueNAS are unifying (https://www.ixsystems.com/blog/freenas-truenas-unification/) into “TrueNAS”. Documentation for TrueNAS 12.0 and later releases has been unified and moved to the TrueNAS Documentation Hub (https://www.truenas.com/docs/). Warning: To avoid the potential for data loss, iXsystems must be contacted before replacing a controller or upgrading to High Availability. Copyright iXsystems 2011-2020. TrueNAS® and the TrueNAS® logo are registered trademarks of iXsystems. Contents: Welcome; Typographic Conventions; 1 Introduction; 1.1 Contacting iXsystems; 1.2 Path and Name Lengths; 1.3 Using the Web Interface; 1.3.1 Tables and Columns; 1.3.2 Advanced Scheduler; 1.3.3 Schedule Calendar; 1.3.4 Changing TrueNAS® Settings; 1.3.5 Web Interface Troubleshooting; 1.3.6 Help Text; 1.3.7 Humanized Fields; 1.3.8 File Browser; 2 Initial Setup; 2.1 Hardware

BSD UNIX Toolbox 1000+ Commands for FreeBSD, OpenBSD

BSD UNIX® Toolbox: 1000+ Commands for FreeBSD®, OpenBSD, and NetBSD® Power Users. Christopher Negus, François Caen. Published by Wiley Publishing, Inc., 10475 Crosspoint Boulevard, Indianapolis, IN 46256, www.wiley.com. Copyright © 2008 by Wiley Publishing, Inc., Indianapolis, Indiana. Published simultaneously in Canada. ISBN: 978-0-470-37603-4. Manufactured in the United States of America. 10 9 8 7 6 5 4 3 2 1. Library of Congress Cataloging-in-Publication Data is available from the publisher. No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, scanning or otherwise, except as permitted under Sections 107 or 108 of the 1976 United States Copyright Act, without either the prior written permission of the Publisher, or authorization through payment of the appropriate per-copy fee to the Copyright Clearance Center, 222 Rosewood Drive, Danvers, MA 01923, (978) 750-8400, fax (978) 646-8600. Requests to the Publisher for permission should be addressed to the Legal Department, Wiley Publishing, Inc., 10475 Crosspoint Blvd., Indianapolis, IN 46256, (317) 572-3447, fax (317) 572-4355, or online at http://www.wiley.com/go/permissions.

Oracle ZFS Storage Appliance: Ideal Storage for Virtualization and Private Clouds

Oracle ZFS Storage Appliance: Ideal Storage for Virtualization and Private Clouds. ORACLE WHITE PAPER | MARCH 2017. Table of Contents: Introduction; The Value of Having the Right Storage for Virtualization and Private Cloud Computing; Scalable Performance, Efficiency and Reliable Data Protection; Improving Operational Efficiency; A Single Solution for Multihypervisor or Single Hypervisor Environments; Maximizing VMware VM Availability, Manageability, and Performance with Oracle ZFS Storage Appliance Systems; Availability; Manageability; Performance; Highly Efficient Oracle VM Environments with Oracle ZFS Storage Appliance; Private Cloud Integration; Conclusion. Introduction: The Oracle ZFS Storage Appliance family of products can support 10x more virtual machines (VMs) per storage system (compared to conventional NAS filers), while reducing cost and complexity and improving performance. Data center managers have learned that virtualization environment service-level agreements (SLAs) can live and die on the behavior of the storage supporting them. Oracle ZFS Storage Appliance products are rapidly becoming the systems of choice for some of the world’s most complex virtualization environments for three simple reasons. Their cache-centric architecture combines DRAM and flash, which is ideal for multiple and diverse workloads. Their sophisticated multithreading processing environment easily handles many concurrent data streams and the unparalleled