TABLE of CONTENTS S.No DATE TOPIC PAGE NO. UNIT I OPERATING SYSTEM OVERVIEW 1 Computer System Overview 1 2 Basic Elements 6 3 In

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Computer Architectures an Overview

Computer Architectures An Overview PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information. PDF generated at: Sat, 25 Feb 2012 22:35:32 UTC Contents Articles Microarchitecture 1 x86 7 PowerPC 23 IBM POWER 33 MIPS architecture 39 SPARC 57 ARM architecture 65 DEC Alpha 80 AlphaStation 92 AlphaServer 95 Very long instruction word 103 Instruction-level parallelism 107 Explicitly parallel instruction computing 108 References Article Sources and Contributors 111 Image Sources, Licenses and Contributors 113 Article Licenses License 114 Microarchitecture 1 Microarchitecture In computer engineering, microarchitecture (sometimes abbreviated to µarch or uarch), also called computer organization, is the way a given instruction set architecture (ISA) is implemented on a processor. A given ISA may be implemented with different microarchitectures.[1] Implementations might vary due to different goals of a given design or due to shifts in technology.[2] Computer architecture is the combination of microarchitecture and instruction set design. Relation to instruction set architecture The ISA is roughly the same as the programming model of a processor as seen by an assembly language programmer or compiler writer. The ISA includes the execution model, processor registers, address and data formats among other things. The Intel Core microarchitecture microarchitecture includes the constituent parts of the processor and how these interconnect and interoperate to implement the ISA. The microarchitecture of a machine is usually represented as (more or less detailed) diagrams that describe the interconnections of the various microarchitectural elements of the machine, which may be everything from single gates and registers, to complete arithmetic logic units (ALU)s and even larger elements. -

Advanced Unix/Linux System Program Instructor: William W.Y

Advanced Unix/Linux System Program Instructor: William W.Y. Hsu › Course preliminaries › Introduction CONTENTS › Unix history › Unix basics 2/22/2018 INTRODUCTION TO COMPETITIVE PROGRAMMING 2 About this class › The class is called “Advanced Unix/Linux System Programming”. › It is not: – an introduction to Unix – an introduction to programming – an introduction to C (or C++) › 2/22/2018 INTRODUCTION TO COMPETITIVE PROGRAMMING 3 In a nutshell: the “what” 2/22/2018 INTRODUCTION TO COMPETITIVE PROGRAMMING 4 In a nutshell: the “what” 2/22/2018 ADVANCED UNIX/LINUX SYSTEM PROGRAMMING 5 In a nutshell: the “what” › Gain an understanding of the UNIX operating systems. › Gain (systems) programming experience. › Understand fundamental OS concepts (with focus on UNIX family): multi-user concepts. – Basic and advanced I/O – Process relationships – Interprocess communication – Basic network programming using a client/server model 2/22/2018 ADVANCED UNIX/LINUX SYSTEM PROGRAMMING 6 In a nutshell: the “why” › Understanding how UNIX works gives you insights in other OS concepts. › System level programming experience is invaluable as it forms the basis for most other programming and even use of the system. › System level programming in C helps you understand general programming concepts. › Most higher level programming languages (eventually) call (or implement themselves) standard C library functions. 2/22/2018 ADVANCED UNIX/LINUX SYSTEM PROGRAMMING 7 In a nutshell: the “how” static char dot[] = ".", *dotav[] = {dot, NULL}; struct winsize win; int ch, fts_options; int kflag = 0; const char *p; setprogname(argv[0]); setlocale(LC_ALL, ""); /* Terminal defaults to -Cq, non-terminal defaults to -1. */ if (isatty(STDOUT_FILENO)) { if (ioctl(STDOUT_FILENO, TIOCGWINSZ, &win) == 0 && win.ws_col > 0) termwidth = win.ws_col; f_column = f_nonprint = 1; } else f_singlecol = 1; /* Root is -A automatically. -

Advanced Programming in the UNIX Environment

CS631-AdvancedProgrammingintheUNIXEnvironment Slide1 CS631 - Advanced Programming in the UNIX Environment Department of Computer Science Stevens Institute of Technology Jan Schaumann [email protected] https://www.cs.stevens.edu/~jschauma/631/ Lecture 01: Introduction, UNIX history, UNIX Programming Basics August 27, 2018 CS631-AdvancedProgrammingintheUNIXEnvironment Slide2 New Rules Close your laptops! Lecture 01: Introduction, UNIX history, UNIX Programming Basics August 27, 2018 CS631-AdvancedProgrammingintheUNIXEnvironment Slide3 New Rules Close your laptops! Open your eyes! (Mind, too.) Lecture 01: Introduction, UNIX history, UNIX Programming Basics August 27, 2018 CS631-AdvancedProgrammingintheUNIXEnvironment Slide4 So far, so good... What questions do you have? Lecture 01: Introduction, UNIX history, UNIX Programming Basics August 27, 2018 CS631-AdvancedProgrammingintheUNIXEnvironment Slide5 About this class The class is called “Advanced Programming in the UNIX Environment”. It is not called: “An Introduction to Unix” “An Introduction to Programming” “An introduction to C” Lecture 01: Introduction, UNIX history, UNIX Programming Basics August 27, 2018 CS631-AdvancedProgrammingintheUNIXEnvironment Slide6 What is it? https://www.bell-labs.com/usr/dmr/www/chist.html Lecture 01: Introduction, UNIX history, UNIX Programming Basics August 27, 2018 CS631-AdvancedProgrammingintheUNIXEnvironment Slide7 In a nutshell: the ”what” $ ls /bin [ csh ed ls pwd sleep cat date expr mkdir rcmd stty chio dd hostname mt rcp sync chmod df kill mv -

Acorn Technical Publications Style Guide Copyright © Acorn Computers Limited 1997

Acorn Technical Publications Style Guide Copyright © Acorn Computers Limited 1997. All rights reserved. Updates and changes copyright © 2018 RISC OS Open Ltd. All rights reserved. Issue 1 published by Acorn Computers Technical Publications Department. Issue 2 published by Acorn Computers Technical Publications Department. Issue 3 published by RISC OS Open Ltd. No part of this publication may be reproduced or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording or otherwise, or stored in any retrieval system of any nature, without the written permission of the copyright holder and the publisher, application for which shall be made to the publisher. The product described in this manual is not intended for use as a critical component in life support devices or any system in which failure could be expected to result in personal injury. The product described in this manual is subject to continuous development and improvement. All information of a technical nature and particulars of the product and its use (including the information and particulars in this manual) are given by the publisher in good faith. However, the publisher cannot accept any liability for any loss or damage arising from the use of any information or particulars in this manual. If you have any comments on this manual, please complete the form at the back of the manual and send it to the address given there. All trademarks are acknowledged as belonging to their respective owners. Published by RISC OS Open Ltd. Issue 1, January 1992 (Acorn part number AKJ17). Issue 2, August 1997 (Acorn part number 0472,501). -

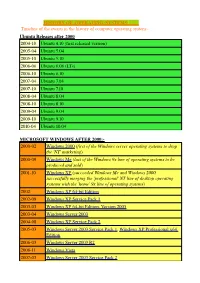

HISTORY of OPERATING SYSTEMS Timeline of The

HISTORY OF OPERATING SYSTEMS Timeline of the events in the history of computer operating system:- Ubuntu Releases after 2000 2004-10 Ubuntu 4.10 (first released version) 2005-04 Ubuntu 5.04 2005-10 Ubuntu 5.10 2006-06 Ubuntu 6.06 (LTs) 2006-10 Ubuntu 6.10 2007-04 Ubuntu 7.04 2007-10 Ubuntu 7.10 2008-04 Ubuntu 8.04 2008-10 Ubuntu 8.10 2009-04 Ubuntu 9.04 2009-10 Ubuntu 9.10 2010-04 Ubuntu 10.04 MICROSOFT WINDOWS AFTER 2000:- 2000-02 Windows 2000 (first of the Windows server operating systems to drop the ©NT© marketing) 2000-09 Windows Me (last of the Windows 9x line of operating systems to be produced and sold) 2001-10 Windows XP (succeeded Windows Me and Windows 2000, successfully merging the ©professional© NT line of desktop operating systems with the ©home© 9x line of operating systems) 2002 Windows XP 64-bit Edition 2002-09 Windows XP Service Pack 1 2003-03 Windows XP 64-bit Edition, Version 2003 2003-04 Windows Server 2003 2004-08 Windows XP Service Pack 2 2005-03 Windows Server 2003 Service Pack 1, Windows XP Professional x64 Edition 2006-03 Windows Server 2003 R2 2006-11 Windows Vista 2007-03 Windows Server 2003 Service Pack 2 2007-11 Windows Home Server 2008-02 Windows Vista Service Pack 1, Windows Server 2008 2008-04 Windows XP Service Pack 3 2009-05 Windows Vista Service Pack 2 2009-10 Windows 7(22 occtober 2009), Windows Server 2008 R2 EVENT IN HISTORY OF OS SINCE 1954:- 1950s 1954 MIT©s operating system made for UNIVAC 1103 1955 General Motors Operating System made for IBM 701 1956 GM-NAA I/O for IBM 704, based on General Motors -

Acorn R140 RISC Ix X.Desktop Guide

ACORN~ RISC iX X.desktop GUIDE 3034/21382 Copyright© Acorn Computen, L1mited 1988 X.desktop has been developed by lXI Limited Neither the whole nor any part of the information contained in, nor the produc.t dc~crihed in th1s manual may be adapted or reproduced in any material form except wil h the prior written approval of Acorn Computers Limited. The product described in this manual and product~ for use with it are subject to continuou~ development and unprovement. All information of a technical nature and particulars of the product and its ust.: (including the informal ion and particulars in 1his manual) are given by Acorn Computers Limited in good faith. However, Acorn Computers Limited cannot accept any liability for any loss or damage ansmg from the usc of any information or particulars in this manual. All correspondence should be addressed to: Cul>mmer Support and Service Acorn Computers Limited Fulbourn Road Cherry Himon Cambridge CBI 4JN X.desktop, lXI, and the lXI logo are trademarb or registered trademarks of IXI Lunitcd. ACORN is a 1radcmark of Acorn Computers Limited. UN I X is a trademark of AT& T. Published December 1988: Issue A ISBN I 85250 059 X Published by Acorn Computerlt Limited Pari Number 0483,749 ii Contents Part 1: About the X.desktop User Guide 3 X.desktop User Conventions used m this guide 3 Guide ( ;etting (urthl'r informalion 4 Introducing X.desktop 5 X.deskwp and RISC iX 5 Using your desktop 7 Using the icons 8 u~ing the menus 10 Organbing the desktop 1) Managing directories 17 Looking at the conrcnt~ 17 -

Riscixfs Module 37 Security on the System 42

Rl40 j •• ' • The choice of experience. ACORN R/40 • I I • I I • ... • 1be choice of experience Copyright © Acorn Computers Limited 1989 Designed and written by Acorn Computers Technical Publications Department Neither the whole nor any part of the information contained in, or the product described in this Guide may be adapted or reproduced in any material form except with the prior written approval of Acorn Computers Limited. The products described in this Guide are subject to continuous develop m n t and improvement. All information of a technical nature and particulars <)f the product and their use (including the information and particulars in th l t-~ Guide) are given by Acorn Computers Limited in good faith. However, A {H 'l Computers Limited cannot accept any liability for any loss or damage nrisi 1l J from the use of any information or particulars in this Guide. • If you have any comments on this Guide, please complete and return th ' ' Hill at the back of this Guide to the address given there. All other correSfHH \ 1 ~ t \ • should be addressed to: Customer Support and Service Acorn Computers Limited • Fulbourn Road Cambridge CB1 4JN Information can also be obtained from the Acorn Support lt1f t HJ tltl • Database (SID). This is a direct dial viewdata system available to H.,, t t I \. SID users. Initially, access SID on Cambridge (0223) 243642: thiH v Ill dl you to inspect the system and use a response frame for registration. ACORN and ARCHIMEDES are trademarks of Acorn Computers L itn lt · I. POSTSCRIPT is a trademark of Adobe Systems, Inc. -

APP215 a World of Standards Acorn R140 1St Edition January 1989

A WORLD OF STANDARDS ACORN RI4O A WORLD OF STANDARDS The Acorn R140 workstation represents a The networking capabilities of the major price breakthrough for UNIX systems. R140 workstation present new Acorn has exploited the performance of its opportunities in education and research to extend existing multi-user award-winning 32-bit RISC processor to systems or to create new installations produce the first in a series of personal that have previously been ruled out workstations running the UNIX operating on the grounds of cost. system at a price below that of any comparable product. PROCESSINGOPEN DISTRIBUTED The RISC processor designed and developed in the UK by Acorn is an outstanding Due to its adherence to a number of example of pioneering technology leading to industry standards including Berkeley radical cost savings. By launching the R140 UNIX, Ethernet, TCP/IP protocols workstation, Acorn has transformed the economics of making for networking, Yellow Pages, NFS for file access across the network and UNIX available to users in all fields. X Windows, the Acorn R140 Combining processing power, windowing and graphics, in-built presents an affordable way of developing or expanding an open data storage and standard UNIX software, the Acorn R140 distributed processing system. workstation supplies low-cost desktop power to the user whilst Acorn R140s can he connected to maintaining full connectivity with other workstations, PCs and mini and mainframe computers as multi-user systems. well as to workstations from Sun, Apollo, Digital Equipment, -

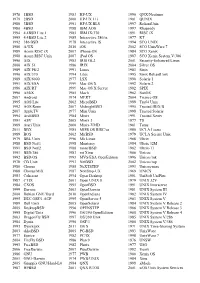

Timeline of Operating Systems - Wikipedia, the Free Encyclopedia

Timeline of operating systems - Wikipedia, the free encyclopedia http://en.wikipedia.org/wiki/Timeline_of_operating_systems Timeline of operating systems From Wikipedia, the free encyclopedia This article presents a timeline of events in the history of computer operating systems from 1951 to 2009. For a narrative explaining the overall developments, see the History of operating systems. Contents 1 1950s 2 1960s 3 1970s 4 1980s 5 1990s 6 2000s 7 See also 7.1 Category links 8 References 9 External links 1950s 1951 LEO I 'Lyons Electronic Office'[1] was the commercial development of EDSAC computing platform, supported by British firm J. Lyons and Co. 1954 MIT's operating system made for UNIVAC 1103[2] 1955 General Motors Operating System made for IBM 701[3] 1956 GM-NAA I/O for IBM 704, based on General Motors Operating System 1957 Atlas Supervisor (Manchester University) (Atlas computer project start) BESYS (Bell Labs), for IBM 7090 and IBM 7094 1958 University of Michigan Executive System (UMES), for IBM 704, 709, and 7090 1959 SHARE Operating System (SOS), based on GM-NAA I/O 1 of 16 2/24/11 9:28 PM Timeline of operating systems - Wikipedia, the free encyclopedia http://en.wikipedia.org/wiki/Timeline_of_operating_systems 1960s 1960 IBSYS (IBM for its 7090 and 7094) 1961 CTSS (MIT's Compatible Time-Sharing System for the IBM 7094) MCP (Burroughs Master Control Program) 1962 Atlas Supervisor (Manchester University) (Atlas computer commissioned) GCOS (GE's General Comprehensive Operating System, originally GECOS, General Electric Comprehensive -

Sistema Operativo

Sistema Operativo L.I. Francisco Ruiz Sala Instituto de Astronomía UNAM Definición: Un Sistema Operativo del acrónimo en ingles OS (Operating System) es un programa o programas que gestiona los recursos de hardware y provee servicios y las aplicaciones, una herramientas de software que permiten la interacción con el núcleo o Kernel (Nucleo) y este es el encargado de enviar e interpretar las instrucciones en código de máquina. Evolución histórica 1ª Generación (1945 -1955) Estas máquinas estaban construidas por medio de tubos de vacío. Eran programadas en lenguaje de máquina. 2ª Generación (1955 – 1965) Las características de la segunda generación son las siguientes: Están construidas con circuitos de transistores. Se programan en nuevos lenguajes nemotécnicos como el Ensamblador. 3ª Generación (1965 – 1975) Las características de esta generación fueron las siguientes: Su fabricación electrónica está basada en circuitos integrados. Su manejo es por medio de los lenguajes de control de los sistemas operativos, Lenguajes de Alto Nivel. 4ª Generación (1975 – 1990) Aquí aparecen los microprocesadores que es un gran adelanto de la microelectrónica, son circuitos integrados de alta densidad y con una velocidad impresionante. Las microcomputadoras con base en estos circuitos son extremadamente pequeñas y baratas, por lo que su uso se extiende al mercado industrial. Aquí nacen las computadoras personales, Lenguajes Interpretes y de Alto nivel. Tipos de SO: -Monoprocesos, Multiprocesos, Monousuarios y multiusuarios Kernel: El núcleo o Kernel (de la raíz germánica Kern, núcleo, hueso) es la parte fundamental del sistema operativo, y se define como la parte que se ejecuta en modo privilegiado (conocido también como modo núcleo). -

1978 1BSD 1979 2BSD 1980 3BSD 1980 4BSD 1994 4.4BSD Lite 1

1978 1BSD 1983 HP-UX 1996 QNX/Neutrino 1979 2BSD 2000 HP-UX 11i 1981 QUNIX 1980 3BSD 1991 HP-UX BLS 1997 ReliantUnix 1980 4BSD 1988 IBM AOS 1997 Rhapsody 1994 4.4BSD Lite 1 1985 IBM IX/370 1991 RISC iX 1995 4.4BSD Lite 2 1985 Interactive 386/ix 1977 RT 1992 386 BSD 1978 Interactive IS 1994 SCO UNIX 1986 A/UX 2010 iOS 2002 SCO UnixWare 7 1989 Acorn RISC iX 2007 iPhone OS 1984 SCO Xenix 1988 Acorn RISC Unix 2007 iPod OS 1987 SCO Xenix System V/386 1990 AIX 1983 IRIS GL2 2001 Security-Enhanced Linux 2000 AIX 5L 1986 IRIX 2004 Silver OS 1989 AIX PS/2 1991 Linux 1983 Sinix 1990 AIX/370 1994 Lites 1995 Sinix ReliantUnix 1989 AIX/6000 1977 LSX 1990 Solaris 1 1991 AIX/ESA 1999 Mac OS X 1992 Solaris 2 1986 AIX/RT 1999 Mac OS X Server 1982 SPIX 1990 AMiX 1985 Mach 1982 SunOS 2007 Android 1974 MERT 2004 Triance OS 1995 AOS Lite 2002 MicroBSD 1999 Tru64 Unix 1992 AOS Reno 2007 MidnightBSD 1995 Trusted IRIX/B 2007 AppleTV 1977 Mini Unix 1998 Trusted Solaris 1994 ArchBSD 1984 Minix 1991 Trusted Xenix 1991 ASV 2005 Minix 3 1977 TS 1989 Atari Unix 2000 Minix-VMD 1981 Tunis 2011 BBX 1985 MIPS OS RISC/os 1980 UCLA Locus 1989 BOS 2002 MirBSD 1979 UCLA Secure Unix 1979 BRL Unix 1996 Mk Linux 1988 Ultrix 1988 BSD Net/1 1998 Monterey 1984 Ultrix 32M 1991 BSD Net/2 1988 more/BSD 1982 Ultrix-11 1991 BSD/386 1983 mt Xinu 1986 Unicos 1992 BSD/OS 1993 MVS/ESA OpenEdition 1996 Unicos/mk 1978 CB Unix 1993 NetBSD 2002 Unicos/mp 1986 Chorus 1988 NeXTSTEP 1993 Unicox-max 1988 Chorus/MiX 1987 NonStop-UX 1969 UNICS 1983 Coherent 1994 Open Desktop 1981 UniSoft UniPlus 1987 -

ARM Architecture from Wikipedia, the Free Encyclopedia

ARM architecture From Wikipedia, the free encyclopedia ARM is a family of instruction set architectures for computer ARM architectures processors based on a reduced instruction set computing (RISC) architecture developed by British company ARM Holdings. A RISC-based computer design approach means ARM The ARM logo processors require significantly fewer transistors than typical Designer ARM Holdings CISC x86 processors in most personal computers. This approach reduces costs, heat and power use. These are Bits 32-bit, 64-bit desirable traits for light, portable, battery-powered devices— Introduced 1985 including smartphones, laptops, tablet and notepad computers, and other embedded systems. A simpler design Design RISC facilitates more efficient multi-core CPUs and higher core Type Register-Register counts at lower cost, providing improved energy efficiency Branching Condition code for servers.[3][4][5] Open Proprietary ARM Holdings develops the instruction set and architecture for ARM-based products, but does not manufacture 64/32-bit architecture products. The company periodically releases updates to its Introduced 2011 cores. Current cores from ARM Holdings support a 32-bit address space and 32-bit arithmetic; the ARMv8-A Version ARMv8-A architecture, announced in October 2011,[6] adds support Encoding AArch64/A64 and AArch32/A32 use for a 64-bit address space and 64-bit arithmetic. Instructions 32-bit instructions, T32 (Thumb2) for ARM Holdings' cores have 32-bit-wide fixed-length uses mixed 16- and 32-bit instructions. instructions, but later versions of the architecture also support ARMv7 user-space compatibility[1] a variable-length instruction set that provides both 32-bit and 16-bit-wide instructions for improved code density.