Topic Modeling with Wasserstein Autoencoders


Recommended publications

-

News, Information, Rumors, Opinions, Etc

http://www.physics.miami.edu/~chris/srchmrk_nws.html ● Miami-Dade/Broward/Palm Beach News ❍ Miami Herald Online Sun Sentinel Palm Beach Post ❍ Miami ABC, Ch 10 Miami NBC, Ch 6 ❍ Miami CBS, Ch 4 Miami Fox, WSVN Ch 7 ● Local Government, Schools, Universities, Culture ❍ Miami Dade County Government ❍ Village of Pinecrest ❍ Miami Dade County Public Schools ❍ ❍ University of Miami UM Arts & Sciences ❍ e-Veritas Univ of Miami Faculty and Staff "news" ❍ The Hurricane online University of Miami Student Newspaper ❍ Tropic Culture Miami ❍ Culture Shock Miami ● Local Traffic ❍ Traffic Conditions in Miami from SmartTraveler. ❍ Traffic Conditions in Florida from FHP. ❍ Traffic Conditions in Miami from MSN Autos. ❍ Yahoo Traffic for Miami. ❍ Road/Highway Construction in Florida from Florida DOT. ❍ WSVN's (Fox, local Channel 7) live Traffic conditions in Miami via RealPlayer. News, Information, Rumors, Opinions, Etc. ● Science News ❍ EurekAlert! ❍ New York Times Science/Health ❍ BBC Science/Technology ❍ Popular Science ❍ Space.com ❍ CNN Space ❍ ABC News Science/Technology ❍ CBS News Sci/Tech ❍ LA Times Science ❍ Scientific American ❍ Science News ❍ MIT Technology Review ❍ New Scientist ❍ Physorg.com ❍ PhysicsToday.org ❍ Sky and Telescope News ❍ ENN - Environmental News Network ● Technology/Computer News/Rumors/Opinions ❍ Google Tech/Sci News or Yahoo Tech News or Google Top Stories ❍ ArsTechnica Wired ❍ SlashDot Digg DoggDot.us ❍ reddit digglicious.com Technorati ❍ del.ic.ious furl.net ❍ New York Times Technology San Jose Mercury News Technology Washington -

Generation Kill and the New Screen Combat Magdalena Yüksel and Colleen Kennedy-Karpat

15 Generation Kill and the New Screen Combat Magdalena Yüksel and Colleen Kennedy-Karpat No one could accuse the American cultural industries of giving the Iraq War the silent treatment. Between the 24-hour news cycle and fictionalized enter- tainment, war narratives have played a significant and evolving role in the media landscape since the declaration of war in 2003. Iraq War films, on the whole, have failed to impress audiences and critics, with notable exceptions like Kathryn Bigelow’s The Hurt Locker (2008), which won the Oscar for Best Picture, and her follow-up Zero Dark Thirty (2012), which tripled its budget in worldwide box office intake.1 Television, however, has fared better as a vehicle for profitable, war-inspired entertainment, which is perhaps best exemplified by the nine seasons of Fox’s 24 (2001–2010). Situated squarely between these two formats lies the television miniseries, combining seriality with the closed narrative of feature filmmaking to bring to the small screen— and, probably more significantly, to the DVD market—a time-limited story that cultivates a broader and deeper narrative development than a single film, yet maintains a coherent thematic and creative agenda. As a pioneer in both the miniseries format and the more nebulous category of quality television, HBO has taken fresh approaches to representing combat as it unfolds in the twenty-first century.2 These innovations build on yet also depart from the precedent set by Band of Brothers (2001), Steven Spielberg’s WWII project that established HBO’s interest in war-themed miniseries, and the subsequent companion project, The Pacific (2010).3 Stylistically, both Band of Brothers and The Pacific depict WWII combat in ways that recall Spielberg’s blockbuster Saving Private Ryan (1998). -

PC Magazine -July 2009.Pdf

how to install windows 7 JULY 2009 HANDS > ExCEl oN PowER ToolS wITH THE > BUIlD A PC PAlM foR $400! PRE > SECURITY: 5 EASY TRICkS SPECIAL 17TH ANNUAL UTILITY GUIDE 2009 94 of the Best Utilities for Your PC Appearance • Backup • Compression • Disk Utilities • Displays • Encryption Images • Recovery • Networking • Search • Shutdown • System Cleaners Tweakers • Updaters • Uninstallers • Virtual PCs and more Malestrom 5-7-2009 juLY 2009 voL. 28 no. 7 48 CovER SToRY 2009 WINDOWS UTILITY GUIDE Check out the biggest col- lection of time-saving utili- ties for Windows XP, vista, and even Win 7 we’ve ever assembled. The best part? Most of them are free. 49 Appearance 50 Backup/Sync 51 Compression 52 Disk utilities 52 Displays 53 Encryption 54 Erase and Delete 54 Images 55 networking 62 55 organization INSTALLING 56 Recover and Restore 57 Screen Capture WINDOWS 7 57 Search Before you install 58 Shutdown/Boot 58 System Cleaners that fresh download 59 System Monitors of Win 7 Release 60 Tweakers Candidate, read our guide 60 updaters 60 uninstallers to avoid pitfalls and compatibility problems. 61 virtual PCs PC MAGAZINE DIGITAL EDITION juLY 2009 Malestrom 5-7-2009 28 14 20 FIRST LooKS LETTERS 4 hArDWArE 5 fEEDbACk Acer Aspire 3935 Dell Wasabi PZ310 TECh nEWS 7 frONT SIDE Clickfree Traveler (16GB) obama’s cybersecurity plan; Plus Quick Looks DvD breakthrough; E3 slideshow; 20 bUSINESS rugged tech gadgets. Lenovo ThinkCentre M58p Eco Epson WorkForce Pro GT-S50 oPInIonS hP officejet Pro 8500 Wireless 2 fIrST WOrD: LANCE ULANOff 24 CONSUMEr ELECTrONICS 40 JOhN C. DvOrAk Budget D-SLRs: head to head 42 DvOrAk’S INSIDE TrACk Samsung Alias 2 SCh-u750 44 SASChA SEGAN TomTom Go 740 LIvE 46 DAN COSTA Palm Pre (Sprint) SoLuTIonS Sony Bravia KDL-46XBR8 68 rECESSION-PrOOf PC Plus Quick Looks Build a powerful home PC for 32 NETWOrkING just $400. -

Constructing and Consuming an Ideal in Japanese Popular Culture

Running head: KAWAII BOYS AND IKEMEN GIRLS 1 Kawaii Boys and Ikemen Girls: Constructing and Consuming an Ideal in Japanese Popular Culture Grace Pilgrim University of Florida KAWAII BOYS AND IKEMEN GIRLS 2 Table of Contents Abstract………………………………………………………………………………………..3 Introduction……………………………………………………………………………………4 The Construction of Gender…………………………………………………………………...6 Explication of the Concept of Gender…………………………………………………6 Gender in Japan………………………………………………………………………..8 Feminist Movements………………………………………………………………….12 Creating Pop Culture Icons…………………………………………………………………...22 AKB48………………………………………………………………………………..24 K-pop………………………………………………………………………………….30 Johnny & Associates………………………………………………………………….39 Takarazuka Revue…………………………………………………………………….42 Kabuki………………………………………………………………………………...47 Creating the Ideal in Johnny’s and Takarazuka……………………………………………….52 How the Companies and Idols Market Themselves…………………………………...53 How Fans Both Consume and Contribute to This Model……………………………..65 The Ideal and What He Means for Gender Expression………………………………………..70 Conclusion……………………………………………………………………………………..77 References……………………………………………………………………………………..79 KAWAII BOYS AND IKEMEN GIRLS 3 Abstract This study explores the construction of a uniquely gendered Ideal by idols from Johnny & Associates and actors from the Takarazuka Revue, as well as how fans both consume and contribute to this model. Previous studies have often focused on the gender play by and fan activities of either Johnny & Associates talents or Takarazuka Revue actors, but never has any research -

The Development and Validation of the Game User Experience Satisfaction Scale (Guess)

THE DEVELOPMENT AND VALIDATION OF THE GAME USER EXPERIENCE SATISFACTION SCALE (GUESS) A Dissertation by Mikki Hoang Phan Master of Arts, Wichita State University, 2012 Bachelor of Arts, Wichita State University, 2008 Submitted to the Department of Psychology and the faculty of the Graduate School of Wichita State University in partial fulfillment of the requirements for the degree of Doctor of Philosophy May 2015 © Copyright 2015 by Mikki Phan All Rights Reserved THE DEVELOPMENT AND VALIDATION OF THE GAME USER EXPERIENCE SATISFACTION SCALE (GUESS) The following faculty members have examined the final copy of this dissertation for form and content, and recommend that it be accepted in partial fulfillment of the requirements for the degree of Doctor of Philosophy with a major in Psychology. _____________________________________ Barbara S. Chaparro, Committee Chair _____________________________________ Joseph Keebler, Committee Member _____________________________________ Jibo He, Committee Member _____________________________________ Darwin Dorr, Committee Member _____________________________________ Jodie Hertzog, Committee Member Accepted for the College of Liberal Arts and Sciences _____________________________________ Ronald Matson, Dean Accepted for the Graduate School _____________________________________ Abu S. Masud, Interim Dean iii DEDICATION To my parents for their love and support, and all that they have sacrificed so that my siblings and I can have a better future iv Video games open worlds. — Jon-Paul Dyson v ACKNOWLEDGEMENTS Althea Gibson once said, “No matter what accomplishments you make, somebody helped you.” Thus, completing this long and winding Ph.D. journey would not have been possible without a village of support and help. While words could not adequately sum up how thankful I am, I would like to start off by thanking my dissertation chair and advisor, Dr. -

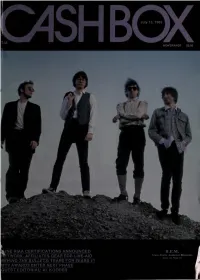

Cashbox Subscription: Please Check Classification;

July 13, 1985 NEWSPAPER $3.00 v.'r '-I -.-^1 ;3i:v l‘••: • •'i *. •- i-s .{' *. » NE RIAA CERTIFICATIONS ANNOUNCED R.E.M. AFFILIATES LIVE-AID Crass Roots Audience Blossoms TWORK, GEAR FOR Story on Page 13 WEHIND THE BULLETS: TEARS FOR FEARS #1 MTV AWARDS ENTER NEXT PHASE GUEST EDITORIAL: AL KOOPER SUBSCRIPTION ORDER: PLEASE ENTER MY CASHBOX SUBSCRIPTION: PLEASE CHECK CLASSIFICATION; RETAILER ARTIST I NAME VIDEO JUKEBOXES DEALER AMUSEMENT GAMES COMPANY TITLE ONE-STOP VENDING MACHINES DISTRIBUTOR RADIO SYNDICATOR ADDRESS BUSINESS HOME APT. NO. RACK JOBBER RADIO CONSULTANT PUBLISHER INDEPENDENT PROMOTION CITY STATE/PROVINCE/COUNTRY ZIP RECORD COMPANY INDEPENDENT MARKETING RADIO OTHER: NATURE OF BUSINESS PAYMENT ENCLOSED SIGNATURE DATE USA OUTSIDE USA FOR 1 YEAR I YEAR (52 ISSUES) $125.00 AIRMAIL $195.00 6 MONTHS (26 ISSUES) S75.00 1 YEAR FIRST CLASS/AIRMAIL SI 80.00 01SHBCK (Including Canada & Mexico) 330 WEST 58TH STREET • NEW YORK, NEW YORK 10019 ' 01SH BOX HE INTERNATIONAL MUSIC / COIN MACHINE / HOME ENTERTAINMENT WEEKLY VOLUME XLIX — NUMBER 5 — July 13, 1985 C4SHBO( Guest Editorial : T Taking Care Of Our Own ^ GEORGE ALBERT i. President and Publisher By A I Kooper MARK ALBERT 1 The recent and upcoming gargantuan Ethiopian benefits once In a very true sense. Bob Geldof has helped reawaken our social Vice President and General Manager “ again raise an issue that has troubled me for as long as I’ve been conscience; now we must use it to address problems much closer i SPENCE BERLAND a part of this industry. We, in the American music business do to home. -

Depot Square Garage SIPECUL 6350 2Llllath Npr Ipt Iw U P M Nn

‘i' .V,. ■V t iQVTWELVil MONDAY. FEBBUARY If. If40 Jbmr^Mtnr l^tmiitig MenOi Avaraga Daily CircalaUoii ThaWaathar Far «i» MrnUi of Jaanaty, ISM Fereeaet af 0. S. WaaMMr Bwdaa The Foliih'ABiorleaa AUdotto A rabearaal far the rural TNop 1, Ohrl 100010 win have a Oouft Uaaebaatar. Feraetara of H w at. OadHa Bawlag club will club will hold a opocUl moettaf to* oomedy. **ni*Old Vlllag* nebool”. ■paeUl maatlng tonight at T Amarlea. which poatponad ita regu. meat tonight at 7 o’clodc with Mra. Snmr fienlgMt aeenelanM Sgfel About Town ntfht at 8:80 at tb# CUntaa ^ « e t wiU take place tbts evening at o’clock at Center church, to com lar meeting Wedneadny of Inat Leon F. Wiechec at St. John’a 6350 anew aad aHgbUy eoMeTwafiaafi* \ elubroom. All member* ar* urfed T:S0 at the North Metbodlit plete plana for the aoth annlveraaiy week on account of the anowatorm, church rectory on Oolway atreet. Special Purchaoe! MembareCthsAnfilt 2llllatH nPRiPT iw U Pm nn JlFITaVil day. church. The Booetar club preaent* auppar of the troop at the church wtU bold a maatlng tomorrow eve U. be preeent aa plana for the an Borenn of CUontaUena duibtM ef Ubwtjr. No. I3S, nual dance will be mad*. ed thia entertainment aucceaefully Friday avening at 6:80, raaenra- ning in Mooaa ha|i. Bralnard piaoe. The regular weekly rehearaal of k> X -T o . A.. «m mMt tta O nagt lent fall, aad baa been requeated to Uoaa for which ahould ha in by the Baalkoven Olaa club win . -

Meyer Tech Pak012617.Indd

• Technical Information • Policies & Procedures • Fee Schedule for Rental & Services LOAD-IN AREA The loading dock is located on stage level, directly off upstage right. The dock has one 8’-0” wide x 10’-0” high (2.44m x 3.05m) overhead door. Dock height is 24” (.61m). There is NO leveler, and there is NO truck ramp. The loading area is 16’ wide x 22’ long with 12’ ceilings, in a wedge shape. Access to stage is through a 10’ wide x 11’ high overhead door upstage right. Access to the dressing rooms is by stairs or by elevator. CARPENTRY Seating Capacity Maximum Capacity: 1011 Orchestra 537 Grand Tier: 90 Mezzanine: 384 Handicapped Accessible: 8 Additional on Grand Tier Level Stage Dimensions Proscenium w: 49’-6” (15.09m) h:23’-0” (7.01m) Depth (plaster to back wall) 28’-0” (8.53m) Apron Curved 5’-0” (1.52m) at centerline Wing Space • Stage right 10’-0” (~3.05m) • Stage left 13’-0” (~3.96m) Orchestra Pit none Stage to Audience 4’-0” (1.21m) NFORMATION Stage Floor Material: 1/4” hardboard (Duron) I Color: Black Condition: Good Sub floor: 1 layer of 3/4” plywood 2x4 sleepers (24” O.C.) on resilient pads House Drapery: House Curtain- Scarlet; Manual Fly Item Number Material WxH Legs 8 black velour 10’ x 25’ (3.05m x 7.62m) Borders 4 black velour 60’ x 10’ (18.23m x 3.05m) Full Black 2 black velour 60’ x 25’ (18.23m x 7.62m) Line Set Data: Grid height: 53’-9” (16.38m) High trim: 50’-11” (15.52m) Low trim: 5’-8” (1.72m) Total Line Sets: 26 single purchase Arbor Capacity: 1500 lbs (680kg) ECHNICAL Weight Available: 8702 lbs (3947kg) Weight Size: 19 lbs (8.6 kg) Pipe Length: 60’-0” (18.29m) T Pipe size: 26 @ 1-1/2” Schedule 40 Lock Rail: Stage Left, stage level Line Plot: Enclosed Shop Area: There is NO on-site scenery shop, and there is no off-stage area for working on scenery. -

Boots Launches Christmas TV Advert Featuring the Sugababes Submitted By: Pr-Sending-Enterprises Tuesday, 18 November 2008

Boots launches Christmas TV advert featuring the Sugababes Submitted by: pr-sending-enterprises Tuesday, 18 November 2008 Boots' Christmas TV advert from 2007 won an industry Gold Award in The Campaign Magazine Big Awards. The company has now launched this year's seasonal advert, and it features the Sugababes' hit single 'Girls'. In 2007 the Boots (http://www.boots.com/) TV advert gave real insight into the lengthy preparations women make when getting ready for that all-important Christmas party. The music that accompanied it was 'Here Come the Girls' by Ernie K Doe, originally a hit in the 1970s. This year, Boots' new Christmas advert celebrates the art of choosing great gifts for your colleagues this season. The advert shows Boots knows that women want to get it right, finding the perfect present at a price they can afford. Boots has teamed up with the Sugababes, the UK's biggest girl band, for this year’s campaign. The advert features the trio’s recent single 'Girls', which includes a sample of 'Here Come the Girls' by Ernie K Doe. The Sugababes feel-good single has been selling fast in selected Boots stores across the nation, and Boots has matched the 10p donation to the White Ribbon Alliance for every copy it has rung through its tills. The theme of the song reflects the feminine traits highlighted in the advert. Set in an office, viewers will see women making lists, lovingly wrapping presents, and even offering themselves up as gifts in a bid to ensure they give the perfect 'Secret Santa' present. -

Tolono Library CD List

Tolono Library CD List CD# Title of CD Artist Category 1 MUCH AFRAID JARS OF CLAY CG CHRISTIAN/GOSPEL 2 FRESH HORSES GARTH BROOOKS CO COUNTRY 3 MI REFLEJO CHRISTINA AGUILERA PO POP 4 CONGRATULATIONS I'M SORRY GIN BLOSSOMS RO ROCK 5 PRIMARY COLORS SOUNDTRACK SO SOUNDTRACK 6 CHILDREN'S FAVORITES 3 DISNEY RECORDS CH CHILDREN 7 AUTOMATIC FOR THE PEOPLE R.E.M. AL ALTERNATIVE 8 LIVE AT THE ACROPOLIS YANNI IN INSTRUMENTAL 9 ROOTS AND WINGS JAMES BONAMY CO 10 NOTORIOUS CONFEDERATE RAILROAD CO 11 IV DIAMOND RIO CO 12 ALONE IN HIS PRESENCE CECE WINANS CG 13 BROWN SUGAR D'ANGELO RA RAP 14 WILD ANGELS MARTINA MCBRIDE CO 15 CMT PRESENTS MOST WANTED VOLUME 1 VARIOUS CO 16 LOUIS ARMSTRONG LOUIS ARMSTRONG JB JAZZ/BIG BAND 17 LOUIS ARMSTRONG & HIS HOT 5 & HOT 7 LOUIS ARMSTRONG JB 18 MARTINA MARTINA MCBRIDE CO 19 FREE AT LAST DC TALK CG 20 PLACIDO DOMINGO PLACIDO DOMINGO CL CLASSICAL 21 1979 SMASHING PUMPKINS RO ROCK 22 STEADY ON POINT OF GRACE CG 23 NEON BALLROOM SILVERCHAIR RO 24 LOVE LESSONS TRACY BYRD CO 26 YOU GOTTA LOVE THAT NEAL MCCOY CO 27 SHELTER GARY CHAPMAN CG 28 HAVE YOU FORGOTTEN WORLEY, DARRYL CO 29 A THOUSAND MEMORIES RHETT AKINS CO 30 HUNTER JENNIFER WARNES PO 31 UPFRONT DAVID SANBORN IN 32 TWO ROOMS ELTON JOHN & BERNIE TAUPIN RO 33 SEAL SEAL PO 34 FULL MOON FEVER TOM PETTY RO 35 JARS OF CLAY JARS OF CLAY CG 36 FAIRWEATHER JOHNSON HOOTIE AND THE BLOWFISH RO 37 A DAY IN THE LIFE ERIC BENET PO 38 IN THE MOOD FOR X-MAS MULTIPLE MUSICIANS HO HOLIDAY 39 GRUMPIER OLD MEN SOUNDTRACK SO 40 TO THE FAITHFUL DEPARTED CRANBERRIES PO 41 OLIVER AND COMPANY SOUNDTRACK SO 42 DOWN ON THE UPSIDE SOUND GARDEN RO 43 SONGS FOR THE ARISTOCATS DISNEY RECORDS CH 44 WHATCHA LOOKIN 4 KIRK FRANKLIN & THE FAMILY CG 45 PURE ATTRACTION KATHY TROCCOLI CG 46 Tolono Library CD List 47 BOBBY BOBBY BROWN RO 48 UNFORGETTABLE NATALIE COLE PO 49 HOMEBASE D.J. -

Host's Master Artist List

Host's Master Artist List You can play the list in the order shown here, or any order you prefer. Tick the boxes as you play the songs. Feeder Lily Allen Steps John Legend Gerry & The Pacemakers Liberty X Georgie Fame Bobby Vee Buddy Holly Beach Boys Animals Vengaboys Johnny Tilloson Hollies Blue Troggs Supremes Akon Gorillaz Britney Spears Chemical Brothers Johnny Kidd & The Pirates Sonny & Cher Elvis Presley Peggy Lee Ken Dodd Beyonce Jeff Beck Avril Lavigne Chuck Berry Lulu Del Shannon Spencer Davis Group Sugababes Backstreet Boys Daft Punk Dion Warwick Pink Doors Robbie Williams Steely Dan Louis Armstrong Chubby Checker Shirley Bassey Abba Destiny's Child Andy Williams Nelly Furtado Paul Anka Blink 182 Copyright QOD Page 1/101 Team/Player Sheet 1 Good Luck! Spencer Davis Peggy Lee Nelly Furtado Steps Ken Dodd Group Shirley Bassey Dion Warwick Gorillaz Bobby Vee Blue Johnny Kidd & Blink 182 Sonny & Cher Lulu Avril Lavigne The Pirates Jeff Beck Elvis Presley Feeder Vengaboys Britney Spears Copyright QOD Page 2/101 Team/Player Sheet 2 Good Luck! Lily Allen Blue Avril Lavigne Liberty X John Legend Peggy Lee Backstreet Boys Lulu Sugababes Buddy Holly Troggs Beach Boys Sonny & Cher Robbie Williams Blink 182 Johnny Kidd & Louis Armstrong Akon Abba Chuck Berry The Pirates Copyright QOD Page 3/101 Team/Player Sheet 3 Good Luck! Johnny Kidd & Robbie Williams Troggs Paul Anka Shirley Bassey The Pirates Blink 182 Johnny Tilloson Lulu Hollies Britney Spears Dion Warwick Lily Allen Peggy Lee Pink Del Shannon Sugababes Destiny's Child Chubby Checker -

The Formal and Contextual Relation of Typographic and Visual Elements of Pj Harvey Album Covers

THE FORMAL AND CONTEXTUAL RELATION OF TYPOGRAPHIC AND VISUAL ELEMENTS OF PJ HARVEY ALBUM COVERS Cinla Seker The aim of this research is to determine 21st century’s formal and contextual design solutions with the guidance of graphic design principles by analyzing design products. Twenty-nine times nominated and six times winner for the international worldwide music awards Pj Harvey’s eight alternative rock studio album covers have chosen; Dry (1992), Rid of Me (1993), To Bring You My Love (1995), Is this Desire?(1998), Stories from the City, Stories from the Sea (2000), Uh Huh Her (2004), White Chalk (2007), Let England Shake (2011).Graphic design as a design discipline in special means determining the formals of a 2d before production process according to the context. Album covers are one of the many graphic design products of 21st century. With the help of an album cover the artist reflects an audial expression visually. For a successful visualization some graphic design principles should have been considered by the designers. Design principles are the guides of organizing elements like visuals, typography and color. In this research five design principles are looked for; unity/harmony, balance, hierarchy, scale/proportion, dominance/emphasis and, similarity and contrast. On Pj Harvey’s album covers it is determined that every five of these principles were considered by the graphic designer during the design process as outlined. INTRODUCTION ............................................................................................ 750 I. ALBUM COVERS AS A GRAPHIC DESIGN PRODUCT................................... 751 A. Analysis of Pj Harvey Album Covers........................................ 752 CONCLUSION ............................................................................................... 757 INTRODUCTION Formal and functional features determination process called the design.