A Multi-View Intelligent Editor for Digital Video Libraries

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Understanding Digital Video

chapter1 Understanding Digital Video Are you ready to learn more about how digital video works? This chapter introduces you to the concept of digital video, the benefits of going digital, the different types of digital video cameras, the digital video workflow, and essential digital video terms. COPYRIGHTED MATERIAL What Is Digital Video? ........................................ 4 Understanding the Benefits of Going Digital ................................................6 Discover Digital Video Cameras .......................8 The Digital Video Workflow ............................10 Essential Digital Video Terms .........................12 What Is Digital Video? Digital video is a relatively inexpensive, high-quality video format that utilizes a digital video signal rather than an analog video signal. Consumers and professionals use digital video to create video for the Web and mobile devices, and even to create feature-length movies. Analog versus Digital Video Recording Media versus Format Analog video is variable data represented as The recording medium is essentially the physical electronic pulses. In digital video, the data is broken device on which the digital video is recorded, like down into a binary format as a series of ones and a tape or solid-state medium (a medium without zeros. A major weakness of analog recordings is that moving parts, such as flash memory). The format every time analog video is copied from tape to tape, refers to the way in which video and audio data is some of the data is lost and the image is degraded, coded and organized on the media. Three popular which is referred to as generation loss. Digital video examples of digital video formats are DV (Digital is less susceptible to deterioration when copied. -

Digital Video Quality Handbook (May 2013

Digital Video Quality Handbook May 2013 This page intentionally left blank. Executive Summary Under the direction of the Department of Homeland Security (DHS) Science and Technology Directorate (S&T), First Responders Group (FRG), Office for Interoperability and Compatibility (OIC), the Johns Hopkins University Applied Physics Laboratory (JHU/APL), worked with the Security Industry Association (including Steve Surfaro) and members of the Video Quality in Public Safety (VQiPS) Working Group to develop the May 2013 Video Quality Handbook. This document provides voluntary guidance for providing levels of video quality in public safety applications for network video surveillance. Several video surveillance use cases are presented to help illustrate how to relate video component and system performance to the intended application of video surveillance, while meeting the basic requirements of federal, state, tribal and local government authorities. Characteristics of video surveillance equipment are described in terms of how they may influence the design of video surveillance systems. In order for the video surveillance system to meet the needs of the user, the technology provider must consider the following factors that impact video quality: 1) Device categories; 2) Component and system performance level; 3) Verification of intended use; 4) Component and system performance specification; and 5) Best fit and link to use case(s). An appendix is also provided that presents content related to topics not covered in the original document (especially information related to video standards) and to update the material as needed to reflect innovation and changes in the video environment. The emphasis is on the implications of digital video data being exchanged across networks with large numbers of components or participants. -

Digital Video Recording

Digital Video Recording Five general principles for better video: Always use a tripod — always. Use good microphones and check your audio levels. Be aware of lighting and seek more light. Use the “rule of thirds” to frame your subject. Make pan/tilt movements slowly and deliberately. Don’t shoot directly against a wall. Label your recordings. Tripods An inexpensive tripod is markedly better than shooting by hand. Use a tripod that is easy to level (look for ones with a leveling bubble) and use it for every shot. Audio Remember that this is an audiovisual medium! Use an external microphone when you are recording, not the built-in video recorder microphone. Studies have shown that viewers who watch video with poor audio quality will actually perceive the image as more inferior than it actually is, so good sound is crucial. The camera will automatically disable built-in microphones when you plug in an external. There are several choices for microphones. Microphones There are several types of microphones to choose from: Omnidirectional Unidirectional Lavalier Shotgun Handheld In choosing microphones you will need to make a decision to use a wired or a wireless system. Hardwired systems are inexpensive and dependable. Just plug one end of the cord into the microphone and the other into the recording device. Limitations of wired systems: . Dragging the cord around on the floor causes it to accumulate dirt, which could result in equipment damage or signal distortion. Hiding the wiring when recording can be problematic. You may pick up a low hum if you inadvertently run audio wiring in a parallel path with electrical wiring. -

Model DV6600 User Guide Super Audio CD/DVD Player

E61M7ED/E61M9ED(EN).qx3 05.8.4 5:27 PM Page 1 Model DV6600 User Guide Super Audio CD/DVD Player CLASS 1 LASER PRODUCT E61M7ED/E61M9ED(EN).qx3 05.8.4 5:27 PM Page 2 PRECAUTIONS ENGLISH ESPAÑOL WARRANTY GARANTIA For warranty information, contact your local Marantz distributor. Para obtener información acerca de la garantia póngase en contacto con su distribuidor Marantz. RETAIN YOUR PURCHASE RECEIPT GUARDE SU RECIBO DE COMPRA Your purchase receipt is your permanent record of a valuable purchase. It should be Su recibo de compra es su prueba permanente de haber adquirido un aparato de kept in a safe place to be referred to as necessary for insurance purposes or when valor, Este recibo deberá guardarlo en un lugar seguro y utilizarlo como referencia corresponding with Marantz. cuando tenga que hacer uso del seguro o se ponga en contacto con Marantz. IMPORTANT IMPORTANTE When seeking warranty service, it is the responsibility of the consumer to establish proof Cuando solicite el servicio otorgado por la garantia el usuario tiene la responsabilidad and date of purchase. Your purchase receipt or invoice is adequate for such proof. de demonstrar cuándo efectuó la compra. En este caso, su recibo de compra será la FOR U.K. ONLY prueba apropiada. This undertaking is in addition to a consumer's statutory rights and does not affect those rights in any way. ITALIANO GARANZIA FRANÇAIS L’apparecchio è coperto da una garanzia di buon funzionamento della durata di un anno, GARANTIE o del periodo previsto dalla legge, a partire dalla data di acquisto comprovata da un Pour des informations sur la garantie, contacter le distributeur local Marantz. -

Hd-A3ku Hd-A3kc

HD DVD player HD-A3KU HD-A3KC Owner’s manual In the spaces provided below, record the Model and Serial No. located on the rear panel of your player. Model No. Serial No. Retain this information for future reference. SAFETY PRECAUTIONS CAUTION The lightning fl ash with arrowhead symbol, within an equilateral triangle, RISK OF ELECTRIC SHOCK is intended to alert the user to the presence of uninsulated dangerous DO NOT OPEN voltage within the products enclosure that may be of suffi cient magnitude RISQUE DE CHOC ELECTRIQUE NE to constitute a risk of electric shock to persons. ATTENTION PAS OUVRIR The exclamation point within an equilateral triangle is intended to alert WARNING : TO REDUCE THE RISK OF ELECTRIC SHOCK, DO NOT REMOVE the user to the presence of important operating and maintenance COVER (OR BACK). NO USERSERVICEABLE (servicing) instructions in the literature accompanying the appliance. PARTS INSIDE. REFER SERVICING TO QUALIFIED SERVICE PERSONNEL. WARNING: TO REDUCE THE RISK OF FIRE OR ELECTRIC SHOCK, DO NOT EXPOSE THIS APPLIANCE TO RAIN OR MOISTURE. DANGEROUS HIGH VOLTAGES ARE PRESENT INSIDE THE ENCLOSURE. DO NOT OPEN THE CABINET. REFER SERVICING TO QUALIFIED PERSONNEL ONLY. CAUTION: TO PREVENT ELECTRIC SHOCK, MATCH WIDE BLADE OF PLUG TO WIDE SLOT, FULLY INSERT. ATTENTION: POUR ÉVITER LES CHOCS ÉLECTRIQUES, INTRODUIRE LA LAME LA PLUS LARGE DE LA FICHE DANS LA BORNE CORRESPONDANTE DE LA PRISE ET POUSSER JUSQU’AU FOND. CAUTION: This HD DVD player employs a Laser System. To ensure proper use of this product, please read this owner’s manual carefully and retain for future reference. -

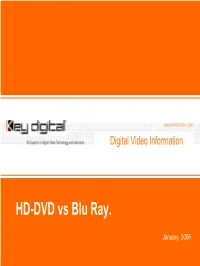

CEDIA 2005 Key Digital Press Conference & Training Presentation

Blu Ray vs HD-DVD Digital Video Information HD-DVD vs Blu Ray. January, 2006 Blu Ray vs HD-DVD For the last three years major consumer electronics companies together with Hollywood movie content providers, software developers, game platforms develop is in the competition to develop new movie/game/software disk format that will replace current DVD disk today. Unfortunately two competing camps Blu Ray and HD-DVD do not have any plans to merge yet and go full steam to the market with players and content. We at Key Digital® would like to inform our customers so informed preparation for this major new disk format. Blu Ray vs HD-DVD Blu Ray vs HD-DVD Comparison Parameters Blu Ray HD-DVD Comments Disk capacity Single layer disc – 25 Single layer disc – 20 Both m ay support up to GB GB 54GB Video MPEG2 H264, VC-1, MPEG2 H264 and VC-1 are next Compression generation compression and use higher compression ratio then MPEG2 Audio formats All Digital Audio All Digital Audio formats formats Play time Two hours+ HDTV Two hours+ HDTV AV Quality Very high Very high Price suggested at $1,800 $499 Price suggestions at this CES2006 stage are very preliminary Output Video HDMI only HDMI only Hard commitment on interface for HDMI only output for 1080i/720p H D TV is identical from both camps 1080p Output Yes Yes Both formats committed to capability allow 1080p output format for HDM I interface Output Video Com ponent Com ponent Com ponent output m ay interface for not be available altogether 480i/480p G am e platform Play Station XBOX support R ollout schedule Sim ilar to H D -D VD , full Starting March 2006 Q uantities to m anufacture production 3Q/2006 x10,000/month, full are very prelim inary production 3Q/2006 Content M ajor H ollyw ood M ajor H ollyw ood and Content providers availability com m itted M icrosoft com m itted alliances w ill shift as format winner become apparent. -

DVE) Competency Requirements

Certified Digital Video Editor (DVE) Competency Requirements This Competency listing serves to identify the major knowledge, skills, and standards areas which a certified Digital Video Editor needs in order to perform the professional tasks associated with the development of electronic digital videos for digital technology. Digital Video Editors must be knowledgeable in the following technical areas: 1.0 Screen Format 1.1 Explain Aspect Ratios in detail including: 1.1.1 the differences between the following: 1.1.1.1 Widescreen Aspect ratio, 16:9 1.1.1.2 Standard Aspect ratio, 4:3 1.2 Explain the use of letterbox technique 1.3 Describe the ‘Scan and Span’ technique use 1.4 Explain the screen formatting’s ‘safe zone’ 1.5 Describe the ‘split screen’ and its usage 1.6 Identify how Picture in Picture (PIP) is used in video editing 1.7 Explain ‘Overscan’ and associated terms: 1.7.1 Title Safe 1.7.2 Action Safe 1.7.3 Underscan 2.0 Video Fundamentals 2.1 Explain the following color details: 2.1.1 Luminance 2.1.2 Chrominance 2.1.3 RGB 2.1.4 YUV 2.2 Describe the significance of Frame and Frame Rates per second in digital video editing 2.3 Explain in detail progressive scanning or noninterlaced scanning 2.4 Explain the following video terminology: 2.4.1 Master 2.4.2 Talking Head 2.4.3 Freeze Frame 2.4.4 Dub 2.4.5 Explain video techniques such as: 2.4.5.1 Pan 2.4.5.2 Tilt 2.4.5.3 Roll 2.4.6 Identify the differences between the following video transitions: 2.4.6.1 Fades 2.4.6.2 Wipes 2.4.7 Explain the differences between “open caption” and “closed -

From Hard Drive to Optimized Video File

March 10 2003 Strategies and Techniques for Designers, Developers, and Managers of eLearning THIS WEEK — DEVELOPMENT TECHNIQUES Repurposing Taped Video for e-Learning, Part 2: Video is an increas- From Hard Drive to Optimized ingly important part of e-Learning Video File and there are many BY STEPHEN HASKIN ways to deliver it to n Part 1 of this two-part series, we learned how to move the learner’s desk- video content from digital or analog tape onto a hard top. With the right Idrive. This is half the job of repurposing taped video. This tools, making use week, we’ll learn how to use Adobe Premiere to render digi- tized video to other media: the Web, CD-ROM, and DVD. of these delivery You’ve got the tape. You’ve got the computer. You’ve options is easy. grabbed the video. Now it’s in the computer and you have to This week, learn do something with it. A file in your comput- as the video if you want, or give it another how to move digi- er is nice, but it’s not going to get any train- name. Remember, the schema for naming ing done. So what do you do? files is yours and I can’t know what kinds of tized video to the file names your organization uses, so what- Setting up projects in Premiere ever you call the Premiere project is OK. Web, to CD-ROM, Let’s start with the video you just Last week, I didn’t explain what happens grabbed. -

Building a Successful Digital Video Business

SOLUTIONS FOR PUBLISHERS FLIP THE SCRIPT BUILDING A SUCCESSFUL DIGITAL VIDEO BUSINESS bloomberg.com/content-licensing SOLUTIONS FOR PUBLISHERS REACH NEW AUDIENCES, FIND NEW REVENUE STREAMS Publishers, broadcasters and media brands around the world need new ways to compete effectively, capture share and remain vital in increasingly saturated markets. For many, digital video is an attractive solution. Poised for unprecedented rates of growth, digital video has the potential to attract larger audiences and provide fuel for new revenue streams. But getting started requires more than recognizing this potential. This white paper explores the fundamentals of building a thriving digital video business. It poses questions publishers need to consider before developing a realistic plan and offers steps publishers can take to successfully implement that plan. WHY VIDEO DRIVING MATTERS AUDIENCE TO DIGITAL VIDEO 3 5 MONETIZING MASTERING DIGITAL VIDEO TECHNOLOGY & CONTENT 9 13 bloomberg.com/content-licensing 2 SOLUTIONS FOR PUBLISHERS WHY VIDEO MATTERS People spend hours every day watching video. TV is the number one source, but its numbers—broadcast ratings, cable subscribers, households with TV—are in free fall. The fastest-growing segment by far is online video, with year-over-year growth rates north of 50 percent. The potential impact of this growth can’t be ignored. By 2017, researchers expect global consumer video activity to represent nearly 70 percent of all consumer Internet traffic, up from 57 percent in 2012. Online video services will double in usage during the same time frame, reaching more than 80 percent of all Internet users worldwide. The appeal of video is easy to understand. -

Super Video Compact Disc Super Video Compact Disc A

Super Video Compact Disc Super Video Compact Disc A Technical Explanation 3122 783 0081 1 1 21599 Super Video CD History · Demonstration players and discs to promote the standard. CD is one of the major new · Authoring tools to produce demo, technological steps of this century. test, and commercial discs. Beginning as a pure , high-quality sound For checking the compliance and reproduction system, it rapidly MPEG2 on compatibility of players and discs is developed into a whole family of needed: COMPACT DISC systems, with applications extending across to multimedia data storage and · A set ( suite ) of test discs with test distribution. The CD-ROM XA format each function described in the There is a market need for a makes it possible to combine normal standard at least once and if standardized full digital Compact computer data files with real-time achievable also the most important Disc based video reproduction multimedia files offering an additional combinations of content and system. 14% capacity. The format is platform applications within the scope of the independent to allow additional CD-DA standard. These discs are used for tracks ( CD-Extra) and to be played on a player development and The Super Video-CD standard multimedia-computer. All new formats manufacturing, as well as for upgrades the current Video-CD since 1990 have been based on the XA testing players that give problems format. It utilizes better Video and format. in the market. · A prototype test player, to play Audio Quality. It also standard demo and test discs, and for player includes extensions for surround Standardisation production development. -

Assuring Multi-Screen Video Quality

iQ Solutions Whitepaper √ √ √ √ Assuring Multi-Screen Video Quality Challenges can be overcome with The debut of the multi-screen video era a sharpened focus on the With the unveiling of the TV Everywhere partnership between Comcast Corp. quality of the customer’s actual and Time Warner Inc. in June 2009, the multiscreen video era formally began. viewing experience on each video For the first time in the history of the video entertainment industry, two device, not just the performance of prominent service and content providers promised to take high-quality TV the network delivering the programming far beyond the conventional home TV set. Breaking the pictures and sound. Only then will industry’s traditional shackles, Comcast and Timer Warner vowed to deliver network operators be able their programming to a vast array of other video displays, such as personal to put service quality issues behind computers, laptops, notebooks, video game consoles, iPads, mobile phones, them and carve out a and other portable devices. significant role in the emerging multi-screen universe. The embrace of this so-called “three screen strategy” by video service and content providers highlights the growing importance of digital video quality. More than ever, high-quality digital video signals are critical to the success of service providers as they seek to differentiate themselves from their competi- tors. With their programming now playing on multiple screens for the con- sumer, providers face even greater public exposure to any lingering video and audio problems on their networks. But digital video quality is not a new issue for cable operators, telcos, and satellite TV providers. -

Divx Plus HD Blu-Ray Disc/DVD Player

Pre-sales leaflet for United Kingdom (2014, November 6) Philips Blu-ray Disc/DVD player • DivX Plus HD • USB2.0 Media Link • DVD video upscaling BDP2900/05 Enjoy super-sharp movies in HD with RealMedia Video playback With BDP2900, movies never looked better. Incredibly sharp images in full HD 1080p are delivered from Blu-ray discs, while DVD upscaling offers near-HD video quality. Benefits See more Engage more • Blu-ray Disc playback for sharp images in full HD 1080p • EasyLink to control all HDMI CEC devices via a single remote • 1080p at 24 fps for cinema-like images • BD-Live (Profile 2.0) to enjoy online Blu-ray bonus content • DVD video upscaling to 1080p via HDMI for near-HD images • Enjoy all your movies and music from CDs and DVDs • DivX Plus HD Certified for high-definition DivX playback • USB 2.0 plays video/music from USB flash/hard disk drive • Subtitle Shift for widescreen without any missing subtitles Hear more • Dolby TrueHD for high fidelity sound Features Blu-ray Disc playback Subtitle Shift Blu-ray Discs have the capacity to carry high definition data, along with Subtitle Shift is an enhancement feature that lets users manually adjust a pictures in the 1920 x 1080 resolution that defines full high definition images. movie's subtitle positioning on the television or computer screen. Using the Scenes come to life as details leap at you, movements smoothen and images remote control, users can shift the subtitles up and down on the screen. On turn crystal clear. Blu-ray also delivers uncompressed surround sound — so widescreen displays, such as 21:9 cinema and projectors, the subtitles your audio experience becomes unbelievably real.