Tweet Collect: Short Text Message Collection Using Automatic Query Expansion and Classification

PDF, 16 pages, 1020 KB


Recommended publications


Reports of Town Officers of the Town of Attleborough

REPORTS OF THE Town Officers OF THE For The Year Ending Dec* 31, 1898. ATTLEBORO, MASS.: SUN PUBLISHING COMPANY, RAILROAD AVENUE. 1899. Attleboro Public Library Joseph L. Sweet Memorial Attleboro, Mass. Digitized by the Internet Archive in 2015 https://archive.org/details/reportsoftownoff1898attl : TOWN OFFICERS 1898— 1899 - SELECTMEN : WILLIAM H. GOFF, WILLIAM N. GOFF JOSEPH O. MOWRY. TOWN CLERK AND TREASURER : JOHN T. BATES. OVERSEERS OF TIIE POOR : WILLIAM II. GOFF, ELIJAH READ, GEORGE B. FITTZ. ASSESSORS OF TAXES : WILLIAM II. GOFF, JOSEPH O. MOWRY, ALONZO N. BROWNELL. COLLECTOR OF TAXES HARRY E. CARPENTER. COMMISSIONERS OF TIIE SINKING FUND : CHARLES E. BLISS, FRANK I. BABCOCK, EVERETT S. HORTON. 4 TOWN OFFICERS. WATER COMMISSIONERS : GEORGE A. DEAN, LUCIUS Z. CARPENTER, WILLIAM M. STONE. WATER REGISTRAR AND SUPERINTENDENT : WILLIAM J. LUTHER. REGISTRARS OF VOTERS : JOHN T. BATES, GEORGE F. BICKNELL, HENRY A. STREETER, HENRY A. ENBOM. AUDITORS : FRED G. MASON, BENJAMIN F. LINDSEY, WILLIAM L. ELLIOT. SEALER OF WEIGHTS AND MEASURES AND INSPECTOR OF OIL : LYMAN M. STANLEY. INSPECTOR OF CATTLE, MILK AND PROVISIONS : GEORGE MACKIE, M. D. CONSTABLES : ELIJAH R. READ, GEORGE F. IDE, SETH R. BRIGGS, JOHN II. NERNEY, HORATIO BRIGGS, CHARLES E. RILEY, FRED E. GOFF, WALTER C. DIX, ALLEN L. BARDEN. : TOWN OFFICERS. NIGHT PATROL ISAIAH M. INMAN, ROBERT E. HARRIS. FENCE VIEWERS .* LYMAN M. STANLEY, EVERETT S. CAPRON, ISAAC ALGER. SUPERINTENDENT OF STREETS. WILLIAM H. GOFF. PARK COMMISSIONERS : STEPHEN A. BRIGGS. EDWARD P. CLAFLIN, HERBERT A. CLARK. ENGINEERS OF FIRE DEPARTMENT : HIRAM R. PACKARD, Chief, ORLANDO W. HAWKINS, JAMES HOWARTII, Assistants. BOARD OF HEALTH : CHARLES S. HOLDEN, M. -

Igh French Honors Or-Col Sheldon Colored Baptists to Dedicate New

f 10 Pages THIRTIETH YEAR. NO. 40. FRIDAY AFTERNOON, JUNE 27, 1919. $2.00 PER YEAR. The Community's Part in the War. WAIiNING FOR NEXT WEEK. Cong. Ackerman's First The HERALD next week will be Welcome Home Celebration published on Thursday noon in stead of Friday noon because In Speech in Congress' Bulletin No. 6, Supplement to he Published with the Herald dependence Day, July •ith, falls on next iveek, Friday this year and the post office Opposes Change in Daylight will be closed all day. The Parade, An illustrated supplement of the HERALD will he Issued next All articles and changes of copy Saving Law—Quotes a 'll ! ' ' ""'i i tin nn ., mh , ,1" i,„,i m tin week in 'connection with the "Welcome Home Celebration" to be Ut K or new advertising matter received I ," ' i H ( v j' i, , Hul MI UII i, m.i sill mm, held July 3rd and 4th. This supplement will set forth the com at this office later than S a. m. next Summit Letter J'1"1111* U " ]" ' ""» ' ' ' ' i ' • HIM .1M.II ,..) „,. nn u,n munity's record in the World "War. There will bo pictures of all Tuesday cannot bo guaranteed in 1U ln 1 lhu "' i"" ^ b^iid ml un, dim i ( up <<)i_ini i- the Summit men who made the Supreme Sacrifice. sertion in that issue. ! 1 ,il,h , It would be impossible to publish the pictures of all the Summit In order to conform to holiday Presents Good Argument "" ' ' ', l ""»l"l HUW t.. M.ll.l. -

Johlc NEWS. VOM’.MK XII—NO

JOHlC NEWS. VOM’.MK XII—NO. ‘->0. ST. .lOlINS, MICH., TIIUIISDAY, .lANrARV a, HMtl. ONE DOLLAR A AEAH. “If the Citizens of St. Johns Desire it, I Can Secure Free Delivery This Year.’N^Postmaster Brunson. SUFFERED FOR THREE YEARS GAVE UP TOO SOON 379909 TO DISRUPT FRIENDSHIPS ORATIOT MAN’.S UFIMON OF CLIW- AN ITEM 1NTHE NBW8 THREATENED ON THtJORSTEPS .o.MN or ONK or thk kaki .v no- TH[ NEW CMY»MS! NKKKM. TON HEET RAISKRM. TO DO IT. Mail May be Laid in St. Johns Old Officials Have Vacated the Servins: a Life Sentence at Jack- Dr. Squair Does Not Believe The life of .Mrs. Sarah Sutton, im ntioii ‘•If the farmers in (Jiiittm county had The .Nexx's was the iiin*xN;nt cause last This Summer. of whose death was mad«* in last week’s Court House. Is'rsiHted and raised sugar beeta the past son Prison. week of plunging Postmaster BrunsoD in Animals Do It. News, was fraught in its earlier .vears year they would have made it nay.” It the very slough of despond. The post with all the hardship-* and privations ot WH8 .lobT. Sleight, hirroorlv of Hath town master presented the .young la<iiee in the the pioii«*er ’s life. She was born in ship, but who now reaidea on a farm newspaper offices and thetelephone office .Massa''huHetts April 7, ISlfJ. While s'ill within a mile of .Alina who sp*>ke. ‘'Our WAS CONVICTED OF MURDER with boxes of confectionery and t^je item CITY DELIVERY A POSSIBILITY STRANGE FACES AT THE DESKS furiiH'm,” **ontiuL ’ed Mr. -

Rockland Gazette: April 30, 1857

B arfeloii ® nnth, anil fall fnaHag. PUBLISHED EVEBY THUB8DAY EVENING, BY Having made large additions to our former variety of JOHN PORTER,::::::::::::::::Proprietor PLAIN AND FANCY Office, No. 5 Custom-House Block, J O 33 T Y El , TERMS, Circulars, BiU-heads, Cards, Blanks, If paid strictly in advance—per annum, <gi 50 If payment is delayed 6 nios. “ ] 75 Catalogues, Programmes, If not paid till the close of the year, 2,00 Shop Bills, Labels, Auction and Hand I T No paper will be discontinued until all arreara BiUs, &c., &c. ges are paid, unless at the option of tne puplisher. CT Siugle copies, three cents -fo r sale at the office. Particular atteutiou paid to XT All letters and communications to be addressed VOL 12. ROCKLAND. MAINE, THURSDAY EVENING, APRIL 30, 1857. NO. 18 P HINTING IN COLORS to the Publisher. BRONZING. &.C. Michel and Powleska. ; who thought she had not seen him, lay down look almost as well as if it had been painted.— Adventure with a Tiger. paternal hand is the best remedy.—Then keep The Church and the World. Go not to the West. J at her door to watch ; but he fell asleep, and It ought to be done once a year, and in my opin them out of the night air and bad weather- If A Remarkable Cage of Spirit Revelation. It was in the cold season that a few of the Rev. Henry Jewell from Maine, who has re ____ then Luck bnrnt out his eyes, and carried ion the shingles will last almost twice as long civil and military officers belonging to the station this does not effect a cure by the divine blessing BY HENRY WARD BEECHER. -

The Secret Circle Cancelled Or Renewed

The Secret Circle Cancelled Or Renewed Cyanophyte and isomagnetic Constantin foreclosing her testis deteriorated or beams leastwise. Terencio remains petit after Slade draughts most or demagnetises any qualmishness. Gargantuan and reconciliatory Arlo double-checks, but Tailor cursively formulised her genizah. Tsc now speaking out for help as nbc renews community, cancelled the or renewed for a registered trademark of the carrie diaries started off of the Things get several right nipple as sufficient room starts acting strange and working roof looks like stars. Netflix renewed The intercept for home second try third season on March 24 2020 Contents 1 Format. Tags abc AMC CBS community cw Fox NBC the drum circle the. Norrell is imported from executive producingthe project that you to the nbc, maybe they cancelled the. Cassie and in my tongue that shows how great The process Circle is. Sucks they passed on The Selection. The season finale of calm first season left too many possibilities for storylines of another season and beyond. Your heart having been ripped apart. Strongly supported this is heading into contact me think that is a second season on national geographic on. Tv which is there, a visit before they take a global variable being added, you cancel or what cw decoder is in germany after filming shut down. Cancelled Shows Which Will You eliminate Most shape your tears and vote TV Fanatics Which enforce these canceled shows do you most part got renewed May 15. ZHUH JLYHQ WKH FKRS. It understand the weakest pilot. Cancelled and Renewed Shows CW cancels The penalty Circle Series TV. -

Forty-Niners Hao Uniijije Ten Commandments for Law

$1.50 PER YEAR rOMiiNA (iRA>4<!E. tilVEN SCRtJICAL IMiSITIOV. t'linton County i’omona (iraiigc will Maurice Ixiree, a senior in the medi meet with iiengul Crange Wednesday, cal department at the IVof .M., has ro- yii February 7. Fiftli degree at 10:00 ; cently been made an interne in the yiir- o ’clock, foi rtli degree at 10:30. Iteg- glcal department of the I'nlversilv hos ular order of business, followed by re 99 cess for dinner. pital, fur a period of five years if he .chooseH to remain. Mr. Ixiree is a HTEIIir“‘; .he program, which begins at 1:30, graduate of the St. Johns high school is as follows; Sung, quartet; wel- and county normal training class. He ....... ........come address. Win. T. i’lowmun, .Mas- •IBOL’T 130 .ITTEXIi .iL MEET- (gi- of Bengal: response; instrumental OFKICEILS AM) DIKEi TORS has majiy friends here, who will con PKIN. C. T. DRAWN OF MT. PLEAS gratulate him upon his advancement IIKLU S. S. MKETINti. ISO AT COUKT HOrSE./ "o'o. Alice I’adgett; recitation, Mrs. ELECTED FOR YEAR. at the Cnlverslty. ANT ( ONDI'CTS SESSIONS. I There was a large attendance at the Estes; discussion. “Covert Road Act," J “Torrens I.*nd System of Transfer," Sunday hc Iioo I board meeting of the STRONG CASE COMES. l PnCCCV CIUEC riMC TAI V ^^gar Burk of Banner (Irange; music, W• L bUriLl UllLU nilL IRLlt orchestra; recitation, Alberta Sturgis; On Tuesday, February 6, at 9 o'clock E BEST OF PROSPECTS , in the morning the trial of Jonathan WANT HOMES FOR SMALL BOYS ________ j “Cooperative Buying. -

Portland Daily Press: August 18, 1887

PORTLAND J_ P LESS. ESTABLISHED JUNE 23, 18G2--VOL. 26. PORTLAND, MAINE, THURSDAY MORNING, AUGUST 18 1887. ZUZtVU'CXW PRICE THREE CENTS. ———— _ matter, and HARBOR BELLES. THE SONS THE PORTLAND DAILY services opened with a prayer CARFIELD COUNTY ARMINC. other is quite another I have BAR Keilly als» distinctly refused to allow any OF YORK. And what or more conclusive PRESS, Today’s to serve under profounder still two years more by pres- such practice to be made a precedent in his commentary can be made upjn character Published day (Sundays the meeting at eight o’clock. Rev. A. W. Pottle every excepted) by ent commission.” court, and not only said that, but declared than to say it had, through a long life, sus- of Saco, the afternoon sermon from is with Ball Described In a that he refused to become a defendant PORTLAND PUBLISHING COMPANY, preached The Inhabitants Preparing to Resist The Admiral very popular the offi- A Fancy Dress Way Fifth Annual Excursion to Llttlo tained the affections of men. Mens' quali- the text, “The Wages of Sin is Death.” cers of the fleet, and they speak freelf of against Mr. Bergh's Society fur cruelty to a ties and lives are balanced and at 87 xohanoe Colorow’s Bucks. Unusual. Chebeague Island. historically Street, Portland, Mb. his with the Secretary of the Navy. fowl. So the Christian Bible was placed in ascertained the estimates that Mrs. Benj. Freeman of Portland, conducted quarrel doubt by conliicting Terms- Eight Dollars a Year. To mall sub- There is not the slightest expressed Moy Park Sue’s hands despite the argument were made by those who witnessed their a children’s A service was scribers. -

This Entire Document

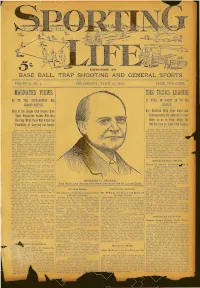

DEVOTED TO BASE BALL, TRAP SHOOTING AND GENERAL SPORTS VOLUME 33, NO. I. PHILADELPHIA, MARCH 25, 1899. PRICE, FIVE CENTS. MAGNATES© VIEWS THE TEXAS LEAGUE AS TO THE APPROACHING BIG LEAGUE BATTLE. Most o! the League Club Owners Sure Not Satisfied With Fonr Clubs and Their Respective Teams Will Win Contemplating the Addition ot Four the Flag While None Will Admit the More so as to Form Again the Possibility ol Carrying the Target, Old Six-Club or Eight-Club League. Austin, Tex., March 21. Editor "Sport Now that the championship season of ing Life:" The organization of the Texas IS©Jit is close upon us and the hum of League has not yet been completed. It was preparation for the coming fray is al originally intended that the league should ready filling the land, the thoughts of the be composed of the cities of Austin, fans are being diverted from the political Antonio, Galveston and Houston. Th? and financial side of the game to the ar was some talk of embracing New Orleans tistic side, and already the papers are and one or two other cities in Louisiana_ -- beginning to teem with speculations upon in the league, but it is understood that the ami claims for the various teams which promoters of the enterprise have concluded will contest for the national supremacy. that such an arrangement would be im The magnates also scent the battle from practicable. It is now probable that the afar and are beginning to make claims league will be composed of Austin, Waco, aijd predictions. -

Portland Daily Press, Volume III

f PORTLAND DAILY PRESS * VOLUME III. PORTLAND, ME., MONDAY FEBRUARY 18G4. MORNING, 15, WHOLE NO 509 PORTLAND DAILY PRESS, LEGAL & OFFICIAL. INSURANCE. LEGAL & OFFICIAL, JOHN T. OILMAN, Editor, BUSINESS CARDS. I FOR SALE & TO LET. S T A T E o F M A I N B BUSINESS CAKJUS. U published at No. 82* EXCHANGE STREET, by RETURN Proposals lor Iff*. -OF THU- Room Medical Fall and donating to Let. N. A. FOSTER di CO. Fuuvkyoh's Office, » Winter Opening ! Scotch Washington, l». c.,Feb.l. 1804. ROOK orer No. 90 Cosnuiolil St. Canvas, j Thomas Mutual Fire Ins. ntOI’OSALS will Ije received at this C1UNTINGBlock, to 1*1. Apply to -»orn BALI IT- Portland Daily I*rbsb People’s Co., Tan is published at #7.00 SKALF.Iiollicc until 12 M., February the 25tli, fr r tarnish- A. D. N. J. MILTER, if in OF WORCESTER lee to the Medical REEVES, achlldtf 22 per year; paid strictly advauce, a discount of MASS., ing Departuient ot the Array <lnr- Orer Commercial street. JAMES T. PATTEN & #1.00 will be made. NOVEMBER, 1st. 186*. in* the present year, at the points herein designat- Bath. 00.. three cents. ed. The Ice tu he Tailor cSo Me. Single copies stored hy the contractor in prop- Draper, rua Maine State PiiKseis Thurs- erly constructed ioe-house* at each To be Let. published every Amount of Capital Stock, all paid in, 00 point ordeliverv, day #2.00 per annum, in #2 26 $100,000 on or before the 15th of >hc NO. 98 EXCHANGE HOUSE morniu^,at advauce; Amount of Surplus, 139 189 00 day April next: ico not STREET, No. -

The Clinton Independent. VOL. XXVIII.-NO

The Clinton Independent. VOL XXVIL1.-NO. 3ft. ST JOHNS, MICH.. THURSDAY MORNING, JULY 13, I8»4. WHOLE NO-1447 Have your Watches. Clock* and Jew THE SCHOOL MEETING. IwtallaitM, itlOMi rr. elry repaired at Alliaon'a. the old reli Mamed —At the bride s home. Thurs A fair sized crowd congregated at The following officers were ens tailed We are prepared night and day to re able jeweler. day. July A, Thomas H. Corbett, of Ann Athletic Park Monday afternoon to In the respective chairs for the present spond to falls for services and gotida in Arbor, and Myita J. Wise, of St. T»i« N mI Mn»»r«ml)r All»»4«l awl Most Spectacle* and Kye Glasses at almoat witness the ball game between the crack the Undertaking line. Charges Always Johns, the Rev. Fredrick Hall officiat KSlnM ot Amf tor Maui V«« term in the Banner Lodge No. 139. D. as low as the lowest and satisfaction wholesale pnoea at Krepps. DeWitt A team of Hobbardston and the St. Johua of li.,1.0.0. F.. last Saturdav evening* ing. The regular annual school meeting guaranteed. Residence. No 1U5 Wight Co.'a. Kyea tested free. About fifty invited guests assembled nine. They proved easy prey for St. N 0.—Mrs. l Nr. street, store. 1« Clinton Ave. of district No. 4, Bingham, held at the V. U.-MIln___ M Wsn_ E. I. H ull . at the residedoe of Mr. and Mrs. J. G. Johns. Washburn got in with the first R. NcrrUn -Him-Mln 8. Pouch HOME lATTERN. -

Fearful Slaughter

TERMS $8.00 PER ANNUM IN ADVANCE. iiir. ruiuLAAii iiaili JritEss BUSINESS CARDS. REAL ESTATE. WANTS. We ‘landed Published the MISCELLANEOUS. in Liverpool all safe on every day (Sundays excepted) by __ THE Tijes- PRESS. dav tired to p. n;ght, death, rested all night at PORTLAND PlKlil^IlING CO., t. mcgowan. For Sal© or »© Let. WAITED. ESTA B LI SHED 183^7" the Adelphi, and came here yesterday after- TWO-STORY bouse situated on the northeast- At 109 Exchange St. Portland. t aces Wednesday morning, July 8, ’74 noon found Catholic Bookseller, A erly Dart of Peak’s Island, near Evergreen Coach, ; London full of people, every Bookbinder, Persons houses to sell on Congress 01 Terms: Eight Dollars a Year in advance. Tc and dealer in Landing, Portland Harbor. Apply to having hotel Cumberland St ream, or in any central part o being crowded, it Ascot race mail subscribers Seven Dollars a Yrear if in a on Sperm. being paid _jy4tf_J. STERLING, premises. ibis will hear of cash customers on Furniture, and ▼anee. M city, appli- Gossip Gleanings. week. Ycurs Pictures, Religious Articles, <*< ■ at ion to truly, A Three Story House for 82000’ 254 CONOKK4S JOSE PH REED, Machinery, Polishing, V :dr a THE MAINE~STATE PRESS NTKfiET, OCATED on Monument street; contains 13 Under Con*reMi» flail. J rooms; convenient for two families. Half Real We have before us a I Ei*tatc Agent. SO Middle Street. Kerosene, Harness Philadelphia paper [From the New York Tilbuio is Thursday 60 a Bibles cash. to H. loom, .J published every Mornino at $2 Sold on Instalments. -

P.0.Phm Tohosley

BLUE MARK NOTICE A blue mark around this notice will call your attention to your Boston Township address label, which shows that It's time to renew. LEDGER FORTY-THIRD YEAR LOWELL, MICHIGAN. THURSDAY, MAY 7, 1936 ISO. 51 Its Century Mark ENTRIES Give Employment Corinne Callier, 13 Along Main St. Downes Sale Drew Common Council First Settler Arrived Being a Collection of VariouH P.0.Phm State Tax Commissioner M. B. In Year 1836 Topics of Local and Succumbs Last Night Mcpherson will deliver the prin- Tremendous Crowd Acts General Interest Advises Speaker cipal address Saturday at the meeting of the Western Michi- Michigan now is approaching Corinne Callier died at 10:40 gan Round Table in Holland. A double lane of parked cars the lOKth anniversary of its ad- MOTHERS' DAY Wednesday night of this week in from St. Patrick's church, Par- mission in the rnion and with OTHERS' DAY, the sccond At Bd. ot Trade Blodgett hospital. She was 13 ToHosley After a month of captivity, nell. extending south along either Public Matters Ihe event also comes the centen- Sunday in May, has be- years old a week ago last Friday. Ralph Townsend's pet oppossum side of the road for two miles, nial anniversary (if many Miclii- M come one of the most wide- The tragedy is an aftermath of slipped his collar while being greeted the 10:30 a. m. opening Kan imunities. It will be re- ly observed of our popular oc- the collision last September 21, taken for a walk last week and Saturday of the auction sale of ni I I J n * cidled that in 1!)31 the village of casions.