Characterizing (Un)Moderated Textual Data in Social Systems

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Twitter, MySpace and Facebook Demystified - by Ted Janusz

Twitter, MySpace and Facebook Demystified - by Ted Janusz. Q: I hear people talking about Web sites like Twitter, MySpace and Facebook. What are they? And, even more importantly, should I be using them to promote oral implantology? First, you are not alone. A recent survey showed that 70 percent of American adults did not know enough about Twitter to even have an opinion. Tools like Twitter, Facebook and MySpace are components of something else you may have heard people talking about: Web 2.0, a popular term for Internet applications in which the users are actively engaged in creating and distributing Web content. Web 1.0 probably consisted of the Web sites you saw back in the late 90s, which were nothing more than fancy electronic brochures. Web 1.5 would have been something like Amazon or eBay, sites on which one could buy, sell and leave reviews. What Web 3.0 will look like is anybody's guess! Let's look specifically at the three applications that you mentioned. Tweet, Tweet. Twitter: "Twitter is like text messaging, only you can also do it from the Web," says Dan Tynan, the author of the Tynan on Technology blog. "Instead of sending a message to just one person, you can send it to thousands of people at once. You can choose to follow anyone's updates (called 'tweets') simply by clicking the Follow button on their profile, or vice-versa. The only rule is that each tweet can be no longer than 140 characters." Former CEO of Twitter Jack Dorsey once accepted an award for Twitter by saying, "We'd like to thank you in 140 characters or less.

GOOGLE+ PRO TIPS Google+ Allows You to Connect with Your Audience in a Plethora of New Ways

GOOGLE+ PRO TIPS Google+ allows you to connect with your audience in a plethora of new ways. It combines features from some of the more popular platforms into one (supporting GIFs like Tumblr and, before Facebook jumped on board, supporting hashtags like Twitter), but Google+ truly shines with Hangouts and Events. As part of our Google Apps for Education agreement, NYU students can easily opt in to Google+ using their NYU emails, creating a huge potential audience. KEEP YOUR POSTS TIDY While there isn’t a 140-character limit, keep posts brief, sharp, and to the point. Having more space than Twitter doesn’t mean you should post multiple paragraphs; use Twitter’s character limit as a good rule of thumb for how much your followers are used to consuming at a glance. When we have a lot to say, we create a Tumblr post, then share a link to it on Google+. REMOVE LINKS AFTER LINK PREVIEW Sharing a link on Google+ automatically creates a visually appealing link preview, which means you don’t need to keep the link in your text. There may be times when you don’t want a link preview, in which case you can remove the preview but keep the link. Whichever you prefer, don’t keep both. We keep link previews because they are more interactive than a simple text link. USE HASHTAGS, BUT BE STRATEGIC Hashtags can put you into already-existing conversations and make your posts easier to find by people following specific tags, such as incoming freshmen following (or constantly searching) the #NYU tag. OTHER RESOURCES Google+ Training Video (by Grovo); Learn More (by Google); How Google+ Works.

NAADAC Social Media and Ethical Dilemmas for Behavioral Health Clinicians, January 29, 2020

NAADAC SOCIAL MEDIA AND ETHICAL DILEMMAS FOR BEHAVIORAL HEALTH CLINICIANS, JANUARY 29, 2020. TRANSCRIPT PROVIDED BY: CAPTIONACCESS LLC, www.captionaccess.com * * * * * This is being provided in a rough-draft format. Communication Access Realtime Translation (CART) is provided in order to facilitate communication accessibility and may not be a totally verbatim record of the proceedings. * * * * * >> SPEAKER: The broadcast is now starting. All attendees are in listen-only mode. >> Hello, everyone, and welcome to today's webinar on social media and ethical dilemmas for behavioral health clinicians, presented by Michael G. Bricker. It is great that you can join us today. My name is Samson Teklemariam and I'm the director of training and professional development for NAADAC, the association for addiction professionals. I'll be the organizer for this training experience. And in an effort to continue the clinical, professional, and business development for the addiction professional, NAADAC is very fortunate to welcome webinar sponsors. As our field continues to grow and our responsibilities evolve, it is important to remain informed of best practices and resources supporting the addiction profession. So this webinar is sponsored by Brighter Vision, the worldwide leader in website design for therapists, counselors, and addiction professionals. Stay tuned for instructions on how to access the CE quiz towards the end of the webinar, immediately following a word from our sponsor. The permanent homepage for NAADAC webinars is www.naadac.org/webinars. Make sure to bookmark this webpage so you can stay up to date on the latest in education. Closed captioning is provided by Caption Access.

Effectiveness of Dismantling Strategies on Moderated Vs. Unmoderated

www.nature.com/scientificreports (open access). Effectiveness of dismantling strategies on moderated vs. unmoderated online social platforms. Oriol Artime, Valeria d’Andrea, Riccardo Gallotti, Pier Luigi Sacco & Manlio De Domenico. Online social networks are the perfect test bed to better understand large-scale human behavior in interacting contexts. Although they are broadly used and studied, little is known about how their terms of service and posting rules affect the way users interact and information spreads. Acknowledging the relation between network connectivity and functionality, we compare the robustness of two different online social platforms, Twitter and Gab, with respect to banning, or dismantling, strategies based on the recursive censor of users characterized by social prominence (degree) or intensity of inflammatory content (sentiment). We find that the moderated (Twitter) vs. unmoderated (Gab) character of the network is not a discriminating factor for intervention effectiveness. We find, however, that more complex strategies based upon the combination of topological and content features may be effective for network dismantling. Our results provide useful indications to design better strategies for countervailing the production and dissemination of anti-social content in online social platforms. Online social networks provide a rich laboratory for the analysis of large-scale social interaction and of their social effects [1–4]. They facilitate the inclusive engagement of new actors by removing most barriers to participate in content-sharing platforms characteristic of the pre-digital era [5]. For this reason, they can be regarded as a social arena for public debate and opinion formation, with potentially positive effects on individual and collective empowerment [6].
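The degree-based strategy named in this abstract (repeatedly banning the most connected user and re-evaluating the network) can be illustrated with a minimal sketch. This is not the authors' implementation: the function names, the toy graph, and the use of largest-connected-component size as the robustness measure are all assumptions made here for illustration.

```python
from collections import deque


def largest_component_size(adj):
    """Return the size of the largest connected component (undirected graph)."""
    seen = set()
    best = 0
    for start in adj:
        if start in seen:
            continue
        seen.add(start)
        queue = deque([start])
        size = 0
        while queue:  # breadth-first search over one component
            node = queue.popleft()
            size += 1
            for nbr in adj[node]:
                if nbr not in seen:
                    seen.add(nbr)
                    queue.append(nbr)
        best = max(best, size)
    return best


def dismantle_by_degree(adj, n_removals):
    """Remove the current highest-degree node, n_removals times.

    Degrees are recomputed after every removal. Returns the removed nodes
    and the largest-component size measured after each removal.
    """
    # Work on a copy so the caller's graph is left untouched.
    graph = {node: set(nbrs) for node, nbrs in adj.items()}
    removed, sizes = [], []
    for _ in range(min(n_removals, len(graph))):
        # Ties are broken by node name so the result is deterministic.
        target = max(sorted(graph), key=lambda n: len(graph[n]))
        for nbr in graph.pop(target):
            graph[nbr].discard(target)
        removed.append(target)
        sizes.append(largest_component_size(graph))
    return removed, sizes


# Hypothetical toy network: a hub "a" tied to four users, plus one extra tie b-c.
toy = {
    "a": {"b", "c", "d", "e"},
    "b": {"a", "c"},
    "c": {"a", "b"},
    "d": {"a"},
    "e": {"a"},
}
removed, sizes = dismantle_by_degree(toy, 2)
```

A sentiment-based variant would, under the same assumptions, only change the `key` function so that it ranks users by a per-user content score instead of by degree.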

What Is Gab? A Bastion of Free Speech or an Alt-Right Echo Chamber?

What is Gab? A Bastion of Free Speech or an Alt-Right Echo Chamber? Savvas Zannettou (Cyprus University of Technology), Barry Bradlyn (Princeton Center for Theoretical Science), Emiliano De Cristofaro (University College London), Haewoon Kwak (Qatar Computing Research Institute & Hamad Bin Khalifa University), Michael Sirivianos (Cyprus University of Technology), Gianluca Stringhini (University College London), Jeremy Blackburn (University of Alabama at Birmingham). ABSTRACT Over the past few years, a number of new “fringe” communities, like 4chan or certain subreddits, have gained traction on the Web at a rapid pace. However, more often than not, little is known about how they evolve or what kind of activities they attract, even though recent research has shown that they influence how false information reaches mainstream communities. This motivates the need to monitor these communities and analyze their impact on the Web’s information ecosystem. In August 2016, a new social network called Gab was created as an alternative to Twitter. ACM Reference Format: Savvas Zannettou, Barry Bradlyn, Emiliano De Cristofaro, Haewoon Kwak, Michael Sirivianos, Gianluca Stringhini, and Jeremy Blackburn. 2018. What is Gab? A Bastion of Free Speech or an Alt-Right Echo Chamber?. In WWW ’18 Companion: The 2018 Web Conference Companion, April 23–27, 2018, Lyon, France. ACM, New York, NY, USA, 8 pages. https://doi.org/10.1145/3184558.3191531 1 INTRODUCTION The Web’s information ecosystem is composed of multiple com-

Privacy and Security Issues Associated with Mobile Dating Applications

2018 Proceedings of the Conference on Information Systems Applied Research, ISSN: 2167-1508, Norfolk, Virginia, v11 n 4823. Privacy and Security Issues Associated with Mobile Dating Applications. Dr. Darren R. Hayes, Pace University, Sapienza Università di Roma; Christopher Snow, Pace University, New York, NY. Abstract Mobile dating application (app) usage has grown exponentially, while becoming more effective at connecting people romantically. Given the sensitive nature of the information users are required to provide, to effectively match a couple, our research sought to understand if app permissions and privacy policies were enough for a user to adequately determine the amount of personal information being collected and shared. Based on a static and dynamic analysis of three popular dating apps for Android and iPhone, our conclusion is that dating app developers are collecting and sharing personal information that extends well beyond publicly stated privacy policies, which tend to be vague at best. Furthermore, the security protocols, utilized by dating app developers, could be deemed relatively inadequate, thereby posing potential risk to both consumers and organizations. While social media companies have been the primary focus for discussions surrounding the implementation of the General Data Protection Regulation (GDPR) in the European Union, our research findings indicate that third-party analytical companies and big data gatherers need to be more closely examined for potential violations. Keywords: GDPR, Privacy, Mobile Advertising, Big Data, Mobile Forensics, Android 1. INTRODUCTION There were 3.6 million applications (“apps”) on Google Play and 2.1 million iOS applications on Apple’s App Store in 2017 and a mere 8.5% of [...] two years, from 10% to 27% (Smith and Anderson, 2016). Therefore, it is important for individuals to clearly understand the potential security risks and privacy issues associated with dating apps.

“Trailer Trash” Stigma and Belonging in Florida Mobile Home Parks

Social Inclusion (ISSN: 2183–2803) 2020, Volume 8, Issue 1, Pages 66–75 DOI: 10.17645/si.v8i1.2391 Article “Trailer Trash” Stigma and Belonging in Florida Mobile Home Parks Margarethe Kusenbach, Department of Sociology, University of South Florida, Tampa, FL 33620, USA. Submitted: 1 August 2019 | Accepted: 4 October 2019 | Published: 27 February 2020 Abstract In the United States, residents of mobile homes and mobile home communities are faced with cultural stigmatization regarding their places of living. While common, the “trailer trash” stigma, an example of both housing and neighborhood/territorial stigma, has been understudied in contemporary research. Through a range of discursive strategies, many subgroups within this larger population manage to successfully distance themselves from the stigma and thereby render it inconsequential (Kusenbach, 2009). But what about those residents (typically white, poor, and occasionally lacking in stability) who do not have the necessary resources to accomplish this? This article examines three typical responses by low-income mobile home residents (here called resisting, downplaying, and perpetuating), leading to different outcomes regarding residents’ sense of community belonging. The article is based on the analysis of over 150 qualitative interviews with mobile home park residents conducted in West Central Florida between 2005 and 2010. Keywords belonging; Florida; housing; identity; mobile homes; stigmatization; territorial stigma Issue This article is part of the issue “New Research on Housing and Territorial Stigma” edited by Margarethe Kusenbach (University of South Florida, USA) and Peer Smets (Vrije Universiteit Amsterdam, the Netherlands). © 2020 by the author; licensee Cogitatio (Lisbon, Portugal). This article is licensed under a Creative Commons Attribution 4.0 International License (CC BY).

Marketing and Communications for Artists Boost Your Social Media Presence

MARKETING AND COMMUNICATIONS FOR ARTISTS BOOST YOUR SOCIAL MEDIA PRESENCE QUESTIONS FOR KIANGA ELLIS: INTERNET & SOCIAL MEDIA In 2012, social media remains an evolving terrain in which artists and organizations must determine which platforms, levels of participation, and tracking methods are most effective and sustainable for their own needs. To inform this process, LMCC invited six artists and arts professionals effectively using social media to share their approaches, successes, and lessons learned. LOWER MANHATTAN CULTURAL COUNCIL (LMCC): Briefly describe your work as an artist and any other roles or affiliations you have as an arts professional. KIANGA ELLIS (KE): Following a legal career on Wall Street in derivatives and commodities sales and trading, I have spent the past few years as a consultant and producer of art projects. My expertise is in patron and audience development, business strategy and communications, with a special focus on social media and Internet marketing. I have worked with internationally recognized institutions such as The Museum of Modern Art, The Metropolitan Museum of Art, Sotheby’s, SITE Santa Fe, and numerous galleries and international art fairs. I am a published author and invited speaker on the topic of how the Internet is changing the business of art. In 2011, after several months meeting with artists in their studios and recording videos of our conversations, I began exhibiting and selling the work of emerging and international artists through Kianga Ellis Projects, an exhibition program that hosts conversations about the studio practice and work of invited contemporary artists. I launched the project in Santa Fe, New Mexico; Kianga Ellis Projects is now located in an artist loft building in Bedford Stuyvesant, Brooklyn.

Here's Your Grab-And-Go One-Sheeter for All Things Twitter Ads

Agency Checklist. Here's your grab-and-go one-sheeter for all things Twitter Ads. Use Twitter to elevate your next product or feature launch, and to connect with current events and conversations. Identify your client’s specific goals and metrics, and contact our sales team to get personalized information on performance industry benchmarks. Apply for an insertion order if you’re planning to spend over $5,000 (or local currency equivalent). Set up multi-user login to ensure you have all the right access to your clients’ ad accounts. We recommend that clients select “Account Administrator” and check the “Can compose Promotable Tweets” option for agencies to access all the right information. Open your Twitter Ads account a few weeks before you need to run ads to allow time for approval, and review our ad policies for industry-specific rules and guidance. Keep your Tweets clear and concise, with 1-2 hashtags if relevant and a strong CTA. Add rich media, especially short videos (15 seconds or less with a sound-off strategy) whenever possible. Consider investing in premium products (Twitter Amplify and Twitter Takeover) for greater impact. Set up conversion tracking and mobile measurement partners (if applicable), and learn how to navigate the different tools in Twitter Ads Manager. Review our targeting options, and choose which ones are right for you to reach your audience. Understand the metrics and data available to you on analytics.twitter.com and through advanced measurement studies.

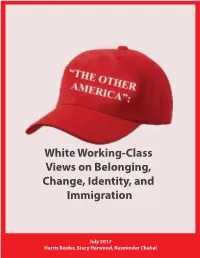

White Working-Class Views on Belonging, Change, Identity, and Immigration

White Working-Class Views on Belonging, Change, Identity, and Immigration. July 2017. Harris Beider, Stacy Harwood, Kusminder Chahal. Acknowledgments: We thank all research participants for your enthusiastic participation in this project. We owe much gratitude to our two fabulous research assistants, Rachael Wilson and Erika McLitus from the University of Illinois, who helped us code all the transcripts, and to Efadul Huq, also from the University of Illinois, for the design and layout of the final report. Thank you to Open Society Foundations, US Programs for funding this study. About the Authors: Harris Beider is Professor in Community Cohesion at Coventry University and a Visiting Professor in the School of International and Public Affairs at Columbia University. Stacy Anne Harwood is an Associate Professor in the Department of Urban & Regional Planning at the University of Illinois, Urbana-Champaign. Kusminder Chahal is a Research Associate for the Centre for Trust, Peace and Social Relations at Coventry University. For more information, contact: Dr. Harris Beider, Centre for Trust, Peace and Social Relations, Coventry University, Priory Street, Coventry, UK, CV1 5FB; Dr. Stacy Anne Harwood, Department of Urban & Regional Planning, University of Illinois at Urbana-Champaign, 111 Temple Buell Hall, 611 E. Lorado Taft Drive, Champaign, IL, USA, 61820. How to Cite this Report: Beider, H., Harwood, S., & Chahal, K. (2017). “The Other America”: White working-class views on belonging, change, identity, and immigration. Centre for Trust, Peace and Social Relations, Coventry University, UK. ISBN: 978-1-84600-077-5 Photography credits: except when noted all photographs were taken by the authors of this report.

Borat in an Age of Postironic Deconstruction Antonio López

Taboo: The Journal of Culture and Education, Volume 11, Issue 1, Article 10, December 2007. Borat in an Age of Postironic Deconstruction. Antonio López. Follow this and additional works at: https://digitalcommons.lsu.edu/taboo Recommended Citation: López, A. (2017). Borat in an Age of Postironic Deconstruction. Taboo: The Journal of Culture and Education, 11 (1). https://doi.org/10.31390/taboo.11.1.10 The power of holding two contradictory beliefs in one’s mind simultaneously, and accepting both of them. ... To tell deliberate lies while genuinely believing in them, to forget any fact that has become inconvenient, and then, when it becomes necessary again, to draw it back from oblivion for just so long as it is needed, to deny the existence of objective reality and all the while to take account of the reality which one denies – all this is indispensably necessary. Even in using the word doublethink it is necessary to exercise doublethink. For by using the word one admits that one is tampering with reality; by a fresh act of doublethink one erases this knowledge; and so on indefinitely, with the lie always one leap ahead of the truth. (George Orwell, 1984 [1]) I will speak to you in plain, simple English. And that brings us to tonight’s word: ‘truthiness.’ Now I’m sure some of the ‘word police,’ the ‘wordinistas’ over at Webster’s are gonna say, ‘hey, that’s not really a word.’ Well, anyone who knows me knows I’m no fan of dictionaries or reference books.

Sex and Difference in the Jewish American Family: Incest Narratives in 1990S Literary and Pop Culture

University of Massachusetts Amherst ScholarWorks@UMass Amherst Doctoral Dissertations Dissertations and Theses March 2018 Sex and Difference in the Jewish American Family: Incest Narratives in 1990s Literary and Pop Culture Eli W. Bromberg University of Massachusetts Amherst Follow this and additional works at: https://scholarworks.umass.edu/dissertations_2 Part of the American Studies Commons Recommended Citation Bromberg, Eli W., "Sex and Difference in the Jewish American Family: Incest Narratives in 1990s Literary and Pop Culture" (2018). Doctoral Dissertations. 1156. https://doi.org/10.7275/11176350.0 https://scholarworks.umass.edu/dissertations_2/1156 This Open Access Dissertation is brought to you for free and open access by the Dissertations and Theses at ScholarWorks@UMass Amherst. It has been accepted for inclusion in Doctoral Dissertations by an authorized administrator of ScholarWorks@UMass Amherst. For more information, please contact [email protected]. SEX AND DIFFERENCE IN THE JEWISH AMERICAN FAMILY: INCEST NARRATIVES IN 1990S LITERARY AND POP CULTURE A Dissertation Presented by ELI WOLF BROMBERG Submitted to the Graduate School of the University of Massachusetts Amherst in partial fulfillment of the requirements for the degree of DOCTOR OF PHILOSOPHY February 2018 Department of English Concentration: American Studies © Copyright by Eli Bromberg 2018 All Rights Reserved SEX AND DIFFERENCE IN THE JEWISH AMERICAN FAMILY: INCEST NARRATIVES IN 1990S LITERARY AND POP CULTURE A Dissertation Presented By ELI W. BROMBERG