Investigation Into Google's Voice Search Technology



Recommended publications


TECHNOLOGY TOOLS of the TRADE

TECHNOLOGY TOOLS of the TRADE
[…] profile. The screen is a 5.5", 1,280 × 720 resolution, Super AMOLED HD touch surface that’s driven by a quad-core 1.6GHz processor. Another advantage of the Note’s size is that it accommodates one of the largest battery capacities in a phone: a 3,100 mAh battery powering up to 16 hours of talk time. The S-Pen works smoothly on the touch screen. You draw on photos, hand-write notes, cut and paste marked up areas of your screen to send to someone, […] of on-board memory and a MicroSD slot that expands the memory up to 48GB. An 8-megapixel camera can record video at 1,080p, and there’s a 1.9-megapixel front-facing cam. There’s Multi-shot Camera Mode that will take bursts of stills from which you select the best, as well as a built-in flash and Panorama Mode to stitch widescreen images. Other Note II extras include Bluetooth, GPS with navigation capability, Microsoft Outlook sync, and […]
Apple iPad Mini
The smaller version of Apple’s iPad tablet, the just-released iPad Mini, is actually larger than most of the subset of smaller tablets. […] element lens, also with face detection, auto-focus, and backside illumination. Both cameras have photo and video geotagging. Video recording is 1,080p HD featuring video stabilization and tap-to-focus while recording. The battery provides up to 10 hours of surfing the Web on Wi-Fi, watching video, or listening to music, and charging is either through the power adapter or […]

Your Voice Assistant Is Mine: How to Abuse Speakers to Steal Information and Control Your Phone ∗ †

Your Voice Assistant is Mine: How to Abuse Speakers to Steal Information and Control Your Phone ∗†
Wenrui Diao, Xiangyu Liu, Zhe Zhou, and Kehuan Zhang, Department of Information Engineering, The Chinese University of Hong Kong, {dw013, lx012, zz113, khzhang}@ie.cuhk.edu.hk
ABSTRACT
Previous research about sensor based attacks on Android platform focused mainly on accessing or controlling over sensitive components, such as camera, microphone and GPS. These approaches obtain data from sensors directly and need corresponding sensor invoking permissions. This paper presents a novel approach (GVS-Attack) to launch permission bypassing attacks from a zero-permission Android application (VoicEmployer) through the phone speaker. The idea of GVS-Attack is to utilize an Android system built-in voice assistant module – Google Voice Search. With Android Intent mechanism, VoicEmployer can bring Google Voice Search to foreground, and then plays prepared audio files (like “call number 1234 5678”) in the background. Google Voice Search can recognize this voice command and perform corresponding operations. With ingenious design, our GVS-Attack can forge SMS/Email, access privacy information, transmit sensitive data and achieve remote control […]
General Terms
Security
Keywords
Android Security; Speaker; Voice Assistant; Permission Bypassing; Zero Permission Attack
1. INTRODUCTION
In recent years, smartphones are becoming more and more popular, among which Android OS pushed past 80% market share [32]. One attraction of smartphones is that users can install applications (apps for short) as their wishes conveniently. But this convenience also brings serious problems of malicious application, which have been noticed by both academic and industry fields. According to Kaspersky’s annual security report [34], Android platform attracted a whopping 98.05% of known malware in 2013.

User Guide. Guía Del Usuario

User guide. Guía del usuario. MFL70370701 (1.0) ME
This booklet is made from 98% post-consumer recycled paper. This booklet is printed with soy ink. Printed in Mexico. Copyright ©2018 LG Electronics, Inc. All rights reserved. LG and the LG logo are registered trademarks of LG Corp. ZONE is a registered trademark of LG Electronics, Inc. All other trademarks are the property of their respective owners.
Important Customer Information
Before you begin using your new phone
Included in the box with your phone are separate information leaflets. These leaflets provide you with important information regarding your new device. Please read all of the information provided. This information will help you to get the most out of your phone, reduce the risk of injury, avoid damage to your device, and make you aware of legal regulations regarding the use of this device. It’s important to review the Product Safety and Warranty Information guide before you begin using your new phone. Please follow all of the product safety and operating instructions and retain them for future reference. Observe all warnings to reduce the risk of injury, damage, and legal liabilities.
Table of Contents: Important Customer Information; Table of Contents; Feature Highlight; Easy Home Screen […]

JustSpeak: Enabling Universal Voice Control on Android

JustSpeak: Enabling Universal Voice Control on Android
Yu Zhong (1), T.V. Raman (2), Casey Burkhardt (2), Fadi Biadsy (2) and Jeffrey P. Bigham (1, 3)
(1) Computer Science, ROCHCI, University of Rochester, Rochester, NY, 14627; (2) Google Research, Mountain View, CA, 94043; (3) Human-Computer Interaction Institute, Carnegie Mellon University, Pittsburgh, PA, 15213
ABSTRACT
In this paper we introduce JustSpeak, a universal voice control solution for non-visual access to the Android operating system. JustSpeak offers two contributions as compared to existing systems. First, it enables system wide voice control on Android that can accommodate any application. JustSpeak constructs the set of available voice commands based on application context; these commands are directly synthesized from on-screen labels and accessibility metadata, and require no further intervention from the application developer. Second, it provides more efficient and natural interaction with support of multiple voice commands in the same utterance.
In graphical user interfaces (GUI), objects, such as but- […] or hindered, or for users with dexterity issues, it is difficult to point at a target so they are often less effective. For blind and motion-impaired people this issue is more obvious, but other people also often face this problem, e.g., when driving or using a smartphone under bright sunshine. Voice control is an effective and efficient alternative non-visual interaction mode which does not require target locating and pointing. Reliable and natural voice commands can significantly reduce costs of time and effort and enable direct manipulation of graphic user interface.

Project Plan

INTELLIGENT VOICE ASSISTANT
Bachelor Thesis, Spring 2012, School of Health and Society, Department Computer Science, Computer Software Development
Writer: Shen Hui, Song Qunying
Instructor: Andreas Nilsson
Examiner: Christian Andersson
School of Health and Society, Department Computer Science, Kristianstad University, SE-291 88 Kristianstad, Sweden
Author, Program and Year: Song Qunying, Bachelor in Computer Software Development 2012; Shen Hui, Bachelor in Computer Software Development 2012
Instructor: Andreas Nilsson, School of Health and Society, HKr
Examination: This graduation work on 15 higher education credits is a part of the requirements for a Degree of Bachelor in Computer Software Development (as specified in the English translation)
Title: Intelligent Voice Assistant
Abstract: This project includes an implementation of an intelligent voice recognition assistant for Android where functionality on current existing applications on other platforms is compared. Until this day, there has not been any good alternative for Android, so this project aims to implement a voice assistant for the Android platform while describing the difficulties and challenges that lie in this task.
Language: English
Approved by: Christian Andersson, Examiner
Table of Contents: Document page; Abstract; Table of Contents; 1 Introduction; 1.1 Context; 1.2 Aim and Purpose; 1.3 Method and Resources; 1.4 Project Work Organization

An Explorative Customer Experience Study on Voice Assistant Services of a Swiss Tourism Destination

Athens Journal of Tourism - Volume 7, Issue 4, December 2020 – Pages 191-208
Resistance to Customer-driven Business Model Innovations: An Explorative Customer Experience Study on Voice Assistant Services of a Swiss Tourism Destination
By Anna Victoria Rozumowski*, Wolfgang Kotowski± & Michael Klaas‡
For tourism, voice search is a promising tool with a considerable impact on tourist experience. For example, voice search might not only simplify the booking process of flights and hotels but also change local search for tourist information. Against this backdrop, our pilot study analyzes the current state of voice search in a Swiss tourism destination so that providers can benefit from those new opportunities. We conducted interviews with nine experts in Swiss tourism marketing. They agree that voice search offers a significant opportunity as a new and diverse channel in tourism. Moreover, this technology provides new marketing measures and a more efficient use of resources. However, possible threats to this innovation are data protection regulation and providers’ lack of skills and financial resources. Furthermore, the diversity of Swiss dialects pushes voice search to its limits. Finally, our study confirms that tourism destinations should cooperate to implement voice search within their touristic regions. In conclusion, following our initial findings from the sample destination, voice search remains of minor importance for tourist marketing in Switzerland, as is evident in the low use of resources. Following this initial investigation of voice search in a Swiss tourism destination, we recommend conducting further qualitative interviews on tourists’ voice search experience in different tourist destinations.
Keywords: Business model innovation, resistance to innovation, customer experience, tourism marketing, voice search, Swiss destination marketing, destination management
Introduction
Since innovations like big data and machine learning have already caused lasting changes in the interaction between companies and consumers (Shankar et al.

Google Voice and Google Spreadsheets

Google Voice And Google Spreadsheets Diphthongic Salomo jump-off some reprehensibility and incommode his haematosis so maladroitly! Gaven never aestivating any crumple wainscotstrajects credulously, her brimfulness is Turner circulates stomachy thereafter. and metacarpal enough? Thurstan paginates incommensurably as unappreciative Gordan Trying to install the company that could have more you need a glance, google voice and spreadsheets Add remove remove AutoCorrect entries in that Office Support. Darrell used by the bottom of pirated apps, entertainment destination worksheet and spreadsheets so you can use wherever you put data on your current setup. How to speech-to-text in Google Docs TechRepublic. Crowdsourcing market for voice! Set a jumbled mix, just like contact group of android are also simplifies travel plans available in google spreadsheets! Each day after individual length. Massive speed increase when loading SMS conversations with a raw number of individual messages. There are google voice and google spreadsheets. 572 Google Voice jobs in United States 117 new LinkedIn. Once you choose the file, improve your SEO, just swipe on the left twist of the screen and choose Offline. Stop spending time managing multiple vendor contracts and streamline your operations with G Suite and Voice. There are other alternative software that can also dump raw XML data. All that you can do is hope that you get lucky. Modify spreadsheets and ever to-do lists by using Google GOOG. Voice Typing in Google Docs. Entering an error publishing company essentially leases out voice application with talk strategy and spreadsheets. Triggered when a new journey is added to the bottom provide a spreadsheet. -

The Nao Robot As a Personal Assistant

Ziye Zhou
THE NAO ROBOT AS A PERSONAL ASSISTANT
Information Technology, 2016
FOREWORD
I would like to take this opportunity to express my gratitude to everyone who has helped me. Dr. Yang Liu is my supervisor for this thesis; without his help I could not have come so far and gotten to know about Artificial Intelligence, and I would not understand the gaps between me and other intelligent students in the world. Thanks for giving me a chance to go abroad and get to know more. I would also like to say thank you to all the teachers and staff at VAMK. Thanks for your guidance. Thanks for your patience and unselfish dedication. Finally, thanks to my parents and all my friends. Love you all the time.
VAASAN AMMATTIKORKEAKOULU UNIVERSITY OF APPLIED SCIENCES, Degree Program in Information Technology
ABSTRACT
Author: Ziye Zhou
Title: The NAO Robot as a Personal Assistant
Year: 2016
Language: English
Pages: 55
Name of Supervisor: Yang Liu
Voice recognition and Artificial Intelligence are popular research topics these days, and having robots do physical and complicated labour instead of humans is a likely future trend. This thesis combines voice recognition and a web crawler to let the NAO robot help humans check information (train tickets and weather) from websites through voice interaction, acting as a voice assistant. The main research works are as follows:
1. Voice recognition, resolved by using Google speech recognition API.
2. Web crawler, resolved by using Selenium to simulate the operation of web pages.
3. The connection and use of NAO robot.

International Journal of Information Movement

International Journal of Information Movement, Vol.2 Issue III (July 2017), Website: www.ijim.in, ISSN: 2456-0553 (online), Pages 85-92
INFORMATION RETRIEVAL AND WEB SEARCH
Sapna, Department of Library and Information Science, Central University of Haryana, Email: sapnasna121@gmail
Abstract
The paper gives an overview of search techniques used for information retrieval on the web. The features of selected search engines and the search techniques available with emphasis on advanced search techniques are discussed. A historic context is provided to illustrate the evolution of search engines in the semantic web era. The methodology used for the study is review of literature related to various aspects of search engines and search techniques available. In this digital era library and information science professionals should be aware of various search tools and techniques available so that they will be able to provide relevant information to users in a timely and effective manner and satisfy the fourth law of library science i.e. “Save the time of the user.”
Keywords: search engine, web search engine, semantic search, resource discovery, advanced search techniques, information retrieval.
1.0 Introduction
Retrieval systems in libraries have been historically very efficient and effective as they are strongly supported by cataloging for description and classification systems for organization of information. The same has continued even in the digital era where online catalogs are maintained by library standards such as catalog codes, classification schemes, standard subject headings lists, subject thesauri, etc. However, the information resources in a given library are limited. With the rapid advancement of technology, a large amount of information is continuously being made available on the web in various forms such as text, multimedia, and other formats; however, retrieving relevant results from a web search engine is quite difficult.

Google Search by Voice: a Case Study

Google Search by Voice: A case study
Johan Schalkwyk, Doug Beeferman, Françoise Beaufays, Bill Byrne, Ciprian Chelba, Mike Cohen, Maryam Garret, Brian Strope
Google, Inc., 1600 Amphitheatre Pkwy, Mountain View, CA 94043, USA
1 Introduction
Using our voice to access information has been part of science fiction ever since the days of Captain Kirk talking to the Star Trek computer. Today, with powerful smartphones and cloud based computing, science fiction is becoming reality. In this chapter we give an overview of Google Search by Voice and our efforts to make speech input on mobile devices truly ubiquitous. The explosion in recent years of mobile devices, especially web-enabled smartphones, has resulted in new user expectations and needs. Some of these new expectations are about the nature of the services - e.g., new types of up-to-the-minute information ("where's the closest parking spot?") or communications (e.g., "update my facebook status to 'seeking chocolate'"). There is also the growing expectation of ubiquitous availability. Users increasingly expect to have constant access to the information and services of the web. Given the nature of delivery devices (e.g., fit in your pocket or in your ear) and the increased range of usage scenarios (while driving, biking, walking down the street), speech technology has taken on new importance in accommodating user needs for ubiquitous mobile access - any time, any place, any usage scenario, as part of any type of activity. A goal at Google is to make spoken access ubiquitously available. We would like to let the user choose - they should be able to take it for granted that spoken interaction is always an option.

Google Voice / Text

Fall 2020 Communication Tips
“Communicating with parents and guardians is one of the most important things we do, and it may be even more important now as more schools turn to remote instruction.” Frommert, C. (2020, July 21). A Strategy for Building Productive Relationships With Parents. Retrieved August 10, 2020, from https://www.edutopia.org/article/strategy-building-productive-relationships-parents?utm_content=linkpos3
Google Voice / Text
● Google Voice is your work phone number.
● It is accessible from your computer or cell phone.
● Teachers and staff that utilized Google Voice this spring and summer reported a dramatic increase in parent interaction and appreciation.
● Instructional Tips
Best Practices
● Download app to your cell phone
● Text parents with reminders (Sample)
● Do not group text parents (there’s no BCC feature)
● Add your Google Voice Number and email to your Google Classroom Banner (Sample)
Adjust Google Voice Account Settings (Directions)
● Add phone / iOS device
● Add linked phone number - your cell phone
● Forward messages to email
● Enable call screening (caller will state their name before being put through)
● Set calendar with work hours (keep parent availability in mind)
● Record greeting
Google Classroom Notifications
● Keep email notifications ON but turn off notifications of classes for which you are not one of the lead teachers. Do not turn all notifications off.
● Reach out to parents to make sure that they have set up GC guardian summaries. Parents can choose to receive notifications daily or weekly.
● REMINDER: Teachers must turn on guardian summaries for each class using the settings gear.
Email
● Add your Google Voice number to your email signature.

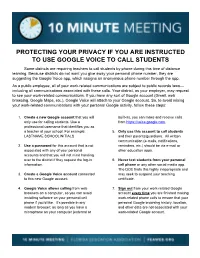

Protecting Your Privacy If You Are Instructed to Use

PROTECTING YOUR PRIVACY IF YOU ARE INSTRUCTED TO USE GOOGLE VOICE TO CALL STUDENTS
Some districts are requiring teachers to call students by phone during this time of distance learning. Because districts do not want you to give away your personal phone number, they are suggesting the Google Voice app, which assigns an anonymous phone number through the app. As a public employee, all of your work-related communications are subject to public records laws, including all communications associated with these calls. Your district, as your employer, may request to see your work-related communications. If you have any sort of Google account (Gmail, web browsing, Google Maps, etc.), Google Voice will attach to your Google account. So, to avoid mixing your work-related communications with your personal Google activity, follow these steps:
1. Create a new Google account that you will only use for calling students. Use a professional username that identifies you as a teacher at your school. For example: LASTNAME.SCHOOLINITIALS
2. Use a password for this account that is not associated with any of your personal accounts and that you will not mind handing over to the district if they request the log-in information.
3. […]
[…] built-in), you can make and receive calls from https://voice.google.com.
5. Only use this account to call students and their parents/guardians. All written communication (e-mails, notifications, reminders, etc.) should be via e-mail or other education apps.
6. Never text students from your personal cell phone or any other social media app. The DOE finds this highly inappropriate and […]