Enhancing the Visualization of Percussion Gestures by Virtual Character Animation

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Proceedings of the 20th International Seminar of the ISME Commission on Music in Special Education, Music Therapy, and Music Medicine

Proceedings of the 20th International Seminar of the ISME Commission on Music in Special Education, Music Therapy, and Music Medicine Faculdade de Artes do Paraná – FAP Curitiba, Brazil 17-18 July 2014 Editor Melita Belgrave ©International Society for Music Education 2014 www.isme.org All abstracts presented at the 2014 ISME World Conference in Porto Alegre, Brazil, were peer refereed before inclusion in the Conference program. In addition, completed papers were fully (blind) refereed by a panel of international authorities before inclusion in the Seminar Proceedings. Editorial Board Melita Belgrave, Editor Jessie Chen Helen Farrell Markku Kaikkonen Bo Nilsson Lyn Schraer-Joiner National Library of Australia Cataloguing-in-Publication Author: ISME Commission on Music in Special Education, Music Therapy, and Music Medicine International Seminar (20th: 2014: Curitiba, Brazil) Title: Proceedings of the 20th International Seminar of the Commission on Music in Special Education, Music Therapy, and Music Medicine, Curitiba, Brazil [electronic resource] / ISBN: 978-0-9942055-3-7 (ebook) Notes: Includes bibliographical references. Subjects: Music--Congresses. Music in education--Congresses. ISME Commission on Music in Special Education, Music Therapy, and Music Medicine Dewey Number: 780.7 The Conference Organizing Committee and ISME are grateful to the following people who provided expert, independent advice and who acted as referees for selecting papers and workshops for presentation at the 2014 ISME World Conference: Commissioners 2012-2014 -

Educational Materials to Go with the Video & Extra Materials For

Educational Materials to go with the Video & extra materials for preparation and extension of the concert Prepared by Valerie Trollinger ([email protected], or [email protected] ) October 2012 Discovery Concert Series The Science of Sound Reading Symphony Orchestra Discovery Concert Series October, 2012 The Thrill of Resonance (Grades 4 , and above; Grade 3 with help) Teacher Quick-Start Guide The video is the second one in our sequence about the Science of Sound. There are three (3) ways to use this series at this point: 1) For students to get the full benefit of the science behind the sounds, then viewing the first video “The Science of Sound” is strongly recommended. a. Show the first video in the sequence (The Science of Sound) with the accompanying worksheet, go over the worksheet as needed. When the students are familiar with the meaning of the words Frequency, Amplitude, Time, Dynamics, and the rest of the terms on the worksheet, then go on to the second video (The Thrill of Resonance) with that accompanying worksheet. From there you can continue with activities that are relevant to your curriculum. There are a lot of other activities that go with both of these videos, addressing STEM technology ( adding the arts ) and building on creative thinking, problem solving, critical thinking, reading, writing, and even engineering. 2) If you don’t have time for the first video at this point and want to only show the second-- a) The students still need to be familiar with the terms Frequency, Amplitude, and Time. Definitions will follow in the teacher pack. -

The Thumper C Docx

Project Number: MQPWZM-RB08 MQPECC-B708 The Thumper Robotic Bass A Major Qualifying Project Report Submitted to the Faculty Of the Worcester Polytechnic Institute In partial fulfillment of the requirements for the Degree of Bachelor of Science By Matt Brown, Electrical and Computer Engineering, Class of 2008; Barry Kosherick, Electrical and Computer Engineering, Class of 2009; Adam Teti, Mechanical Engineering, Class of 2008 April 18, 2008 Prof. William R. Michalson, Co-Advisor; Prof. Eben C. Cobb, Co-Advisor Abstract The Thumper Robotic Bass MQP is an attempt at creating a self-playing bass guitar that responds to MIDI input as well as input from an electric guitar to provide accompaniment. By bringing together a team of electrical and mechanical engineers, the project was structured to emulate an engineering design as encountered in industry. Table of Contents: Introduction; Problem Statement; Background; Economics and Target Market -

Irregular Incurve Xiaoyang Feng Media Researcher, NYU ITP 721 Broadway, 4th Floor New York, NY 10003 503 953 4862 [email protected]

Proceedings of the 2010 Conference on New Interfaces for Musical Expression (NIME 2010), Sydney, Australia Irregular Incurve Xiaoyang Feng Media Researcher, NYU ITP 721 Broadway, 4th floor New York, NY 10003 503 953 4862 [email protected] developed based on the prototype of traditional instruments only for people. Unlike traditional instruments, Irregular Incurve is designed as an acoustic instrument played by a robot. Twelve strings of same thickness but different lengths are laid out on a sound box. The robot operates twelve corresponding guitar picks to pluck each string. The musical scale of this instrument can be potentially expanded through connecting it with more robotic musical instruments of the same type. The design philosophy behind Irregular Incurve is to visualize and enhance the aesthetic expression of the music generating process. The designer believes that the enjoyment of music appreciation does not only happen at the moment when music reaches the Figure 1. First Prototype without control interface audience, but is also stimulated by watching the visual interactions between the musician and the instrument. Irregular Incurve attempts to enlarge this imagination about music by ABSTRACT visually expressing the beauty of mechanical movements. Irregular Incurve is a MIDI controllable robotic string instrument. The twelve independent string-units compose the complete musical scale of 12 units. Each string can be plucked by a motor control guitar pick. A MIDI keyboard is attached to the instrument and serves as an interface for real-time interactions between the instrument and the audience. Irregular Incurve can also play pre- programmed music by itself. This paper presents the design concept and the technical solutions to realizing the functionality of Irregular Incurve. -

Real Time Music Visualization: a Study in the Visual Extension of Music

REAL TIME MUSIC VISUALIZATION: A STUDY IN THE VISUAL EXTENSION OF MUSIC A Thesis Presented in Partial Fulfillment of the Requirements for the Degree Master of Fine Arts in the Graduate School of The Ohio State University By Matthew Neil Bain, B.F.A. The Ohio State University 2008 Master’s Examination Committee: Approved by Professor Alan Price, Advisor Professor Maria Palazzi ____________________________________ Dr. Gregory Proctor Advisor Graduate program in the department of Industrial, Interior, and Visual Communication Design Copyright by Matthew Neil Bain 2008 ABSTRACT This work documents a design-led process of discovery for artistic development of real time 3D animations functioning as a visual extension to a live music performance. Musical dynamics, patterns, and themes are transposed into a visual form that develops over time through a carefully orchestrated process driven by the artist and the computer. Historical animations by Fischinger, Whitney, and “light organ” projections by Wilfred inform the work’s conceptual development. Various systems automate camera controls, audio analysis, and layers of motion in addition to providing the artist with a unique set of controls that demonstrate the effectiveness of the computer as a visual instrument for the artist. The complete system balances artistic responses, live multi-channel audio input, and computer control systems to orchestrate a real time visualization of a live music performance. The artist’s sensibilities and the computer’s generative capabilities combine to create a visually-focused member in the performing music ensemble. ii Dedicated to my Grandad iii ACKNOWLEDGMENTS I would like to thank my advisor, Alan Price, for his guidance, thought provoking conversations, and lunches. -

Active Listening Listening Bingo Games P

Active Listening Listening Bingo Games p. 36 Ann Bryant introduces Music is everywhere! Listen… MEET THE CLASSICS with Movement, Props, & Activities Classical Music Through Stories more Children’s MY FIRST CLASSICAL MUSIC BOOK Classics pp. 86-89 by Genevieve Helsby & Jason Chapman. An all-school Classical music is everywhere—even in space! program of Read about TV/cinema and listen to Harry classical Potter film music, dance the Can-Can of music Offenbach, attend a Mozart concert, watch in 3 min. theatre with Peer Gynt. Discover the compos- Dan Fee Denise Gagne a day! ers and their milieu—Mozart & opera, Bach All 5 Sets Set of 5 & organ, John Adams & program music, and 7147 $122 more. Meet the instruments and hear music LISTENING FUN WITH MORE LISTENING FUN Books & CDs that features each. A fun and intriguing over- SCARVES AND TENNIS with Parachutes, Paper 9727 $59 view of classical music in cartoon style with a BALLS: Rhythmically Plates, Ribbons, and Scarves LISTENING RESOURCE KITS by Denise Gagne. 28-track, 69 min. CD. HB & CD 7237 $12.99 Expressive Movements to by Dan Fee and Denise Gagné. These innovative kits open the door for an Classical Music by Dan Fee. Get kids moving to classical active music listening program for the whole Ann Bryant’s books and CDs integrate music, art and literature for My First Orchestra Book Introduce classical works of music, with parachutes, scarves, school in about 3 min. a day, even without a ages 6-10. Each read-aloud story coordinates with a piece of classical HB & CD 7240 $12.99 p. -

Creative Musical Instrument Design

CREATIVE MUSICAL INSTRUMENT DESIGN: A report on experimental approaches, unusual creations and new concepts in the world of musical and sound instruments. A thesis submitted to the SAE Institute, London, in fulfillment of the requirements for the degree in Recording Arts, awarded by Middlesex University. Author: Andrea Santini Student: 42792 Intake: RAD0503X Project Tutors: Christopher Hayne Darren Gash London, August 2004 Abstract The following document presents the results of an investigation into the current reality of creative musical-instrument and sound-instrument design. The focus of this research is on acoustic and electro-acoustic devices only; sound sources involving oscillators, synthesis and sampling, be it analogue or digital, have therefore been excluded. Also, even though occasional reference will be made to historical and ‘ethnical’ instruments, they will not be treated as a core issue, the attention being primarily centered on contemporary creations. The study includes an overview of the most relevant “sonic creations” encountered in the research and chosen as representative examples to discuss the following aspects: Interaction between body and instrument; Sonic Space; Tuning and layout of pitches; Shapes, materials and elements; Sonic objects, noise and inharmonic sources; Aesthetics: sound instruments as art objects; Amplification and transducer technologies. These were chosen to provide some degree of methodology during the research process and a coherent framework to the analysis of a subject which, due to its nature and to the scarcity of relevant studies, has unclear boundaries and a variety of possible interdisciplinary connections. -

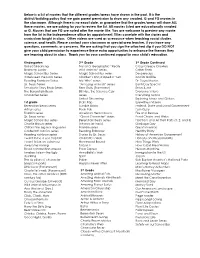

Below Is a List of Movies That the Different Grades/Areas Have Shown in the Past

Below is a list of movies that the different grades/areas have shown in the past. It is the district/building policy that we gain parent permission to show any unrated, G and PG movies in the classroom. Although there is no exact date, or guarantee that the grades/areas will show ALL these movies, we are asking for you to review the list. All movies listed are educationally unrated or G. Movies that are PG are noted after the movie title. You are welcome to preview any movie from the list in the Independence office by appointment. Titles correlate with the stories and curriculum taught in class. Other videos are used as a resource when teaching social studies, science, and health. Please contact your classroom or special area teacher, if you have any questions, comments, or concerns. We are asking that you sign the attached slip if you DO NOT give your child permission to experience these extra opportunities to enhance the themes they are learning about in class. Thank you for your continued support in your child’s education. Kindergarten 2nd Grade 3rd Grade Continued United Streaming National Geographic “Really Crazy Creepy Crawlers Brainpop Junior Wild Animals” series. Critter Rock Magic School Bus Series Magic School Bus series Despereaux In Between the Lions Series Children’s Encyclopedia “Tell Doctor Dolittle Reading Rainbow Series Me Why” series Dr. Seuss Movies Dr. Seuss Series “Amazing Animals” series Earth Day Special Scholastic Story Book Series Fern Gully (Rainforest) Econ & Me The Berenstain Bears Bill Nye, the Science Guy Everyone’s Hero Character Series series Everything Grows United Streaming Exploring Maps and Globes 1st grade Brain Pop Eyewitness Videos Berenstain bears series Tumble Books Federal, State and Local Government Arthur series Book Pals Fern Gully Franklin series American Hero Classics Fire and Rescue Dr. -

Fomrhi Quarterly

Quarterly No. 136, December 2016 FoMRHI Quarterly BULLETIN 136 Christopher Goodwin 2 COMMUNICATIONS 2060 Making woodwind instruments 7: Drilling long holes in woodwind instruments Jan Bouterse 6 2061 Making woodwind instruments 8: Reamers for woodwind instruments Jan Bouterse 15 2062 Il Calascionaro di Pratola Peligna John Downing 26 2063 Reverse engineering the Dean Castle Colascione – or Tiorba? John Downing 28 2064 Building the Urbino Lira Jacob Mariani 35 The next issue, Quarterly 137, will appear in April 2017. Please send in Comms and announcements to the address below, to arrive by April 1st. Fellowship of Makers and Researchers of Historical Instruments Committee: Andrew Atkinson, Peter Crossley, John Downing, Luke Emmet, Peter Forrester, Eleanor Froelich, Martyn Hodgson, Jim Lynham, Jeremy Montagu, Filadelfio Puglisi, Michael Roche, Alexander Rosenblatt, Marco Tiella, Secretary/Quarterly Editor: Christopher Goodwin Treasurer: John Reeve Webmaster: Luke Emmet Southside Cottage, Brook Hill, Albury, Guildford GU5 9DJ, Great Britain Tel: (++44)/(0)1483 202159 E-mail: [email protected] Web: www.fomrhi.org BULLETIN 136 Christopher Goodwin This is the fourth and final Q of this subscription period (nos. 133-136). It is just a few weeks later than planned, partly owing to computer problems, but it does mean that we have produced four issues in a calendar year as we should. Once again in this issue we have a nice balance between bowed, plucked and blown instruments, and between practical and speculative; many thanks to regular contributors Jan Bouterse and John Downing, and new contributor Jacob Mariani. Those who have not already paid for their 2017 subscriptions will find a membership form herewith inviting them to do so. -

Jeremy Montagu the Conch P.1 of 9 the Conch Its Use and Its

The Conch Its Use and its Substitutes from Prehistory to the Present Day Jeremy Montagu Of all the prehistoric trumpets, perhaps the first, certainly the most widespread in use, with some interesting gaps in its distribution that we shall come to later, and perhaps often the most important trumpet, has been the conch, a trumpet made from the shell of a marine gastropod. This use of such shells goes back to remote antiquity, and the conch is certainly one of mankind’s earliest trumpets. It is far easier to knock the tip off the spire of a shell than to remove the point of a cow horn, or of a goat horn, or of an elephant tusk. It is easier still to knock, chip, or drill a hole in the side of the shell, but we have no evidence for side-blown shells outside of Oceania and a small part of East Africa. There is one shell trumpet known from the Upper Palaeolithic period and many from the Neolithic in various parts of Europe, some of them hundreds of kilometres from the sea. Thus these conches are far earlier than Tut-ankh-amūn’s trumpets [i], which must date from somewhere before 1343 BCE, the date of the Pharaoh’s death [ii], and are also some twelve hundred years earlier than the Danish lurs, which date from around 800 BCE and are otherwise the oldest surviving European horns [iii]. Since many of these shells were found in graves, and at other ritual sites, it has been presumed that they were of some importance, and that they were probably used as ritual instruments. -

Autism, New Music Technologies and Cognition

Autism, New Music Technologies and Cognition by Adam Boulanger B.A. Music Therapy Berklee College of Music (2004) Submitted to the Program in Media Arts and Sciences, School of Architecture and Planning, in partial fulfillment of the requirements for the degree of Master of Science in Media Arts and Sciences at the MASSACHUSETTS INSTITUTE OF TECHNOLOGY September, 2006 © Massachusetts Institute of Technology 2006. All rights reserved. Author: Program in Media Arts and Sciences, August 16, 2006 Certified by: Tod Machover, Professor of Music and Media, Thesis Supervisor Accepted by: Andrew Lipp Chairman, Department Committee on Graduate Students Autism, New Music Technologies and Cognition by Adam Boulanger Submitted to the Program in Media Arts and Sciences, School of Architecture and Planning, on August 16, 2006, in partial fulfillment of the requirements for the degree of Master of Science in Media Arts and Sciences Abstract Central coherence accounts of autism have shown dysfunction in the processing of local versus global information that may be the source of symptoms in social behavior, communication and repetitive behavior. An application was developed to measure cognitive abilities in central coherence tasks as part of a music composition task. The application was evaluated in collaboration with the Spotlight Program, an interdisciplinary social pragmatics program for children with Asperger's syndrome. This research indicates that it is possible to embed cognitive measure as part of a novel music application. Implications for current treatment interventions, and longitudinal experimentation designs are presented.
Thesis supervisor: Tod Machover Title: Professor of Music and Media Thesis Committee Thesis supervisor.......................... -

@Home Learning Packet Covers

APRIL 2020 *DIGITAL EDITION* ELEMENTARY MUSIC @HOME LEARNING PATH #TITAN UP YOUR BRAIN LORAIN! www.lorainschools.org WEEK 4 | April 6 - April 9 PRINTABLE ACTIVITIES Below are resources that are for multiple grade levels. If you have any questions please reach out to your Elementary music teacher so that we can aid you along! Name ________________ SQUILT Class ________________ Listen to Camille Saint-Saëns’s The Carnival of the Animals, Movement VIII. What animals do you hear? ______________________________________ What elements of the music made you think of that animal? ___________________________________________________________________________________________________________________ ___________________________________________________________________________________________________________________ Draw below what you imagine happening in this movement of The Carnival of the Animals. SillyOMusic © The Carnival of the Animals VIII. Characters with Long Ears by Camille Saint-Saëns Listen and color. Small details may be colored with any color. Sun & Sky yellow & orange = violins blue & purple = tubas orange & red = flutes Rabbits gray = tempo played freely brown = tempo is steady white = very slow Ground green = 1 instrument brown = 2 instruments gray = 20 instruments Hills gray = loud brown = soft green = loud & soft Trampoline blue & gray = short notes orange & white = long notes purple & blue = long & short notes Name: © SillyOMusic Name ________________ Musical Egg Decorating Class ________________ Decorate the egg with eighth notes. Decorate the egg with quarter notes. Decorate the egg with quarter rests. Decorate the egg with half notes. Decorate the egg with dotted half notes. Decorate the egg with whole notes. © SillyOMusic Name ________________ Rabbit Chant Class ________________ Fill-in the rhythm of the words below with a , , or . Mister Rabbit hops, with a basket full of treats. Then he hides them in the grass for kids to find and eat! 
SillyOMusic © Name ________________ Rhythm Hop: Class ________________ The rabbit is jumping over notes.