Performance Assessment of Automated Feature Extraction Tools on High Resolution Imagery

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

ArcGIS Online Will Change How You Think About Mapping and GIS Continued from Cover

ArcNews Esri | Summer 2012 | Vol. 34, No. 2

Using Web Maps to Tell Your Story. For centuries, maps have been telling stories, chronicling discovery and conquest, documenting an understanding of the patterns and interrelationships that underlie human and natural systems. But only in the past few years have new technologies and new media vastly expanded the potential of maps to weave narratives. Maps are now interactive. They enable and reflect data analysis; they’re constantly updated; and they’re enriched with multimedia content. Maps are newly supercharged by digital technologies: GIS, the web, the cloud, and mobile communications. Now, maps can take users from globe to street corner in seconds; they can dynamically show change over time; they can organize and present charts, graphs, photos, and video. With the swipe of a fingertip across a tablet, map users can compare one theme with another, ask questions of maps, add their own information to maps, and cast votes on maps. (continued on page 22)

Strengthening the GIS Profession. David DiBiase, Director of Education, Industry Solutions, Esri. Is GIS a profession? If so, what’s its relationship to other professions in the geospatial field? How can you tell if someone who calls herself a GIS professional, or a GIS educator for that matter, knows what she’s doing? You might be surprised to learn that these are contentious questions in the United States and other parts of the world. They’re contentious because the demand for GIS work (continued on page 4)

ArcGIS Online Will Change How You Think About Mapping and GIS. This month, Esri officially released ArcGIS Online for organizations. -

David Johnson CORE SKILLS EDUCATION WORK EXPERIENCE

David Johnson 1234 Any Street Sunflower, MS 32410 662-451-1547 [email protected] CORE SKILLS Remote Sensing • Map Reading and Data Modeling • Data Base Management • Imagery Identification Proficient in RemoteView Pro, GeoRover and Google Earth Applications EDUCATION 20xx Delta State University Cleveland, MS Bachelor of Science Major: Geospatial Information Technology 20xx National Geospatial Intelligence Training Facility Roanoke, VA Geospatial Digital Data Users Certification WORK EXPERIENCE 20xx-Present BAE Systems Jackson, MS Geospatial Analyst Provide analysis and support and damage assessment regarding counter intelligence Conduct research and evaluation of raw and infused information to support mission objectives Create detailed visual intelligence products and reports for briefing to meet established intelligence requirements 20xx-20xx Delta State University Cleveland, MS Geospatial Information Technology Intern Provided assistance with MEMA (Mississippi Emergency Management Agency) with transfer of imagery Created a draft plan and needs assessment for Mid-Delta Storm Recovery, Inc. Assisted with FEMA (Federal Emergency Management Agency), Southern Region regarding hurricane season modules Processed and analyzed Google Earth data regarding predicted winter storm models and patterns 20xx-20xx WDAV Jackson, MS Meteorology Intern Researched and reviewed weather patterns and trends Wrote weather reports for on-air talent Featured as emergency on-air talent during tornado outbreak of April 20xx HONORS AND ACTIVITIES President’s List John R. Winston College of Arts Sciences Memorial Scholarship Geospatial Technology Society Southern Geospatial Technology Association REFERENCES Mr. Kevin L. Shelton Managing Programmer BAE Systems Jackson, MS 38733 (601) 826-0000 [email protected] Mr. -

23 – 24 September 2015 | The Cable Center Denver, Colorado

Abstract Book 23 – 24 September 2015 | The Cable Center Denver, Colorado This document contains the abstracts for the presentations contributed at the 28th Annual GIS in the Rockies Conference, September 23-24, 2015 at the Cable Center in Denver, Colorado. The presentation files will be gathered and uploaded to slideshare.com for viewing from the GIS in the Rockies website. Kerry Shakarjian and Mike Johnsen, 2015 GIS in the Rockies Program Chairs. 2015 GIS in the Rockies Today’s Vision: Tomorrow’s Reality.

Table of Contents: Workshops; Esri Hands-on Learning Lab (HOLL); Poster Presentations; UAVs, The Photogrammetric Challenges; From the Plains to the Mountains: GIS Colorado Beyond the Horizon; Surveying: Past, Present, and Future; GIS Analysis: A Map for Local and Regional Sustainability; Free and Open Source Software for Geospatial Applications (FOSS4G) Advantages and Challenges; GIS in Government -

2016 Issue 3

» 2016 USGIF MEMBERSHIP DIRECTORY » A LOOK INSIDE IARPA » GEOINT 2016 RECAPS 2016 ISSUE 3 THE OFFICIAL MAGAZINE OF THE UNITED STATES GEOSPATIAL INTELLIGENCE FOUNDATION MACHINE LEARNING, BIG UNDERSTANDING: HOW WILL THE IC HARNESS ADVANCEMENTS IN ARTIFICIAL INTELLIGENCE? 2016 USGIF MEMBERSHIP DIRECTORY (SEE BACK COVER)

MANAGE YOUR DATA. DISCOVER ANSWERS. MAKE DECISIONS. Analysts in today’s world are expected to have the right information at their fingertips to answer urgent questions at a moment’s notice. Jagwire™ allows analysts to spend less time searching for the right data and more time providing answers for time-critical operations. harrisgeospatial.com/trajectory ©2016 Exelis Visual Information Solutions, Inc., a subsidiary of Harris Corporation. All rights reserved. All other marks are the property of their respective owners. Image courtesy of DoD. Use of DoD images does not constitute or imply endorsement.

CONTENTS 2016 ISSUE 3

VANTAGE POINT: Member feedback drives USGIF’s marketing & communications team. INTSIDER: NGA Director Cardillo on a new age of GEOINT; IGAPP Grand Challenge winner awarded; breaking ISIL’s brand; DARPA pushes boundaries of space technology. IN MOTION: Lundahl-Finnie Award and USGIF Awards winners announced; USGIF launches Universal GEOINT Certification program; USGIF accredits James Madison University. ELEVATE: NOVA Information Management School at the Universidade de Lisboa is the first international GEOINT program accredited by USGIF. MEMBERSHIP PULSE: Textron Systems Geospatial Solutions evolves proactively to anticipate new trends. HORIZONS: Reading List; Peer Intel;

Features. MACHINE LEARNING, BIG UNDERSTANDING: How will the IC harness advancements in artificial intelligence? By Melanie D.G. Kaplan. QUANTUM LEAPS: The Intelligence Advanced Research Projects Activity tackles the IC’s most vexing problems. By Jim Hodges.

SPECIAL SECTION: 2016 USGIF MEMBERSHIP DIRECTORY. Flip the magazine to the back cover to view -

(MSGIST) 2014 Resume Book

Master of Science of Geographic Information Systems Technology (MSGIST) 2014 Resume Book University of Arizona Geographic Information Systems Technology (GIST) School of Geography and Development University of Arizona • P.O. Box 210076 • Tucson, AZ 85721 • uagist.arizona.edu Select 2014 MSGIST Students Jessica Styron Abrahams Leanndra Arechederra‐Romero William Beaver Shane Clark Cassie Fausel Gwynn Harlowe Pankaj Jamwal Jessica Fraver Michael Levengood Manny Lizarraga Brett Stauffer Jamie Velkoverh Nathan Wasserman RESUMES Jessica Styron Abrahams (formerly Jessica Alicia Styron) 2002 East River Road, Apt. S10 Tucson, AZ 85718 Mobile: (925) 451‐6289 Qualifications 01/2014 – 12/2014 University of Arizona, Tucson, Arizona. Qualification Masters of Science in GIS Technology (expected 12/2014) 08/2009 – 12/2001 University of Arizona, Tucson, Arizona. Qualification Masters of Science in Planning 04/2002 – 07/2003 Chapman University (Concord Campus), Orange, California. Qualification: Single Subject Credential in French with Supplementary Authorization in Introductory English 08/1997 – 12/2001 University of California at Berkeley, Berkeley, California. Qualification: Bachelors of Arts in French and in Comparative Literature. Between August 1999 – June 2000, Participated in the Education Abroad Program in Grenoble, France and attended the Université de Grenoble III, Stendhal. I graduated with a GPA of 3.44. Employment 03/2014 – 12/2014 BAIR Analytics, Inc. Highlands Ranch, Colorado. Intern. Main duties: Extracted and transformed vector shapefiles in GIS to create topographical maps. 03/2011 – 08/2011 City and County of San Francisco, Recreation and Parks Department, Planning Division, San Francisco, California. Student Design Trainee 1 Main duties: Reviewed and responded to CEQA and NEPA documents for projects impacting Recreation & Parks Department (RPD) facilities; Researched and summarized best management practices; Tracked and summarized U.S. -

ENVI EX User's Guide

ENVI EX User’s Guide ENVI EX December, 2009 Edition Copyright © ITT Visual Information Solutions All Rights Reserved 20EZX101DOC Legal The IDL®, IDL Advanced Math and Stats™, ENVI®, ENVI Zoom™, and ENVI® EX software programs and the accompanying procedures, functions, and documentation described herein are sold under license agreement. Their use, duplication, and disclosure are subject to the restrictions stated in the license agreement. ITT Visual Information Solutions reserves the right to make changes to this document at any time and without notice. Limitation of Warranty ITT Visual Information Solutions makes no warranties, either express or implied, as to any matter not expressly set forth in the license agreement, including without limitation the condition of the software, merchantability, or fitness for any particular purpose. ITT Visual Information Solutions shall not be liable for any direct, consequential, or other damages suffered by the Licensee or any others resulting from use of the software packages or their documentation. Permission to Reproduce this Manual If you are a licensed user of these products, ITT Visual Information Solutions grants you a limited, nontransferable license to reproduce this particular document provided such copies are for your use only and are not sold or distributed to third parties. All such copies must contain the title page and this notice page in their entirety. Export Control Information The software and associated documentation are subject to U.S. export controls including the United States Export Administration Regulations. The recipient is responsible for ensuring compliance with all applicable U.S. export control laws and regulations. These laws include restrictions on destinations, end users, and end use. -

ENVI 5 Functional Summary

ENVI 5 Functional Summary Operating Systems • ALSAT-1 • ERDAS IMAGINE (.img) • ASTER • ESRI Geodatabase Raster Dataset: Windows XP SP2 (Intel/AMD 32- & 64-bit) • ATSR • Personal (.mdb) Windows Vista (Intel/AMD 32- & 64-bit) • AVHRR • File (.gdb) Windows 7 (Intel/AMD 32- & 64-bit) • CARTOSAT-1 • Enterprise (.sde) Linux Kernel 2.6.x (Intel/AMD 64-bit) • DMSP (NOAA) • ESRI GRID Mac OS X 10.6 (Intel 64-bit) • ENVISAT AATSR • GeoJP2 / GeoJ2K Mac OS X 10.7 (Intel 64-bit) • ENVISAT MERIS • GeoTIFF • EROS • HDF4 Data Import • FORMOSAT-2 • JPEG • GeoEye-1 • JPEG2000 Raster Formats: • IKONOS • NITF 2.0, 2.1 (with license) • ADRG • IRS • PICT • ArcView Raster BIL • KOMPSAT-2 • PCI PIX • ASCII (x,y,z & gridded) • Landsat MSS, TM, ETM+ • PNG • BigTIFF • MAS-50 • SRF • Binary (flat) • MASTER • TIFF • BMP • MISR • XWD • CADRG • MODIS Vector Formats: • CIB • NigeriaSat-1 • AutoCAD DXF • DPPDB (with license) • OrbView-3 • ENVI EVF • DTED (levels 0, 1, 2) • RapidEye • ESRI Geodatabase Feature Class: • ECW • SeaWiFS • Personal (.mdb) • ENVI Raster • QuickBird • File (.gdb) • ENVI Gzip Compressed • SPOT • Enterprise (.sde) • ER Mapper ERS • TIMS • ESRI Shapefile • ERDAS IMAGINE (.img, .ige, .lan) • UK-DMCSat-1 Other Formats: • ESRI Geodatabase Raster Dataset: • WorldView-1 • Direct To Printer • Personal (.mdb) • WorldView-2 • Google Earth KML • File (.gdb) Hyperspectral Sensors: • MPEG • Enterprise (.sde) • AISA • PostScript • ESRI GRID • ARTEMIS (with license) • VRML • GeoJP2 / GeoJ2K • AVIRIS Chip Display To: • GeoTIFF • CASI • ArcMap • HDF4 • HyMap • -

Getting Started in ENVI

Getting Started with ENVI ENVI Versions 4.7 and 4.7 SP1 December, 2009 Edition Copyright © ITT Visual Information Solutions All Rights Reserved 20GST471DOC Restricted Rights Notice The IDL®, IDL Advanced Math and Stats™, ENVI®, ENVI Zoom™, and ENVI® EX software programs and the accompanying procedures, functions, and documentation described herein are sold under license agreement. Their use, duplication, and disclosure are subject to the restrictions stated in the license agreement. ITT Visual Information Solutions reserves the right to make changes to this document at any time and without notice. Limitation of Warranty ITT Visual Information Solutions makes no warranties, either express or implied, as to any matter not expressly set forth in the license agreement, including without limitation the condition of the software, merchantability, or fitness for any particular purpose. ITT Visual Information Solutions shall not be liable for any direct, consequential, or other damages suffered by the Licensee or any others resulting from use of the software packages or their documentation. Permission to Reproduce this Manual If you are a licensed user of these products, ITT Visual Information Solutions grants you a limited, nontransferable license to reproduce this particular document provided such copies are for your use only and are not sold or distributed to third parties. All such copies must contain the title page and this notice page in their entirety. Export Control Information This software and associated documentation are subject to U.S. export controls including the United States Export Administration Regulations. The recipient is responsible for ensuring compliance with all applicable U.S. export control laws and regulations. -

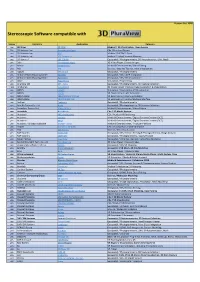

Stereo Software List

Version: Oct. 2020 Stereoscopic Software compatible with Stereo Company Application Category yes 3D Slicer 3D Slicer Medical / 3D Visualization, Open Source Yes 3D Systems, Inc. Geomagic Freeform CAx / Freeform Design yes 3D Systems, Inc. D2P Medical / DICOM-2-Print yes 3D Systems, Inc. VSP Technology Medical / Virtual Surgical Planning yes 3DFlow s.r.l. 3DF ZEPHYR Geospatial / Photogrammetry, 3D Reconstruction, UAV, Mesh yes 3dtv Stereoscopic Player 3D Video Player / Stereo Images yes Adobe Premiere Pro Media & Entertainment / Video Editing yes AGI STK Science / Satellite Tool Kit, Orbit Visualization yes Agisoft Metashape Geospatial / Photogrammetry yes AirborneHydroMapping GmbH KomVISH Geospatial / GIS, LiDAR Integration yes AirborneHydroMapping GmbH HydroVISH Geospatial / GIS, SDK Visualization yes Altair HyperWorks Simulation / Engineering yes Analytica Ltd DPS - Delta Geospatial / Photogrammetry, 3D Feature Collection FULL Anchorlab StereoBlend 3D Image Viewer / Stereo Image Generation & Visualization yes ANSYS EnSight Simulation / Engineering, VR Visualization yes ANSYS SPEOS VR Engineering / Light Simulation yes ANSYS Optis VREXPERIENCE SCANeR VR Engineering / Driving Simulation yes ANSYS Optis VRXPERIENCE HMI VR Engineering / Human Machine Interface yes Aplitop TcpStereo Geospatial / Photogrammetry yes Asia Air Survey Co., Ltd. Azuka Geospatial / Photogrammetry, 3D Feature Collection yes Assimilate Europe Ltd. SCRATCH Vs. 9 Media & Entertainment / Video Editing yes Autodesk NavisWorks CAx / 3D Model Review yes Autodesk VRED Professional -

Geobia: A Bridge Between Remote Sensing and GIS

Geobia: A Bridge Between Remote Sensing and GIS. Darryl Murdock, Ph.D., ESRI

Problem Statement • How do we take daily multi-terabyte acquisitions of remote sensing earth observation (EO) data (and their increasingly vast archives) and turn it into useful geo-information products?

Issues • Remote Sensing (RS) promise still unfulfilled – Geographic Information Systems (GIS) users are data starved – RS data volumes are growing exponentially • AFE results must be quick and readily ingested into a geodatabase • Data Models and Metadata Standardization are needed

Role of Remote Sensing • Surrogate for phenomenologies • RS data is just that – data, NOT information • Information is derived from analysis – Immediate viewing and interpretation – Image processing – Digitization (old school – or is it?)

Role of GIS • Provides a unifying SOA environment for users to analyze, visualize, and predict. • For some activities, such as natural disaster response, time-to-analysis is the most critical component. GIS allows fast multi-source integration and analysis • Thus, for time-sensitive activities the true power of GIS is realized by combining real-time or near real-time EO data, feature extracted vectors and other existing data. GEOBIA can be the connection between RS and GIS by providing additional timely content

ArcGIS Supports All Spatial Data Types (figure labels: In Relational Tables & Files; Vectors, Topology, Dimensions, Surveys, Networks, Images, Annotation, CAD Drawings, Terrain, 3D Objects, Addresses, Attributes; With Rules That Define Relationships and Behavior)

Geoprocessing Models: “Best Practices” Can Be Scripted (figure labels: ArcGIS Supports All Spatial Data Types, with GEOBIA marked on the same diagram) -

Geospatial Imagery Analyst

Geospatial Imagery Analyst Req ID: SB_GIA_01 – SB_GIA_03 Position Title: Geospatial Imagery Analyst Classification Requirement: TS/SCI with CI polygraph Position Description: Geospatial Analysts play an integral part in providing information to our customers. Geospatial Analysts use overhead and aerial imagery, geospatial data, full motion video, and other electronic monitoring to collect and analyze information. Imagery Analysts are primarily responsible for supervising and analyzing overhead and aerial imagery developed by photographic and electronic means. Duties performed by Geospatial Analysts include using principles and techniques of photogrammetry and employing electronic, mechanical, and optical devices to obtain information from imagery. Obtains intelligence by studying and analyzing imagery products. Determines target coordinates for accurate location of geospatial analysis findings. Identifies adversary weapons, equipment and defenses and determines Order of Battle. Analyzes adversary installations and lines of communications. Conducts Battle Damage Assessment analysis. Prepares imagery analysis reports and products. Analyzes current intelligence holdings to identify gaps, and subsequent intelligence collection requirements. Considers adversary Order of Battle records in the development of collection tasks and assessment of enemy vulnerabilities and probable courses of action. Assists in the preparation of reports on captured adversary material. Drafts periodic and special intelligence reports, plans, and briefings. Because -

System Architecture Design Strategies Class Exercises

System Architecture Design Exercises August 1, 2011 Copyright©2011 Esri All rights reserved. Course version 10.0. Version release date February 2011. Printed in the United States of America. The information contained in this document is the exclusive property of Esri. This work is protected under United States copyright law and other international copyright treaties and conventions. No part of this work may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying and recording, or by any information storage or retrieval system, except as expressly permitted in writing by Esri. All requests should be sent to Attention: Contracts and Legal Services Manager, Esri, 380 New York Street, Redlands, CA 92373-8100 USA. EXPORT NOTICE: Use of these Materials is subject to U.S. export control laws and regulations including the U.S. Department of Commerce Export Administration Regulations (EAR). Diversion of these Materials contrary to U.S. law is prohibited. The information contained in this document is subject to change without notice. U. S. GOVERNMENT RESTRICTED/LIMITED RIGHTS Any software, documentation, and/or data delivered hereunder is subject to the terms of the License Agreement. The commercial license rights in the License Agreement strictly govern Licensee's use, reproduction, or disclosure of the software, data, and documentation. In no event shall the U.S. Government acquire greater than RESTRICTED/LIMITED RIGHTS. At a minimum, use, duplication, or disclosure by the U.S. Government is subject to restrictions as set forth in FAR §52.227-14 Alternates I, II, and III (DEC 2007); FAR §52.227-19(b) (DEC 2007) and/or FAR §12.211/12.212 (Commercial Technical Data/Computer Software); and DFARS §252.227-7015 (NOV 1995) (Technical Data) and/or DFARS §227.7202 (Computer Software), as applicable.