AMD GPU Hardware Basics

Load more

Recommended publications

-

GLSL 4.50 Spec

The OpenGL® Shading Language Language Version: 4.50 Document Revision: 7 09-May-2017 Editor: John Kessenich, Google Version 1.1 Authors: John Kessenich, Dave Baldwin, Randi Rost Copyright (c) 2008-2017 The Khronos Group Inc. All Rights Reserved. This specification is protected by copyright laws and contains material proprietary to the Khronos Group, Inc. It or any components may not be reproduced, republished, distributed, transmitted, displayed, broadcast, or otherwise exploited in any manner without the express prior written permission of Khronos Group. You may use this specification for implementing the functionality therein, without altering or removing any trademark, copyright or other notice from the specification, but the receipt or possession of this specification does not convey any rights to reproduce, disclose, or distribute its contents, or to manufacture, use, or sell anything that it may describe, in whole or in part. Khronos Group grants express permission to any current Promoter, Contributor or Adopter member of Khronos to copy and redistribute UNMODIFIED versions of this specification in any fashion, provided that NO CHARGE is made for the specification and the latest available update of the specification for any version of the API is used whenever possible. Such distributed specification may be reformatted AS LONG AS the contents of the specification are not changed in any way. The specification may be incorporated into a product that is sold as long as such product includes significant independent work developed by the seller. A link to the current version of this specification on the Khronos Group website should be included whenever possible with specification distributions. -

Small Form Factor 3D Graphics for Your Pc

VisionTek Part# 900701 PRODUCTIVITY SERIES: SMALL FORM FACTOR 3D GRAPHICS FOR YOUR PC The VisionTek Radeon R7 240SFF graphics card offers a perfect balance of performance, features, and affordability for the gamer seeking a complete solution. It offers support for the DIRECTX® 11.2 graphics standard and 4K Ultra HD for stunning 3D visual effects, realistic lighting, and lifelike imagery. Its Short Form Factor design enables it to fit into the latest Low Profile desktops and workstations, yet the R7 240SFF can be converted to a standard ATX design with the included tall bracket. With 2GB of DDR3 memory and award-winning Graphics Core Next (GCN) architecture, and DVI-D/HDMI outputs, the VisionTek Radeon R7 240SFF is big on features and light on your wallet. RADEON R7 240 SPECS • Graphics Engine: RADEON R7 240 • Video Memory: 2GB DDR3 • Memory Interface: 128bit • DirectX® Support: 11.2 • Bus Standard: PCI Express 3.0 • Core Speed: 780MHz • Memory Speed: 800MHz x2 • VGA Output: VGA* • DVI Output: SL DVI-D • HDMI Output: HDMI (Video/Audio) • UEFI Ready: Support SYSTEM REQUIREMENTS • PCI Express® based PC is required with one X16 lane graphics slot available on the motherboard. • 400W (or greater) power supply GCN Architecture: A new design for AMD’s unified graphics processing and compute cores that allows recommended. 500 Watt for AMD them to achieve higher utilization for improved performance and efficiency. CrossFire™ technology in dual mode. • Minimum 1GB of system memory. 4K Ultra HD Support: Experience what you’ve been missing even at 1080P! With support for 3840 x • Installation software requires CD-ROM 2160 output via the HDMI port, textures and other detail normally compressed for lower resolutions drive. -

First Person Shooting (FPS) Game

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056 Volume: 05 Issue: 04 | Apr-2018 www.irjet.net p-ISSN: 2395-0072 Thunder Force - First Person Shooting (FPS) Game Swati Nadkarni1, Panjab Mane2, Prathamesh Raikar3, Saurabh Sawant4, Prasad Sawant5, Nitesh Kuwalekar6 1 Head of Department, Department of Information Technology, Shah & Anchor Kutchhi Engineering College 2 Assistant Professor, Department of Information Technology, Shah & Anchor Kutchhi Engineering College 3,4,5,6 B.E. student, Department of Information Technology, Shah & Anchor Kutchhi Engineering College ----------------------------------------------------------------***----------------------------------------------------------------- Abstract— It has been found in researches that there is an have challenged hardware development, and multiplayer association between playing first-person shooter video games gaming has been integral. First-person shooters are a type of and having superior mental flexibility. It was found that three-dimensional shooter game featuring a first-person people playing such games require a significantly shorter point of view with which the player sees the action through reaction time for switching between complex tasks, mainly the eyes of the player character. They are unlike third- because when playing fps games they require to rapidly react person shooters in which the player can see (usually from to fast moving visuals by developing a more responsive mind behind) the character they are controlling. The primary set and to shift back and forth between different sub-duties. design element is combat, mainly involving firearms. First person-shooter games are also of ten categorized as being The successful design of the FPS game with correct distinct from light gun shooters, a similar genre with a first- direction, attractive graphics and models will give the best person perspective which uses light gun peripherals, in experience to play the game. 
-

ATI Radeon™ HD 4870 Computation Highlights

AMD Entering the Golden Age of Heterogeneous Computing Michael Mantor Senior GPU Compute Architect / Fellow AMD Graphics Product Group [email protected] 1 The 4 Pillars of massively parallel compute offload •Performance M’Moore’s Law Î 2x < 18 Month s Frequency\Power\Complexity Wall •Power Parallel Î Opportunity for growth •Price • Programming Models GPU is the first successful massively parallel COMMODITY architecture with a programming model that managgped to tame 1000’s of parallel threads in hardware to perform useful work efficiently 2 Quick recap of where we are – Perf, Power, Price ATI Radeon™ HD 4850 4x Performance/w and Performance/mm² in a year ATI Radeon™ X1800 XT ATI Radeon™ HD 3850 ATI Radeon™ HD 2900 XT ATI Radeon™ X1900 XTX ATI Radeon™ X1950 PRO 3 Source of GigaFLOPS per watt: maximum theoretical performance divided by maximum board power. Source of GigaFLOPS per $: maximum theoretical performance divided by price as reported on www.buy.com as of 9/24/08 ATI Radeon™HD 4850 Designed to Perform in Single Slot SP Compute Power 1.0 T-FLOPS DP Compute Power 200 G-FLOPS Core Clock Speed 625 Mhz Stream Processors 800 Memory Type GDDR3 Memory Capacity 512 MB Max Board Power 110W Memory Bandwidth 64 GB/Sec 4 ATI Radeon™HD 4870 First Graphics with GDDR5 SP Compute Power 1.2 T-FLOPS DP Compute Power 240 G-FLOPS Core Clock Speed 750 Mhz Stream Processors 800 Memory Type GDDR5 3.6Gbps Memory Capacity 512 MB Max Board Power 160 W Memory Bandwidth 115.2 GB/Sec 5 ATI Radeon™HD 4870 X2 Incredible Balance of Performance,,, Power, Price -

An Evolution of Mobile Graphics

AN EVOLUTION OF MOBILE GRAPHICS Michael C. Shebanow Vice President, Advanced Processor Lab Samsung Electronics July 20, 20131 DISCLAIMER • The views herein are my own • They do not represent Samsung’s vision nor product plans 2 • The Mobile Market • Review of GPU Tech • GPU Efficiency • User Experience • Tech Challenges • Summary 3 The Rise of the Mobile GPU & Connectivity A NEW WORLD COMING? 4 DISCRETE GPU MARKET Flattening 5 MOBILE GPU MARKET Smart • In 2012, an estimated 800+ Phones million mobile GPUs shipped “Phablets” • ~123M tablets • ~712M smart phones Tablets • Will easily exceed 1B in the coming years • Trend: • Discrete GPU relatively flat • Mobile is growing rapidly 6 WW INTERNET TRAFFIC • Source: Cisco VNI Mobile INET IP Traffic growth Traffic • Internet traffic growth Year (TB/sec) rate (TB/sec) rate is staggering 2005 0.9 0.00 2006 1.5 65% 0.00 • 2012 total traffic is 2007 2.5 61% 0.01 13.7 GB per person 2008 3.8 54% 0.01 per month 2009 5.6 45% 0.04 2010 7.8 40% 0.10 • 2012 smart phone 2011 10.6 36% 0.23 traffic at 2012 12.4 17% 0.34 0.342 GB per person per month • 2017 smart phone traffic expected at 2.7 GB per person per month 7 WHERE ARE WE HEADED?… • Enormous quantity of GPUs • Large amount of interconnectivity • Better I/O 8 GPU Pipelines A BRIEF REVIEW OF GPU TECH 9 MOBILE GPU PIPELINE ARCHITECTURES Tile-based immediate mode rendering IA VS CCV RS PS ROP (TBIMR) Tile-based deferred IA VS CCV scene rendering (TBDR) RS PS ROP IA = input assembler VS = vertex shader CCV = cull, clip, viewport transform RS = rasterization, -

Advanced Computer Graphics to Do Motivation Real-Time Rendering

To Do Advanced Computer Graphics § Assignment 2 due Feb 19 § Should already be well on way. CSE 190 [Winter 2016], Lecture 12 § Contact us for difficulties etc. Ravi Ramamoorthi http://www.cs.ucsd.edu/~ravir Motivation Real-Time Rendering § Today, create photorealistic computer graphics § Goal: interactive rendering. Critical in many apps § Complex geometry, lighting, materials, shadows § Games, visualization, computer-aided design, … § Computer-generated movies/special effects (difficult or impossible to tell real from rendered…) § Until 10-15 years ago, focus on complex geometry § CSE 168 images from rendering competition (2011) § § But algorithms are very slow (hours to days) Chasm between interactivity, realism Evolution of 3D graphics rendering Offline 3D Graphics Rendering Interactive 3D graphics pipeline as in OpenGL Ray tracing, radiosity, photon mapping § Earliest SGI machines (Clark 82) to today § High realism (global illum, shadows, refraction, lighting,..) § Most of focus on more geometry, texture mapping § But historically very slow techniques § Some tweaks for realism (shadow mapping, accum. buffer) “So, while you and your children’s children are waiting for ray tracing to take over the world, what do you do in the meantime?” Real-Time Rendering SGI Reality Engine 93 (Kurt Akeley) Pictures courtesy Henrik Wann Jensen 1 New Trend: Acquired Data 15 years ago § Image-Based Rendering: Real/precomputed images as input § High quality rendering: ray tracing, global illumination § Little change in CSE 168 syllabus, from 2003 to -

Penguin Computing Upgrades Corona with Latest AMD Radeon Instinct GPU Technology for Enhanced ML and AI Capabilities

Penguin Computing Upgrades Corona with latest AMD Radeon Instinct GPU Technology for Enhanced ML and AI Capabilities November 18, 2019 Fremont, CA., November 18, 2019 -Penguin Computing, a leader in high-performance computing (HPC), artificial intelligence (AI), and enterprise data center solutions and services, today announced that Corona, an HPC cluster first delivered to Lawrence Livermore National Lab (LLNL) in late 2018, has been upgraded with the newest AMD Radeon Instinct™ MI60 accelerators, based on Vega which, per AMD, is the World’s 1st 7nm GPU architecture that brings PCIe® 4.0 support. This upgrade is the latest example of Penguin Computing and LLNL’s ongoing collaboration aimed at providing additional capabilities to the LLNL user community. As previously released, the cluster consists of 170 two-socket nodes with 24-core AMD EPYCTM 7401 processors and a PCIe 1.6 Terabyte (TB) nonvolatile (solid-state) memory device. Each Corona compute node is GPU-ready with half of those nodes today utilizing four AMD Radeon Instinct MI25 accelerators per node, delivering 4.2 petaFLOPS of FP32 peak performance. With the MI60 upgrade, the cluster increases its potential PFLOPS peak performance to 9.45 petaFLOPS of FP32 peak performance. This brings significantly greater performance and AI capabilities to the research communities. “The Penguin Computing DOE team continues our collaborative venture with our vendor partners AMD and Mellanox to ensure the Livermore Corona GPU enhancements expand the capabilities to continue their mission outreach within various machine learning communities,” said Ken Gudenrath, Director of Federal Systems at Penguin Computing. Corona is being made available to industry through LLNL’s High Performance Computing Innovation Center (HPCIC). -

Developer Tools Showcase

Developer Tools Showcase Randy Fernando Developer Tools Product Manager NVISION 2008 Software Content Creation Performance Education Development FX Composer Shader PerfKit Conference Presentations Debugger mental mill PerfHUD Whitepapers Artist Edition Direct3D SDK PerfSDK GPU Programming Guide NVIDIA OpenGL SDK Shader Library GLExpert Videos CUDA SDK NV PIX Plug‐in Photoshop Plug‐ins Books Cg Toolkit gDEBugger GPU Gems 3 Texture Tools NVSG GPU Gems 2 Melody PhysX SDK ShaderPerf GPU Gems PhysX Plug‐Ins PhysX VRD PhysX Tools The Cg Tutorial NVIDIA FX Composer 2.5 The World’s Most Advanced Shader Authoring Environment DirectX 10 Support NVIDIA Shader Debugger Support ShaderPerf 2.0 Integration Visual Models & Styles Particle Systems Improved User Interface Particle Systems All-New Start Page 350Z Sample Project Visual Models & Styles Other Major Features Shader Creation Wizard Code Editor Quickly create common shaders Full editor with assisted Shader Library code generation Hundreds of samples Properties Panel Texture Viewer HDR Color Picker Materials Panel View, organize, and apply textures Even More Features Automatic Light Binding Complete Scripting Support Support for DirectX 10 (Geometry Shaders, Stream Out, Texture Arrays) Support for COLLADA, .FBX, .OBJ, .3DS, .X Extensible Plug‐in Architecture with SDK Customizable Layouts Semantic and Annotation Remapping Vertex Attribute Packing Remote Control Capability New Sample Projects 350Z Visual Styles Atmospheric Scattering DirectX 10 PCSS Soft Shadows Materials Post‐Processing Simple Shadows -

NVIDIA Quadro P4000

NVIDIA Quadro P4000 GP104 1792 112 64 8192 MB GDDR5 256 bit GRAPHICS PROCESSOR CORES TMUS ROPS MEMORY SIZE MEMORY TYPE BUS WIDTH The Quadro P4000 is a professional graphics card by NVIDIA, launched in February 2017. Built on the 16 nm process, and based on the GP104 graphics processor, the card supports DirectX 12.0. The GP104 graphics processor is a large chip with a die area of 314 mm² and 7,200 million transistors. Unlike the fully unlocked GeForce GTX 1080, which uses the same GPU but has all 2560 shaders enabled, NVIDIA has disabled some shading units on the Quadro P4000 to reach the product's target shader count. It features 1792 shading units, 112 texture mapping units and 64 ROPs. NVIDIA has placed 8,192 MB GDDR5 memory on the card, which are connected using a 256‐bit memory interface. The GPU is operating at a frequency of 1227 MHz, which can be boosted up to 1480 MHz, memory is running at 1502 MHz. We recommend the NVIDIA Quadro P4000 for gaming with highest details at resolutions up to, and including, 5760x1080. Being a single‐slot card, the NVIDIA Quadro P4000 draws power from 1x 6‐pin power connectors, with power draw rated at 105 W maximum. Display outputs include: 4x DisplayPort. Quadro P4000 is connected to the rest of the system using a PCIe 3.0 x16 interface. The card measures 241 mm in length, and features a single‐slot cooling solution. Graphics Processor Graphics Card GPU Name: GP104 Released: Feb 6th, 2017 Architecture: Pascal Production Active Status: Process Size: 16 nm Bus Interface: PCIe 3.0 x16 Transistors: 7,200 -

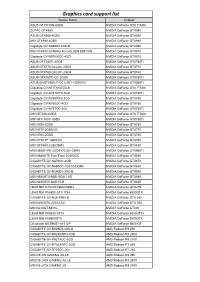

Graphics Card Support List

Graphics card support list Device Name Chipset ASUS GTXTITAN-6GD5 NVIDIA GeForce GTX TITAN ZOTAC GTX980 NVIDIA GeForce GTX980 ASUS GTX980-4GD5 NVIDIA GeForce GTX980 MSI GTX980-4GD5 NVIDIA GeForce GTX980 Gigabyte GV-N980D5-4GD-B NVIDIA GeForce GTX980 MSI GTX970 GAMING 4G GOLDEN EDITION NVIDIA GeForce GTX970 Gigabyte GV-N970IXOC-4GD NVIDIA GeForce GTX970 ASUS GTX780TI-3GD5 NVIDIA GeForce GTX780Ti ASUS GTX770-DC2OC-2GD5 NVIDIA GeForce GTX770 ASUS GTX760-DC2OC-2GD5 NVIDIA GeForce GTX760 ASUS GTX750TI-OC-2GD5 NVIDIA GeForce GTX750Ti ASUS ENGTX560-Ti-DCII/2D1-1GD5/1G NVIDIA GeForce GTX560Ti Gigabyte GV-NTITAN-6GD-B NVIDIA GeForce GTX TITAN Gigabyte GV-N78TWF3-3GD NVIDIA GeForce GTX780Ti Gigabyte GV-N780WF3-3GD NVIDIA GeForce GTX780 Gigabyte GV-N760OC-4GD NVIDIA GeForce GTX760 Gigabyte GV-N75TOC-2GI NVIDIA GeForce GTX750Ti MSI NTITAN-6GD5 NVIDIA GeForce GTX TITAN MSI GTX 780Ti 3GD5 NVIDIA GeForce GTX780Ti MSI N780-3GD5 NVIDIA GeForce GTX780 MSI N770-2GD5/OC NVIDIA GeForce GTX770 MSI N760-2GD5 NVIDIA GeForce GTX760 MSI N750 TF 1GD5/OC NVIDIA GeForce GTX750 MSI GTX680-2GB/DDR5 NVIDIA GeForce GTX680 MSI N660Ti-PE-2GD5-OC/2G-DDR5 NVIDIA GeForce GTX660Ti MSI N680GTX Twin Frozr 2GD5/OC NVIDIA GeForce GTX680 GIGABYTE GV-N670OC-2GD NVIDIA GeForce GTX670 GIGABYTE GV-N650OC-1GI/1G-DDR5 NVIDIA GeForce GTX650 GIGABYTE GV-N590D5-3GD-B NVIDIA GeForce GTX590 MSI N580GTX-M2D15D5/1.5G NVIDIA GeForce GTX580 MSI N465GTX-M2D1G-B NVIDIA GeForce GTX465 LEADTEK GTX275/896M-DDR3 NVIDIA GeForce GTX275 LEADTEK PX8800 GTX TDH NVIDIA GeForce 8800GTX GIGABYTE GV-N26-896H-B -

Real-Time Rendering Techniques with Hardware Tessellation

Volume 34 (2015), Number x pp. 0–24 COMPUTER GRAPHICS forum Real-time Rendering Techniques with Hardware Tessellation M. Nießner1 and B. Keinert2 and M. Fisher1 and M. Stamminger2 and C. Loop3 and H. Schäfer2 1Stanford University 2University of Erlangen-Nuremberg 3Microsoft Research Abstract Graphics hardware has been progressively optimized to render more triangles with increasingly flexible shading. For highly detailed geometry, interactive applications restricted themselves to performing transforms on fixed geometry, since they could not incur the cost required to generate and transfer smooth or displaced geometry to the GPU at render time. As a result of recent advances in graphics hardware, in particular the GPU tessellation unit, complex geometry can now be generated on-the-fly within the GPU’s rendering pipeline. This has enabled the generation and displacement of smooth parametric surfaces in real-time applications. However, many well- established approaches in offline rendering are not directly transferable due to the limited tessellation patterns or the parallel execution model of the tessellation stage. In this survey, we provide an overview of recent work and challenges in this topic by summarizing, discussing, and comparing methods for the rendering of smooth and highly-detailed surfaces in real-time. 1. Introduction Hardware tessellation has attained widespread use in computer games for displaying highly-detailed, possibly an- Graphics hardware originated with the goal of efficiently imated, objects. In the animation industry, where displaced rendering geometric surfaces. GPUs achieve high perfor- subdivision surfaces are the typical modeling and rendering mance by using a pipeline where large components are per- primitive, hardware tessellation has also been identified as a formed independently and in parallel. -

AMD Radeon E8860

Components for AMD’s Embedded Radeon™ E8860 GPU INTRODUCTION The E8860 Embedded Radeon GPU available from CoreAVI is comprised of temperature screened GPUs, safety certi- fiable OpenGL®-based drivers, and safety certifiable GPU tools which have been pre-integrated and validated together to significantly de-risk the challenges typically faced when integrating hardware and software components. The plat- form is an off-the-shelf foundation upon which safety certifiable applications can be built with confidence. Figure 1: CoreAVI Support for E8860 GPU EXTENDED TEMPERATURE RANGE CoreAVI provides extended temperature versions of the E8860 GPU to facilitate its use in rugged embedded applications. CoreAVI functionally tests the E8860 over -40C Tj to +105 Tj, increasing the manufacturing yield for hardware suppliers while reducing supply delays to end customers. coreavi.com [email protected] Revision - 13Nov2020 1 E8860 GPU LONG TERM SUPPLY AND SUPPORT CoreAVI has provided consistent and dedicated support for the supply and use of the AMD embedded GPUs within the rugged Mil/Aero/Avionics market segment for over a decade. With the E8860, CoreAVI will continue that focused support to ensure that the software, hardware and long-life support are provided to meet the needs of customers’ system life cy- cles. CoreAVI has extensive environmentally controlled storage facilities which are used to store the GPUs supplied to the Mil/ Aero/Avionics marketplace, ensuring that a ready supply is available for the duration of any program. CoreAVI also provides the post Last Time Buy storage of GPUs and is often able to provide additional quantities of com- ponents when COTS hardware partners receive increased volume for existing products / systems requiring additional inventory.