Automatic Sequences

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Balanced Ternary Arithmetic on the Abacus

What is balanced ternary? It is a ternary number system, so a number is built from powers of three rather than powers of ten. In ordinary ternary the desired number is made up by adding one or two times various powers of three; in balanced ternary some powers of three are added and others are subtracted. Each power of three comprising the number is represented by a +, a -, or a 0, to show that the power of three is added, subtracted, or not present in the number. No power of three is ever multiplied by anything larger in magnitude than +1 or -1, which greatly simplifies multiplication and division. Another advantage of balanced ternary is that all integers can be represented, both positive and negative, without the need for a separate symbol to indicate plus or minus. The most significant ternary digit (trit) of any positive balanced ternary number is + and the most significant trit of any negative number is -. Here is a list of a few numbers in decimal, ternary, and balanced ternary:

Decimal   Ternary   Balanced ternary
-------------------------------------------------
  -6        -20       - + 0    (-9+3)
  -5        -12       - + +    (-9+3+1)
  -4        -11       - -      (-3-1)
  -3        -10       - 0      etc.
  -2         -2       - +
  -1         -1       -
   0          0       0
   1          1       +
   2          2       + -
   3         10       + 0
   4         11       + +
   5         12       + - -
   6         20       + - 0

Notice that the negative of a balanced ternary number is formed simply by inverting all the + and - signs in the number. Thomas Fowler in England, about 1840, built a balanced ternary calculating machine capable of multiplying and dividing balanced ternary numbers.
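The rule described above (each power of three is added, subtracted, or absent) can be sketched in a few lines of Python. This is only an illustrative sketch, not the abacus procedure from the excerpt; the function names `to_balanced_ternary`, `from_balanced_ternary`, and `negate` are hypothetical and chosen here for the example.

```python
def to_balanced_ternary(n: int) -> str:
    """Write an integer with the trits '+', '0', '-' (most significant first)."""
    if n == 0:
        return "0"
    trits = []
    while n != 0:
        r = n % 3  # Python's % always yields 0, 1, or 2 here, even for negative n
        if r == 0:
            trits.append("0")
        elif r == 1:
            trits.append("+")  # this power of three is added
            n -= 1
        else:
            trits.append("-")  # remainder 2 is treated as -1 with a carry
            n += 1
        n //= 3
    return "".join(reversed(trits))


def from_balanced_ternary(s: str) -> int:
    """Evaluate a string of trits back to an integer."""
    value = 0
    for ch in s:
        value = value * 3 + {"+": 1, "0": 0, "-": -1}[ch]
    return value


def negate(s: str) -> str:
    """Negate by swapping every '+' and '-', as the excerpt notes."""
    return s.translate(str.maketrans("+-", "-+"))


if __name__ == "__main__":
    for n in range(-6, 7):
        print(n, to_balanced_ternary(n))
```

Running the loop prints the same balanced ternary column as the table, written without spaces between trits.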

VSI OpenVMS C Language Reference Manual

Document Number: DO-VIBHAA-008
Publication Date: May 2020
This document is the language reference manual for the VSI C language.
Revision Update Information: This is a new manual.
Operating System and Version: VSI OpenVMS I64 Version 8.4-1H1; VSI OpenVMS Alpha Version 8.4-2L1
Software Version: VSI C Version 7.4-1 for OpenVMS
VMS Software, Inc., (VSI), Bolton, Massachusetts, USA

C Language Reference Manual. Copyright © 2020 VMS Software, Inc. (VSI), Bolton, Massachusetts, USA

Legal Notice. Confidential computer software. Valid license from VSI required for possession, use or copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under vendor's standard commercial license. The information contained herein is subject to change without notice. The only warranties for VSI products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. VSI shall not be liable for technical or editorial errors or omissions contained herein. HPE, HPE Integrity, HPE Alpha, and HPE Proliant are trademarks or registered trademarks of Hewlett Packard Enterprise. Intel, Itanium and IA64 are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries. Java, the coffee cup logo, and all Java based marks are trademarks or registered trademarks of Oracle Corporation in the United States or other countries. Kerberos is a trademark of the Massachusetts Institute of Technology.

Basic Digit Sets for Radix Representation

Library, Technical Report Section, Naval Postgraduate School
NPS52-78-002
NAVAL POSTGRADUATE SCHOOL, Monterey, California
BASIC DIGIT SETS FOR RADIX REPRESENTATION
David W. Matula
June 1978
Approved for public release; distribution unlimited.
FEDDOCS D 208.14/2:NPS-52-78-002

NAVAL POSTGRADUATE SCHOOL, Monterey, California
Rear Admiral Tyler Dedman, Superintendent
Jack R. Borsting, Provost
The work reported herein was supported in part by the National Science Foundation under Grant GJ-36658 and by the Deutsche Forschungsgemeinschaft under Grant KU 155/5. Reproduction of all or part of this report is authorized. This report was prepared by:

REPORT DOCUMENTATION PAGE (Security classification of this page: Unclassified)
1. Report number: NPS52-78-002
4. Title (and subtitle): Basic Digit Sets for Radix Representation
5. Type of report and period covered: Final report
7. Author(s): David W. Matula
9. Performing organization name and address: Naval Postgraduate School, Monterey, CA 93940
11. Controlling office name and address: Naval Postgraduate School, Monterey, CA 93940
12. Report date: June 1978
13. Number of pages: 33
15. Security class (of this report): Unclassified
16. Distribution statement (of this report): Approved for public release; distribution unlimited.

Part I Number Representation

Part I Number Representation

Parts and chapters (Parts I through IV cover elementary operations):
I. Number Representation: 1. Numbers and Arithmetic; 2. Representing Signed Numbers; 3. Redundant Number Systems; 4. Residue Number Systems
II. Addition / Subtraction: 5. Basic Addition and Counting; 6. Carry-Lookahead Adders; 7. Variations in Fast Adders; 8. Multioperand Addition
III. Multiplication: 9. Basic Multiplication Schemes; 10. High-Radix Multipliers; 11. Tree and Array Multipliers; 12. Variations in Multipliers
IV. Division: 13. Basic Division Schemes; 14. High-Radix Dividers; 15. Variations in Dividers; 16. Division by Convergence
V. Real Arithmetic: 17. Floating-Point Representations; 18. Floating-Point Operations; 19. Errors and Error Control; 20. Precise and Certifiable Arithmetic
VI. Function Evaluation: 21. Square-Rooting Methods; 22. The CORDIC Algorithms; 23. Variations in Function Evaluation; 24. Arithmetic by Table Lookup
VII. Implementation Topics: 25. High-Throughput Arithmetic; 26. Low-Power Arithmetic; 27. Fault-Tolerant Arithmetic; 28. Reconfigurable Arithmetic
Appendix: Past, Present, and Future

Mar. 2015, Computer Arithmetic, Number Representation, Slide 1

About This Presentation
This presentation is intended to support the use of the textbook Computer Arithmetic: Algorithms and Hardware Designs (Oxford U. Press, 2nd ed., 2010, ISBN 978-0-19-532848-6). It is updated regularly by the author as part of his teaching of the graduate course ECE 252B, Computer Arithmetic, at the University of California, Santa Barbara. Instructors can use these slides freely in classroom teaching and for other educational purposes. Unauthorized uses are strictly prohibited. © Behrooz Parhami

Edition: First. Released: Jan. 2000. Revised: Sep. 2001, Sep. 2003, Sep. 2005, Apr. 2007, Apr. …

Array Set Addressing: Enabling Efficient Hexagonally Sampled Image Processing, by Nicholas I. Rummelt

ARRAY SET ADDRESSING: ENABLING EFFICIENT HEXAGONALLY SAMPLED IMAGE PROCESSING
By NICHOLAS I. RUMMELT
A DISSERTATION PRESENTED TO THE GRADUATE SCHOOL OF THE UNIVERSITY OF FLORIDA IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF DOCTOR OF PHILOSOPHY
UNIVERSITY OF FLORIDA, 2010
© 2010 Nicholas I. Rummelt

To my beautiful wife and our three wonderful children

ACKNOWLEDGMENTS
Thanks go out to my family for their support, understanding, and encouragement. I especially want to thank my advisor and committee chair, Joseph N. Wilson, for his keen insight, encouragement, and excellent guidance. I would also like to thank the other members of my committee: Paul Gader, Arunava Banerjee, Jeffery Ho, and Warren Dixon. I would like to thank the Air Force Research Laboratory (AFRL) for generously providing the opportunity, time, and funding. There are many people at AFRL who played some role in my success in this endeavor and to whom I owe a debt of gratitude. I would like to specifically thank T.J. Klausutis, Ric Wehling, James Moore, Buddy Goldsmith, Clark Furlong, David Gray, Paul McCarley, Jimmy Touma, Tony Thompson, Marta Fackler, Mike Miller, Rob Murphy, John Pletcher, and Bob Sierakowski.

TABLE OF CONTENTS
ACKNOWLEDGMENTS
LIST OF TABLES
LIST OF FIGURES
ABSTRACT
CHAPTER
1 INTRODUCTION AND BACKGROUND
1.1 Introduction
1.2 Background
1.3 Recent Related Research
1.4 Recent Related Academic Research
1.5 Hexagonal Image Formation and Display Considerations
1.5.1 Converting from Rectangularly Sampled Images
1.5.2 Hexagonal Imagers

A Combinatorial Approach to Binary Positional Number Systems

Acta Math. Hungar. DOI: 10.1007/s10474-013-0387-8
A COMBINATORIAL APPROACH TO BINARY POSITIONAL NUMBER SYSTEMS
A. VINCE
Department of Mathematics, University of Florida, Gainesville, FL, USA
e-mail: avince@ufl.edu
(Received June 14, 2013; revised August 13, 2013; accepted August 22, 2013)

Abstract. Although the representation of the real numbers in terms of a base and a set of digits has a long history, new questions arise even in the binary case – digits 0 and 1. A binary positional number system (binary radix system) with base equal to the golden ratio (1 + √5)/2 is fairly well known. The main result of this paper is a construction of infinitely many binary radix systems, each one constructed combinatorially from a single pair of binary strings. Every binary radix system that satisfies even a minimal set of conditions that would be expected of a positional number system can be constructed in this way.

1. Introduction
The terms positional number system, radix system, and β-expansion that appear in the literature all refer to the representation of real numbers in terms of a given base or radix B and a given finite set D of digits. Historically the base is 10 and the digit set is {0, 1, 2, ..., 9} or, in the binary case, the base is 2 and the digit set is {0, 1}. Alternative choices for the set of digits go back at least to Cauchy, who suggested the use of negative digits in base 10, for example D = {−4, −3, ..., 4, 5}. The balanced ternary system is a base 3 system with digit set D = {−1, 0, 1} discussed by Knuth in [10].

Introduction to Computer Data Representation

Peter Fenwick, The University of Auckland (Retired), New Zealand

Bentham Science Publishers, Executive Suite Y - 2, PO Box 7917, Saif Zone, Sharjah, U.A.E. [email protected]
Bentham Science Publishers, P.O. Box 446, Oak Park, IL 60301-0446, USA [email protected]
Bentham Science Publishers, P.O. Box 294, 1400 AG Bussum, THE NETHERLANDS [email protected]

Please read this license agreement carefully before using this eBook. Your use of this eBook/chapter constitutes your agreement to the terms and conditions set forth in this License Agreement. This work is protected under copyright by Bentham Science Publishers to grant the user of this eBook/chapter a non-exclusive, nontransferable license to download and use this eBook/chapter under the following terms and conditions:

1. This eBook/chapter may be downloaded and used by one user on one computer. The user may make one back-up copy of this publication to avoid losing it. The user may not give copies of this publication to others, or make it available for others to copy or download. For a multi-user license contact [email protected]
2. All rights reserved: All content in this publication is copyrighted and Bentham Science Publishers own the copyright. You may not copy, reproduce, modify, remove, delete, augment, add to, publish, transmit, sell, resell, create derivative works from, or in any way exploit any of this publication’s content, in any form by any means, in whole or in part, without the prior written permission from Bentham Science Publishers.
3. The user may print one or more copies/pages of this eBook/chapter for their personal use.

College of Basic Sciences & Humanities

SECTION X
FACULTY OF BASIC SCIENCES AND HUMANITIES

General Information
Disciplines
• Biochemistry
• Botany
• Business Studies
• Chemistry
• Economics and Sociology
• Journalism, Languages and Culture
• Mathematics, Statistics and Physics
• Microbiology
• Zoology
• Course curriculum for Award of 3-year B.Sc. Degree on opting out of 5-year integrated M.Sc. (Hons) Programme in Biochemistry
• Semester-wise Programme for 5-year Integrated M.Sc. (Hons) in Biochemistry
• Course curriculum for Award of 3-year B.Sc. Degree on opting out of 5-year integrated M.Sc. (Hons) Programme in Botany
• Semester-wise Programme for 5-year Integrated M.Sc. (Hons) in Botany
• Course curriculum for Award of 3-year B.Sc. Degree on opting out of 5-year integrated M.Sc. (Hons) Programme in Chemistry
• Semester-wise Programme for 5-year Integrated M.Sc. (Hons) in Chemistry
• Course curriculum for Award of 3-year B.Sc. Degree on opting out of 5-year integrated M.Sc. (Hons) Programme in Microbiology
• Semester-wise Programme for 5-year Integrated M.Sc. (Hons) in Microbiology
• Course curriculum for Award of 3-year B.Sc. Degree on opting out of 5-year integrated M.Sc. (Hons) Programme in Zoology
• Semester-wise Programme for 5-year Integrated M.Sc. (Hons) in Zoology

COLLEGE OF BASIC SCIENCES AND HUMANITIES
Basic Sciences provide scientific capital from which practical application of knowledge is drawn. Keeping in view the significance of basic sciences and humanities for proper understanding and development of different areas of agriculture and allied fields, the College of Basic Sciences and Humanities was established in October, 1965. Dr A S Kahlon was the founder Dean of the College and he continued in this position up to October, 1978.

Floating Point

Contents
Articles: Floating point; Positional notation
References: Article Sources and Contributors; Image Sources, Licenses and Contributors
Article Licenses: License

Floating point
In computing, floating point describes a method of representing an approximation of a real number in a way that can support a wide range of values. The numbers are, in general, represented approximately to a fixed number of significant digits (the significand) and scaled using an exponent. The base for the scaling is normally 2, 10 or 16. The typical number that can be represented exactly is of the form:

significant digits × base^exponent

The idea of floating-point representation over intrinsically integer fixed-point numbers, which consist purely of significand, is that expanding it with the exponent component achieves greater range. For instance, to represent large values, e.g. distances between galaxies, there is no need to keep all 39 decimal places down to femtometre-resolution (employed in particle physics). Assuming that the best resolution is in light years, only the 9 most significant decimal digits matter, whereas the remaining 30 digits carry pure noise, and thus can be safely dropped. This represents a savings of 100 bits of computer data storage. Instead of these 100 bits, much fewer are used to represent the scale (the exponent), e.g. 8 bits or 2 decimal digits. Given that one number can encode both astronomic and subatomic distances with the same nine digits of accuracy, but …

Figure: An early electromechanical programmable computer, the Z3, included floating-point arithmetic (replica on display at Deutsches Museum in Munich).
Figure: A diagram showing a representation of a decimal floating-point number using a mantissa and an exponent.
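The significand-and-exponent form and the "100 bits" figure in the excerpt can be checked with a short Python sketch. It assumes base 2 and uses the standard library's `math.frexp`; the helper names `decompose` and `bits_for_decimal_digits` are hypothetical and introduced only for this illustration.

```python
import math


def decompose(x: float) -> tuple[float, int]:
    """Split x into (significand, exponent) so that x == significand * 2**exponent.

    math.frexp returns a significand m with 0.5 <= |m| < 1 for nonzero x.
    """
    return math.frexp(x)


def bits_for_decimal_digits(digits: int) -> float:
    """Approximate number of bits needed to hold the given number of decimal digits."""
    return digits * math.log2(10)


if __name__ == "__main__":
    m, e = decompose(6.0)
    print(m, e)                         # significand and base-2 exponent of 6.0
    print(bits_for_decimal_digits(30))  # just under 100, matching the excerpt's estimate
    print(bits_for_decimal_digits(2))   # bits needed for a 2-digit decimal exponent
```

Each decimal digit carries log2(10) ≈ 3.32 bits, so dropping 30 digits saves roughly 100 bits, while a two-digit exponent costs fewer than 8.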

Non-Power Positional Number Representation Systems, Bijective Numeration, and the Mesoamerican Discovery of Zero

Berenice Rojo-Garibaldi (a), Costanza Rangoni (b), Diego L. González (b, c), and Julyan H. E. Cartwright (d, e)
(a) Posgrado en Ciencias del Mar y Limnología, Universidad Nacional Autónoma de México, Av. Universidad 3000, Col. Copilco, Del. Coyoacán, Cd.Mx. 04510, México
(b) Istituto per la Microelettronica e i Microsistemi, Area della Ricerca CNR di Bologna, 40129 Bologna, Italy
(c) Dipartimento di Scienze Statistiche "Paolo Fortunati", Università di Bologna, 40126 Bologna, Italy
(d) Instituto Andaluz de Ciencias de la Tierra, CSIC–Universidad de Granada, 18100 Armilla, Granada, Spain
(e) Instituto Carlos I de Física Teórica y Computacional, Universidad de Granada, 18071 Granada, Spain
arXiv:2005.10207v2 [math.HO] 23 Mar 2021

Keywords: Zero | Maya | Pre-Columbian Mesoamerica | Number representation systems | Bijective numeration

Abstract. Pre-Columbian Mesoamerica was a fertile crescent for the development of number systems. A form of vigesimal system seems to have been present from the first Olmec civilization onwards, to which succeeding peoples made contributions. We discuss the Maya use of the representational redundancy present in their Long Count calendar, a non-power positional number representation system with multipliers 1, 20, 18 × 20, ..., 18 × 20^n. We demonstrate that the Mesoamericans did not need to invent positional notation and discover zero at the same time because they were not afraid of using a number system in which the same number can be written in different ways. A Long Count number system with digits from 0 to 20 is seen later to pass to one using digits 0 to 19, which leads us to propose that even earlier there may have been an initial zeroless bijective numeration system whose digits ran from 1 to 20.
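A small Python sketch can make the non-power place values and the redundancy mentioned in the abstract concrete. It assumes only the multipliers stated above (1, 20, 18 × 20, ..., 18 × 20^n); the function names and the default of five places are hypothetical choices for illustration, not taken from the paper.

```python
def long_count_place_values(n_places: int) -> list[int]:
    """Place values 1, 20, 18*20, 18*20**2, ... (least significant first)."""
    values = [1, 20]
    while len(values) < n_places:
        values.append(18 * 20 ** (len(values) - 1))
    return values[:n_places]


def to_long_count(days: int, n_places: int = 5) -> list[int]:
    """Greedy conversion of a non-negative day count to digits, most significant first.

    The leading digit is left unbounded if `days` exceeds the range of n_places.
    """
    digits = []
    for value in reversed(long_count_place_values(n_places)):
        digit, days = divmod(days, value)
        digits.append(digit)
    return digits


def from_long_count(digits: list[int]) -> int:
    """Evaluate digits (most significant first); any digit values are accepted."""
    values = long_count_place_values(len(digits))
    return sum(d * v for d, v in zip(digits, reversed(values)))


if __name__ == "__main__":
    print(long_count_place_values(5))
    print(to_long_count(360))
    # Allowing the digit 20 makes the notation redundant: both spell the number 20.
    print(from_long_count([0, 0, 0, 0, 20]), from_long_count([0, 0, 0, 1, 0]))
```

Because `from_long_count` does not restrict the digit range, it can evaluate both the redundant form with a digit 20 and the canonical form produced by `to_long_count`.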

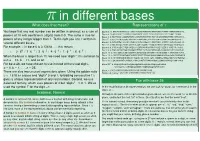

π in Other Bases

π in different bases

What does that mean?
You know that any real number can be written in decimal: as a sum of powers of 10 with coefficients (digits) from 0-9. The same is true for powers of any integer bigger than 1. Below you see π written in several different bases.

For example, π in base 9 is 3.12418...; this means
π = 3 · 9^0 + 1 · 9^-1 + 2 · 9^-2 + 4 · 9^-3 + 1 · 9^-4 + 8 · 9^-5 + ···

When the base is larger than 10, we need new “digits”; it is common to use a = 10, b = 11, and so on. For base 26, we have chosen to use instead of the usual digits, a = 0; …

Representations of π
Base 2: 11.001001000011111101101010100010001000010110100011000010001101...
Base 3: 10.0102110122220102110021111102212222201112012121212001211001...
Base 4: 3.0210033312222020201122030020310301030121202202320003130013031...
Base 5: 3.032322143033432411241224041402314211143020310022003444132211...
Base 6: 3.0503300514151241052344140531253211023012144420041152525533142...
Base 7: 3.0663651432036134110263402244652226643520650240155443215426431...
Base 8: 3.110375524210264302151423063050560067016321122011160210514763...
Base 9: 3.1241881240744278864517776173103582851654535346265230112632145...
Base 10: 3.141592653589793238462643383279502884197169399375105820974944...
Base 11: 3.16150702865a48523521525977752941838668848853163a1a54213004658...
Base 12: 3.184809493b918664573a6211bb151551a05729290a7809a492742140a60a5...
Base 16: 3.243f6a8885a308d313198a2e03707344a4093822299f31d0082efa98ec4e6...
Base 26*: d.drsqlolyrtrodnlhnqtgkudqgtuirxneqbckbszivqqvgdmelmu
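The leading digits of expansions like these can be reproduced with exact rational arithmetic. The sketch below is an illustration under stated assumptions: `PI_APPROX` is a 38-decimal-place rational approximation of π (enough for a few leading digits in any small base, not for the full strings listed), the name `to_base` is hypothetical, and the digit alphabet follows the convention a = 10, b = 11, and so on, not the special base-26 alphabet.

```python
from fractions import Fraction

DIGITS = "0123456789abcdefghijklmnopqrstuvwxyz"

# Rational approximation of pi, accurate to 38 decimal places (an assumption of
# this sketch; only the first few digits in each base should be trusted).
PI_APPROX = Fraction("3.14159265358979323846264338327950288419")


def to_base(x: Fraction, base: int, n_frac: int) -> str:
    """Write a non-negative rational x in the given base with n_frac fractional digits."""
    integer = x.numerator // x.denominator
    frac = x - integer

    # Integer part: repeated division by the base.
    int_digits = "" if integer else "0"
    while integer > 0:
        integer, r = divmod(integer, base)
        int_digits = DIGITS[r] + int_digits

    # Fractional part: repeatedly multiply by the base and peel off the integer part.
    frac_digits = ""
    for _ in range(n_frac):
        frac *= base
        d = frac.numerator // frac.denominator
        frac_digits += DIGITS[d]
        frac -= d
    return int_digits + "." + frac_digits


if __name__ == "__main__":
    for b in (2, 6, 9, 10, 16):
        print(b, to_base(PI_APPROX, b, 8))
```

The digits are truncated rather than rounded, which matches how the expansions above are listed.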

Numbers 1 to 100

PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information. PDF generated at: Tue, 30 Nov 2010 02:36:24 UTC

Contents
Articles, each titled "N (number)": N = −1 through 64, 66 through 75, and 77 through 88 …