Estimation of Pareto Distribution Functions from Samples Contaminated by Measurement Errors

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


A New Parameter Estimator for the Generalized Pareto Distribution Under the Peaks Over Threshold Framework

mathematics Article

A New Parameter Estimator for the Generalized Pareto Distribution under the Peaks over Threshold Framework

Xu Zhao 1,*, Zhongxian Zhang 1, Weihu Cheng 1 and Pengyue Zhang 2

1 College of Applied Sciences, Beijing University of Technology, Beijing 100124, China; [email protected] (Z.Z.); [email protected] (W.C.)
2 Department of Biomedical Informatics, College of Medicine, The Ohio State University, Columbus, OH 43210, USA; [email protected]
* Correspondence: [email protected]

Received: 1 April 2019; Accepted: 30 April 2019; Published: 7 May 2019

Abstract: Techniques used to analyze exceedances over a high threshold are in great demand for research in economics, environmental science, and other fields. The generalized Pareto distribution (GPD) has been widely used to fit observations exceeding the tail threshold in the peaks over threshold (POT) framework. Parameter estimation and threshold selection are two critical issues for threshold-based GPD inference. In this work, we propose a new GPD-based estimation approach by combining the method of moments and likelihood moment techniques based on the least squares concept, in which the shape and scale parameters of the GPD can be simultaneously estimated. To analyze extreme data, the proposed approach estimates the parameters by minimizing the sum of squared deviations between the theoretical GPD function and its expectation. Additionally, we introduce a recently developed stopping rule to choose the suitable threshold above which the GPD asymptotically fits the exceedances. Simulation studies show that the proposed approach performs better than or similarly to existing approaches, in terms of bias and mean square error, in estimating the shape parameter.
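As a point of reference for the estimation problem this abstract describes, here is a minimal Python sketch of the plain method-of-moments estimator for the GPD, applied to exceedances over a threshold. It is not the combined least-squares estimator the authors propose. The function name `gpd_method_of_moments`, its parameters, and the sample data are illustrative assumptions. The sketch relies on the standard GPD mean $\sigma/(1-\xi)$ and variance $\sigma^2/((1-\xi)^2(1-2\xi))$, so it only makes sense when the shape $\xi$ is below 1/2.

```python
import statistics


def gpd_method_of_moments(observations, threshold):
    """Plain method-of-moments GPD fit to exceedances over `threshold`.

    Returns (shape, scale). Hypothetical helper for illustration only;
    it assumes the true shape is below 1/2 so that the variance exists.
    """
    # Peaks over threshold: keep only the excess amounts above the threshold.
    excesses = [x - threshold for x in observations if x > threshold]
    if len(excesses) < 2:
        raise ValueError("need at least two exceedances")

    m = statistics.mean(excesses)
    s2 = statistics.variance(excesses)  # sample variance (n - 1 denominator)
    if s2 == 0:
        raise ValueError("exceedances have zero variance")

    # In population terms, mean^2 / variance equals 1 - 2 * shape.
    ratio = m * m / s2
    shape = 0.5 * (1.0 - ratio)
    scale = 0.5 * m * (ratio + 1.0)
    return shape, scale


if __name__ == "__main__":
    # Made-up data; only the values above the threshold enter the fit.
    data = [0.2, 1.5, 0.7, 2.0, 4.0, 0.1]
    print(gpd_method_of_moments(data, threshold=1.0))
```

Moment estimators of this kind are simple but become unreliable as the shape approaches 1/2, which is one reason alternative estimators for the GPD are studied.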

Independent Approximates Enable Closed-Form Estimation of Heavy-Tailed Distributions

Independent Approximates enable closed-form estimation of heavy-tailed distributions

Kenric P. Nelson

Abstract: Independent Approximates (IAs) are proven to enable a closed-form estimation of heavy-tailed distributions with an analytical density, such as the generalized Pareto and Student's t distributions. A broader proof using convolution of the characteristic function is described for future research. IAs are selected from independent, identically distributed samples by partitioning the samples into groups of size n and retaining the median of the samples in those groups which have approximately equal samples. The marginal distribution along the diagonal of equal values has a density proportional to the nth power of the original density. This nth-power density, which the IAs approximate, has faster tail decay, enabling closed-form estimation of its moments, and retains a functional relationship with the original density. Computational experiments with between 1,000 and 100,000 Student's t samples are reported over a range of location, scale, and shape (inverse of degree of freedom) parameters. IA pairs are used to estimate the location, IA triplets the scale, and the geometric mean of the original samples the shape. With 10,000 samples the relative bias of the parameter estimates is less than 0.01 and the relative precision is less than ±0.1. The theoretical bias is zero for the location, and the finite bias for the scale can be subtracted out. The theoretical precision has a finite range when the shape is less than 2 for the location estimate and less than 3/2 for the scale estimate.

ACTS 4304 FORMULA SUMMARY Lesson 1: Basic Probability Summary of Probability Concepts Probability Functions

ACTS 4304 FORMULA SUMMARY

Lesson 1: Basic Probability

Summary of Probability Concepts

Probability Functions

$F(x) = \Pr(X \le x)$
$S(x) = 1 - F(x)$
$f(x) = \dfrac{dF(x)}{dx}$
$H(x) = -\ln S(x)$
$h(x) = \dfrac{dH(x)}{dx} = \dfrac{f(x)}{S(x)}$

Functions of random variables

Expected value: $E[g(x)] = \int_{-\infty}^{\infty} g(x) f(x)\,dx$
n-th raw moment: $\mu'_n = E[X^n]$
n-th central moment: $\mu_n = E[(X - \mu)^n]$
Variance: $\sigma^2 = E[(X - \mu)^2] = E[X^2] - \mu^2$
Skewness: $\gamma_1 = \dfrac{\mu_3}{\sigma^3} = \dfrac{\mu'_3 - 3\mu'_2\mu + 2\mu^3}{\sigma^3}$
Kurtosis: $\gamma_2 = \dfrac{\mu_4}{\sigma^4} = \dfrac{\mu'_4 - 4\mu'_3\mu + 6\mu'_2\mu^2 - 3\mu^4}{\sigma^4}$
Moment generating function: $M(t) = E[e^{tX}]$
Probability generating function: $P(z) = E[z^X]$

More concepts

• Standard deviation ($\sigma$) is the positive square root of the variance
• Coefficient of variation is $CV = \sigma/\mu$
• The 100p-th percentile $\pi_p$ is any point satisfying $F(\pi_p^-) \le p$ and $F(\pi_p) \ge p$. If $F$ is continuous, it is the unique point satisfying $F(\pi_p) = p$
• The median is the 50-th percentile; the n-th quartile is the 25n-th percentile
• The mode is the $x$ which maximizes $f(x)$
• $M_X^{(n)}(0) = E[X^n]$, where $M^{(n)}$ is the n-th derivative
• $\dfrac{P_X^{(n)}(0)}{n!} = \Pr(X = n)$
• $P_X^{(n)}(1)$ is the n-th factorial moment of $X$

Bayes' Theorem

$\Pr(A \mid B) = \dfrac{\Pr(B \mid A)\Pr(A)}{\Pr(B)}$

$f_X(x \mid y) = \dfrac{f_Y(y \mid x) f_X(x)}{f_Y(y)}$

Law of total probability

If $B_i$ is a set of exhaustive (in other words, $\Pr(\cup_i B_i) = 1$) and mutually exclusive (in other words, $\Pr(B_i \cap B_j) = 0$ for $i \ne j$) events, then for any event $A$,

$\Pr(A) = \sum_i \Pr(A \cap B_i) = \sum_i \Pr(B_i)\Pr(A \mid B_i)$

Correspondingly, for continuous distributions,

$\Pr(A) = \int \Pr(A \mid x) f(x)\,dx$

Conditional Expectation Formula

$E_X[X] = E_Y[E_X[X \mid Y]]$

Lesson 2: Parametric Distributions

Forms of probability
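The skewness and kurtosis formulas in the Lesson 1 summary above express central moments through raw moments. A small Python sketch can make that conversion concrete. The function name `moments_from_raw` and its argument names are assumptions introduced here for illustration; they do not come from the formula summary.

```python
import math


def moments_from_raw(m1, m2, m3, m4):
    """Return (variance, skewness, kurtosis) from the first four raw moments.

    m1..m4 stand for E[X], E[X^2], E[X^3], E[X^4]. Hypothetical helper
    that applies the raw-to-central moment identities directly.
    """
    mu = m1
    variance = m2 - mu ** 2
    if variance <= 0:
        raise ValueError("variance must be positive")

    # Third and fourth central moments written in terms of raw moments.
    mu3 = m3 - 3 * m2 * mu + 2 * mu ** 3
    mu4 = m4 - 4 * m3 * mu + 6 * m2 * mu ** 2 - 3 * mu ** 4

    sigma = math.sqrt(variance)
    return variance, mu3 / sigma ** 3, mu4 / sigma ** 4


if __name__ == "__main__":
    # Exponential distribution with mean 1: the n-th raw moment is n!.
    print(moments_from_raw(1, 2, 6, 24))
```

For the exponential distribution with mean 1, whose raw moments are 1, 2, 6, and 24, this gives variance 1, skewness 2, and kurtosis 9.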

Fixed-K Asymptotic Inference About Tail Properties

Journal of the American Statistical Association, ISSN: 0162-1459 (Print) 1537-274X (Online). Journal homepage: http://www.tandfonline.com/loi/uasa20

Fixed-k Asymptotic Inference About Tail Properties

Ulrich K. Müller & Yulong Wang, Department of Economics, Princeton University, Princeton, NJ

To cite this article: Ulrich K. Müller & Yulong Wang (2017) Fixed-k Asymptotic Inference About Tail Properties, Journal of the American Statistical Association, 112:519, 1334-1343, DOI: 10.1080/01621459.2016.1215990

To link to this article: https://doi.org/10.1080/01621459.2016.1215990

Accepted author version posted online: 12 Aug 2016. Published online: 13 Jun 2017.

Theory and Methods

ARTICLE HISTORY: Received February; Accepted July

KEYWORDS: Extreme quantiles; tail conditional expectations;

ABSTRACT: We consider inference about tail properties of a distribution from an iid sample, based on extreme value theory. All of the numerous previous suggestions rely on asymptotics where eventually, an infinite number of observations from the tail behave as predicted by extreme value theory, enabling the consistent estimation of the key tail index, and the construction of confidence intervals using the delta method or other classic approaches. In small samples, however, extreme value theory might well provide good approximations for only a relatively small number of tail observations.

Package 'Renext'

Package 'Renext'

July 4, 2016

Type: Package
Title: Renewal Method for Extreme Values Extrapolation
Version: 3.1-0
Date: 2016-07-01
Author: Yves Deville <[email protected]>, IRSN <[email protected]>
Maintainer: Lise Bardet <[email protected]>
Depends: R (>= 2.8.0), stats, graphics, evd
Imports: numDeriv, splines, methods
Suggests: MASS, ismev, XML
Description: Peaks Over Threshold (POT) or 'methode du renouvellement'. The distribution for the exceedances can be chosen, and heterogeneous data (including historical data or block data) can be used in a Maximum-Likelihood framework.
License: GPL (>= 2)
LazyData: yes
NeedsCompilation: no
Repository: CRAN
Date/Publication: 2016-07-04 20:29:04

R topics documented: Renext-package, anova.Renouv, barplotRenouv, Brest, Brest.years, Brest.years.missing, CV2, CV2.test, Dunkerque, EM.mixexp, expplot, fgamma, fGEV.MAX, fGPD, flomax, fmaxlo, fweibull, Garonne, gev2Ren, gof.date, gofExp.test, GPD, gumbel2Ren, Hpoints, ini.mixexp2, interevt, Jackson, Jackson.test, logLik.Renouv, Lomax, LRExp, LRExp.test, LRGumbel, LRGumbel.test, Maxlo, MixExp2, mom.mixexp2, mom2par, NBlevy, OT2MAX, OTjitter, parDeriv, parIni.MAX, pGreenwood1, plot.Rendata, plot.Renouv, PPplot, predict.Renouv, qStat, readXML, Ren2gev, Ren2gumbel, Renouv, RenouvNoEst, RLlegend, RLpar, RLplot, roundPred, rRendata, SandT, skip2noskip, ...

Handbook on Probability Distributions

Handbook on probability distributions

R-forge distributions Core Team, University Year 2009-2010. R powered; R-forge project; LaTeX powered; Mac OS' TeXShop edited.

Contents

Introduction
I Discrete distributions
1 Classic discrete distribution
2 Not so-common discrete distribution
II Continuous distributions
3 Finite support distribution
4 The Gaussian family
5 Exponential distribution and its extensions
6 Chi-squared's distribution and related extensions
7 Student and related distributions
8 Pareto family
9 Logistic distribution and related extensions
10 Extreme Value Theory distributions
III Multivariate and generalized distributions
11 Generalization of common distributions
12 Multivariate distributions
13 Misc
Conclusion
Bibliography
A Mathematical tools

Introduction

This guide is intended to provide a fairly exhaustive (as far as I can) view of probability distributions. It is organized in chapters by distribution family, with a section for each distribution. Each section focuses on the triptych: definition, estimation, application. The ultimate references for probability distributions are Wimmer & Altmann (1999), which lists 750 univariate discrete distributions, and Johnson et al. (1994), which details continuous distributions. In the appendix, we recall the basics of probability distributions as well as "common" mathematical functions, cf. section A.2.

For all distributions, we use the following notation:
• X a random variable following a given distribution,
• x a realization of this random variable,
• f the density function (if it exists),
• F the (cumulative) distribution function,
• P(X = k) the probability mass function at k,
• M the moment generating function (if it exists),
• G the probability generating function (if it exists),
• φ the characteristic function (if it exists).

Finally, all graphics are produced with the open-source statistical software R and its numerous packages available on the Comprehensive R Archive Network (CRAN).

"Theoretical Properties of the Weighted Feller-Pareto Distributions." Asian Journal of Mathematics and Applications, 2014: 1-12

Georgia Southern University, Digital Commons@Georgia Southern. Mathematical Sciences Faculty Publications, Department of Mathematical Sciences, 2014.

Theoretical Properties of the Weighted Feller-Pareto Distributions

Oluseyi Odubote, Georgia Southern University; Broderick O. Oluyede, Georgia Southern University, [email protected]

Recommended Citation: Odubote, Oluseyi, Broderick O. Oluyede. 2014. "Theoretical Properties of the Weighted Feller-Pareto Distributions." Asian Journal of Mathematics and Applications, 2014: 1-12. source: http://scienceasia.asia/index.php/ama/article/view/173/ https://digitalcommons.georgiasouthern.edu/math-sci-facpubs/307

ASIAN JOURNAL OF MATHEMATICS AND APPLICATIONS, Volume 2014, Article ID ama0173, 12 pages, ISSN 2307-7743, http://scienceasia.asia

THEORETICAL PROPERTIES OF THE WEIGHTED FELLER-PARETO AND RELATED DISTRIBUTIONS

OLUSEYI ODUBOTE AND BRODERICK O. OLUYEDE

Abstract. In this paper, for the first time, a new six-parameter class of distributions called weighted Feller-Pareto (WFP) and related family of distributions is proposed. This new class of distributions contains several other Pareto-type distributions such as length-biased (LB) Pareto, weighted Pareto (WP I, II, III, and IV), and Pareto (P I, II, III, and IV) distributions as special cases.

On Families of Generalized Pareto Distributions: Properties and Applications

Journal of Data Science, 377-396, DOI: 10.6339/JDS.201804_16(2).0008

On Families of Generalized Pareto Distributions: Properties and Applications

D. Hamed 1, F. Famoye 2 and C. Lee 2

1 Department of Mathematics, Winthrop University, Rock Hill, SC, USA; 2 Department of Mathematics, Central Michigan University, Mount Pleasant, Michigan, USA

In this paper, we introduce some new families of generalized Pareto distributions using the T-R{Y} framework. These families of distributions are named T-Pareto{Y} families, and they arise from the quantile functions of exponential, log-logistic, logistic, extreme value, Cauchy and Weibull distributions. The shapes of these T-Pareto families can be unimodal or bimodal, skewed to the left or skewed to the right with heavy tail. Some general properties of the T-Pareto{Y} family are investigated, and these include the moments, modes, mean deviations from the mean and from the median, and Shannon entropy. Several new generalized Pareto distributions are also discussed. Four real data sets from engineering, biomedical and social science are analyzed to demonstrate the flexibility and usefulness of the T-Pareto{Y} families of distributions.

Key words: Shannon entropy; quantile function; moment; T-X family.

1. Introduction

The Pareto distribution is named after the well-known Italian-born Swiss sociologist and economist Vilfredo Pareto (1848-1923). Pareto [1] defined Pareto's Law, which can be stated as $N = A x^{-a}$, where $N$ represents the number of persons having income $\ge x$ in a population. The Pareto distribution is commonly used in modelling heavy-tailed distributions, including but not limited to income, insurance and city size populations.

A New Generalization of the Pareto Distribution and Its Application to Insurance Data

Journal of Risk and Financial Management, Article

A New Generalization of the Pareto Distribution and Its Application to Insurance Data

Mohamed E. Ghitany 1,*, Emilio Gómez-Déniz 2 and Saralees Nadarajah 3

1 Department of Statistics and Operations Research, Faculty of Science, Kuwait University, Safat 13060, Kuwait
2 Department of Quantitative Methods and TiDES Institute, University of Las Palmas de Gran Canaria, 35017 Gran Canaria, Spain; [email protected]
3 School of Mathematics, University of Manchester, Manchester M13 9PL, UK; [email protected]
* Correspondence: [email protected]

Received: 26 November 2017; Accepted: 2 February 2018; Published: 7 February 2018

Abstract: The classical Pareto distribution is one of the most attractive in statistics, particularly in actuarial statistics and finance. For example, it is widely used when calculating reinsurance premiums. In recent years, many alternative distributions have been proposed to obtain better fits, especially when the tail of the empirical distribution of the data is very long. In this work, an alternative generalization of the Pareto distribution is proposed and its properties are studied. Finally, an application of the proposed model to an earthquake insurance data set is presented.

Keywords: gamma distribution; estimation; financial risk; fit; Pareto distribution

MSC: 62E10; 62F10; 62F15; 62P05

1. Introduction

In general insurance, only a few large claims arising in the portfolio represent the largest part of the payments made by the insurance company. Appropriate estimation of these extreme events is crucial for the practitioner to correctly assess insurance and reinsurance premiums. On this subject, the single-parameter Pareto distribution (Arnold 1983; Brazauskas and Serfling 2003; Rytgaard 1990), among others, has traditionally been considered a suitable claim size distribution in relation to rating problems.

Exploring Heavy Tails Pareto and Generalized Pareto Distributions

Exploring Heavy Tails: Pareto and Generalized Pareto Distributions

September 25, 2019

This vignette is designed to give a short overview of Pareto Distributions and Generalized Pareto Distributions (GPD). We will work with the SPC.we data of our quantmod vignette. Therefore we have to reproduce the SPC.we data in exactly the same way as described in the quantmod vignette. In financial data analysis, stock indices such as the S&P 500 index are typically analyzed by using the returns of the index. We use the log-returns

`WSPLRet <- diff(log(SPC.we))`

We start to analyze these by plotting a histogram

`hist(WSPLRet)`

Figure 1: Histogram of the log-returns of the S&P 500 from 1960-01-04 to 2009-01-01.

This histogram shows a unimodal distribution of values with the peak around 0, which nourishes the hypothesis that the log-returns are normally distributed. A very intuitive method to test this is the Q-Q plot. The slope of the (linear regression) line and its intercept determine the parameters of the corresponding Gaussian distribution. If the points are close to this line the empirical distribution of the sample can

`qqnorm(WSPLRet)`
`qqline(WSPLRet)`

Figure 2: Q-Q plot of WSPLRet values.

The Pareto Distribution

The Pareto Distribution

Background

Power Function

Consider an arbitrary power function, $x \mapsto k x^{\alpha}$, where $k$ is a constant and the exponent $\alpha$ governs the relationship. Note that if $y = k x^{\alpha}$, then $\log y = \log k + \alpha \log x$. That is, the logarithmic transformation of this power function is linear in $\log x$. Another way to say this is that the elasticity of $y$ with respect to $x$ is constant: $\dfrac{d \log y}{d \log x} = \alpha$. Since the elasticity does not depend on the size of $x$, we sometimes say that the power relationship is scale invariant.

[Figure: Two Power Functions. Panels: Levels; Log Transform.]

Power Law

A power law is a theoretical or empirical relationship governed by a power function.

◼ In geometry, the area of a regular polygon is proportional to the square of the length of a side.
◼ In physics, the gravitational attraction of two objects is inversely proportional to the square of their distance.
◼ In ecology, Taylor's Law states that the variance of population density is a power function of mean population density.
◼ In economics, Gabaix (1999) finds the population of cities follows a power law with an inequality parameter close to 1 (see below).
◼ In economics, Luttmer (2007) finds the distribution of employment in US firms follows a power law with inequality parameter close to 1.
◼ In economics and business, the Pareto Principle (or 80-20 rule) says that 80% of income accrues to the top 20% of income recipients.
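The log-linearity described in the Power Function paragraph above suggests a simple way to recover $k$ and $\alpha$ from data: regress $\log y$ on $\log x$. The following Python sketch illustrates this under the assumption of exact, noise-free positive data. The function name `fit_power_function` and the sample values are hypothetical and are not taken from the notebook.

```python
import math


def fit_power_function(xs, ys):
    """Estimate (k, alpha) in y = k * x**alpha by least squares on logs.

    Hypothetical helper for illustration; all xs and ys must be positive
    so that their logarithms exist.
    """
    log_x = [math.log(x) for x in xs]
    log_y = [math.log(y) for y in ys]
    n = len(log_x)
    mean_x = sum(log_x) / n
    mean_y = sum(log_y) / n

    # Ordinary least squares slope of log y on log x: the constant elasticity.
    sxx = sum((a - mean_x) ** 2 for a in log_x)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(log_x, log_y))
    alpha = sxy / sxx

    # The intercept of the fitted line is log k.
    k = math.exp(mean_y - alpha * mean_x)
    return k, alpha


if __name__ == "__main__":
    xs = [1.0, 2.0, 4.0, 8.0]
    ys = [2.0 * x ** 1.5 for x in xs]  # generated with k = 2, alpha = 1.5
    print(fit_power_function(xs, ys))
```

With real data the points will scatter around the line, and a least-squares fit on logs is only a rough first look; estimating the exponent of a Pareto tail from a sample usually calls for dedicated estimators.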

Application of Generalized Pareto Distribution to Constrain Uncertainty in Low-Probability Peak Ground Accelerations Luc Huyse,1 Rui Chen,2 John A

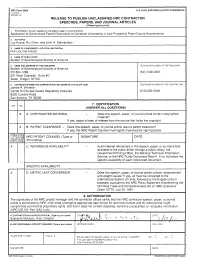

NRC Form 390A, U.S. NUCLEAR REGULATORY COMMISSION (12-2003), NRCMD 3.9

RELEASE TO PUBLISH UNCLASSIFIED NRC CONTRACTOR SPEECHES, PAPERS, AND JOURNAL ARTICLES (Please type or print)

1. TITLE (State in full as it appears on the speech, paper, or journal article): Application of Generalized Pareto Distribution to Constrain Uncertainty in Low-Probability Peak Ground Accelerations

2. AUTHOR(s): Luc Huyse, Rui Chen, and John A. Stamatakos

3. NAME OF CONFERENCE, LOCATION, AND DATE(s): N/A (Journal Article)

4. NAME OF PUBLICATION: Bulletin of Seismological Society of America

5. NAME AND ADDRESS OF THE PUBLISHER: Bulletin of Seismological Society of America, PO Box 1268, 221 West Cascade, Suite #C, Sister, Oregon 97759. TELEPHONE NUMBER OF THE PUBLISHER: (541) 549-2203

6. CONTRACTOR NAME AND COMPLETE MAILING ADDRESS (Include ZIP code): James R. Winterle, Center for Nuclear Waste Regulatory Analyses, 6220 Culebra Road, San Antonio, TX 78238. TELEPHONE NUMBER OF THE CONTRACTOR: (210) 522-5249

7. CERTIFICATION (ANSWER ALL QUESTIONS)

A. COPYRIGHTED MATERIAL - Does this speech, paper, or journal article contain copyrighted material? If yes, attach a letter of release from the source that holds the copyright.

B. PATENT CLEARANCE - Does this speech, paper, or journal article require patent clearance? If yes, the NRC Patent Counsel must signify clearance by signing below. COUNSEL (Type or ...), SIGNATURE, DATE

C. REFERENCE AVAILABILITY - Is all material referenced in this speech, paper, or journal article available to the public either through a public library, the Government Printing Office, the National Technical Information Service, or the NRC Public Document Room? If no, list below the specific availability of each referenced document.