The Enigma Behind the Good–Turing Formula




Recommended publications


APA Newsletter on Philosophy and Computers, Vol. 18, No. 2 (Spring

NEWSLETTER | The American Philosophical Association. Philosophy and Computers, SPRING 2019, VOLUME 18 | NUMBER 2. FEATURED ARTICLE: Jack Copeland and Diane Proudfoot, Turing’s Mystery Machine. ARTICLES: Igor Aleksander, Systems with “Subjective Feelings”: The Logic of Conscious Machines; Magnus Johnsson, Conscious Machine Perception; Stefan Lorenz Sorgner, Transhumanism: The Best Minds of Our Generation Are Needed for Shaping Our Future. PHILOSOPHICAL CARTOON: Riccardo Manzotti, What and Where Are Colors? COMMITTEE NOTES: Marcello Guarini, Note from the Chair; Peter Boltuc, Note from the Editor; Adam Briggle, Sky Croeser, Shannon Vallor, D. E. Wittkower, A New Direction in Supporting Scholarship on Philosophy and Computers: The Journal of Sociotechnical Critique. CALL FOR PAPERS. © 2019 BY THE AMERICAN PHILOSOPHICAL ASSOCIATION, ISSN 2155-9708. PETER BOLTUC, EDITOR.
FEATURED ARTICLE: Turing’s Mystery Machine. Jack Copeland and Diane Proudfoot, UNIVERSITY OF CANTERBURY, CHRISTCHURCH, NZ. ABSTRACT: This is a detective story. The starting-point is a philosophical discussion in 1949, where Alan Turing mentioned a machine whose program, he said, would in practice be “impossible to find.” Turing used his unbreakable machine example to defeat an argument against the possibility of artificial intelligence. … Polanyi’s? A machine that, although “quite a simple” one, thwarted attempts to analyze it? A “SIMPLE MACHINE”: Turing again mentioned a simple machine with an undiscoverable program in his 1950 article “Computing Machinery and Intelligence” (published in Mind). He was arguing against the proposition that “given a discrete-state machine it should certainly be possible to discover by observation sufficient about it to predict its future behaviour, and this within a reasonable time, say a thousand years.” This “does not seem to be the case,” he said, and he went on to describe a counterexample: I have set up on the Manchester computer a small …

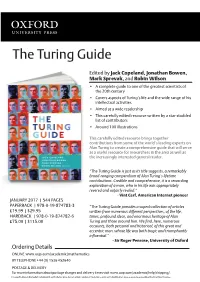

The Turing Guide

The Turing Guide. Edited by Jack Copeland, Jonathan Bowen, Mark Sprevak, and Robin Wilson.
• A complete guide to one of the greatest scientists of the 20th century
• Covers aspects of Turing’s life and the wide range of his intellectual activities
• Aimed at a wide readership
• A carefully edited resource written by a star-studded list of contributors
• Around 100 illustrations
This carefully edited resource brings together contributions from some of the world’s leading experts on Alan Turing to create a comprehensive guide that will serve as a useful resource for researchers in the area as well as the increasingly interested general reader.
“The Turing Guide is just as its title suggests, a remarkably broad-ranging compendium of Alan Turing’s lifetime contributions. Credible and comprehensive, it is a rewarding exploration of a man, who in his life was appropriately revered and unfairly reviled.” - Vint Cerf, American Internet pioneer
“The Turing Guide provides a superb collection of articles written from numerous different perspectives, of the life, times, profound ideas, and enormous heritage of Alan Turing and those around him. We find, here, numerous accounts, both personal and historical, of this great and eccentric man, whose life was both tragic and triumphantly influential.” - Sir Roger Penrose, University of Oxford
JANUARY 2017 | 544 PAGES. PAPERBACK | 978-0-19-874783-3 | £19.99 | $29.95. HARDBACK | 978-0-19-874782-6 | £75.00 | $115.00.
Ordering Details: ONLINE www.oup.com/academic/mathematics; BY TELEPHONE +44 (0) 1536 452640; POSTAGE & DELIVERY: For more information about postage charges and delivery times visit www.oup.com/academic/help/shipping/.

Can Machines Think? The Controversy That Led to the Turing Test, 1946-1950

Can machines think? The controversy that led to the Turing test, 1946-1950. Bernardo Gonçalves. Abstract: Turing’s much debated test has turned 70 and is still fairly controversial. His 1950 paper is seen as a complex and multi-layered text and key questions remain largely unanswered. Why did Turing choose learning from experience as the best approach to achieve machine intelligence? Why did he spend several years working with chess-playing as a task to illustrate and test for machine intelligence only to trade it off for conversational question-answering later in 1950? Why did Turing refer to gender imitation in a test for machine intelligence? In this article I shall address these questions directly by unveiling social, historical and epistemological roots of the so-called Turing test. I will draw attention to a historical fact that has been scarcely observed in the secondary literature so far, namely, that Turing's 1950 test came out of a controversy over the cognitive capabilities of digital computers, most notably with physicist and computer pioneer Douglas Hartree, chemist and philosopher Michael Polanyi, and neurosurgeon Geoffrey Jefferson. Seen from its historical context, Turing’s 1950 paper can be understood as essentially a reply to a series of challenges posed to him by these thinkers against his view that machines can think. Keywords: Alan Turing, Can machines think?, The imitation game, The Turing test, Mind-machine controversy, History of artificial intelligence.
Robin Gandy (1919-1995) was one of Turing’s best friends and his only doctoral student. He received Turing’s mathematical books and papers when Turing died in 1954, and took over from Max Newman in 1963 the task of editing the papers for publication. Regarding Turing’s purpose in writing his 1950 paper and sending it for publication, Gandy offered a testimony previously brought forth by Jack Copeland which has not yet been commented on: It was intended not so much as a penetrating contribution to philosophy but as propaganda.

When Shannon Met Turing

DOI: http://dx.doi.org/10.14236/ewic/EVA2017.9
Life in Code and Digits: When Shannon met Turing. Tula Giannini, Dean and Professor, School of Information, Pratt Institute, New York, USA, http://mysite.pratt.edu/~giannini/ and Jonathan P. Bowen, Professor of Computing, School of Engineering, London South Bank University, London, UK, http://www.jpbowen.com
Claude Shannon (1916–2001) is regarded as the father of information theory. Alan Turing (1912–1954) is known as the father of computer science. In the year 1943, Shannon and Turing were both at Bell Labs in New York City, although working on different projects. They had discussions together, including about Turing’s “Universal Machine,” a type of computational brain. Turing seems quite surprised that in a sea of code and computers, Shannon envisioned the arts and culture as an integral part of the digital revolution – a digital DNA of sorts. What was dreamlike in 1943 is today a reality, as digital representation of all media accounts for millions of “cultural things” and massive music collections. The early connections that Shannon made between the arts, information, and computing intuit the future that we are experiencing today. This paper considers foundational aspects of the digital revolution, the current state, and the possible future. It examines how digital life is increasingly becoming part of real life for more and more people around the world, especially with respect to the arts, culture, and heritage. Keywords: Computer science. Information theory. Digital aesthetics. Digital culture. GLAM. 1. INTRODUCTION 2016, Copeland et al.

Simply Turing

Simply Turing. MICHAEL OLINICK. SIMPLY CHARLY, NEW YORK. Copyright © 2020 by Michael Olinick. Cover Illustration by José Ramos. Cover Design by Scarlett Rugers. All rights reserved. No part of this publication may be reproduced, distributed, or transmitted in any form or by any means, including photocopying, recording, or other electronic or mechanical methods, without the prior written permission of the publisher, except in the case of brief quotations embodied in critical reviews and certain other noncommercial uses permitted by copyright law. For permission requests, write to the publisher at the address below. ISBN: 978-1-943657-37-7. Brought to you by http://simplycharly.com
Contents: Praise for Simply Turing; Other Great Lives; Series Editor's Foreword; Preface; Acknowledgements; 1. Roots and Childhood; 2. Sherborne and Christopher Morcom; 3. Cambridge Days; 4. Birth of the Computer; 5. Princeton; 6. Cryptology From Caesar to Turing; 7. The Enigma Machine; 8. War Years; 9. London and the ACE; 10. Manchester; 11. Artificial Intelligence; 12. Mathematical Biology; 13. Regina vs Turing; 14. Breaking The Enigma of Death; 15. Turing’s Legacy; Sources; Suggested Reading; About the Author; A Word from the Publisher.
Praise for Simply Turing: “Simply Turing explores the nooks and crannies of Alan Turing’s multifarious life and interests, illuminating with skill and grace the complexities of Turing’s personality and the long-reaching implications of his work.” - Charles Petzold, author of The Annotated Turing: A Guided Tour through Alan Turing’s Historic Paper on Computability and the Turing Machine. “Michael Olinick has written a remarkably fresh, detailed study of Turing’s achievements and personal issues.

Alan Turing: Virtuosity and Visualisation

http://dx.doi.org/10.14236/ewic/EVA2016.40
Alan Turing: Virtuosity and visualisation. Jonathan P. Bowen, London South Bank University, UK & Museophile Limited, Oxford, UK, http://www.jpbowen.com
Alan Turing (1912–1954) has been increasingly recognised as an important mathematician who, despite his short life, developed mathematical ideas that today have led to foundational aspects of computer science, especially with respect to computability, artificial intelligence and morphogenesis (the growth of biological patterns, important in mathematical biology). Some of Turing’s mathematics and related ideas can be visualised in interesting and even artistic ways, aided using software. In addition, a significant corpus of the historical documentation on Turing can now be accessed online as a number of major archives have digitised material related to Turing. This paper introduces some of the major scientific work of Turing and ways in which it can be visualised, often artistically. Turing’s fame has, especially since his centenary in 2012, reached a level where he has had a cultural influence on the arts in general. Although the story of Turing can be seen as one of tragedy, with his life cut short, from a historical viewpoint Turing’s contribution to humankind has been triumphant. Keywords: Artificial Intelligence. Digital art. History of mathematics. Morphogenesis. Turing Machines. Visualisation.
1. BACKGROUND AND INTRODUCTION: Alan Mathison Turing, OBE, FRS (23 June 1912 – 7 June 1954) was a British mathematician and codebreaker (Newman 1955, Hodges 1983/2012). … a Turing Machine, with visualisations related to some of Turing’s ideas. Section 3 presents some Turing-related artwork, leading to a new book cover. In Section 4, major archives relating to Turing are …

US Navy Cryptanalytic Bombe - a Theory of Operation and Computer Simulation

US Navy Cryptanalytic Bombe - A Theory of Operation and Computer Simulation. Magnus Ekhall, Fredrik Hallenberg.
Abstract: This paper presents a computer simulation of the US Navy Turing bombe. The US Navy bombe, an improved version of the British Turing-Welchman bombe, was predominantly used to break German naval Enigma messages during World War II. By using simulations of a machine to break an example message it is shown how the US Navy Turing bombe could have been operated and how it would have looked when running. … cally accurate speed. The simulator will be made available to the public.
1 Introduction: In 1942, with the help of Bletchley Park, the US Navy signals intelligence and cryptanalysis group OP-20-G started working on a new Turing bombe design. The result was a machine with both similarities and differences compared to its British counterpart. There is an original US Navy bombe still in existence at the National Cryptologic Museum in Fort Meade, MD, USA. The bombe on display is not in working order and the exact way it was operated is not fully known. It is assumed that the reader is familiar with the Enigma machine. This knowledge is widely available, for example in (Welchman, 2014). To find an Enigma message key with the bombe it is necessary to have a piece of plaintext, a crib, corresponding to a part of the encrypted message.
Figure 1: An operator setting up the wheels on a US Navy bombe. Source: NSA

A New Master 1 Farewell To

NEWS AND FEATURES FROM THE BALLIOL COMMUNITY | JUNE 2018. On the cover: A New Master; Farewell to Sir Drummond Bone; Visit from the Met Commissioner; Social media: a threat to democracy?; How Balliol won University Challenge; Balliol entrepreneurs.
FROM THE MASTER. COLLEGE NEWS: New Fellows; A class act; Portrait of Professor Sir Drummond Bone; Deans on display; Awards; New Domestic Bursar; Visit from the Met Commissioner; New Outreach Officer; Admissions video; Our Oxford trip; Chinese visitors; Groundbreaking ceremony at the Master’s Field. STUDENT NEWS: Horses and art in Northern Plains tribes; Having a blast in Bangladesh; Balliol climbers at BUCS; Photo of single atom wins national competition; Orchestra tour; Judo medal; JCR introduces CAFG officers; First place in an international finance competition. BOOKS AND RESEARCH: #VoteLeave or #StrongerIn; Target democracy; Dynamics, vibration and uncertainty; Bookshelf. BALLIOL PAST AND PRESENT: Nicholas Crouch reconstructed; How Balliol won University Challenge; The Garden Quad in Wartime; Tutorials remembered; Walking in the footsteps of Belloc. ALUMNI STORIES: Social enterprise in Rwanda.
Balliol College, Oxford OX1 3BJ, www.balliol.ox.ac.uk. Copyright © Balliol College, Oxford, 2018. Editor: Anne Askwith (Publications and Web Officer). Editorial Adviser: Nicola Trott (Senior Tutor). Design and printing: Ciconi Ltd. Front cover: Balliol’s first female Master, Dame Helen Ghosh DCB (photograph by Rob Judges), who took up her position in April 2018.

The Turing Guide Free

The Turing Guide. Jack Copeland, Jonathan Bowen, Mark Sprevak, Robin Wilson (editors) | 544 pages | 02 Feb 2017 | Oxford University Press | 9780198747833 | English | Oxford, United Kingdom
Alan Turing has long proved a subject of fascination, but following the centenary of his birth in 2012, the code-breaker, computer pioneer, mathematician and much more has become even more celebrated with much media coverage, and several meetings, conferences and books raising public awareness of Turing's life and work. This volume will bring together contributions from some of the leading experts on Alan Turing to create a comprehensive guide to Turing that will serve as a useful resource for researchers in the area as well as the increasingly interested general reader.

A Bibliography of Publications of Alan Mathison Turing

A Bibliography of Publications of Alan Mathison Turing. Nelson H. F. Beebe, University of Utah, Department of Mathematics, 110 LCB, 155 S 1400 E RM 233, Salt Lake City, UT 84112-0090, USA. Tel: +1 801 581 5254. FAX: +1 801 581 4148. WWW URL: http://www.math.utah.edu/~beebe/. 24 August 2021, Version 1.227.
Abstract: This bibliography records publications of Alan Mathison Turing (1912–1954).
Title word cross-reference: 0(z) [Fef95]. $1 [Fis15, CAC14b]. 1 [PSS11, WWG12]. $139.99 [Ano20]. $16.95 [Sal12]. $16.96 [Kru05]. $17.95 [Hai16]. $19.99 [Jon17]. 2 [Fai10b]. $21.95 [Sal12]. $22.50 [LH83]. $24.00/$34 [Kru05]. $24.95 [Sal12, Ano04, Kru05]. $25.95 [KP02]. $26.95 [Kru05]. $29.95 [Ano20, CK12b]. 3 [Ano11c]. $54.00 [Kru05]. $69.95 [Kru05]. $75.00 [Jon17, Kru05]. $9.95 [CK02]. H [Wri16]. λ [Tur37a]. λ-K [Tur37c]. M [Wri16]. p [Tur37c]. × [Jon17]. -computably [Fai10b]. -conversion [Tur37c]. -D [WWG12]. -definability [Tur37a]. -function [Tur37c]. . [Nic17]. Życie [Hod02b]. 0-19-825079-7 [Hod06a]. 0-19-825080-0 [Hod06a]. 0-19-853741-7 [Rus89]. 1 [Ano12g]. 1-84046-250-7 [CK02]. 100 [Ano20, FB17, Gin19]. 10011-4211 [Kru05]. 10th [Ano51]. 11th [Ano51]. 12th [Ano51]. 1942 [Tur42b]. 1945 [TDCKW84]. 1947 [CV13b, Tur47, Tur95a]. 1949 [Ano49]. 1950s [Ell19]. 1951 [Ano51]. 1988 [Man90]. 1995 [Fef99]. 2 [DH10]. 2.0 [Wat12o]. 20 [CV13b]. 2001 [Don01a]. 2002 [Wel02]. 2003 [Kov03]. 2004 [Pip04]. 2005 [Bro05]. 2006 [Mai06, Mai07]. 2008 [Wil10]. 2011 [Str11]. 2012 [Gol12]. 20th [Kru05]. 25th [TDCKW84].

Alan Turing: Founder of Computer Science

Alan Turing: Founder of Computer Science. Jonathan P. Bowen, Division of Computer Science and Informatics, School of Engineering, London South Bank University, Borough Road, London SE1 0AA, UK; Museophile Limited, Oxford, UK. http://www.jpbowen.com
Abstract. In this paper, a biographical overview of Alan Turing, the 20th century mathematician, philosopher and early computer scientist, is presented. Turing has a rightful claim to the title of ‘Father of modern computing’. He laid the theoretical groundwork for a universal machine that models a computer in its most general form before World War II. During the war, Turing was instrumental in developing and influencing practical computing devices that have been said to have shortened the war by up to two years by decoding encrypted enemy messages that were generally believed to be unbreakable. After the war, he was involved with the design and programming of early computers. He also wrote foundational papers in the areas of what are now known as Artificial Intelligence (AI) and mathematical biology shortly before his untimely death. The paper also considers Turing’s subsequent influence, both scientifically and culturally.
1 Prologue. Alan Mathison Turing [8,23,33] was born in London, England, on 23 June 1912. Educated at Sherborne School in Dorset, southern England, and at King’s College, Cambridge, he graduated in 1934 with a degree in mathematics. Twenty years later, after a short but exceptional career, he died on 7 June 1954 in mysterious circumstances. He is lauded by mathematicians, philosophers, and computer scientists, as well as increasingly the public in general.

Jewel Theatre Audience Guide Addendum: Alan Turing Biography

Jewel Theatre Audience Guide Addendum: Alan Turing Biography directed by Kirsten Brandt by Susan Myer Silton, Dramaturg © 2019 ALAN TURING The outline of the following overview of Turing’s life is largely based on his biography on Alchetron.com (https://alchetron.com/Alan-Turing), a “social encyclopedia” developed by Alchetron Technologies. It has been embellished with additional information from sources such as Andrew Hodges’ books, Alan Turing: The Enigma (1983) and Turing (1997) as well as his website, https://www.turing.org.uk. The following books have also provided additional information: Prof: Alan Turing Decoded (2015) by Dermot Turing, who is Alan’s nephew by way of his only sibling, John; The Turing Guide by B. Jack Copeland, Jonathan Bowen, Mark Sprevak, and Robin Wilson (2017); and Alan M. Turing, written by his mother, Sara, shortly after he died. The latter was republished in 2012 as Alan M. Turing – Centenary Edition with an Afterword entitled “My Brother Alan” by John Turing. The essay was added when it was discovered among John’s writings following his death. The republication also includes a new Foreword by Martin Davis, an American mathematician known for his model of post-Turing machines. Extended biographies of Christopher Morcom, Dillwyn Knox, Joan Clarke (the character of Pat Green in the play) and Sara Turing, which are provided as Addendums to this Guide, provide additional information about Alan. Beginnings Alan Mathison Turing was an English computer scientist, mathematician, logician, cryptanalyst, philosopher and theoretical biologist. He was born in a nursing home in Maida Vale, a tony residential district of London, England on June 23, 1912.