Classification of Carnatic Thumbnails Using CNN-RNN Models

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


Nationalmerit-2020.Pdf

Rehabilitation Council of India ‐ National Board of Examination in Rehabilitation (NBER). National Merit List of Candidates in Alphabetical Order for Admission to Diploma Level Course for the Academic Session 2020‐21 (06‐Nov‐20)

| S.No | Name | Father Name | Application No. | Course Code | Institute Code | Institute Name | Category | % in Class 12th | Remark |
|---|---|---|---|---|---|---|---|---|---|
| 1 | A REENA PATRA | A BHIMASEN PATRA | 200928134554 | 0549 | AP034 | Priyadarsini Service Organization, | OBC | 56.16 | |
| 2 | AABHA MAYANK PANDEY | RAMESH KUMAR PANDEY | 200922534999 | 0547 | UP067 | Yuva Viklang Evam Dristibadhitarth Kalyan Sewa Sansthan, | General | 75.4 | |
| 3 | AABID KHAN | HAKAM DEEN | 200930321648 | 0547 | HR015 | MR DAV College of Education, | OBC | 74.6 | |
| 4 | AADIL KHAN | INTZAR KHAN | 200929292350 | 0527 | UP038 | CBSM, Rae Bareli Speech & Hearing Institute, | General | 57.8 | |
| 5 | AADITYA TRIPATHI | SOM PRAKASH TRIPATHI | 200921120721 | 0549 | UP130 | Suveera Institute for Rehabilitation and Disabilities | General | 71 | |
| 6 | AAINA BANO | SUMIN MOHAMMAD | 200926010618 | 0550 | RJ002 | L.K. C. Shri Jagdamba Andh Vidyalaya Samiti | OBC | 93 | ** |
| 7 | AAKANKSHA DEVI | LAKHAN LAL | 200927081668 | 0550 | UP044 | Rehabilitation Society of the Visually Impaired, | OBC | 75 | |
| 8 | AAKANKSHA MEENA | RANBEER SINGH | 200928250444 | 0547 | UP119 | Swaraj College of Education | ST | 74.6 | |
| 9 | AAKANKSHA SINGH | NARENDRA BAHADUR SING | 201020313742 | 0547 | UP159 | Prema Institute for Special Education, | General | 73.2 | |
| 10 | AAKANSHA GAUTAM | TARACHAND GAUTAM | 200925253674 | 0549 | RJ058 | Ganga Vision Teacher Training Institute | General | 93.2 | ** |
| 11 | AAKANSHA SHARMA | MAHENDRA KUMAR SHARM | 200919333672 | 0549 | CH002 | Government Rehabilitation Institute for Intellectual | General | 63.60% | |

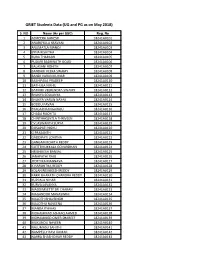

GRIET Students Data (UG and PG As on May 2018)

| S. No | Name (as per SSC) | Reg. No |
|---|---|---|
| 1 | AZMEERA GANESH | 18241A0101 |
| 2 | ANABOYULA SRAVANI | 18241A0102 |
| 3 | ANUMATLA MANOJ | 18241A0103 |
| 4 | BYNA RISHITHA | 18241A0104 |
| 5 | BURA THARASRI | 18241A0105 |
| 6 | PUDARI BADRINATH GOUD | 18241A0106 |
| 7 | BALASANI ROHITH | 18241A0107 |
| 8 | BANDARI VEERA SWAMY | 18241A0108 |
| 9 | BANDI VARUN KUMAR | 18241A0109 |
| 10 | BASHIPAKA PRADEEP | 18241A0110 |
| 11 | BATHULA NIKHIL | 18241A0111 |
| 12 | BATIKIRI VEERENDRA SWAMY | 18241A0112 |
| 13 | BHUKYA SOUJANYA | 18241A0113 |
| 14 | BHUKYA VARUN NAYAK | 18241A0114 |
| 15 | BODDU PAVAN | 18241A0115 |
| 16 | BYAGARI RANGARAJU | 18241A0116 |
| 17 | CHADA RUCHITA | 18241A0117 |
| 18 | CHINTHAKUNTLA THRIVEEN | 18241A0118 |
| 19 | CV JASWANTH SURYA | 18241A0119 |
| 20 | DOSAPATI NISHU | 18241A0120 |
| 21 | G PRASANTH | 18241A0121 |
| 22 | GADDIPATI LOHITHA | 18241A0122 |
| 23 | GANGAM ROHITH REDDY | 18241A0123 |
| 24 | GOTTEMUKKALA GOVARDHAN | 18241A0124 |
| 25 | HRISHIKESH BANSAL | 18241A0125 |
| 26 | JANAPATHI RAJU | 18241A0126 |
| 27 | JYOTHIKA MANNAVA | 18241A0127 |
| 28 | K HARSHITHA REDDY | 18241A0128 |
| 29 | KOLAN RESHIKESH REDDY | 18241A0129 |
| 30 | KARRI BHARATH CHANDRA REDDY | 18241A0130 |
| 31 | KUPPALA NIHAR | 18241A0131 |
| 32 | KURVA LAVANYA | 18241A0132 |
| 33 | MADDIMSETTY SRI CHARAN | 18241A0133 |
| 34 | MAGANOOR MANASWINI | 18241A0134 |
| 35 | MALOTH BHAVSINGH | 18241A0135 |
| 36 | MALOTHU NAVEENA | 18241A0136 |
| 37 | MANDA ITHIHAS | 18241A0137 |
| 38 | MOHAMMAD ASHFAQ AHMED | 18241A0138 |
| 39 | MOHAMMED OMER SHAREEF | 18241A0139 |
| 40 | MUKUNDU NAVEEN | 18241A0140 |
| 41 | NALUMASU SAHITHI | 18241A0141 |
| 42 | NAMPELLY RAVI KUMAR | 18241A0142 |
| 43 | NARRA SHASHIDHAR REDDY | 18241A0143 |
| 44 | PATLOLA VINAY REDDY | 18241A0144 |
| 45 | PATTAMBETTY PAVANKUMAR | 18241A0145 |
| 46 | POLA THARUN | 18241A0146 |
| 47 | POSANI S V A KALYAN | |

Towards Automatic Audio Segmentation of Indian Carnatic Music

Friedrich-Alexander-Universität Erlangen-Nürnberg

Master Thesis: Towards Automatic Audio Segmentation of Indian Carnatic Music

Submitted by Venkatesh Kulkarni, July 29, 2014

Supervisor / Advisor: Dr. Balaji Thoshkahna, Prof. Dr. Meinard Müller
Reviewers: Prof. Dr. Meinard Müller

International Audio Laboratories Erlangen, a joint institution of the Friedrich-Alexander-Universität Erlangen-Nürnberg (FAU) and the Fraunhofer Institute for Integrated Circuits IIS

Declaration

I hereby declare in lieu of an oath that I wrote this thesis independently and without using any aids other than those stated. Data and concepts taken from other sources, directly or indirectly, are marked with their source. This thesis has not previously been submitted, in Germany or abroad, in the same or a similar form in any procedure for obtaining an academic degree.

Erlangen, July 29, 2014
Venkatesh Kulkarni

Acknowledgements

I would like to express my gratitude to my supervisor, Dr. Balaji Thoshkahna, whose expertise, understanding and patience added considerably to my learning experience. I appreciate his vast knowledge and skill in many areas (e.g., signal processing, Carnatic music, ethics and interaction with participants). He provided me with direction and technical support, and became more of a friend than a supervisor. A very special thanks goes out to Prof. Dr. Meinard Müller, without whose motivation and encouragement I would not have considered a graduate career in music signal analysis research. Prof. Dr. Meinard Müller is the one professor and teacher who truly made a difference in my life. He was always there with valuable and inspiring ideas during my thesis, which motivated me to think like a researcher.

Famous Indian Classical Musicians and Vocalists Free Static GK E-Book

oliveboard FREE eBooks: FAMOUS INDIAN CLASSICAL MUSICIANS & VOCALISTS, for All Banking and Government Exams

The Current Affairs and General Awareness section is one of the most important and high-scoring sections of any competitive exam, such as SBI PO, SSC-CGL, IBPS Clerk and IBPS SO. We therefore regularly provide free Static GK and Current Affairs e-books for your preparation. Questions about famous Indian classical musicians and vocalists have been asked in this section, so it is important for all candidates to be aware of them. In bank and government exams every mark counts, and even one mark can be the difference between success and failure. To help you secure these marks, we have created this free e-book; the list of famous Indian classical musicians and vocalists is given in the following pages.

Sample Questions

Q. Ustad Allah Rakha played which of the following musical instruments?
(a) Sitar (b) Sarod (c) Surbahar (d) Tabla
Answer: Option D, Tabla

Q. L. Subramaniam is famous for playing _________.
(a) Saxophone (b) Violin (c) Mridangam (d) Flute
Answer: Option B, Violin

Famous Indian Classical Musicians and Vocalists (columns: Name, Instrument, Music Style)

Hindustani

Note Staff Symbol Carnatic Name Hindustani Name Chakra Sa C

The Indian Scale & Comparison with Western Staff Notation

In the Sa-Ri-Ga scale, the vowel 'a' is pronounced as the 'a' in 'father', and the vowel 'i' as the 'ee' in 'feet'. A note (swara) in the higher octave is indicated by a dot above it, and a note in the lower octave by a dot below it.

| Note | Staff Symbol | Carnatic Name | Hindustani Name | Chakra |
|---|---|---|---|---|
| Sa | C natural | Shadaj | Shadaj | MulAadhar (base of spine) |
| ri | D flat | Shuddha Rishabh | Komal ri | Swadhishthan (genitals) |
| Ri | D natural | Chatushruti Rishabh | Shudhh Ri | Swadhishthan (genitals) |
| ga | E flat | Sadharana Gandhara | Komal ga | Manipur (navel & solar plexus) |
| Ga | E natural | Antara Gandhara | Shudhh Ga | Manipur (navel & solar plexus) |
| Ma | F natural | Shudhh Madhyam | Shudhh Madhyam | Anahat (heart) |
| ma | F sharp | Prati Madhyam | Tivra Madhyam | Anahat (heart) |
| Pa | G natural | Panchama | Panchama | Vishudhh (throat) |
| dha | A flat | Shuddha Dhaivata | Komal Dhaivat | Ajna (third eye) |
| Dha | A natural | Chatushruti Dhaivata | Shudhh Dhaivat | Ajna (third eye) |
| ni | B flat | Kaisiki Nishada | Komal Nishad | Sahsaar (crown of head) |
| Ni | B natural | Kakali Nishada | Shudhh Nishad | Sahsaar (crown of head) |
| Ṡa | C natural | Shadaja | Shadaj | |

Property of www.SarodSitar.com. Copyright © 2010. Not to be copied or shared without permission.

Short description of a few popular raags (alphabetical). Note: the Sanskrit (Sanskrut) pronunciation is Raag, not Raga.

| Name of Raag (Karnataki Resemblance) | Details | Aroha / Avroha | Timing / Mood | Vadi, Samvadi (Main Swaras) |
|---|---|---|---|---|
| Ahir Bhairav (Chakravaka) | It is an old raag obtained by combining two raags, Ahiri and Bhairav. It belongs to the Bhairav Thaat. Its first part (poorvang) has the Bhairav ang and the second part has the Kafi or Harpriya ang. | Sa ri Ga Ma Pa Ga Ma Dha ni Ṡa / Ṡa ni Dha Pa Ma Ga ri Sa | Morning; serious, devotional | |
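The chart fixes Sa at C and places each swara a fixed number of semitones above it, so the Western names follow from simple modular arithmetic once Sa is moved to another pitch. A minimal Python sketch of that mapping is shown here. It assumes 12-tone equal temperament (the chart names no tuning system, and Indian practice does not strictly follow it) and spells altered notes with sharps, so the chart's D flat for ri appears as its enharmonic C#. The names `SWARA_OFFSETS`, `western_note` and `swara_frequency` are hypothetical.

```python
# Sketch only: assumes 12-tone equal temperament and sharp spellings;
# the chart itself fixes Sa = C but does not specify a tuning system.

# Semitones above Sa for each swara, read off the chart (Sa = C).
SWARA_OFFSETS = {
    "Sa": 0, "ri": 1, "Ri": 2, "ga": 3, "Ga": 4, "Ma": 5,
    "ma": 6, "Pa": 7, "dha": 8, "Dha": 9, "ni": 10, "Ni": 11,
}

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def western_note(swara: str, sa: str = "C") -> str:
    """Pitch class of `swara` when Sa is placed on the Western note `sa`."""
    start = NOTE_NAMES.index(sa)
    return NOTE_NAMES[(start + SWARA_OFFSETS[swara]) % 12]


def swara_frequency(swara: str, sa_hz: float, octave: int = 0) -> float:
    """Equal-tempered frequency in Hz; octave=+1 is a dot above, -1 a dot below."""
    semitones = SWARA_OFFSETS[swara] + 12 * octave
    return sa_hz * 2 ** (semitones / 12)


if __name__ == "__main__":
    print(western_note("ma"))          # F# (the chart's "F sharp")
    print(western_note("Pa", sa="D"))  # A (Sa moved to D)
    print(swara_frequency("Sa", 240.0, octave=1))  # 480.0, the upper Sa
```

Because octave dots only add or subtract 12 semitones, the upper Sa (Ṡa) is the same pitch class as Sa at twice the frequency, which matches the chart listing both as C natural.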

Fusion Without Confusion Raga Basics Indian

Indian Rhythm Basics

Solkattu, also known as konnakol, is the art of performing percussion syllables vocally. It comes from the Carnatic music tradition of South India and is mostly used in conjunction with instrumental music and dance instruction, although it has been widely adopted throughout the world as a modern composition and performance tool. Similarly, the music of North India has its own system of rhythm vocalization based on bols: syllables that correspond to specific sounds made on the drums of North India, most notably the tabla. As in the south, bols are used in musical training as well as in composition and performance. In addition, solkattu sounds are often referred to as bols, and the practice of reciting bols in the north is sometimes referred to as solkattu, so the distinction between the two practices is somewhat blurred.

The exercises and compositions we will discuss contain bols found in both North and South India; however, they come from the tradition of the North Indian tabla. Furthermore, the theoretical aspect of the compositions comes distinctly from the Hindustani (North Indian) tradition. Hence, for the purpose of this presentation, the term solkattu refers to the broader, more general practice of Indian rhythmic language.

South Indian Percussion: Mridangam, Dolak, Kanjira, Gattam
North Indian Percussion: Tabla, Baya (a.k.a. Tabla), Pakhawaj

Indian Rhythm Terms
Tal (also tala, taal, or taala): the Indian system of rhythm. Tal literally means "clap".

DREAMWORLD INDIA Product Description - 2014

About DREAMWORLD INDIA

DWI is India's fastest-growing multilayered marketing firm, with deep experience and expertise spanning over a decade. Its growth has been challenging and exciting, driven by DWI's multilayered marketing divisions: Direct to Consumer, Exhibition Sales, Magazine Subscription, Institutional Sales, Wise Buyers Club and Dream Niketana (Shop). Over the years DWI has expanded its distribution nationwide and will shortly go overseas. DWI's strong sales force has built a direct-selling network whose reach is beyond expectations. Our products are sourced from Living Media India Ltd, which includes brands like India Today, Music Today, Business Today, Good Housekeeping, Harper Collins, Leopard and Britannica, as well as branded household goods, electronics and several highly popular magazines of international repute. DWI keeps pace with the dynamic market environment, devising strategies around customers' needs to offer unique, branded and exclusive products at an incredible price without any compromise in quality. DWI aims to become a "One-Stop Shop": our goal is to bring every branded product you need to your doorstep, keeping in mind "Branded products at incredible prices", to help you save your hard-earned money. Our confidence in our work and products is at the highest level, which is why you receive the product or gifts first and then pay.

COMPLETE HISTORY OF THE WORLD

This is one of the great works of historical reference in the English language. If you were allowed only one history book in your whole life, The Complete History of the World would be hard to beat, because it conveys a sense not only of time but also of place.

A Novel EEG Based Study with Hindustani Classical Music

Can Musical Emotion Be Quantified With Neural Jitter Or Shimmer? A Novel EEG Based Study With Hindustani Classical Music

Sayan Nag, Sayan Biswas, Sourya Sengupta
Department of Electrical Engineering, Jadavpur University, Kolkata, India

Shankha Sanyal, Archi Banerjee, Ranjan Sengupta, Dipak Ghosh
Sir C.V. Raman Centre for Physics and Music, Jadavpur University, Kolkata, India

Abstract: The terms jitter and shimmer have long been used in speech and acoustic signal analysis as parameters for speaker identification and other prosodic features. In this study, we use the same parameters in the neural domain to identify and categorize emotional cues in different musical clips. For this, we chose two ragas of Hindustani music that are conventionally known to portray contrasting emotions, and conducted an EEG study on 5 participants who listened to 3-minute clips of these two ragas with sufficient resting periods in between. The neural jitter and shimmer components were evaluated for each experimental condition. The results reveal interesting information about domain-specific arousal of the human brain in response to musical stimuli, and about the trait characteristics of an individual.

In India, music (geet) has been a subject of aesthetic and intellectual discourse since the times of the Vedas (Samaveda). Rasa was examined critically as an essential part of the theory of art by Bharata in the Natya Sastra (200 century BC). Rasa is considered a state of enhanced emotional perception produced by the presence of musical energy. It is perceived as a sentiment, which could be described as an aesthetic experience. Although rasa is unique, one can distinguish several flavors of it according to the emotion that colors it [11]. Several emotional flavors are listed, namely erotic love (sringara), pathetic (karuna), devotional (bhakti), comic (hasya), horrific
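In speech analysis, local jitter is usually defined as the mean absolute difference between consecutive cycle periods divided by the mean period, and local shimmer as the same ratio computed over consecutive peak amplitudes; this is how Praat describes its "jitter (local)" and "shimmer (local)" measures. The abstract does not say how the neural variants were derived from EEG, so the Python sketch below is only an assumption: it implements the speech-domain formulas on sequences of already-extracted cycle periods or amplitudes, and the function names are hypothetical.

```python
from statistics import mean


def _local_perturbation(values: list[float]) -> float:
    """Mean absolute difference of consecutive values divided by their mean."""
    if len(values) < 2:
        raise ValueError("need at least two consecutive cycles")
    avg = mean(values)
    if avg == 0:
        raise ValueError("mean of the values must be non-zero")
    diffs = [abs(a - b) for a, b in zip(values, values[1:])]
    return mean(diffs) / avg


def local_jitter(periods: list[float]) -> float:
    """Cycle-to-cycle variation of period length (dimensionless; x100 for %)."""
    return _local_perturbation(periods)


def local_shimmer(amplitudes: list[float]) -> float:
    """Cycle-to-cycle variation of peak amplitude (dimensionless; x100 for %)."""
    return _local_perturbation(amplitudes)


if __name__ == "__main__":
    periods = [0.100, 0.102, 0.098, 0.100]  # seconds; made-up example values
    amps = [1.0, 0.9, 1.1, 1.0]              # arbitrary units; made-up values
    print(f"jitter  = {100 * local_jitter(periods):.2f} %")
    print(f"shimmer = {100 * local_shimmer(amps):.2f} %")
```

A perfectly periodic, constant-amplitude signal gives zero for both measures; larger values indicate more cycle-to-cycle irregularity. Normalizing by the mean makes the measures independent of the absolute period or amplitude scale.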

Quote of the Week

31st October – 6th November, 2014

"Character cannot be developed in ease and quiet. Only through experience of trial and suffering can the soul be strengthened, ambition inspired, and success achieved." (Helen Keller)

Through Chennai This Week (CTW), compiled and published every Friday, we provide information about what is happening in Chennai each week, covering the leading events: music, dance, exhibitions, seminars, dining out, discount sales and more. CTW is circulated within several corporate organizations, large and small. If you wish to share information with approximately 30,000 readers or advertise here, please call 98414 41116 or 98840 11933. Our mail id is [email protected]

Entertainment: Film Festivals in the City

Friday Movie Club @ Cholamandal presents the film BBC Modern Masters: Andy Warhol, the first in a four-part series exploring the lives and works of the 20th-century artists Matisse, Picasso, Dali and Warhol. In this episode, Sooke explores Andy Warhol, the king of Pop Art. On his journey he parties with Dennis Hopper, has a brush with Carla Bruni and comes to grips with Marilyn. Along the way he uncovers just how brilliantly Andy Warhol pinpointed and portrayed our obsessions with consumerism, celebrity and the media. The film will be screened on 31st October, 2014, from 7.00 pm to 8.30 pm at the Cholamandal Centre for Contemporary Art (CCCA), Cholamandal Artists' Village, Injambakkam, ECR, Chennai 600 115. Entry is free. For more information, contact 9500105961 / 24490092 / 24494053.

Entertainment: Music & Dance

Bharat Sangeet Utsav 2014

Bharat Sangeet Utsav, organised by Carnatica and Sri Parthasarathy Swami Sabha, is a well-themed concert series held in early November.

Evaluation of the Effects of Music Therapy Using Todi Raga of Hindustani Classical Music on Blood Pressure, Pulse Rate and Respiratory Rate of Healthy Elderly Men

Journal of Scientific Research, Volume 64, Issue 1, 2020. Institute of Science, Banaras Hindu University, Varanasi, India.

Evaluation of the Effects of Music Therapy Using Todi Raga of Hindustani Classical Music on Blood Pressure, Pulse Rate and Respiratory Rate of Healthy Elderly Men

Samarpita Chatterjee (Mukherjee)¹ and Roan Mukherjee²*

¹ Department of Hindustani Classical Music (Vocal), Sangit-Bhavana, Visva-Bharati (A Central University), Santiniketan, Birbhum-731235, West Bengal, India
² Department of Human Physiology, Hazaribag College of Dental Sciences and Hospital, Demotand, Hazaribag 825301, Jharkhand, India. [email protected]

Abstract

Several studies have indicated that music therapy may affect cardiovascular health; in particular, it may bring positive changes in blood pressure levels and heart rate, thereby improving the overall quality of life. Hence, music therapy may be regarded as a significant complementary and alternative medicine (CAM) for regulating blood pressure. The respiratory rate, if maintained within the normal range, may promote good cardiac health. The aim of the present study was to evaluate the changes in blood pressure, pulse rate and respiratory rate in healthy, disease-free males (aged 50-60 years) at the completion of 30 days of music

I. INTRODUCTION

Music may be regarded as the projection of ideas as well as emotions through significant sounds produced by an instrument, voices, or both, taking into consideration different elements of melody, rhythm, and harmony. Music plays an important role in everyone's life. Music has the power to make one experience harmony, emotional ecstasy, spiritual uplifting, positive behavioral changes, and absolute tranquility. Life's annoyances may increase in the absence of melody and harmony.

A History of Indian Music by the Same Author

A HISTORY OF INDIAN MUSIC

BY THE SAME AUTHOR

On Music:
1. Historical Development of Indian Music (awarded the Rabindra Prize in 1960).
2. Bharatiya Sangiter Itihasa (Sangita O Samskriti), Vols. I & II (awarded the Stisir Memorial Prize in 1958).
3. Raga O Rupa (Melody and Form), Vols. I & II.
4. Dhrupada-mala (with notations).
5. Sangite Rabindranath.
6. Sangita-sarasamgraha by Ghanashyama Narahari (edited).
7. Historical Study of Indian Music (in the press).

On Philosophy:
1. Philosophy of Progress and Perfection (A Comparative Study).
2. Philosophy of the World and the Absolute.
3. Abhedananda-darshana.
4. Tirtharenu.

Other Books:
1. Mana O Manusha.
2. Sri Durga (An Iconographical Study).
3. Christ the Saviour.

A HISTORY OF INDIAN MUSIC, by SWAMI PRAJNANANANDA
VOLUME ONE (Ancient Period)
RAMAKRISHNA VEDANTA MATH, CALCUTTA, INDIA

Published by Swami Adytaanda, Ramakrishna Vedanta Math, Calcutta-6. First published in May 1963. All rights reserved by Ramakrishna Vedanta Math, Calcutta. Printed by Benoy Ratan Sinha at Bharati Printing Works, 141, Vivekananda Road, Calcutta-6. Plates printed by Messrs. Bengal Autotype Co. Private Ltd., Cornwallis Street, Calcutta.

DEDICATED TO SWAMI VIVEKANANDA AND HIS SPIRITUAL BROTHER SWAMI ABHEDANANDA

PREFACE

Before attempting to write an elaborate history of Indian Music, I had a mind to write a concise one for the students.

Bangalore for the Visitor

PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information. PDF generated at: Mon, 12 Dec 2011 08:58:04 UTC

Contents

Articles
- The City: Bangalore; History of Bangalore; Karnataka; Karnataka Government
- Geography: Lakes in Bangalore; Hebbal lake; Sankey tank; Madiwala Lake
- Key Landmarks: Bangalore Cantonment; Bangalore Fort; Cubbon Park; Lal Bagh
- Transportation: Bangalore Metropolitan Transport Corporation; Bengaluru International Airport
- Culture
- Economy
- Notable people: List of people from Bangalore
- Bangalore Brands: Kingfisher Airlines

References
- Article Sources and Contributors
- Image Sources, Licenses and Contributors

Article Licenses
- License

The City

Bangalore

| Bengaluru (ಬೆಂಗಳೂರು) | Metropolitan city |
|---|---|
| Images | Clockwise from top: UB City, Infosys, Glass house at Lal Bagh, Vidhana Soudha, Shiva statue, Bagmane Tech Park |
| Map | Location of Bengaluru (ಬೆಂಗಳೂರು) in Karnataka and India |
| Coordinates | 12°58′00″N 77°34′00″E |
| Country | India |
| Region | Bayaluseeme |
| State | Karnataka |
| District(s) | Bangalore Urban [1] |
| Mayor | Sharadamma [2] |
| Commissioner | Shankarlinge Gowda [3] |
| Population (2011) | 8425970 (3rd) |
| Density | 11371 /km² (29451 /sq mi) [4] |
| Metro population (2011) | 8499399 (5th) |
| Time zone | IST (UTC+05:30) [5] |
| Area | 741.0 square kilometres (286.1 sq mi) |
| Elevation | 920 metres (3020 ft) [6] |
| Website | Bengaluru |
Bangalore (English pronunciation: /ˈbæŋɡəlɔər, bæŋɡəˈlɔər/), also called Bengaluru (Kannada: ಬೆಂಗಳೂರು, Bengaḷūru [ˈbeŋɡəɭuːru]), is the capital of the Indian state of Karnataka.