How to VisualSFM

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Automatic Generation of a 3D City Model

UNIVERSITY OF CASTILLA-LA MANCHA, ESCUELA SUPERIOR DE INFORMÁTICA. Computer Engineering Degree, Specific Technology: Information Technology and Systems. Degree Final Project: Automatic Generation of a 3D City Model. Author: David Murcia Pacheco. Director: Dr. Félix Jesús Villanueva Molina. June, 2017. David Murcia Pacheco, Ciudad Real, Spain. Phone No.: +34 625 922 076. © 2017 David Murcia Pacheco. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.3 or any later version published by the Free Software Foundation; with no Invariant Sections, no Front-Cover Texts, and no Back-Cover Texts. A copy of the license is included in the section entitled "GNU Free Documentation License". Abstract: This document collects all information related to the Degree Final Project (DFP) of the Computer Engineering Degree of the student David Murcia Pacheco, supervised by Dr. Félix Jesús Villanueva Molina. This work was developed during 2016 and 2017 at the Escuela Superior de Informática (ESI) in Ciudad Real, Spain. It is based on one of the topics proposed by the faculty of this university for this year, called "Generación automática del modelo en 3D de una ciudad" ("Automatic generation of a 3D model of a city").

Benchmarks on WWW Performance

The Scalability of X3D4 PointProperties: Benchmarks on WWW Performance. Yanshen Sun. Thesis submitted to the Faculty of the Virginia Polytechnic Institute and State University in partial fulfillment of the requirements for the degree of Master of Science in Computer Science and Application. Committee: Nicholas F. Polys (Chair), Doug A. Bowman, Peter Sforza. Aug 14, 2020, Blacksburg, Virginia. Keywords: Point Cloud, WebGL, X3DOM, x3d. Copyright 2020, Yanshen Sun. (ABSTRACT) With the development of remote sensing devices, it is becoming more and more convenient for individual researchers to acquire high-resolution point cloud data by themselves. Plenty of online tools exist for researchers to exhibit their work. However, the drawback of existing tools is that they are not flexible enough for users to create 3D scenes that mix point-based and triangle-based models. X3DOM is a WebGL-based library built on the Extensible 3D (X3D) standard, which enables users to create 3D scenes with only a little computer graphics knowledge. Before the X3D 4.0 Specification, little attention had been paid to point cloud rendering in X3DOM. PointProperties, an appearance node newly added in X3D 4.0, provides point size attenuation and texture-color mixing effects to point geometries. In this work, we propose an X3DOM implementation of PointProperties. This implementation fulfills not only the features specified in the X3D 4.0 documentation, but also other shading effects comparable to the effects of triangle-based geometries in X3DOM, as well as to other state-of-the-art point cloud visualization tools.

CloudCompare Point Cloud Processing Workshop Daniel Girardeau-Montaut

CloudCompare Point Cloud Processing Workshop. Daniel Girardeau-Montaut, www.cloudcompare.org, @CloudCompareGPL. PCP 2019, December 4-5, 2019, Stuttgart, Germany. Outline: about the project; quick overview of the software capabilities; point cloud processing with CloudCompare. The project: 2003, PhD for EDF R&D. EDF: main French power utility; more than 150 000 employees worldwide; 2 000 at R&D (< 2%); 200 know about CloudCompare (< 0.2%); sales > 75 B€; > 200 dams + 58 nuclear reactors (19 plants). EDF and laser scanning: EDF is the former owner of Mensi (now Trimble Laser Scanning). Main scanning activity: as-built documentation. Scanning a single nuclear reactor building: 2002, 3 days, 50 M. points; 2014, 1.5 days, 50 Bn points (+ high res. photos). Other scanning activities: building monitoring (dams, cooling towers, etc.), landslide monitoring, hydrology, historical preservation (EDF Foundation). PhD: change detection on 3D geometric data, with application to emergency mapping. Inspired by the 9/11 post-attacks recovery efforts (see "Mapping Ground Zero" by J. Kern, Optech, Nov. 2001). TLS was used for: visualization, optimal crane placement, measurements, monitoring the subsidence of the wreckage pile, slurry wall monitoring, etc. CloudCompare V1, 2004-2006. Aim: quickly detecting changes by comparing TLS point clouds with a CAD mesh or with another (high density) cloud. CloudCompare V2, 2007: "industrialization" of CloudCompare, for internal use only. Rationale: idle reactor = 6 M€ / day; acquired data

Seamless Texture Mapping of 3D Point Clouds

Seamless Texture Mapping of 3D Point Clouds. Dan Goldberg. Mentor: Carl Salvaggio. Chester F. Carlson Center for Imaging Science, Rochester Institute of Technology, Rochester, NY. November 25, 2014. Abstract: The two similar, quickly growing fields of computer vision and computer graphics give users the ability to immerse themselves in a realistic computer generated environment by combining the ability to create a 3D scene from images with the texture mapping process of computer graphics. The output of a popular computer vision algorithm, structure from motion (which obtains a 3D point cloud from images), is incomplete from a computer graphics standpoint. The final product should be a textured mesh. The goal of this project is to make the most aesthetically pleasing output scene. In order to achieve this, auxiliary information from the structure from motion process was used to texture map a meshed 3D structure. 1 Introduction: The overall goal of this project is to create a textured 3D computer model from images of an object or scene. This problem combines two different yet similar areas of study. Computer graphics and computer vision are two quickly growing fields that take advantage of the ever-expanding abilities of our computer hardware. Computer vision focuses on a computer capturing and understanding the world. Computer graphics concentrates on accurately representing and displaying scenes to a human user. In the computer vision field, constructing three-dimensional (3D) data sets from images is becoming more common. Microsoft's Photosynth (Snavely et al., 2006) is one application which brought attention to the 3D scene reconstruction field. Many structure from motion algorithms are being applied to data sets of images in order to obtain a 3D point cloud (Koenderink and van Doorn, 1991; Mohr et al., 1993; Snavely et al., 2006; Crandall et al., 2011; Weng et al., 2012; Yu and Gallup, 2014; Agisoft, 2014).

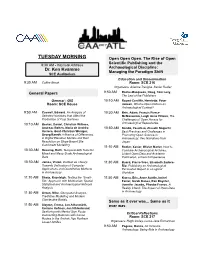

TUESDAY MORNING Open Open Open

TUESDAY MORNING
8:30 AM Keynote Address: Dr. Ken Kvamme (SCE Auditorium)
9:30 AM Coffee Break

Session: Open Open Open. The Rise of Open Scientific Publishing and the Archaeological Discipline: Managing the Paradigm Shift (Education and Dissemination). Room: SCE 216. Organizers: Arianna Traviglia, Xavier Rodier.
9:50 AM Rocks-Macqueen, Doug, Tom Levy, The Last of the Publishers
10:10 AM Roued-Cunliffe, Henriette, Peter Jensen, What is Open Data in an Archaeological Context?
10:30 AM Brin, Adam, Francis Pierce McManamon, Leigh Anne Ellison, The Challenges of Open Access for Archaeological Repositories
10:50 AM Kondo, Yasuhisa, Atsushi Noguchi, Best Practices and Challenges in Promoting Open Science in Archaeology: Two Narratives from Japan
11:10 AM Rodier, Xavier, Olivier Marlet, How to Combine Archaeological Archives, Linked Open Data and Academic Publication, a French Experience
11:30 AM Buard, Pierre-Yves, Elisabeth Zadora-Rio, Publishing an Archaeological Excavation Report in a Logicist Workflow
11:50 AM Kansa, Eric, Anne Austin, Ixchel Faniel, Sarah Kansa, Ran Boynter, Jennifer Jacobs, Phoebe France, A Reality Check: The Impact of Open Data in Archaeology

Session: General Papers, General GIS. Room: SCE House.
9:50 AM Caswell, Edward, An Analysis of Selected Variables that Affect the Production of Cost Surfaces
10:10 AM Becker, Daniel, Christian Willmes, Andreas Bolten, María de Andrés Herrero, Gerd-Christian Weniger, Georg Bareth, Influence of Differences in Digital Elevation Models and their Resolution on Slope-Based Site Catchment Modelling
10:30 AM Beusing, Ruth, Temporal-GIS Tools for Mixed and Meso-Scale Archaeological Data
10:50 AM James, Vivian, Context as Theory: Towards Unification of Computer Applications and Quantitative Methods in Archaeology
11:10 AM Sharp, Kayeleigh, Testing the 'Small Site' Approach with Multivariate Spatial Statistical and Archaeological Network Analysis
11:30 AM Groen, Mike, Geospatial Analysis, Predictive Modelling and Modern Clandestine Burials

Same as it ever was..

Simulated Imagery Rendering Workflow for UAS-Based

Remote Sensing. Article. Simulated Imagery Rendering Workflow for UAS-Based Photogrammetric 3D Reconstruction Accuracy Assessments. Richard K. Slocum * and Christopher E. Parrish. School of Civil and Construction Engineering, Oregon State University, 101 Kearney Hall, 1491 SW Campus Way, Corvallis, OR 97331, USA. * Corresponding author; Tel.: +1-703-973-1983. Academic Editors: Gonzalo Pajares Martinsanz, Xiaofeng Li and Prasad S. Thenkabail. Received: 13 March 2017; Accepted: 19 April 2017; Published: 22 April 2017. Abstract: Structure from motion (SfM) and MultiView Stereo (MVS) algorithms are increasingly being applied to imagery from unmanned aircraft systems (UAS) to generate point cloud data for various surveying and mapping applications. To date, the options for assessing the spatial accuracy of the SfM-MVS point clouds have primarily been limited to empirical accuracy assessments, which involve comparisons against reference data sets, which are both independent and of higher accuracy than the data they are being used to test. The acquisition of these reference data sets can be expensive, time consuming, and logistically challenging. Furthermore, these experiments are also almost always unable to be perfectly replicated and can contain numerous confounding variables, such as sun angle, cloud cover, wind, movement of objects in the scene, and camera thermal noise, to name a few. The combination of these factors leads to a situation in which robust, repeatable experiments are cost prohibitive, and the experiment results are frequently site-specific and condition-specific. Here, we present a workflow to render computer generated imagery using a virtual environment which can mimic the independent variables that would be experienced in a real-world UAS imagery acquisition scenario.

MEPP2: a Generic Platform for Processing 3D Meshes and Point Clouds

EUROGRAPHICS 2020 / F. Banterle and A. Wilkie. Short Paper. MEPP2: a generic platform for processing 3D meshes and point clouds. Vincent Vidal, Eric Lombardi, Martial Tola, Florent Dupont and Guillaume Lavoué. Université de Lyon, CNRS, LIRIS, Lyon, France. Abstract: In this paper, we present MEPP2, an open-source C++ software development kit (SDK) for processing and visualizing 3D surface meshes and point clouds. It provides both an application programming interface (API) for creating new processing filters and a graphical user interface (GUI) that facilitates the integration of new filters as plugins. Static and dynamic 3D meshes and point clouds with appearance-related attributes (color, texture information, normal) are supported. The platform's strength is its orientation toward generic programming. It offers an abstraction layer, based on C++ Concepts, that provides interoperability over several third party mesh and point cloud data structures, such as OpenMesh, CGAL, and PCL. Generic code can be run on all data structures implementing the required concepts, which allows for performance and memory footprint comparison. Our platform also makes it possible to create complex processing pipelines that gather idiosyncratic functionalities of the different libraries. We provide examples of such applications. MEPP2 runs on Windows, Linux and Mac OS X and is intended for engineers and researchers, but also for students, thanks to its simple use, facilitated by the proposed architecture and extensive documentation. CCS Concepts: • Computing methodologies → Mesh models; Point-based models; • Software and its engineering → Software libraries and repositories. 1. Introduction: With the increasing capability of 3D data acquisition devices, modeling software and graphics processing units, three-dimensional [...] GUI for processing and visualizing 3D surface meshes and point clouds. Several platforms exist for processing 3D meshes, such as MeshLab [CCC08] based on VCGlib, MEPP [LTD12], and libigl [JP∗18].

From Laser Scanner Point Clouds to 3D Modeling of the Valencian Silo-Yard in Burjassot

Hochschule Karlsruhe – Technik und Wirtschaft, University of Applied Sciences. Fakultät für Informationsmanagement und Medien. Master's Programme Geomatics. Master's Thesis of María Cristina Gómez Peribáñez: FROM LASER SCANNER POINT CLOUDS TO 3D MODELING OF THE VALENCIAN SILO-YARD IN BURJASSOT. First Referee: Prof. Dr.-Ing. H. Saler. Second Referee: Prof. Dr.-Ing. V. Tsioukas (AUTH). Third Referee: Prof. Dr.-Ing. F. Buchón (UPV). Karlsruhe, September 2017. Acknowledgement: I would like to express my sincere thanks to all those who have made this Master Thesis possible, especially to the program Baden-Württemberg Stipendium. I would like to thank my tutors Heinz Saler, Vassilis Tsioukas and Fernando Buchón, for the time they have given me, for their help and support in this project. To my parents, family and friends, I would also like to thank you for your daily support to complete this project. Especially to Irini for all her help. Thanks. María Cristina Gómez Peribáñez. Hochschule Karlsruhe – Technik und Wirtschaft; Aristotle University of Thessaloniki, Faculty of Engineering; University Polytechnic of Valencia. Table of contents: Acknowledgement; Table of contents; List of figures

Choosing a Photogrammetry Workflow for Cultural Heritage Groups

Heritage. Article. To 3D or Not 3D: Choosing a Photogrammetry Workflow for Cultural Heritage Groups. Hafizur Rahaman * and Erik Champion. School of Media, Creative Arts, and Social Inquiry, Curtin University, Perth, WA 6845, Australia. * Corresponding author; Tel.: +61-8-9266-3339. Received: 22 May 2019; Accepted: 28 June 2019; Published: 3 July 2019. Abstract: The 3D reconstruction of real-world heritage objects using either a laser scanner or 3D modelling software is typically expensive and requires a high level of expertise. Image-based 3D modelling software, on the other hand, offers a cheaper alternative, which can handle this task with relative ease. There also exists free and open source (FOSS) software, with the potential to deliver quality data for heritage documentation purposes. However, contemporary academic discourse seldom presents survey-based feature lists or a critical inspection of potential production pipelines, nor typically provides direction and guidance for non-experts who are interested in learning, developing and sharing 3D content on a restricted budget. To address the above issues, a set of FOSS were studied based on their offered features, workflow, 3D processing time and accuracy. Two datasets have been used to compare and evaluate the FOSS applications based on the point clouds they produced. The average deviation to ground truth data produced by a commercial software application (Metashape, formerly called PhotoScan) was used and measured with CloudCompare software. 3D reconstructions generated from FOSS produce promising results, with significant accuracy, and are easy to use. We believe this investigation will help non-expert users to understand the photogrammetry and select the most suitable software for producing image-based 3D models at low cost for visualisation and presentation purposes.

Stereoscopic Software Compatible with 3D Pluraview

Version: Nov. 2020. Stereoscopic Software compatible with 3D PluraView

| Stereo | Company | Application | Category |
|---|---|---|---|
| yes | The ImagingSource | ic3d | 3D Image Viewer / Stereo Camera Calibration & Visualization |
| FULL | Anchorlab | StereoBlend | 3D Image Viewer / Stereo Image Generation & Visualization |
| yes | Masuji Suto | StereoPhoto Maker | 3D Image Viewer / Stereo Image Generation & Visualization, Freeware |
| yes | Presagis | OpenFlight Creator | 3D Model Building Environment |
| yes | 3dtv | Stereoscopic Player | 3D Video Player / Stereo Images |
| yes | Beijing Blue Sight Tech. Co., Ltd. | ProvideService | 3D Video Player / Stereo Images |
| yes | Bino | Bino | 3D Video Player / Stereo Images |
| yes | sView | sView | 3D Video Player / Stereo Images |
| yes | Xeometric | ELITECAD Architecture | BIM / Architecture, Construction, CAD Engine |
| FULL | Dassault Systems | 3DVIA | BIM / Interior Modeling |
| yes | Xeometric | ELITECAD Styler | BIM / Interior Modeling |
| FULL | SierraSoft | Land | BIM / Land Survey Restitution and Analysis |
| FULL | SierraSoft | Roads | BIM / Road & Highway Design |
| yes | Fraunhofer IAO | Vrfx | BIM / VR for Revit |
| yes | Xeometric | ELITECAD ViewerPRO | BIM / VR Viewer, Free Option |
| yes | ENSCAPE | Enscape 2.8 | BIM / VR Visualization Plug-In for ArchiCAD, Revit, SketchUp, Rhino, Vectorworks |
| yes | Xeometric | ELITECAD Lumion | BIM / VR Visualization, Architecture Models |
| FULL | Autodesk | NavisWorks | CAx / 3D Model Review |
| FULL | Dassault Systems | eDrawings for Solidworks | CAx / 3D Model Review |
| yes | OPEN CASCADE | CAD Assistant | CAx / 3D Model Review |
| FULL | PTC | Creo View MCAD | CAx / 3D Model Review |
| yes | Gstarsoft Co., Ltd | HaoChen 3D | CAx / CAD, Architecture, HVAC, Electric & Power |

Monopoli10032057.Pdf

AUTOMATION OF A PHOTOGRAMMETRIC DIGITIZATION PROCESS FOR NON-DESTRUCTIVE MECHANICAL MEASUREMENT OF SMALL SAMPLES. Guillaume Pascal William Lauras. Undergraduate final project presented to the Mechanical Engineering program of the Escola Politécnica, Universidade Federal do Rio de Janeiro, in partial fulfillment of the requirements for the degree of Engineer. Advisor: Flávio de Marco Filho. Rio de Janeiro, August 2020. UNIVERSIDADE FEDERAL DO RIO DE JANEIRO, Department of Mechanical Engineering, DEM/POLI/UFRJ. Undergraduate project submitted to the faculty of the Mechanical Engineering program of the Escola Politécnica of the Universidade Federal do Rio de Janeiro in partial fulfillment of the requirements for the degree of Mechanical Engineer. Approved by: Prof. Flávio de Marco Filho, DSc; Prof. Geraldo Cernicchiaro, PhD; Prof. João Alves de Oliveira, PhD; Prof. Sylvio José Ribeiro de Oliveira, Dr.Ing.; Prof. Vitor Ferreira Romano, Dott.Ric. Rio de Janeiro, RJ, Brazil, August 2020. Catalog record: Lauras, Guillaume Pascal William. Automation of a Non-Destructive Mechanical Measurement Process by Photogrammetric Digitization of Small Samples / Guillaume Pascal William Lauras. Rio de Janeiro: UFRJ/Escola Politécnica, 2020. XIII, 73 p.: ill.; 29.7 cm. Advisor: Flávio de Marco Filho. Undergraduate project, UFRJ/Escola Politécnica/Mechanical Engineering program, 2020. Bibliographic references: p. 69-71. 1. Metrology. 2. Stereophotogrammetry. 3. Focus stacking. I. de Marco Filho, Flávio. II. Universidade Federal do Rio de Janeiro, Escola Politécnica, Mechanical Engineering program. III. Automation of a Non-Destructive Mechanical Measurement Process by Photogrammetric Digitization of Small Samples.

FACCE. The Many Faces of Human History. An Open Source Exhibition

Archeologia e Calcolatori, Supplemento 8, 2016, 271-279. FACCE. THE MANY FACES OF HUMAN HISTORY. AN OPEN SOURCE EXHIBITION. 1. Introduction to the exhibition. Faces are the link between us and the world: through them we recognize others, are recognized, and recognize ourselves. Faces very often say who we are, where we come from and how we are. Faces are like the pages of a book that tell stories. Today, thanks to the latest generation of forensic facial reconstructions and to morphometric techniques, it is possible to bring the faces of the past back to life with great precision and immediacy. Faces, both human and those of our ancestors, are the protagonists and, at the same time, the common thread of the exhibition FACCE. I molti volti della storia umana, open in Padua in the exhibition rooms of the Centro di Ateneo per i Musei at the Orto Botanico from 14 February to 14 June 2015 (Fig. 1). Faces also offer a starting point for addressing themes dear to Anthropology, highlighting how the frontiers of study in this discipline have changed greatly over time, often to the point of overturning today what was asserted in the past. They become an opportunity to present to the public, in a dedicated display, some of the most significant finds of the Museo di Antropologia of the Università degli Studi di Padova, pending the definitive installation of the museum within the Polo Museale of Palazzo Cavalli. 2. The itinerary. The itinerary unfolds across five thematic sections. The exhibits on display come mainly, but not exclusively, from the Museo di Antropologia. In some sections, in fact, particular finds of great value, requested on loan from other museums or institutions, complete and enrich the itinerary.