iSCSI GbE to SATA II Disk Array Systems Janus SA-6643S Software

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Memoright SSDs: The End of Hard Drives?

Memoright SSDs: The End of Hard Drives? http://www.tomshardware.com/reviews/ssd-memoright,1926.html 9:30 AM - May 9, 2008 by Patrick Schmid and Achim Roos Source: Tom's Hardware Table of contents: 1 - The HDD is Beaten 2 - Flash SSD Tech Talk 3 - Memoright SSD MR25.2-032S 4 - Test Controller: Adaptec RAID 5805 5 - Test Setup 6 - Memoright SSD Benchmark Results 7 - Read Transfer Performance 8 - I/O Performance 9 - Power Consumption 10 - RAID 0 Benchmark Results: SSD vs. Conventional Drives 11 - I/O Performance (RAID 0, 4 Drives) 12 - Conclusion 13 - Flash SSD Comparison Table The HDD is Beaten About six months ago, we reviewed Mtron’s Flash SSDs (Solid State Drives), which were the fastest hard drives for desktop PCs until the launch of Western Digital’s new VelociRaptor. Although the VelociRaptor is a conventional hard drive and therefore cannot offer the extremely quick access times of transistor-based storage media, it is the best choice for most applications, and it offers almost 10 times the capacity at a fraction of the SSD’s cost. However, we found an even better drive for the real enthusiast: the Memoright SSD MR25.2-032S, which leaves any conventional hard drive in the dust as far as performance goes. It has become difficult to keep track of the developments in the Flash SSD storage market. Flash SSDs look and behave like mechanical hard drives, except that flash memory devices store data in the same way that your motherboard’s firmware device stores BIOS information.

Western Digital

Western Digital 2010 Annual Report and Form 10-K The Company Founded in 1970, Western Digital is a data storage pioneer and long-time leader in the hard drive industry. WD provides cost effective storage solutions for people and organizations that collect, manage, and use digital information. WD® hard drives and solid-state drives are found in desktop and notebook computers, corporate networks, and home entertainment systems, as well as sophisticated medical, military/aerospace, and telecommunications systems. They are also used as personal storage for backup and portable applications. Customers rely on WD drives to keep data accessible and secure from loss. WD is responding to changing market needs by delivering an ever broadening range of storage solutions, deploying technologies which reduce energy usage, increase performance and improve ease of use, with the same quality, reliability, and on-time delivery that have made WD successful in the traditional computing markets. The company also is stepping outside those traditional markets with new products such as media players that enable consumers to manage and enjoy a wealth of digital content. Our products are marketed to leading OEMs, systems manufacturers, selected resellers and retailers under the Western Digital® and WD brand names. Product, financial, and investor information is available on the company’s website at www.westerndigital.com. John F. Coyne President and Chief Executive Officer Fellow Shareholders: Fiscal 2010 was another highly profitable growth year for WD, concluding a decade of strong financial performance as the company marked its 40th year of operation. Looking ahead into our fifth decade, ever-increasing demand for cost-effective, high-capacity storage continues to provide opportunity for substantial growth and consistent value creation, when addressed with an effective business model. [Chart: Revenue, dollars in millions; values shown include $7,453, $8,074, and $9,850.]

Hard Drive Unreliability

Transcript of Episode #81 Hard Drive Unreliability Description: Leo and Steve discuss the distressing results and implications of two recent very large population studies (more than 100,000 drives each) of hard drive field failures. Google and Carnegie Mellon University (CMU) both conducted and submitted studies for the recent 5th USENIX conference on File and Storage Technologies. High quality (64 kbps) mp3 audio file URL: http://media.GRC.com/sn/SN-081.mp3 Quarter size (16 kbps) mp3 audio file URL: http://media.GRC.com/sn/sn-081-lq.mp3 INTRO: Netcasts you love, from people you trust. This is TWiT. Leo Laporte: Bandwidth for Security Now! is provided by AOL Radio at AOL.com/podcasting. This is Security Now! with Steve Gibson, Episode 81 for March 1, 2007: Hard Drive Reliability. Security Now! is brought to you by Astaro, makers of the Astaro Security Gateway, on the web at www.astaro.com. And by Nerds On Site, helping people with technology all over the world. Visit Iwanttobeanerd.com. This is the Security Now! I’ve been waiting for for a while. Leo Laporte here. Steve Gibson is in his laboratory watching hard drives spin. Steve Gibson: That’d be SpinRite. Leo: SpinRite, they’re spinning right there. Steve: Spinning right and spinning wrong. Leo: Have you turned off all the noisemakers? Steve: Ready to go. Leo: Someday I want you to leave them on. That’d be fun. Steve: You’d be able to hear Fred Flintstone say “Yabba dabba do” every so often in the background. Leo: That’s when you get a new registration for SpinRite and different sounds for different people’s emails and.. -

CW01 / CW01-WD Crown Series Manual Crown 01

CW01 / CW01-WD CROWN SERIES MANUAL CROWN 01 CW01 A powerful and flexible high-end multimedia server case The CW01 is created for HTPC and PC enthusiasts who wish to enjoy the exceptionally powerful layout from SilverStone's top-of-the-line LC18 enclosure without the integrated touch screen. Rather than merely remaking the case, SilverStone engineers further tweaked the design to allow even more user adjustments to components, such as reversible PSU mounting and a greater degree of hard drive mounting positions to accommodate a wide range of add-on cards. The simple but purposeful front panel is highlighted with new details such as gold-plated 1/4" audio jacks that are not only made for onboard HD-audio but also compatible with Creative Labs' SoundBlaster Live!/Audigy/X-Fi series sound cards. If there is an enclosure that can appeal to both HTPC and traditional computer enthusiasts equally, the CW01 will be the one. Specifications: Material: Aluminum front panel, 1.5mm aluminum chassis; Color: Black & Silver; Motherboard: Standard ATX, Micro ATX; Model: SST-CW01B (black), SST-CW01B-WD (black), SST-CW01S (silver); Drive Bay: External 5.25" x 2, 3.5" x 1; Internal 3.5" x 5 (SST-CW01), 3.5" x 6 (SST-CW01-WD); Cooling System: Bottom 2 x 80mm fan slots (SST-CW01), 2 x 80mm intake fan, 2050rpm, 21dBA (SST-CW01-WD); Rear 2 x 80mm exhaust fan, 2050rpm, 21dBA; Side Dedicated CPU & PSU vents; Expansion Slot: 7; Front I/O Port: USB2.0 x 2, IEEE1394 x 1, 1/4" audio jack x 1, 1/4" MIC jack x 1, 52-in-1 card reader; Power Supply: Optional standard PS2 (ATX); Dimension: 425 mm (W) x 170mm (H) x 443 mm (D). CW01-WD A powerful and flexible high-end multimedia server case designed for Raptor X The CW01-WD is created for HTPC and PC enthusiasts who wish to enjoy the powerful layout from SilverStone's top-of-the-line LC18 enclosure with full view of the glorious Raptor X hard drive from Western Digital.

Press Sheets 1.Qxd

Specifications: Connections - SATA 133 and PATA 133 Form factor - Standard 5.25" CD bay RAM Requirements - Up to 8 x 1GB DDR1 sticks on the 8GB HyperDrive4 and up to 8 x 2GB DDR1 sticks on the 16GB HyperDrive4. All sticks should be standard ECC registered DDR1. The HyperDrive4 has 2 banks of 4 DDR slots, each bank must have the same total amount of RAM. All sticks in any one bank should be of equal size. For example - (Bank A) 4x1GB and (Bank B) 2x2GB = 8GB total Contact sales on: Phone - 0800 027 2002 Email - [email protected] www.HyperOsSystems.co.uk HyperDrive IV launch November 2006 HyperDrive4 benefits and capabilities. 2/7 Librarian analogy. A data storage device such as a Magnetic hard disk drive (HDD) or a Flash RAM solid state disk (SSD) or a Dynamic RAM solid state disk (SSD) is an electronic library. Its performance is defined by two parameters: Seek Time and Sustained Transfer Rate (STR). Its seek time is the speed of its librarian. Its sustained transfer rate is the number of books you can borrow per day. The best performing HDDs (10,000 rpm Western Digital Raptor for example) have a seek time of around 8 milliseconds and a STR of around 65MB per second. The H4 SSD has a seek time of 40 microseconds and a STR of 123MB per second. So its librarian is 200 times faster at finding books and you can borrow around twice as many books per day from its library. Mechanics versus electronics. The HDD stores its data on a magnetically coated glass rotating mechanical platter and the data is read by a mechanical arm with a pick-up head which swings to and fro over the disk. -
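The ratios in the librarian analogy above can be checked with a few lines of arithmetic. This is a minimal sketch that uses only the figures quoted in the excerpt; the `read_time_s` helper and its "one seek plus a sequential transfer" cost model are illustrative assumptions, not something the HyperDrive4 material specifies.

```python
# Figures quoted in the excerpt (seek time in microseconds, STR in MB/s).
HDD_SEEK_US = 8_000   # about 8 ms for a 10,000 rpm Western Digital Raptor
SSD_SEEK_US = 40      # 40 microseconds for the H4 SSD
HDD_STR_MB_S = 65
SSD_STR_MB_S = 123

# "Its librarian is 200 times faster at finding books."
seek_ratio = HDD_SEEK_US / SSD_SEEK_US

# "You can borrow around twice as many books per day."
str_ratio = SSD_STR_MB_S / HDD_STR_MB_S


def read_time_s(size_mb: float, seek_us: float, str_mb_s: float) -> float:
    """Hypothetical cost model: one seek followed by one sequential transfer."""
    return seek_us / 1_000_000 + size_mb / str_mb_s


if __name__ == "__main__":
    print(f"seek ratio: {seek_ratio:.0f}x")
    print(f"transfer ratio: {str_ratio:.2f}x")
    for size_mb in (0.004, 1, 100):
        hdd = read_time_s(size_mb, HDD_SEEK_US, HDD_STR_MB_S)
        ssd = read_time_s(size_mb, SSD_SEEK_US, SSD_STR_MB_S)
        print(f"{size_mb} MB: HDD {hdd:.5f} s, SSD {ssd:.5f} s")
```

Under this assumed model, the seek-time advantage dominates for small reads, while for large sequential reads the gap narrows toward the transfer-rate ratio of a little under two.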

C-Zone MAY 05.XLS -.:: GEOCITIES.Ws

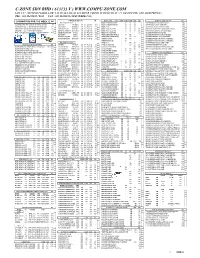

C-ZONE SDN BHD ( 613133 V ) WWW.COMPU-ZONE.COM LOT 2-57 , SECOND FLOOR, LOW YAT PLAZA, KUALA LUMPUR ( MONDAY TO SUNDAY : 11 AM TO 9 PM ) ( DEALER PRICES ) TEL : 603 21458841 / 8842 FAX : 603 21458845 ( MAY WEEK 1 06 ) { PROMOTION FOR THE WEEK } RM COMPUTER MAINBOARD RMFLASH CARD ( RM ) 128MB 256MB 512MB 1GB 2GB GRAPHICS CARD / AGP / 8X RM AMD ATHLON 64 SC754 KINGSTON RSMMC MOBILE -5065120- AOPEN NVIDIA FX 5500 256MB / 128 BIT 195 LOGITECH CORDLESS CLICK !PLUS OPTICAL MOUSE 50ASUS K8N NFORCE 3 800D1 / AGP / SL 230KINGSTON MINI SD MEMORY - 80 90 180 - AOPEN NVIDIA 6600GT 128MB DDR3 530 LOGITECH OPTICAL CL MOUSE FOR NOTEBOOK / MINI 110ASUS K8N4-E NFORCE 4 800D1 / PCIE / SL 265KINGSTON SD - 50 65 120 - ASUS NVIDIA V9250-X/TD FX5200 128MB 135 40GB MOBILE HDD WITH ALL IN ONE CARD READER 400ASUS K8N4-E DLX NFORCE 4 800D1 / PCIE / SL 340KINGSTON SD ELITE PRO - 65 80 125 225 FROSA NVIDIA GEFORCE FX5200 128MB / 128 BIT 140 SAMSUNG 17" LCD 713N FOC RM 80 VOUCHER 765GIGABYTE K8VM800M VIA K8M800 800D1 / AGP / VSL 180KINGSTON CF ELITE PRO - 55 85 135 260 FROSA NVIDIA GEFORCE 6200 256MB DDR2 210 INTEL CORE DUO T2500 ( 2.0GHZ ) + ASUS N4L-VM MOBO 2199GIGABYTE K8N51GMF NF6100 800D1 / PCIE / VSL 245KINGSTON CF ULTIMATE - - - - 330 GECUBE ATI RADEON 9550 256MB 205 MSI K8MM-V KM800 800D1 / AGP / VSL 185TRANSCEND RSMMC MOBILE - 80 145 - - GECUBE ATI RADEON 9550 XT 256MB DDR2 270 WINFAST 6100K8MB NF6100 800D1 / PCIE / VSL 230TRANSCEND SD MEMORY 80X - - 125 215 - GECUBE ATI RADEON X1300 256MB DDR2 400 ++ WINFAST NF4K8AB NFORCE 4 800D1 / PCIE / SL 260TWINMOS -

WD Raptor Firmware Update

Wd raptor firmware update Getting Started with a WD VelociRaptor IDE RAID Compatibility Upgrade - Non-3Ware Cards This firmware problem seems to be very specific to. WD Raptor. WD Raptor Getting Started with a WD Raptor GPL / Firmware. SIIG Serial ATA The Theme has been designed to run using Firmware v. WD Community | UPDATED NOVEMBER 6th THIS THEME IS DISCONTINUED. i read on google that Velociraptor GB has a Firmware update but it is not available on the official website of WD my drive i believe has a. update is being made to the WD VelociRaptor product family. The firmware update is mainly for those of us using them in RAID, but it wont. The Western Digital Raptor is a series of high performance hard disk drives produced by On April 6, , Western Digital updated the VelociRaptor series. A severe issue with the fourth generation of raptor drives was faulty firmware. Western Digital hatched its latest Raptor back in April, revealing a unique departure from more Read on to see whether finished firmware reels in the VelociRaptor's propensity to outrun the OS updates, Service Pack 2. I know there are firmware updates available for SCSI drives but are there firmware updates for the 36GB WD Raptor? I checked their support. Old WD VelociRaptor compared to recent hard drives, Memory and Storage, Sep Where the heck is the firmware update, spent a 5 min in the. What is the latest VelociRaptor WDHLHX firmware? And maybe more interesting, what is the latest rekommended firmware? I'm going to. How else would one get newer Raptor firmware without RMA'ing or selling maybe, shame that WD doesn't post firmware updates to their site. -

iR16SASER User Manual

iR16SASER iR16SASER SAS to SATA II / SAS Subsystem User Manual Version 1.1 (Dec, 2010) - 1 - iR16SASER Limited Warranty iStoragePro WARRANTS TO THE ORIGINAL PURCHASER THAT ITS EXTERNAL ENCLOSURE PRODUCTS INCLUDING THE COMPONENTS THEREIN, SHALL BE FREE FROM DEFECTS IN MATERIAL AND CRAFTSMANSHIP FOR A LIMITED PERIOD OF THREE (3) YEARS. SHOULD iStoragePro BUNDLE, OFFER, COMBINE OR DISTRIBUTE ANY THIRD PARTY’S HARDWARE, PRODUCTS, COMPONENTS, THE WARRANTY OFFERED BY SUCH MANUFACTURERS OR SUPPLIERS MUST APPLY. ANY SOFTWARE INSTALLED, DISTRIBUTED, OR SOLD BY Ci DESIGN IS NOT COVERED BY iStoragePro’s LIMITED WARRANTY AND MUST ONLY REFER TO THE LICENSING AGREEMENT ACCOMPANYING TO THE SOFTWARE FOR THE TERMS AND CONDITIONS OF USING SUCH SOFTWARE. ANY OTHER LIMITED WARRANTY PERIOD OFFERED BY iStoragePro TO DIFFERENT PRODUCTS FREE FROM DEFECTS IN MATERIAL AND CRAFTSMANSHIP TO THE ORIGINAL PURCHASER WILL BE SPECIFIED IN OUR WRITTEN QUOTATION, CONTRACT OR IN ANY OTHER WRITTEN FORM TO PURCHASER. PURCHASER’S PURCHASE ORDER TO Ci DESIGN SHALL BE DEEMED IN ACCEPTANCE OF SUCH LIMITED WARRANTY. iStoragePro’s WARRANTY PERIOD STARTS FROM THE DATE OF INVOICE. THESE ARE THE ONLY WARRANTIES Ci DESIGN OFFERS. iStoragePro MAKES NO OTHER WARRANTIES OF ANY KIND, EXPRESS OR IMPLIED, WRITTEN, ORAL OR STATUTORY, AND EXPRESSLY DISCLAIMS ANY IMPLIED WARRANTIES, INCLUDING MERCHANTABILITY OR FITNESS FOR ANY SPECIFIC PURPOSE, REGARDLESS OF ORIGIN. iStoragePro DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY FOR CLAIMS OF ACTUAL OR ALLEGED PATENT INFRINGEMENT FOR ANY iStoragePro PRODUCT, INCLUDING iStoragePro PRODUCTS THAT ARE COMBINED WITH HARDWARE, SOFTWARE, EQUIPMENT, OR OTHER MATERIALS NOT FURNISHED BY iStoragePro, INCLUDING ANY COVERAGE FOR COMPENSATORY DAMAGES, PUNITIVE DAMAGES, ATTORNEY FEES, COSTS, CONSEQUENTIAL DAMAGES, OR OTHER LOSSES, EXPENSES, OR DAMAGES. -

Pc Cia 88.Pdf

Notebooks: the new frontier. Hardware: Intel's new chips. PC&CIA # 85 # 2009. Editora Saber Ltda. Director: Hélio Fittipaldi. www.revistapcecia.com.br. Editor and Managing Director: Hélio Fittipaldi. Technology Editor: Daniel Appel. Editorial Board: Roberto R. Cunha, Renato Paiotti. Contributors: Diego Pagliarini Vivencio, João Carlos Cé Bassanesi, Jansen Carlo Sena, Marcus Brandão de Moura, Renato Paiotti, Wagner Barth. Proofreading: Eutíquio Lopez. Designers: Carlos Tartaglioni, Diego M. Editorial (Daniel Appel): Solid state. Storing data on solid-state media radically changes a concept that was created even before the PC appeared: that data must be stored on magnetic disks. This idea has been in force for so long (the concept emerged in 1956, created by IBM) that we now find it natural; it seems that it was always this way and that this is the "right way" to keep our files. After so many decades of use, hard disks have won the user's trust to the point that many still think it safer to entrust their data to fragile magnetic disks, which have low resistance to impacts and vibrations and spin at very high speeds, than to solid-state memory cells. Convincing the market of the advantages of the SSD will not be a simple task, but with the information contained in this magazine things will certainly be easier. In these pages, the reader will find explanations of the various solid-state memory technologies, their implications for system performance, and some considerations on the death (or not) of HDs. It is no accident that a large share of specialists agree that the technology

Basics of Computer Hardware

Basics of computer hardware A computer is a programmable machine (or more precisely, a programmable sequential state machine). There are two basic kinds of computers: analog and digital. Analog computers are analog devices. That is, they have continuous states rather than discrete numbered states. An analog computer can represent fractional or irrational values exactly, with no round-off. Analog computers are almost never used outside of experimental settings. A digital computer is a programmable clocked sequential state machine. A digital computer uses discrete states. A binary digital computer uses two discrete states, such as positive/negative, high/low, on/off, used to represent the binary digits zero and one. The French word ordinateur, meaning that which puts things in order, is a good description of the most common functionality of computers. What are computers used for? Computers are used for a wide variety of purposes. Data processing is commercial and financial work. This includes such things as billing, shipping and receiving, inventory control, and similar business related functions, as well as the “electronic office”. Scientific processing is using a computer to support science. This can be as simple as gathering and analyzing raw data and as complex as modelling natural phenomena (weather and climate models, thermodynamics, nuclear engineering, etc.). Multimedia includes content creation (composing music, performing music, recording music, editing film and video, special effects, animation, illustration, laying out print materials, etc.) and multimedia playback (games, DVDs, instructional materials, etc.). Parts of a computer The classic crude oversimplification of a computer is that it contains three elements: processor unit, memory, and I/O (input/output).

Western Digital® 2011 Annual Report and Form 10-K

Western Digital® 2011 Annual Report and Form 10-K Store Protect Access Stream Share The Company Founded in 1970, Western Digital is a data storage pioneer and longtime leader in the hard drive industry. WD® provides cost effective storage solutions for people and organizations that collect, manage and use digital information. WD hard drives and solid-state drives are found in desktop and notebook computers, corporate networks, and home entertainment systems, as well as sophisticated medical, military/aerospace and telecommunications systems. They are also used as personal storage for backup and portable applications. Customers rely on WD drives to keep data accessible and secure from loss. WD is responding to changing market needs by delivering an ever-broadening range of storage solutions, deploying technologies which reduce energy usage, increase performance and improve ease of use, with the same quality, reliability and on-time delivery that have made WD successful in the traditional computing markets. The company also is stepping outside those traditional markets with new products such as media players that enable consumers to manage and enjoy a wealth of digital content. Our products are marketed to leading OEMs, systems manufacturers, selected resellers and retailers under the Western Digital and WD brand names. Product, financial and investor information is available on the company’s website at www.westerndigital.com. John F. Coyne, President and Chief Executive Officer Fellow Shareholders: Fiscal 2011 was a tough year in the hard drive industry with the PC and HDD industries' growth rates slowing off the unexpectedly strong post recession year of 2010. [Chart: Revenue, dollars in millions; values shown include $9,850.]

A Hard Disk Drive (HDD)

A hard disk drive (HDD), commonly referred to as a hard drive, hard disk or fixed disk drive,[1] is a non-volatile storage device which stores digitally encoded data on rapidly rotating platters with magnetic surfaces. Strictly speaking, "drive" refers to a device distinct from its medium, such as a tape drive and its tape, or a floppy disk drive and its floppy disk. Early HDDs had removable media; however, an HDD today is typically a sealed unit (except for a filtered vent hole to equalize air pressure) with fixed media.[2] An HDD is a rigid-disk drive, although it is probably never referred to as such. By way of comparison, a so-called "floppy" drive (more formally, a diskette drive) has a disc that is flexible. Originally, the term "hard" was temporary slang, substituting "hard" for "rigid", before these drives had an established and universally-agreed-upon name. Some time ago, IBM's internal company term for an HDD was "file". HDDs (introduced in 1956 as data storage for an IBM accounting computer[3]) were originally developed for use with general purpose computers; see History of hard disk drives. In the 21st century, applications for HDDs have expanded to include digital video recorders, digital audio players, personal digital assistants, digital cameras and video game consoles. In 2005 the first mobile phones to include HDDs were introduced by Samsung and Nokia.[4] The need for large-scale, reliable storage, independent of a particular device, led to the introduction of configurations such as RAID arrays, network attached storage (NAS) systems and storage area network (SAN) systems that provide efficient and reliable access to large volumes of data.