Statistical Analysis of High-Throughput Sequencing Count Data

Recommended publications

A Method to Infer Changed Activity of Metabolic Function from Transcript Profiles

ModeScore: A Method to Infer Changed Activity of Metabolic Function from Transcript Profiles. Andreas Hoppe and Hermann-Georg Holzhütter, Charité University Medicine Berlin, Institute for Biochemistry, Computational Systems Biochemistry Group. Abstract: Genome-wide transcript profiles are often the only available quantitative data for a particular perturbation of a cellular system, and their interpretation with respect to metabolism is a major challenge in systems biology, especially beyond the on/off distinction of genes. We present a method that predicts activity changes of metabolic functions by scoring reference flux distributions based on relative transcript profiles, providing a ranked list of the most regulated functions. Then, for each metabolic function, the involved genes are ranked by how well they represent a specific regulation pattern. Compared with the naïve pathway-based approach, the reference modes can be chosen freely, and they represent full metabolic functions and thus directly provide testable hypotheses for the metabolic study. In conclusion, the novel method provides promising functions for subsequent experimental elucidation together with outstanding associated genes, solely based on transcript profiles. 1998 ACM Subject Classification: J.3 Life and Medical Sciences. Keywords and phrases: Metabolic network, expression profile, metabolic function. Digital Object Identifier: 10.4230/OASIcs.GCB.2012.1. 1 Background: The comprehensive study of the cell’s metabolism would include measuring metabolite concentrations, reaction fluxes, and enzyme activities on a large scale. Measuring fluxes is the most difficult part of this; for a recent assessment of techniques, see [31]. Although mass spectrometry allows metabolite concentrations to be assessed in a more comprehensive way, the larger the set of potential metabolites, the more difficult this becomes [8].

The Kyoto Encyclopedia of Genes and Genomes (KEGG)

Kyoto Encyclopedia of Genes and Genomes. Minoru Kanehisa, Institute for Chemical Research, Kyoto University. HFSPO Workshop, Strasbourg, November 18, 2016. The KEGG Databases, listed by category as database (content). Systems information: PATHWAY (KEGG pathway maps), BRITE (BRITE functional hierarchies), MODULE (KEGG modules). Genomic information: KO (KEGG ORTHOLOGY; KO groups for functional orthologs), GENOME (KEGG organisms, viruses and addendum), GENES / SSDB (genes and proteins / sequence similarity). Chemical information: COMPOUND (chemical compounds), GLYCAN (glycans), REACTION / RCLASS (reactions / reaction classes), ENZYME (enzyme nomenclature). Health information (KEGG MEDICUS): DISEASE (human diseases), DRUG / DGROUP (drugs / drug groups), ENVIRON (health-related substances), JAPIC (Japanese drug labels), DailyMed (FDA drug labels). Of these, 12 are manually curated original DBs, 3 are DBs taken from outside sources and given original annotations (GENOME, GENES, ENZYME), 1 is a computationally generated DB (SSDB), and 2 are outside DBs (JAPIC, DailyMed). KEGG is widely used for functional interpretation and practical application of genome sequences and other high-throughput data. [Figure: databases KO, PATHWAY, GENOME, BRITE, DISEASE, GENES, MODULE, DRUG, COMPOUND, GLYCAN, REACTION; labels: Genome, Metagenome, Transcriptome etc.; Metabolome, Glycome etc.; Molecular functions; High-level functions; Practical applications.] Funding, listed by period as funding source (annual budget in USD). 1995-2010: supported by 10+ grants from Ministry of Education, Japan Society for Promotion of Science (JSPS) and Japan Science and Technology Agency (JST) (>2 M). 2011-2013: supported by National Bioscience Database Center (NBDC) of JST (0.8 M). 2014-2016: supported by NBDC (0.5 M). 2017-: ? Timeline: 1995 KEGG website made freely available; 1997 KEGG FTP site made freely available; 2011 Plea to support KEGG, KEGG FTP academic subscription introduced; 1998 First commercial licensing. Contingency Plan. 1999 Pathway Solutions Inc.

Abstracts Genome 10K & Genome Science 29 Aug - 1 Sept 2017 Norwich Research Park, Norwich, UK

Abstracts, Genome 10K & Genome Science, 29 Aug - 1 Sept 2017, Norwich Research Park, Norwich, UK. Keynote speakers: Dr Adam Phillippy, Towards the gapless assembly of complete vertebrate genomes; Prof Kathy Belov, Saving the Tasmanian devil from extinction; Prof Peter Holland, Homeobox genes and animal evolution: from duplication to divergence; Dr Hilary Burton, Genomics in healthcare: the challenges of complexity. Invited speakers. Vertebrate Genomics: Alex Cagan, Comparative genomics of animal domestication. Plant Genomics: Ksenia Krasileva, Evolution of plant immune receptors; Andrea Harper, Using Associative Transcriptomics to predict tolerance to ash dieback disease in European ash trees

Ontology-Based Methods for Analyzing Life Science Data

Habilitation à Diriger des Recherches presented by Olivier Dameron: Ontology-based methods for analyzing life science data. Publicly defended on 11 January 2016 before a jury composed of: Anita Burgun (Professor, Université René Descartes Paris, Examiner); Marie-Dominique Devignes (CNRS Research Scientist, LORIA Nancy, Examiner); Michel Dumontier (Associate Professor, Stanford University, USA, Reviewer); Christine Froidevaux (Professor, Université Paris Sud, Reviewer); Fabien Gandon (Research Director, Inria Sophia-Antipolis, Reviewer); Anne Siegel (CNRS Research Director, IRISA Rennes, Examiner); Alexandre Termier (Professor, Université de Rennes 1, Examiner). Contents. 1 Introduction: 1.1 Context; 1.2 Challenges; 1.3 Summary of the contributions; 1.4 Organization of the manuscript. 2 Reasoning based on hierarchies: 2.1 Principle (2.1.1 RDF for describing data; 2.1.2 RDFS for describing types; 2.1.3 RDFS entailments; 2.1.4 Typical uses of RDFS entailments in life science; 2.1.5 Synthesis); 2.2 Case study: integrating diseases and pathways (2.2.1 Context; 2.2.2 Objective; 2.2.3 Linking pathways and diseases using GO, KO and SNOMED-CT; 2.2.4 Querying associated diseases and pathways); 2.3 Methodology: Web services composition (2.3.1 Context; 2.3.2 Objective; 2.3.3 Semantic compatibility of services parameters; 2.3.4 Algorithm for pairing services parameters); 2.4 Application: ontology-based query expansion with GO2PUB (2.4.1 Context; 2.4.2 Objective)

Computational Methods Addressing Genetic Variation In

Computational Methods Addressing Genetic Variation in Next-Generation Sequencing Data, by Charlotte A. Darby. A dissertation submitted to Johns Hopkins University in conformity with the requirements for the degree of Doctor of Philosophy. Baltimore, Maryland, June 2020. © 2020 Charlotte A. Darby. All rights reserved. Abstract: Computational genomics involves the development and application of computational methods for whole-genome-scale datasets to gain biological insight into the composition and function of genomes, including how genetic variation mediates molecular phenotypes and disease. New biotechnologies such as next-generation sequencing generate genomic data on a massive scale and have transformed the field thanks to simultaneous advances in the analysis toolkit. In this thesis, I present three computational methods that use next-generation sequencing data, each of which addresses the genetic variations within and between human individuals in a different way. First, Samovar is a software tool for performing single-sample mosaic single-nucleotide variant calling on whole genome sequencing linked read data. Using haplotype assembly of heterozygous germline variants, uniquely made possible by linked reads, Samovar identifies variations in different cells that make up a bulk sequencing sample. We apply it to 13 cancer samples in collaboration with researchers at Nationwide Children's Hospital. Second, scHLAcount is a software pipeline that computes allele-specific molecule counts for the HLA genes from single-cell gene expression data. We use a personalized reference genome based on the individual’s genotypes to reveal allele-specific and cell type-specific gene expression patterns. Even given technology-specific biases of single-cell gene expression data, we can resolve allele-specific expression for these genes since the alleles are often quite different between the two haplotypes of an individual.

GenBank by Walter B. Goad

GenBank, by Walter B. Goad. To understand the significance of GenBank, you need to know a little about molecular genetics. What that field deals with is self-replication (the process unique to life) and mutation and recombination (the processes responsible for evolution) at the fundamental level of the genes in DNA. This approach of working from the blueprint, so to speak, of a living system is very powerful, and studies of many other aspects of life (the process of learning, for example) are now utilizing molecular genetics. Molecular genetics began in the early ’40s and was at first controversial because many of the people involved had been trained in the physical sciences rather than the biological sciences, and yet they were answering questions that biologists had been asking for years. ... the information stored in memory (DNA) to the stuff of activity (proteins). The idea that somehow the bases in DNA determine the amino acids in proteins had been around for some time. In fact, George Gamow suggested in 1954, after learning about the structure proposed for DNA, that a triplet of bases corresponded to an amino acid. That suggestion was shown to be true, and by 1965 most of the genetic code had been deciphered. Also worked out in the ’60s were many details of what Crick called the central dogma of molecular genetics: the now firmly established fact that DNA is not translated directly to proteins but is first transcribed to messenger RNA. This molecule, a nucleic acid like DNA, then serves as the template for protein synthesis. These great advances prompted a very distinguished molecular geneticist to predict, in 1969, that biology was just about to end since

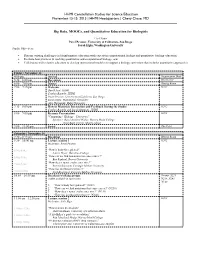

Big Data, MOOCs, and ... (PDF)

HHMI Constellation Studios for Science Education, November 13-15, 2015, HHMI Headquarters, Chevy Chase, MD. Big Data, MOOCs, and Quantitative Education for Biologists. Co-Chairs: Pavel Pevzner, University of California-San Diego; Sarah Elgin, Washington University. Studio Objectives: discuss existing challenges in bioinformatics education with experts in computational biology and quantitative biology education; evaluate best practices in teaching quantitative and computational biology; and collaborate with scientist educators to develop instructional modules to support a biology curriculum that includes quantitative approaches. Friday, November 13: 4:00 pm Arrival (Registration Desk); 5:30-6:00 pm Reception (Great Hall); 6:00-7:00 pm Dinner (Dining Room); 7:00-7:15 pm Welcome (K202), with David Asai, HHMI; Cynthia Bauerle, HHMI; Pavel Pevzner, University of California-San Diego; Sarah Elgin, Washington University; Alex Hartemink, Duke University; 7:15-8:00 pm How to Maximize Interaction and Feedback During the Studio (K202), Cynthia Bauerle and Sarah Simmons, HHMI; 8:00-9:00 pm Keynote Presentation (K202), "Computing + Biology = Discovery", speakers Ran Libeskind-Hadas and Eliot Bush, Harvey Mudd College; 9:00-11:00 pm Social (The Pilot). Saturday, November 14: 7:30-8:15 am Breakfast (Dining Room); 8:30-10:00 am Lecture session 1 (K202), moderator Pavel Pevzner: 8:34-8:54 am “How is body fat regulated?”, Laurie Heyer, Davidson College; 8:56-9:16 am “How can we find mutations that cause cancer?”, Ben Raphael, Brown University; 9:18-9:38 am “How does a tumor evolve over time?”, Russell Schwartz, Carnegie Mellon University; 9:40-10:00 am “How fast do ribosomes move?”, Carl Kingsford, Carnegie Mellon University; 10:05-10:55 am Breakout working groups (coffee available in each room), rooms S221, N238, N241, N140.

Exploiting High Throughput DNA Sequencing Data for Genomic Analysis

Exploiting high throughput DNA sequencing data for genomic analysis. Markus Hsi-Yang Fritz, Darwin College. A dissertation submitted to the University of Cambridge for the degree of Doctor of Philosophy, 14th October 2011. Markus Hsi-Yang Fritz, EMBL-European Bioinformatics Institute, Wellcome Trust Genome Campus, Hinxton, Cambridge, CB10 1SD, United Kingdom. This dissertation is the result of my own work and includes nothing which is the outcome of work done in collaboration except where specifically indicated in the text. No part of this work has been submitted or is currently being submitted for any other qualification. This document does not exceed the word limit of 60,000 words (excluding bibliography, figures, appendices etc.) as defined by the Biology Degree Committee. To an exceptional scientist: my Dad. Summary: The last few years have witnessed a drastic increase in genomic data. This has been facilitated by the shift away from the Sanger sequencing technique to an array of high-throughput methods, so-called next-generation sequencing technologies. This enormous growth of available DNA data has been a tremendous boon to large-scale genomics studies and has rapidly advanced fields such as environmental genomics, ancient DNA research, population genomics and disease association. On the other hand, however, researchers and sequence archives are now facing an enormous data deluge. Critically, the rate of sequencing data accumulation is now outstripping advances in hard drive capacity, network bandwidth and processing power.

Research Report 2006 Max Planck Institute for Molecular Genetics, Berlin Imprint | Research Report 2006

Max Planck Institute for Molecular Genetics, Research Report 2006. Imprint: Published by the Max Planck Institute for Molecular Genetics (MPIMG), Berlin, Germany, August 2006. Editorial Board: Bernhard Herrmann, Hans Lehrach, H.-Hilger Ropers, Martin Vingron. Coordination: Claudia Falter, Ingrid Stark. Design & Production: UNICOM Werbeagentur GmbH, Berlin. Number of copies: 1,500. Photos: Katrin Ullrich, MPIMG; David Ausserhofer. Contact: Max Planck Institute for Molecular Genetics, Ihnestr. 63-73, 14195 Berlin, Germany. Phone: +49 (0)30-8413 - 0. Fax: +49 (0)30-8413 - 1207. For further information about the MPIMG please see our website: www.molgen.mpg.de. Table of Contents. The Max Planck Institute for Molecular Genetics: Organisational Structure; MPIMG: Mission, Development of the Institute, Research Concept. Department of Developmental Genetics (Bernhard Herrmann): Transmission ratio distortion (Hermann Bauer); Signal Transduction in Embryogenesis and Tumor Progression (Markus Morkel); Development of Endodermal Organs (Heiner Schrewe); Gene Expression and 3D-Reconstruction (Ralf Spörle); Somitogenesis (Lars Wittler). Department of Vertebrate Genomics (Hans Lehrach): Molecular Embryology and Aging (James Adjaye); Protein Expression and Protein Structure (Konrad Büssow); Mass Spectrometry (Johan Gobom); Bioinformatics (Ralf Herwig); Comparative and Functional Genomics (Heinz Himmelbauer); Genetic Variation (Margret Hoehe); Cell Arrays/Oligofingerprinting (Michal Janitz); Kinetic Modeling (Edda Klipp); In Vitro Ligand Screening (Zoltán Konthur); Neurodegenerative Disorders (Sylvia Krobitsch); Protein Complexes & Cell Organelle Assembly/USN (Bodo Lange/Thorsten Mielke); Automation & Technology Development (Hans Lehrach).

Integrative Annotation of 21,037 Human Genes Validated by Full-Length cDNA Clones

Title: Integrative Annotation of 21,037 Human Genes Validated by Full-Length cDNA Clones. Author(s): Imanishi, Tadashi; Itoh, Takeshi; Suzuki, Yutaka; O'Donovan, Claire; Fukuchi, Satoshi; Koyanagi, Kanako O.; Barrero, Roberto A.; Tamura, Takuro; Yamaguchi-Kabata, Yumi; Tanino, Motohiko; Yura, Kei; Miyazaki, Satoru; Ikeo, Kazuho; Homma, Keiichi; Kasprzyk, Arek; Nishikawa, Tetsuo; Hirakawa, Mika; Thierry-Mieg, Jean; Thierry-Mieg, Danielle; Ashurst, Jennifer; Jia, Libin; Nakao, Mitsuteru; Thomas, Michael A.; Mulder, Nicola; Karavidopoulou, Youla; Jin, Lihua; Kim, Sangsoo; Yasuda, Tomohiro; Lenhard, Boris; Eveno, Eric; Suzuki, Yoshiyuki; Yamasaki, Chisato; Takeda, Jun-ichi; Gough, Craig; Hilton, Phillip; Fujii, Yasuyuki; Sakai, Hiroaki; Tanaka, Susumu; Amid, Clara; Bellgard, Matthew; Bonaldo, Maria de Fatima; Bono, Hidemasa; Bromberg, Susan K.; Brookes, Anthony J.; Bruford, Elspeth; Carninci, Piero; Chelala, Claude; Couillault, Christine; Souza, Sandro J. de; Debily, Marie-Anne; Devignes, Marie-Dominique; Dubchak, Inna; Endo, Toshinori; Estreicher, Anne; Eyras, Eduardo; Fukami-Kobayashi, Kaoru; R. Gopinath, Gopal; Graudens, Esther; Hahn, Yoonsoo; Han, Michael; Han, Ze-Guang; Hanada, Kousuke; Hanaoka, Hideki; Harada, Erimi; Hashimoto, Katsuyuki; Hinz, Ursula; Hirai, Momoki; Hishiki, Teruyoshi; Hopkinson, Ian; Imbeaud, Sandrine; Inoko, Hidetoshi; Kanapin, Alexander; Kaneko, Yayoi; Kasukawa, Takeya; Kelso, Janet; Kersey, Paul; Kikuno, Reiko; Kimura, Kouichi; Korn, Bernhard; Kuryshev, Vladimir; Makalowska, Izabela; Makino, Takashi; Mano, Shuhei;

Strategic Plan 2011-2016

Strategic Plan 2011-2016, Wellcome Trust Sanger Institute. Mission: The Wellcome Trust Sanger Institute uses genome sequences to advance understanding of the biology of humans and pathogens in order to improve human health. Contents: Foreword; Overview; 1. History and philosophy; 2. Organisation of the science; 3. Developments in the scientific portfolio; 4. Summary of the Scientific Programmes 2011-2016: 4.1 Cancer Genetics and Genomics; 4.2 Human Genetics; 4.3 Pathogen Variation; 4.4 Malaria