Scope of Various Random Number Generators in Ant System Approach for TSP

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Power Values of Divisor Sums

Power Values of Divisor Sums. Author(s): Frits Beukers, Florian Luca, Frans Oort. Source: The American Mathematical Monthly, Vol. 119, No. 5 (May 2012), pp. 373-380. Published by: Mathematical Association of America. Stable URL: http://www.jstor.org/stable/10.4169/amer.math.monthly.119.05.373. Abstract. We consider positive integers whose sum of divisors is a perfect power. This problem had already caught the interest of mathematicians from the 17th century like Fermat, Wallis, and Frenicle. In this article we study this problem and some variations. We also give an example of a cube, larger than one, whose sum of divisors is again a cube. 1. INTRODUCTION. Recently, one of the current authors gave a mathematics course for an audience with a general background and age over 50. -

A Note on Random Number Generation

A note on random number generation. Christophe Dutang and Diethelm Wuertz. September 2009. "Nothing in Nature is random... a thing appears random only through the incompleteness of our knowledge." Spinoza, Ethics I. 1 Introduction. Random simulation has long been a very popular and well studied field of mathematics. There exists a wide range of applications in biology, finance, insurance, physics and many others. So simulations of random numbers are crucial. In this note, we describe the most random number algorithms. Let us recall the only things, that are truly random, are the measurement of physical phenomena such as thermal noises of semiconductor chips or radioactive sources. The only way to simulate some randomness on computers are carried out by deterministic … number generation. By "random numbers", we mean random variates of the uniform U(0, 1) distribution. More complex distributions can be generated with uniform variates and rejection or inversion methods. Pseudo random number generation aims to seem random whereas quasi random number generation aims to be deterministic but well equidistributed. Those familiars with algorithms such as linear congruential generation, Mersenne-Twister type algorithms, and low discrepancy sequences should go directly to the next section. 2.1 Pseudo random generation. At the beginning of the nineties, there was no state-of-the-art algorithms to generate pseudo random numbers. And the article of Park & Miller (1988) entitled Random generators: good ones are hard to find is a clear proof. Despite this fact, most users thought the rand function they used was good, because of a short period and a term to term dependence. -
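The linear congruential generation mentioned in this excerpt can be illustrated with a multiplicative generator of the kind associated with Park & Miller (1988). The following is only a minimal sketch, not code from the note or from any package: the function name `park_miller` and the constants (multiplier 16807, modulus 2^31 - 1) are assumptions chosen for the example.

```python
M = 2**31 - 1   # modulus (a Mersenne prime); assumed for this example
A = 16807       # multiplier 7**5; assumed for this example


def park_miller(seed, count):
    """Return `count` uniform variates in (0, 1) from a multiplicative LCG.

    The integer state follows x_{k+1} = A * x_k mod M.
    The seed must lie in 1..M-1 (hypothetical helper, not a library API).
    """
    if not 0 < seed < M:
        raise ValueError("seed must be in 1..M-1")
    x = seed
    out = []
    for _ in range(count):
        x = (A * x) % M      # deterministic update of the state
        out.append(x / M)    # scale the integer state into (0, 1)
    return out
```

Calling `park_miller(1, 5)` twice returns the same five values, which shows in miniature why such numbers are called pseudo random: the whole sequence is determined by the seed.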

Package 'Randtoolbox'

Package ‘randtoolbox’. January 31, 2020. Type: Package. Title: Toolbox for Pseudo and Quasi Random Number Generation and Random Generator Tests. Version: 1.30.1. Author: R port by Yohan Chalabi, Christophe Dutang, Petr Savicky and Diethelm Wuertz with some underlying C codes of (i) the SFMT algorithm from M. Matsumoto and M. Saito, (ii) the Knuth-TAOCP RNG from D. Knuth. Maintainer: Christophe Dutang <[email protected]>. Description: Provides (1) pseudo random generators - general linear congruential generators, multiple recursive generators and generalized feedback shift register (SF-Mersenne Twister algorithm and WELL generators); (2) quasi random generators - the Torus algorithm, the Sobol sequence, the Halton sequence (including the Van der Corput sequence) and (3) some generator tests - the gap test, the serial test, the poker test. See e.g. Gentle (2003) <doi:10.1007/b97336>. The package can be provided without the rngWELL dependency on demand. Take a look at the Distribution task view of types and tests of random number generators. Version in Memoriam of Diethelm and Barbara Wuertz. Depends: rngWELL (>= 0.10-1). License: BSD_3_clause + file LICENSE. NeedsCompilation: yes. Repository: CRAN. Date/Publication: 2020-01-31 10:17:00 UTC. R topics documented: randtoolbox-package, auxiliary, coll.test, coll.test.sparse, freq.test, gap.test, get.primes, getWELLState, order.test, poker.test, pseudoRNG, quasiRNG, rngWELLScriptR, runifInterface, serial.test, soboltestfunctions, Index. randtoolbox-package: General remarks on toolbox for pseudo and quasi random number generation. Description: The randtoolbox-package started in 2007 during an ISFA (France) working group. -

Gretl User's Guide

Gretl User’s Guide. Gnu Regression, Econometrics and Time-series. Allin Cottrell, Department of Economics, Wake Forest University. Riccardo “Jack” Lucchetti, Dipartimento di Economia, Università Politecnica delle Marche. December, 2008. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.1 or any later version published by the Free Software Foundation (see http://www.gnu.org/licenses/fdl.html). Contents: 1 Introduction; 1.1 Features at a glance; 1.2 Acknowledgements; 1.3 Installing the programs. I Running the program: 2 Getting started; 2.1 Let’s run a regression; 2.2 Estimation output; 2.3 The main window menus; 2.4 Keyboard shortcuts; 2.5 The gretl toolbar; 3 Modes of working; 3.1 Command scripts; 3.2 Saving script objects; 3.3 The gretl console; 3.4 The Session concept; 4 Data files; 4.1 Native format; 4.2 Other data file formats; 4.3 Binary databases -

MODERN MATHEMATICS 1900 to 1950

MODERN MATHEMATICS 1900 to 1950 Michael J. Bradley, Ph.D. Modern Mathematics: 1900 to 1950 Copyright © 2006 by Michael J. Bradley, Ph.D. All rights reserved. No part of this book may be reproduced or utilized in any form or by any means, electronic or mechanical, including photocopying, recording, or by any information storage or retrieval systems, without permission in writing from the publisher. For information contact: Chelsea House An imprint of Infobase Publishing 132 West 31st Street New York NY 10001 Library of Congress Cataloging-in-Publication Data Bradley, Michael J. (Michael John), 1956– Modern mathematics : 1900 to 1950 / Michael J. Bradley. p. cm.—(Pioneers in mathematics) Includes bibliographical references and index. ISBN 0-8160-5426-6 (acid-free paper) 1. Mathematicians—Biography. 2. Mathematics—History—20th century. I. Title. QA28.B736 2006 510.92'2—dc22 2005036152 Chelsea House books are available at special discounts when purchased in bulk quantities for businesses, associations, institutions, or sales promotions. Please call our Special Sales Department in New York at (212) 967-8800 or (800) 322-8755. You can find Chelsea House on the World Wide Web at http://www.chelseahouse.com Text design by Mary Susan Ryan-Flynn Cover design by Dorothy Preston Illustrations by Jeremy Eagle Printed in the United States of America MP FOF 10 9 8 7 6 5 4 3 2 1 This book is printed on acid-free paper. -

Gretl 1.6.0 and Its Numerical Accuracy - Technical Supplement

GRETL 1.6.0 AND ITS NUMERICAL ACCURACY - TECHNICAL SUPPLEMENT. A. Talha YALTA and A. Yasemin YALTA, Department of Economics, Fordham University, New York. 2007-07-13. This supplement provides detailed information regarding both the procedure and the results of our testing GRETL using the univariate summary statistics, analysis of variance, linear regression, and nonlinear least squares benchmarks of the NIST Statistical Reference Datasets (StRD), as well as verification of the random number generator and the accuracy of statistical distributions used for calculating critical values. The numbers of accurate digits (NADs) for testing the StRD datasets are also supplied and compared with several commercial packages namely SAS v6.12, SPSS v7.5, S-Plus v4.0, Stata 7, and Gauss for Windows v3.2.37. We did not perform any accuracy tests of the latest versions of these programs and the results that we supply for packages other than GRETL 1.6.0 are those independently verified and published by McCullough (1999a), McCullough and Wilson (2002), and Vinod (2000) previously. Readers are also referred to McCullough (1999b) and Keeling and Pavur (2007), which examine software accuracy and supply the NADs across four and nine software packages at once respectively. Since all these programs perform calculations using 64-bit floating point arithmetic, discrepancies in results are caused by differences in algorithms used to perform various statistical operations. 1 Numerical tests of the NIST StRD benchmarks. For the StRD reference data sets, NIST provides certified values calculated with multiple precision in 500 digits of accuracy, which were later rounded to 15 significant digits (11 for nonlinear least squares). -

Three Aspects of the Theory of Complex Multiplication

Three Aspects of the Theory of Complex Multiplication. Takase Masahito. Introduction. In the letter addressed to Dedekind dated March 15, 1880, Kronecker wrote: - the Abelian equations with square roots of rational numbers are exhausted by the transformation equations of elliptic functions with singular modules, just as the Abelian equations with integral coefficients are exhausted by the cyclotomic equations. ([Kronecker 6] p. 455) This is a quite impressive conjecture as to the construction of Abelian equations over an imaginary quadratic number fields, which Kronecker called "my favorite youthful dream" (ibid.). The aim of the theory of complex multiplication is to solve Kronecker's youthful dream; however, it is not the history of formation of the theory of complex multiplication but Kronecker's youthful dream itself that I would like to consider in the present paper. I will consider Kronecker's youthful dream from three aspects: its history, its theoretical character, and its essential meaning in mathematics. First of all, I will refer to the history and theoretical character of the youthful dream (Part I). Abel modeled the division theory of elliptic functions after the division theory of a circle by Gauss and discovered the concept of an Abelian equation through the investigation of the algebraically solvable conditions of the division equations of elliptic functions. This discovery is the starting point of the path to Kronecker's youthful dream. If we follow the path from Abel to Kronecker, then it will soon become clear that Kronecker's youthful dream has the theoretical character to be called the inverse problem of Abel. -

3 Uniform Random Numbers

Contents: 3 Uniform random numbers; 3.1 Random and pseudo-random numbers; 3.2 States, periods, seeds, and streams; 3.3 U(0, 1) random variables; 3.4 Inside a random number generator; 3.5 Uniformity measures; 3.6 Statistical tests of random numbers; 3.7 Pairwise independent random numbers; End notes; Exercises. © Art Owen 2009–2013, do not distribute or post electronically without author's permission. 3 Uniform random numbers. Monte Carlo methods require a source of randomness. For the convenience of the users, random numbers are delivered as a stream of independent U(0, 1) random variables. As we will see in later chapters, we can generate a vast assortment of random quantities starting with uniform random numbers. In practice, for reasons outlined below, it is usual to use simulated or pseudo-random numbers instead of genuinely random numbers. This chapter looks at how to make good use of random number generators. That begins with selecting a good one. Sometimes it ends there too, but in other cases we need to learn how best to set seeds and organize multiple streams and substreams of random numbers. This chapter also covers quality criteria for random number generators, briefly discusses the most commonly used algorithms, and points out some numerical issues that can trap the unwary. 3.1 Random and pseudo-random numbers. It would be satisfying to generate random numbers from a process that according to well established understanding of physics is truly random. -

A Recap of Randomness, the Mersenne Twister, Xorshift, Linear

Programmatic Entropy: Exploring the Most Prominent PRNGs. Jared Anderson, Evan Bause, Nick Pappas, Joshua Oberlin. A Recap of Randomness. Random number generating algorithms are rated by two primary measures: entropy - the measure of disorder in the numbers created and period - how long it takes before the PRNG begins to inevitably cycle its pattern. While high entropy and a long period are desirable traits, it is sometimes necessary to settle for a less intense method of random number generation to not sacrifice performance of the product the PRNG is required for. However, in the real world PRNGs must also be evaluated for memory footprint, CPU requirements, and speed. In this poster we will explore three of the major types of PRNGs, their history, their inner workings, and their uses. Xorshift. Xorshift random number generators are an extremely fast and efficient type of PRNG discovered by George Marsaglia. An xorshift PRNG works by taking the exclusive or (xor) of a computer word with a shifted version of itself. For a single integer x, an xorshift operation can produce a sequence of 2^32 - 1 random integers. For a pair of integers x, y, it can produce a sequence of 2^64 - 1 random integers. For a triple x, y, z, it can produce a sequence of 2^96 - 1, and so on. Linear Congruential Generators. The Mersenne Twister. The Mersenne Twister is the most widely used general purpose pseudorandom number generator today. A Mersenne prime is a prime number that is one less than a power of two, and in the case of a Mersenne Twister, is its chosen period length (most commonly 2^19937 − 1). -
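The xorshift step described in this excerpt (xor of a word with shifted copies of itself) can be sketched for a single 32-bit word as follows. This is an illustrative sketch only, not code from the poster: the shift amounts 13, 17 and 5 are one commonly quoted choice and are an assumption here, as are the names `xorshift32` and `xorshift_stream`.

```python
MASK32 = 0xFFFFFFFF  # keep the state within one 32-bit word


def xorshift32(state):
    """Advance a nonzero 32-bit state by one xorshift step.

    The shift triple (13, 17, 5) is an assumed, commonly used choice.
    """
    state ^= (state << 13) & MASK32   # xor with a left-shifted copy
    state ^= state >> 17              # xor with a right-shifted copy
    state ^= (state << 5) & MASK32    # xor with a left-shifted copy
    return state & MASK32


def xorshift_stream(seed, count):
    """Return `count` successive 32-bit outputs starting from `seed`."""
    state = seed & MASK32
    if state == 0:
        raise ValueError("seed must be nonzero: zero maps to itself")
    values = []
    for _ in range(count):
        state = xorshift32(state)
        values.append(state)
    return values
```

The zero state is excluded because xor and shift operations map zero to zero, which is why the sequence length quoted for one word is 2^32 - 1 rather than 2^32.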

1. Monte Carlo Integration

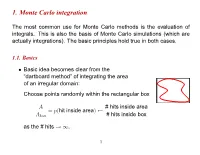

1. Monte Carlo integration. The most common use for Monte Carlo methods is the evaluation of integrals. This is also the basis of Monte Carlo simulations (which are actually integrations). The basic principles hold true in both cases. 1.1. Basics. • Basic idea becomes clear from the “dartboard method” of integrating the area of an irregular domain: choose points randomly within the rectangular box; then $A / A_{\mathrm{box}} = p(\text{hit inside area}) \approx (\#\,\text{hits inside area}) / (\#\,\text{hits inside box})$ as the # hits $\to \infty$. • Buffon’s (1707–1788) needle experiment to determine π: – Throw needles, length $l$, on a grid of lines distance $d$ apart. – Probability that a needle falls on a line: $P = 2l / (\pi d)$. – Aside: Lazzarini 1901: Buffon’s experiment with 34080 throws: $\pi \approx 355/113 = 3.14159292$. Way too good result! (See “π through the ages”, http://www-groups.dcs.st-and.ac.uk/~history/HistTopics/Pi_through_the_ages.html) 1.2. Standard Monte Carlo integration. • Let us consider an integral in $d$ dimensions: $I = \int_V d^d x \, f(x)$. Let $V$ be a $d$-dim hypercube, with $0 \le x_\mu \le 1$ (for simplicity). • Monte Carlo integration: - Generate $N$ random vectors $x_i$ from flat distribution ($0 \le (x_i)_\mu \le 1$). - As $N \to \infty$, $\frac{V}{N} \sum_{i=1}^{N} f(x_i) \to I$. - Error: $\propto 1/\sqrt{N}$, independent of $d$! (Central limit) • “Normal” numerical integration methods (Numerical Recipes): - Divide each axis in $n$ evenly spaced intervals. - Total # of points $N \equiv n^d$. - Error: $\propto 1/n$ (Midpoint rule), $\propto 1/n^2$ (Trapezoidal rule), $\propto 1/n^4$ (Simpson). • If $d$ is small, Monte Carlo integration has much larger errors than standard methods. -
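The dartboard idea and the hypercube estimate described in this excerpt can be tried out with a short sketch. It is an illustration under stated assumptions, not code from the lecture notes: the helper names `mc_integrate` and `inside_quarter_disc`, the seed, and the choice of a quarter disc as the "irregular domain" are all hypothetical, and Python's standard `random` module stands in for whatever generator the notes use.

```python
import math
import random


def mc_integrate(f, d, n, rng):
    """Estimate the integral of f over the unit hypercube [0, 1]^d.

    Returns (estimate, standard_error); the volume V equals 1 here,
    so the estimate is simply the sample mean of f.
    """
    total = 0.0
    total_sq = 0.0
    for _ in range(n):
        x = [rng.random() for _ in range(d)]   # one flat random vector
        y = f(x)
        total += y
        total_sq += y * y
    mean = total / n
    variance = max(total_sq / n - mean * mean, 0.0)
    return mean, math.sqrt(variance / n)       # error shrinks like 1/sqrt(n)


def inside_quarter_disc(x):
    """Dartboard indicator: 1 if the point lies inside the unit quarter disc."""
    return 1.0 if x[0] ** 2 + x[1] ** 2 <= 1.0 else 0.0


rng = random.Random(12345)                     # fixed seed, assumed for repeatability
area, err = mc_integrate(inside_quarter_disc, 2, 100_000, rng)
pi_estimate = 4.0 * area                       # quarter disc area is pi / 4
```

The fraction of hits estimates the area ratio, and the returned standard error gives a rough check of the 1/√N behaviour: quadrupling `n` should roughly halve it.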

Ziggurat Revisited

Ziggurat Revisited. Dirk Eddelbuettel. RcppZiggurat version 0.1.3 as of July 25, 2015. Abstract. Random numbers following a Standard Normal distribution are of great importance when using simulations as a means for investigation. The Ziggurat method (Marsaglia and Tsang, 2000; Leong, Zhang, Lee, Luk, and Villasenor, 2005) is one of the fastest methods to generate normally distributed random numbers while also providing excellent statistical properties. This note provides updated implementations of the Ziggurat generator suitable for 32- and 64-bit operating systems. It compares the original implementations to several popular Open Source implementations. A new implementation embeds the generator into an appropriate C++ class structure. The performance of the different generators is investigated both via extended timing and through a series of statistical tests, including a suggested new test for testing Normal deviates directly. Integration into other systems such as R is discussed as well. 1 Introduction. Generating random numbers for use in simulation is a classic topic in scientific computing and about as old as the field itself. Most algorithms concentrate on the uniform distribution. Its values can be used to generate randomly distributed values from other distributions simply by using the relevant inverse function. Regarding terminology, all computational algorithms for generation of random numbers are by definition deterministic. Here, we follow standard convention and refer to such numbers as pseudo-random as they can always be recreated given the seed value for a given sequence. We are not concerned in this note with quasi-random numbers (also called low-discrepancy sequences). We consider this topic to be a subset of pseudo-random numbers subject to distributional constraints. -
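The remark in this excerpt that uniform values can be turned into other distributions "by using the relevant inverse function" can be illustrated with the exponential distribution, whose inverse distribution function has a closed form. This is a minimal sketch and not part of RcppZiggurat: the helper name `exponential_variate`, the rate 2.0 and the seed are assumptions for the example, and the Ziggurat method itself is a different technique.

```python
import math
import random


def exponential_variate(u, rate):
    """Map a uniform variate u in [0, 1) to an Exponential(rate) variate.

    Uses the inverse of F(x) = 1 - exp(-rate * x), i.e. x = -ln(1 - u) / rate.
    """
    return -math.log(1.0 - u) / rate


rng = random.Random(2015)   # fixed seed, assumed for repeatability
samples = [exponential_variate(rng.random(), 2.0) for _ in range(50_000)]
sample_mean = sum(samples) / len(samples)   # should be close to 1 / rate
```

Because the same seed always reproduces the same `samples`, the sketch also shows the pseudo-random property the excerpt describes: the sequence can be recreated from the seed value.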

Gretl Manual

Gretl Manual: Gnu Regression, Econometrics and Time-series Library, by Allin Cottrell, Department of Economics, Wake Forest University. August, 2005. Copyright © 2001–2005 Allin Cottrell. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.1 or any later version published by the Free Software Foundation (see http://www.gnu.org/licenses/fdl.html). Table of Contents: 1. Introduction: Features at a glance; Acknowledgements; Installing the programs. 2. Getting started: Let’s run a regression; Estimation output; The