3 Uniform Random Numbers 3.1 Random and Pseudo-Random Numbers

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

A Note on Random Number Generation

A note on random number generation
Christophe Dutang and Diethelm Wuertz
September 2009

“Nothing in Nature is random. A thing appears random only through the incompleteness of our knowledge.” Spinoza, Ethics I.

1 Introduction

Random simulation has long been a very popular and well studied field of mathematics. There exists a wide range of applications in biology, finance, insurance, physics and many others. So simulations of random numbers are crucial. In this note, we describe the most random number algorithms.

Let us recall that the only things that are truly random are measurements of physical phenomena, such as the thermal noise of semiconductor chips or radioactive sources. The only way to simulate some randomness on computers is carried out by deterministic ...

... number generation. By “random numbers”, we mean random variates of the uniform U(0, 1) distribution. More complex distributions can be generated with uniform variates and rejection or inversion methods. Pseudo random number generation aims to seem random, whereas quasi random number generation aims to be deterministic but well equidistributed.

Those familiar with algorithms such as linear congruential generation, Mersenne-Twister type algorithms, and low discrepancy sequences should go directly to the next section.

2.1 Pseudo random generation

At the beginning of the nineties, there were no state-of-the-art algorithms to generate pseudo random numbers. The article of Park & Miller (1988) entitled Random number generators: good ones are hard to find is clear proof of this. Even so, most users thought the rand function they used was good, despite its short period and term-to-term dependence. -
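The linear congruential generation mentioned in this excerpt can be sketched in a few lines. The block below is a minimal illustration, not code from the note: it assumes the multiplicative ("Lehmer") form x_{n+1} = a · x_n mod m with the constants a = 16807 and m = 2^31 − 1 that are commonly called the "minimal standard" of Park & Miller (1988). The function names are hypothetical.

```python
from itertools import islice

# Assumed constants: the "minimal standard" multiplier and modulus
# associated with Park & Miller (1988); the excerpt itself does not list them.
A = 16807
M = 2**31 - 1


def lehmer_stream(seed):
    """Yield successive states of x_{n+1} = A * x_n mod M (hypothetical helper)."""
    if not 0 < seed < M:
        raise ValueError("seed must satisfy 0 < seed < 2**31 - 1")
    state = seed
    while True:
        state = (A * state) % M
        yield state


def uniform_stream(seed):
    """Map each integer state to a float strictly between 0 and 1."""
    for state in lehmer_stream(seed):
        yield state / M


first_three = list(islice(lehmer_stream(1), 3))
ten_thousandth = next(islice(lehmer_stream(1), 9999, None))
uniforms = list(islice(uniform_stream(1), 1000))
```

The generator is fully determined by its seed, which is what "pseudo" random means here: rerunning `lehmer_stream(1)` always reproduces the same sequence.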

Package 'Randtoolbox'

Package ‘randtoolbox’
January 31, 2020

Type Package
Title Toolbox for Pseudo and Quasi Random Number Generation and Random Generator Tests
Version 1.30.1
Author R port by Yohan Chalabi, Christophe Dutang, Petr Savicky and Diethelm Wuertz with some underlying C codes of (i) the SFMT algorithm from M. Matsumoto and M. Saito, (ii) the Knuth-TAOCP RNG from D. Knuth.
Maintainer Christophe Dutang <[email protected]>
Description Provides (1) pseudo random generators - general linear congruential generators, multiple recursive generators and generalized feedback shift register (SF-Mersenne Twister algorithm and WELL generators); (2) quasi random generators - the Torus algorithm, the Sobol sequence, the Halton sequence (including the Van der Corput sequence) and (3) some generator tests - the gap test, the serial test, the poker test. See e.g. Gentle (2003) <doi:10.1007/b97336>. The package can be provided without the rngWELL dependency on demand. Take a look at the Distribution task view of types and tests of random number generators. Version in Memoriam of Diethelm and Barbara Wuertz.
Depends rngWELL (>= 0.10-1)
License BSD_3_clause + file LICENSE
NeedsCompilation yes
Repository CRAN
Date/Publication 2020-01-31 10:17:00 UTC

R topics documented: randtoolbox-package, auxiliary, coll.test, coll.test.sparse, freq.test, gap.test, get.primes, getWELLState, order.test, poker.test, pseudoRNG, quasiRNG, rngWELLScriptR, runifInterface, serial.test, soboltestfunctions, Index

randtoolbox-package: General remarks on toolbox for pseudo and quasi random number generation

Description: The randtoolbox-package started in 2007 during an ISFA (France) working group. -

Gretl User's Guide

Gretl User’s Guide
Gnu Regression, Econometrics and Time-series
Allin Cottrell, Department of Economics, Wake Forest University
Riccardo “Jack” Lucchetti, Dipartimento di Economia, Università Politecnica delle Marche
December, 2008

Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.1 or any later version published by the Free Software Foundation (see http://www.gnu.org/licenses/fdl.html).

Contents
1 Introduction
1.1 Features at a glance
1.2 Acknowledgements
1.3 Installing the programs
I Running the program
2 Getting started
2.1 Let’s run a regression
2.2 Estimation output
2.3 The main window menus
2.4 Keyboard shortcuts
2.5 The gretl toolbar
3 Modes of working
3.1 Command scripts
3.2 Saving script objects
3.3 The gretl console
3.4 The Session concept
4 Data files
4.1 Native format
4.2 Other data file formats
4.3 Binary databases -

Scope of Various Random Number Generators in Ant System Approach for Tsp

AC 2007-458: SCOPE OF VARIOUS RANDOM NUMBER GENERATORS IN ANT SYSTEM APPROACH FOR TSP
S.K. Sen, Florida Institute of Technology

Syamal K Sen ([email protected]) is currently a professor in the Dept. of Mathematical Sciences, Florida Institute of Technology (FIT), Melbourne, Florida. He received his Ph.D. (Engg.) in Computational Science from the prestigious Indian Institute of Science (IISc), Bangalore, India in 1973 and then continued as a faculty member of this institute for 33 years. He was a professor of Supercomputer Computer Education and Research Centre of IISc during 1996-2004 before joining FIT in January 2004. He held a Fulbright Fellowship for senior teachers in 1991 and worked in FIT. He also held faculty positions, on leave from IISc, in several universities around the globe, including University of Mauritius (Professor, Maths., 1997-98), Mauritius; Florida Institute of Technology (Visiting Professor, Math. Sciences, 1995-96); Al-Fateh University (Associate Professor, Computer Engg., 1981-83), Tripoli, Libya; and University of the West Indies (Lecturer, Maths., 1975-76), Barbados. He has published over 130 research articles in refereed international journals such as Nonlinear World, Appl. Maths. and Computation, J. of Math. Analysis and Application, Simulation, Int. J. of Computer Maths., Int. J Systems Sci., IEEE Trans. Computers, Internl. J. Control, Internat. J. Math. & Math. Sci., Matrix & Tensor Qrtly, Acta Applicande Mathematicae, J. Computational and Applied Mathematics, Advances in Modelling and Simulation, Int. J. Engineering Simulation, Neural, Parallel and Scientific Computations, Nonlinear Analysis, Computers and Mathematics with Applications, Mathematical and Computer Modelling, Int. J. Innovative Computing, Information and Control, and J. Computational Methods in Sciences and Engineering. -

Gretl 1.6.0 and Its Numerical Accuracy - Technical Supplement

GRETL 1.6.0 AND ITS NUMERICAL ACCURACY - TECHNICAL SUPPLEMENT
A. Talha YALTA and A. Yasemin YALTA
Department of Economics, Fordham University, New York
2007-07-13

This supplement provides detailed information regarding both the procedure and the results of our testing GRETL using the univariate summary statistics, analysis of variance, linear regression, and nonlinear least squares benchmarks of the NIST Statistical Reference Datasets (StRD), as well as verification of the random number generator and the accuracy of statistical distributions used for calculating critical values. The numbers of accurate digits (NADs) for testing the StRD datasets are also supplied and compared with several commercial packages, namely SAS v6.12, SPSS v7.5, S-Plus v4.0, Stata 7, and Gauss for Windows v3.2.37. We did not perform any accuracy tests of the latest versions of these programs and the results that we supply for packages other than GRETL 1.6.0 are those independently verified and published by McCullough (1999a), McCullough and Wilson (2002), and Vinod (2000) previously. Readers are also referred to McCullough (1999b) and Keeling and Pavur (2007), which examine software accuracy and supply the NADs across four and nine software packages at once respectively. Since all these programs perform calculations using 64-bit floating point arithmetic, discrepancies in results are caused by differences in algorithms used to perform various statistical operations.

1 Numerical tests of the NIST StRD benchmarks

For the StRD reference data sets, NIST provides certified values calculated with multiple precision in 500 digits of accuracy, which were later rounded to 15 significant digits (11 for nonlinear least squares). -

A Recap of Randomness the Mersenne Twister Xorshift Linear

Programmatic Entropy: Exploring the Most Prominent PRNGs
Jared Anderson, Evan Bause, Nick Pappas, Joshua Oberlin

A Recap of Randomness

Random number generating algorithms are rated by two primary measures: entropy, the measure of disorder in the numbers created, and period, how long it takes before the PRNG inevitably begins to cycle its pattern. While high entropy and a long period are desirable traits, it is sometimes necessary to settle for a less intense method of random number generation so as not to sacrifice performance of the product the PRNG is required for. In the real world, PRNGs must also be evaluated for memory footprint, CPU requirements, and speed. In this poster we will explore three of the major types of PRNGs, their history, their inner workings, and their uses.

Linear Congruential Generators

Xorshift

Xorshift random number generators are an extremely fast and efficient type of PRNG discovered by George Marsaglia. An xorshift PRNG works by taking the exclusive or (xor) of a computer word with a shifted version of itself. For a single integer x, an xorshift operation can produce a sequence of $2^{32} - 1$ random integers. For a pair of integers x, y, it can produce a sequence of $2^{64} - 1$ random integers. For a triple x, y, z, it can produce a sequence of $2^{96} - 1$, and so on.

The Mersenne Twister

The Mersenne Twister is the most widely used general purpose pseudorandom number generator today. A Mersenne prime is a prime number that is one less than a power of two, and in the case of a Mersenne Twister, it is the chosen period length (most commonly $2^{19937} - 1$). -
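The xorshift step described in the poster can be sketched as follows. This is an illustrative 32-bit variant that assumes the shift triple (13, 17, 5), one of the triples Marsaglia lists for a period of 2^32 − 1; the poster does not say which triple it has in mind, and the function names are hypothetical. Python integers are unbounded, so left shifts are masked to emulate a 32-bit word.

```python
MASK32 = 0xFFFFFFFF  # keep results inside one 32-bit word


def xorshift32(state):
    """One xorshift step: xor the word with shifted copies of itself.

    Assumed shift triple: (13, 17, 5). The state must be nonzero,
    because zero maps to zero forever.
    """
    state ^= (state << 13) & MASK32
    state ^= state >> 17
    state ^= (state << 5) & MASK32
    return state & MASK32


def xorshift32_sequence(seed, count):
    """Return the first `count` outputs starting from a nonzero seed."""
    if seed == 0 or seed != seed & MASK32:
        raise ValueError("seed must be a nonzero 32-bit integer")
    outputs = []
    state = seed
    for _ in range(count):
        state = xorshift32(state)
        outputs.append(state)
    return outputs


first_output = xorshift32(1)  # 270369
sample = xorshift32_sequence(2463534242, 5)
```

The all-zero state is the one value excluded from the cycle, which is why the period is 2^32 − 1 rather than 2^32.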

1. Monte Carlo Integration

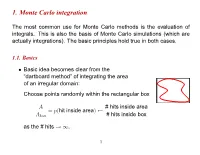

1. Monte Carlo integration

The most common use for Monte Carlo methods is the evaluation of integrals. This is also the basis of Monte Carlo simulations (which are actually integrations). The basic principles hold true in both cases.

1.1. Basics

• Basic idea becomes clear from the “dartboard method” of integrating the area of an irregular domain: choose points randomly within the rectangular box. Then

$$\frac{A}{A_{\text{box}}} = p(\text{hit inside area}) \leftarrow \frac{\#\ \text{hits inside area}}{\#\ \text{hits inside box}} \quad \text{as the \# hits} \to \infty .$$

• Buffon’s (1707–1788) needle experiment to determine π:
– Throw needles, length $l$, on a grid of lines distance $d$ apart.
– Probability that a needle falls on a line: $P = \frac{2l}{\pi d}$
– Aside: Lazzarini 1901: Buffon’s experiment with 34080 throws: $\pi \approx \frac{355}{113} = 3.14159292$. Way too good result! (See “π through the ages”, http://www-groups.dcs.st-and.ac.uk/~history/HistTopics/Pi_through_the_ages.html)

1.2. Standard Monte Carlo integration

• Let us consider an integral in $d$ dimensions:

$$I = \int_V d^d x \, f(x)$$

Let $V$ be a $d$-dim hypercube, with $0 \le x_\mu \le 1$ (for simplicity).

• Monte Carlo integration:
- Generate $N$ random vectors $x_i$ from flat distribution ($0 \le (x_i)_\mu \le 1$).
- As $N \to \infty$, $\frac{V}{N} \sum_{i=1}^{N} f(x_i) \to I$.
- Error: $\propto 1/\sqrt{N}$, independent of $d$! (Central limit)

• “Normal” numerical integration methods (Numerical Recipes):
- Divide each axis in $n$ evenly spaced intervals
- Total # of points $N \equiv n^d$
- Error: $\propto 1/n$ (Midpoint rule), $\propto 1/n^2$ (Trapezoidal rule), $\propto 1/n^4$ (Simpson)

• If $d$ is small, Monte Carlo integration has much larger errors than standard methods. -
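The estimator from section 1.2 and the dartboard idea from section 1.1 can both be sketched with one small routine. This is a minimal illustration under stated assumptions: the domain is the unit hypercube (so V = 1), Python's built-in `random.Random` stands in for the flat distribution, and the function name, seed, and sample sizes are hypothetical choices. The returned standard error is the sample standard deviation of f divided by √N, which is the 1/√N behaviour the notes attribute to the central limit theorem.

```python
import math
import random


def mc_integrate(f, d, n, rng):
    """Estimate the integral of f over the unit hypercube [0, 1)^d.

    Returns (estimate, standard_error). The volume V is 1 here, so the
    estimate is simply the sample mean of f at n uniform random points.
    """
    total = 0.0
    total_sq = 0.0
    for _ in range(n):
        x = [rng.random() for _ in range(d)]
        fx = f(x)
        total += fx
        total_sq += fx * fx
    mean = total / n
    variance = max(total_sq / n - mean * mean, 0.0)
    return mean, math.sqrt(variance / n)


rng = random.Random(12345)  # fixed seed so the run is reproducible

# Integral of x1 + x2 + x3 over the unit cube; the exact value is 3/2.
estimate, std_error = mc_integrate(sum, 3, 20000, rng)


def inside_quarter_circle(x):
    """Dartboard indicator: 1 if the point lands inside the quarter disc."""
    return 1.0 if x[0] ** 2 + x[1] ** 2 <= 1.0 else 0.0


# Area of the quarter disc is pi/4, so 4 * (hit fraction) estimates pi.
pi_estimate = 4.0 * mc_integrate(inside_quarter_circle, 2, 20000, rng)[0]
```

Quadrupling `n` should roughly halve `std_error`, whatever the dimension `d`.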

Ziggurat Revisited

Ziggurat Revisited
Dirk Eddelbuettel
RcppZiggurat version 0.1.3 as of July 25, 2015

Abstract

Random numbers following a Standard Normal distribution are of great importance when using simulations as a means for investigation. The Ziggurat method (Marsaglia and Tsang, 2000; Leong, Zhang, Lee, Luk, and Villasenor, 2005) is one of the fastest methods to generate normally distributed random numbers while also providing excellent statistical properties. This note provides updated implementations of the Ziggurat generator suitable for 32- and 64-bit operating systems. It compares the original implementations to several popular Open Source implementations. A new implementation embeds the generator into an appropriate C++ class structure. The performance of the different generators is investigated both via extended timing and through a series of statistical tests, including a suggested new test for testing Normal deviates directly. Integration into other systems such as R is discussed as well.

1 Introduction

Generating random numbers for use in simulation is a classic topic in scientific computing and about as old as the field itself. Most algorithms concentrate on the uniform distribution. Its values can be used to generate randomly distributed values from other distributions simply by using the relevant inverse function. Regarding terminology, all computational algorithms for generation of random numbers are by definition deterministic. Here, we follow standard convention and refer to such numbers as pseudo-random, as they can always be recreated given the seed value for a given sequence. We are not concerned in this note with quasi-random numbers (also called low-discrepancy sequences). We consider this topic to be a subset of pseudo-random numbers subject to distributional constraints. -
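The remark about "using the relevant inverse function" refers to inverse transform sampling. A minimal sketch follows, using the exponential distribution because its inverse CDF has a closed form, F⁻¹(u) = −ln(1 − u)/λ. The choice of distribution, the function name, and the seed are illustrative assumptions; this is not the Ziggurat method itself.

```python
import math
import random


def exponential_from_uniform(u, rate):
    """Inverse-CDF transform: map u in [0, 1) to an Exponential(rate) draw."""
    if not 0.0 <= u < 1.0:
        raise ValueError("u must lie in [0, 1)")
    return -math.log(1.0 - u) / rate


rng = random.Random(2015)  # fixed seed: the draws can be recreated exactly
rate = 2.0
draws = [exponential_from_uniform(rng.random(), rate) for _ in range(50000)]
sample_mean = sum(draws) / len(draws)  # should be close to 1 / rate
```

Using `1.0 - u` rather than `u` inside the logarithm avoids `log(0)`, since `random()` can return 0.0 but never 1.0.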

Gretl Manual

Gretl Manual
Gnu Regression, Econometrics and Time-series Library
Allin Cottrell, Department of Economics, Wake Forest University
August, 2005

Gretl Manual: Gnu Regression, Econometrics and Time-series Library by Allin Cottrell
Copyright © 2001–2005 Allin Cottrell

Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.1 or any later version published by the Free Software Foundation (see http://www.gnu.org/licenses/fdl.html).

Table of Contents
1. Introduction
Features at a glance
Acknowledgements
Installing the programs
2. Getting started
Let’s run a regression
Estimation output
The -

Econometrics with Octave

Econometrics with Octave
Dirk Eddelbüttel
Bank of Montreal, Toronto, Canada. [email protected]
November 1999

Summary

GNU Octave is an open-source implementation of a (mostly Matlab compatible) high-level language for numerical computations. This review briefly introduces Octave, discusses applications of Octave in an econometric context, and illustrates how to extend Octave with user-supplied C++ code. Several examples are provided.

1 Introduction

Econometricians sweat linear algebra. Be it for linear or non-linear problems of estimation or inference, matrix algebra is a natural way of expressing these problems on paper. However, when it comes to writing computer programs to either implement tried and tested econometric procedures, or to research and prototype new routines, programming languages such as C or Fortran are more of a burden than an aid. Having to enhance the language by supplying functions for even the most primitive operations adds extra programming effort, introduces new points of failure, and moves the level of abstraction further away from the elegant mathematical expressions. As Eddelbüttel (1996) argues, object-oriented programming provides ‘a step up’ from Fortran or C by enabling the programmer to seamlessly add new data types such as matrices, along with operations on these new data types, to the language. But with Moore's Law still being validated by ever faster processors, and, hence, ever increasing computational power, the prime reason for using compiled code, i.e. speed, becomes less relevant.
Hence the growing popularity of interpreted programming languages, both in general, as witnessed by the surge in popularity of the general-purpose programming languages Perl and Python, and in particular for numerical applications with strong emphasis on matrix calculus, where languages such as Gauss, Matlab, Ox, R and Splus, which were reviewed by Cribari-Neto and Jensen (1997), Cribari-Neto (1997) and Cribari-Neto and Zarkos (1999), have become popular. -

Lecture 23 Random Numbers 1 Introduction 1.1 Motivation Scientifically an Event Is Considered Random If It Is Unpredictable

Lecture 23 Random numbers

1 Introduction

1.1 Motivation

Scientifically an event is considered random if it is unpredictable. Classic examples are a coin flip, a die roll and a lottery ticket drawing. For a coin flip we can associate 1 with “heads” and 0 with “tails,” and a sequence of coin flips then produces a random sequence of binary digits. These can be taken to describe a random integer. For example, 1001011 can be interpreted as the binary number corresponding to

$$1(2^6)+0(2^5)+0(2^4)+1(2^3)+0(2^2)+1(2^1)+1(2^0)=64+8+2+1=75 \qquad (1)$$

For a lottery drawing we can label $n$ tickets with the numbers $0,1,2,\ldots,n-1$. A drawing then produces a random integer $i$ with $0 \le i \le n-1$. In these and other ways random events can be associated with corresponding random numbers. Conversely, if we are able to generate random numbers we can use those to represent random events or phenomena, and this can be very useful in engineering analysis and design. Consider the design of a bridge structure. We have no direct control over what or how many vehicles drive across the bridge or what environmental conditions it is subject to. How can we be sure our design will function properly? A good way to uncover unforeseen problems with a design is to simulate it being subject to a large number of random conditions, and this is one of the many motivations for developing random number generators. By the way, if you doubt this is a real problem, read up on the Tacoma Narrows bridge which failed spectacularly due to wind forces alone [1]. -
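The mapping from coin flips to an integer in equation (1) can be checked with a short sketch. The function names are hypothetical, and the simulated flips come from Python's pseudo-random `random.Random`, which is only a stand-in for a physical coin.

```python
import random


def bits_to_int(bits):
    """Interpret a sequence of 0/1 digits, most significant first, as an integer."""
    value = 0
    for bit in bits:
        value = 2 * value + bit  # shift left by one place, then add the new digit
    return value


def random_int_from_flips(n_flips, rng):
    """Simulate n_flips coin flips (1 = heads, 0 = tails) and read them as binary."""
    flips = [rng.randint(0, 1) for _ in range(n_flips)]
    return bits_to_int(flips)


example = bits_to_int([1, 0, 0, 1, 0, 1, 1])  # the digits 1001011 from equation (1)
simulated = random_int_from_flips(7, random.Random(1))
```

Seven flips give an integer between 0 and 127 inclusive; in general, n flips cover the range 0 to 2^n − 1.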

Random Sampling: Practice Makes Imperfect 3 2 Pseudo-Random Number Generators

Random Sampling: Practice Makes Imperfect
Philip B. Stark and Kellie Ottoboni

Abstract

The pseudo-random number generators (PRNGs), sampling algorithms, and algorithms for generating random integers in some common statistical packages and programming languages are unnecessarily inaccurate, by an amount that may matter for statistical inference. Most use PRNGs with state spaces that are too small for contemporary sampling problems and methods such as the bootstrap and permutation tests. The random sampling algorithms in many packages rely on the false assumption that PRNGs produce IID U[0,1) outputs. The discreteness of PRNG outputs and the limited state space of common PRNGs cause those algorithms to perform poorly in practice. Statistics packages and scientific programming languages should use cryptographically secure PRNGs by default (not for their security properties, but for their statistical ones), and offer weaker PRNGs only as an option. Software should not use methods that assume PRNG outputs are IID U[0,1) random variables, such as generating a random sample by permuting the population and taking the first k items or generating random integers by multiplying a pseudo-random binary fraction or float by a constant and rounding the result. More accurate methods are available.

The difference between theory and practice is smaller in theory than it is in practice. —Unknown

In theory, there’s no difference between theory and practice, but in practice, there is. —Jan L.A. van de Snepscheut

arXiv:1810.10985v2 [stat.CO] 26 Oct 2018

Philip B. Stark, University of California, Berkeley, e-mail: [email protected]
Kellie Ottoboni, University of California, Berkeley, e-mail: [email protected] -
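The abstract's warning about multiplying a binary fraction by a constant can be made concrete with a toy word size. The sketch below enumerates every 4-bit word and maps it to {0, 1, 2} by multiply-and-floor, which cannot be uniform because 16 is not divisible by 3. It then shows rejection sampling on raw bits as one commonly used unbiased alternative. Whether this is the specific method the authors have in mind is an assumption, and the function names are hypothetical.

```python
import random
from collections import Counter


def multiply_and_floor(word, w, n):
    """Map a w-bit word to {0, ..., n-1} via floor(n * word / 2**w)."""
    return (n * word) >> w


def randbelow_rejection(n, getrandbits=random.getrandbits):
    """Unbiased integer in {0, ..., n-1}.

    Draw just enough bits to cover n - 1 and retry whenever the value
    falls outside the range, so every accepted value is equally likely.
    """
    if n <= 0:
        raise ValueError("n must be positive")
    k = (n - 1).bit_length()
    if k == 0:
        return 0  # n == 1: only one possible value
    while True:
        candidate = getrandbits(k)
        if candidate < n:
            return candidate


# Exhaustive check over all 16 possible 4-bit words with n = 3.
biased_counts = Counter(multiply_and_floor(word, 4, 3) for word in range(16))

rng = random.Random(2018)
unbiased_sample = [randbelow_rejection(3, rng.getrandbits) for _ in range(30000)]
unbiased_counts = Counter(unbiased_sample)
```

With real 32- or 53-bit words the imbalance per value is smaller than in this 4-bit toy, but it does not vanish and it grows as `n` approaches the word size.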