Mellanox Virtual Protocol Interconnect® Creates the Ideal Gateway Between InfiniBand and Ethernet

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

OFC/NFOEC 2011 Program Archive

OFC/NFOEC 2011 Archive Technical Conference: March 6-10, 2011 Exposition: March 8-10, 2011 Los Angeles Convention Center, Los Angeles, CA, USA At OFC/NFOEC 2011, the optical communications industry was buzzing with the sounds of a larger exhibit hall, expanded programming, product innovations, cutting-edge research presentations, and increased attendance March 6 - 10 in Los Angeles. The exhibit hall grew by 20 percent over last year, featuring new programming for service providers and data center operators, and more exhibitors filling a larger space, alongside its core show floor programs and activities. The more than 500 companies in the exhibition hall showcased innovations in areas such as 100G, tunable XFPs, metro networking, Photonic Integrated Circuits, and more. On hand to demonstrate where the industry is headed were network and test equipment vendors, sub-system and component manufacturers, as well as software, fiber cable and specialty fiber manufacturers. Service providers and enterprises were there to get the latest information on building or upgrading networks or datacenters. OFC/NFOEC also featured expanded program offerings in the areas of high-speed data communications, optical internetworking, wireless backhaul and supercomputing for its 2011 conference and exhibition. This new content and more was featured in standing-room only programs such as the Optical Business Forum, Ethernet Alliance Program, Optical Internetworking Forum Program, Green Touch Panel Session, a special symposium on Meeting the Computercom Challenge and more. Flagship programs Market Watch and the Service Provider Summit also featured topics on data centers, wireless, 100G, and optical networking. Hundreds of educational workshops, short courses, tutorial sessions and invited talks at OFC/NFOEC covered hot topics such as datacom, FTTx/in-home, wireless backhaul, next generation data transfer technology, 100G, coherent, and photonic integration. -

Advancing Applications Performance with InfiniBand

Advancing Applications Performance With InfiniBand. Pak Lui, Application Performance Manager. September 12, 2013. © 2013 Mellanox Technologies. Mellanox Overview (ticker: MLNX). Leading provider of high-throughput, low-latency server and storage interconnect: FDR 56Gb/s InfiniBand and 10/40/56GbE; reduces application wait-time for data; dramatically increases ROI on data center infrastructure. Company headquarters: Yokneam, Israel; Sunnyvale, California; ~1,200 employees worldwide (as of June 2013). Solid financial position: record revenue in FY12 of $500.8M, up 93% year-over-year; Q2’13 revenue of $98.2M; Q3’13 guidance ~$104M to $109M; cash + investments at 6/30/13 = $411.3M. Providing End-to-End Interconnect Solutions. Comprehensive end-to-end software accelerators and management: MXM (Mellanox Messaging Acceleration), FCA (Fabric Collectives Acceleration), UFM (Unified Fabric Management), VSA (Storage Accelerator, iSCSI), UDA (Unstructured Data Accelerator). Comprehensive end-to-end InfiniBand and Ethernet solutions portfolio: ICs, adapter cards, switches/gateways, long-haul systems, cables/modules. Virtual Protocol Interconnect (VPI) Technology [diagram labels]: VPI Adapter; VPI Switch; Unified Fabric Manager; Switch OS Layer; Applications: Networking, Storage, Clustering, Management; Acceleration Engines; 64 ports 10GbE; 36 ports 40/56GbE; 48 10GbE + 12 40/56GbE; 36 ports IB up to 56Gb/s; 8 VPI subnets; Ethernet: 10/40/56 Gb/s; InfiniBand: 10/20/40/56 Gb/s; LOM, Adapter Card, Mezzanine Card; from data center to campus and metro connectivity. MetroDX™ and MetroX™: MetroX™ and MetroDX™ extend InfiniBand and Ethernet RDMA reach; fastest interconnect over 40Gb/s InfiniBand or Ethernet links; supporting multiple distances.

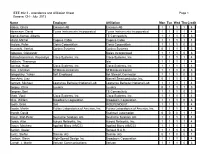

IEEE 802.3 Attendance List

IEEE 802.3 - Attendance and Affiliation Sheet Page 1 Geneva, CH - July 2013 Name Employer Affiliation Mon Tue Wed Thu Credit Abbas, Ghani Ericsson AB Ericsson AB 1 1 1 1 4 Abramson, David Texas Instruments Incorporated Texas Instruments Incorporated 1 1 1 1 4 Alamo Alonso, Alberto TE Connectivity 1 1 1 0 3 Allard, Michel Cogeco Cable Cogeco Cable 1 1 1 1 4 Anslow, Peter Ciena Corporation Ciena Corporation 1 1 1 0 3 Arunarthi, Venkat Cortina Systems Cortina Systems 0 1 1 1 3 Babenko, Oleksandr Molex Incorporated 1 1 1 0 3 Balasubramanian, Koussalya Cisco Systems, Inc. Cisco Systems, Inc. 1 1 1 1 4 Baldwin, Thananya Ixia 1 1 1 1 4 Barrass, Hugh Cisco Systems, Inc. Cisco Systems, Inc. 1 1 1 1 4 Beia, Christian STMicroelectronics STMicroelectronics 1 1 1 0 3 Belopolsky, Yakov Self Employed Bel Stewart Connector 1 1 1 0 3 Ben-Artsi, Liav Marvell Semiconductor, Inc. 0 1 1 1 3 Bennett, Michael Lawrence Berkeley National Lab Lawrence Berkeley National Lab 1 1 1 1 4 Bergey, Chris Luxtera Luxtera 0 1 1 1 3 Bergner, Bert TE Connectivity 1 1 1 0 3 Bhatt, Vipul Cisco Systems, Inc. Cisco Systems, Inc. 1 1 1 1 4 Bliss, William Broadcom Corporation Broadcom Corporation 1 1 1 0 3 Booth, Brad Dell INDEPENDENT 1 1 1 1 4 Bouda, Martin Fujitsu Laboratories of America, Inc. Fujitsu Laboratories of America, Inc. 1 1 1 1 4 Brandt, David Rockwell Automation 0 0 0 1 1 Braun, Ralf-Peter Deutsche Telekom AG Deutsche Telekom AG 1 1 1 1 4 Brown, Alan Aurora Networks, Inc. -

European Technology Media & Telecommunications Monitor

European Technology Media & Telecommunications Monitor: Market and Industry Update, H1 2013. Piper Jaffray European TMT Team: Eric Sanschagrin, Managing Director, Head of European TMT, [email protected], +44 (0) 207 796 8420; Jessica Harneyford, Associate, [email protected], +44 (0) 207 796 8416; Peter Shin, Analyst, [email protected], +44 (0) 207 796 8444; Julie Wright, Executive Assistant, [email protected], +44 (0) 207 796 8427. Selected Piper Jaffray H1 2013 TMT Transactions: TMT Investment Banking Transactions. Date: June 2013. Amount: $47,500,000. Client: IPtronics A/S. Transaction: Mellanox Technologies, Ltd. signed a definitive agreement to acquire IPtronics A/S from Creandum AB, Sunstone Capital A/S and others for $47.5 million in cash. Pursuant to the transaction, IPtronics’ current location in Roskilde, Denmark will serve as Mellanox’s first research and development centre in Europe and IPtronics A/S will operate as a wholly-owned indirect subsidiary of Mellanox Technologies, Ltd. Client Description: Mellanox Technologies Ltd. is a leading supplier of end-to-end InfiniBand and Ethernet interconnect solutions and services for servers and storage. PJC Role: Piper Jaffray acted as exclusive financial advisor to IPtronics A/S. Date: May 2013. Amount: $46,000,000. Client: inContact, Inc. (NasdaqCM: SAAS). Transaction: inContact closed a $46.0 million follow-on offering of 6,396,389 shares of common stock, priced at $7.15 per share. Client Description: inContact, Inc. provides cloud contact center software solutions. PJC Role: Piper Jaffray acted as bookrunner for the offering.

2013 State of the VITA Technology Industry

2013 State of the VITA Technology Industry, September 2013. P.O. Box 19658, Fountain Hills, AZ 85269, 480.837.7486, [email protected], www.vita.com. State of the VITA Technology Industry, September 2013, by Ray Alderman, Executive Director, VITA. This report provides the reader with updates on the state of the VITA Technology industry in particular and of the board industry in general, from the perspective of Ray Alderman, the executive director of VITA. VITA is the trade association dedicated to fostering American National Standards Institute (ANSI) accredited, open system architectures in critical embedded system applications. The complete series of reports can be found at Market Reports (www.vita.com). Contents: Introduction; Business Conditions; Markets (MIL/Aero, Telecom); Optical Developments; Mergers and Acquisitions; Changing Business Models; Market Estimates. Introduction: This issue of the “State of the VITA Technology Industry” recaps our current economic conditions. We will also take a close look at unmanned vehicles of all types. Optical backplanes have been on the horizon for many years; new innovation keeps moving us ever so slowly forward, but we are quickly running out of runway. There have been a few deals made in the mergers and acquisition department, and to wrap it up, we will close with “Alderman’s Business Rules.” Business Conditions: The final numbers for Q1 2013 U.S. GDP growth¹ came in at a disappointing 1.8% after two earlier estimates of 2.4%. The final Q1 GDP was revised to 1.1% by the Bureau of Economic Analysis (the Bureau of Economic Analysis issues a preliminary GDP number, and revises it three times until the next quarter GDP estimate is announced). While this is not exciting, it beats the situation we have seen in China² and Europe³ in Q1

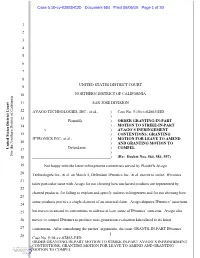

Case No. 5:10-cv-02863-EJD: ORDER GRANTING-IN-PART MOTION TO STRIKE-IN-PART

Case 5:10-cv-02863-EJD Document 684 Filed 08/05/15 Page 1 of 20. UNITED STATES DISTRICT COURT, NORTHERN DISTRICT OF CALIFORNIA, SAN JOSE DIVISION. AVAGO TECHNOLOGIES, INC., et al., Plaintiffs, v. IPTRONICS INC., et al., Defendants. Case No. 5:10-cv-02863-EJD. ORDER GRANTING-IN-PART MOTION TO STRIKE-IN-PART AVAGO’S INFRINGEMENT CONTENTIONS; GRANTING MOTION FOR LEAVE TO AMEND AND GRANTING MOTION TO COMPEL (Re: Docket Nos. 564, 584, 597). Not happy with the latest infringement contentions served by Plaintiffs Avago Technologies Inc., et al. on March 5, Defendant IPtronics Inc., et al. moves to strike. IPtronics takes particular issue with Avago for not showing how uncharted products are represented by charted products, for failing to explain and specify indirect infringement and for not showing how some products practice a single element of an asserted claim. Avago disputes IPtronics’ assertions, but moves to amend its contentions to address at least some of IPtronics’ concerns. Avago also moves to compel IPtronics to produce next-generation evaluation kits related to its latest contentions. After considering the parties’ arguments, the court GRANTS-IN-PART IPtronics’ motion to strike, GRANTS Avago’s motion for leave to amend and GRANTS Avago’s motion to compel.

Mellanox Technologies, Ltd

MELLANOX TECHNOLOGIES, LTD. FORM 10-K (Annual Report) Filed 02/28/14 for the Period Ending 12/31/13 Address 350 OAKMEAD PARKWAY, SUITE 100 SUNNYVALE, CA 94085 Telephone 408-970-3400 CIK 0001356104 Symbol MLNX SIC Code 3674 - Semiconductors and Related Devices Industry Semiconductors Sector Technology Fiscal Year 12/31 http://www.edgar-online.com © Copyright 2014, EDGAR Online, Inc. All Rights Reserved. Distribution and use of this document restricted under EDGAR Online, Inc. Terms of Use. Use these links to rapidly review the document TABLE OF CONTENTS PART III Table of Contents UNITED STATES SECURITIES AND EXCHANGE COMMISSION Washington, D.C. 20549 Form 10-K ANNUAL REPORT PURSUANT TO SECTION 13 OR 15(d) OF THE SECURITIES EXCHANGE ACT OF 1934 For the Fiscal Year Ended: December 31, 2013 OR TRANSITION REPORT PURSUANT TO SECTION 13 OR 15(d) OF THE SECURITIES EXCHANGE ACT OF 1934 For the transition period from to Commission File Number 001-33299 MELLANOX TECHNOLOGIES, LTD. (Exact name of registrant as specified in its charter) Israel 98 -0233400 (State or other jurisdiction of (I.R.S. Employer incorporation or organization) Identification Number) Mellanox Technologies, Ltd. Beit Mellanox, Yokneam, Israel 20692 (Address of principal executive offices, including zip code) +972-4-909-7200 (Registrant's telephone number, including area code) Securities registered pursuant to Section 12(b) of the Act: Title of Each Class: Name of Each Exchange on Which Registered: Ordinary shares, nominal value NIS 0.0175 per share The NASDAQ Stock Market, Inc. Securities registered pursuant to Section 12(g) of the Act: None (Title of Class) Indicate by check mark if the registrant is a well-known seasoned issuer, as defined in Rule 405 of the Securities Act. -

Unified Synchronized Data Acquisition Networks

UNIFIED SYNCHRONIZED DATA ACQUISITION NETWORKS. Inaugural dissertation for the academic degree of Doctor of Natural Sciences at the Universität Mannheim, submitted by Frank Karl Wolfgang Gerhard Rolf Lemke (Diplom-Informatiker, integrated diploma program in Mathematics and Computer Science) from Mutterstadt. Mannheim, 2012. Dean: Professor Dr. H. J. Müller, Universität Mannheim. Referee: Professor Dr. U. Brüning, Universität Heidelberg. Co-referee: Professor Dr. R. Männer, Universität Heidelberg. Date of oral examination: 30.11.2012. Abstract: The permanently evolving technical area of communication technology and the presence of more and more precise sensors and detectors enable options and solutions to challenges in science and industry. In high-energy physics, for example, it becomes possible with accurate measurements to observe particles almost at the speed of light in small-sized dimensions. Thereby, the enormous amounts of gathered data require modern high performance communication networks. Potential and efficient implementation of future readout chains will depend on new concepts and mechanisms. The main goals of this dissertation are to create new efficient synchronization mechanisms and to evolve readout systems for optimization of future sensor and detector systems. This happens in the context of the Compressed Baryonic Matter experiment, which is a part of the Facility for Antiproton and Ion Research, an international accelerator facility. It extends an accelerator complex in Darmstadt at the GSI Helmholtzzentrum für Schwerionenforschung GmbH. Initially, the challenges are specified and an analysis of the state of the art is presented. The resulting constraints and requirements influenced the design and development described within this dissertation. Subsequently, the different design and implementation tasks are discussed.

GIGOPTIX, INC. (Exact Name of Registrant As Specified in Its Charter)

UNITED STATES SECURITIES AND EXCHANGE COMMISSION, WASHINGTON, D.C. 20549. FORM 10-K (Mark One): [x] ANNUAL REPORT PURSUANT TO SECTION 13 OR 15(D) OF THE SECURITIES EXCHANGE ACT OF 1934 for the fiscal year ended December 31, 2010, or [ ] TRANSITION REPORT UNDER SECTION 13 OR 15(D) OF THE SECURITIES EXCHANGE ACT OF 1934 for the transition period from to . Commission file number: 333-153362. GIGOPTIX, INC. (Exact name of registrant as specified in its charter). State or Other Jurisdiction of Incorporation or Organization: Delaware. I.R.S. Employer Identification No.: 26-2439072. 2300 Geng Road, Suite 250, Palo Alto, CA 94303. Registrant’s telephone number: (650-424-1937). Securities registered pursuant to Section 12(b) of the Exchange Act: None. Securities registered pursuant to Section 12(g) of the Exchange Act: Common Stock, $0.001 par value per share (Title of Class). Indicate by check mark if the registrant is a well-known seasoned issuer, as defined in Rule 405 of the Securities Act. Yes [ ] No [x]. Indicate by check mark if the registrant is not required to file reports pursuant to Section 13 or Section 15(d) of the Act. Yes [ ] No [x]. Indicate by check mark whether the registrant (1) has filed all reports required to be filed by Section 13 or 15(d) of the Securities Exchange Act of 1934 during the preceding 12 months (or for such shorter period that the registrant was required to file such reports), and (2) has been subject to such filing requirements for the past 90 days.

Attend the World's Leading Optical Network and Communications Event

FREE Admission to the Expo. Attend the World’s Leading Optical Network and Communications Event. Exposition: 11-13 March 2014. Technical Conference: 9-13 March 2014. Moscone Center, San Francisco, California, USA. www.ofcconference.org/exhibitpass. Discover advanced optical technologies, applications and insights to take your network, and your business, to the next level. 550+ Exhibitors: one stop for all your business needs. See new products; network with colleagues; compare products; learn what’s hot; meet with vendors; attend educational sessions; explore customized solutions on the show floor; establish new contacts. View the exhibitor list on pages 14-15. The Most Comprehensive Exhibit Hall in the Industry! Communications Networks, Systems, Subsystems; Active and Passive Components; Fiber Cables, Assemblies; Fiber Splicers; Fiber Switches; Optical Add/Drop Multiplexers; Optical Amplifiers; Optical Cross-Connects; Optical Fibers; Optical Transmitters/Receivers; Sensors/Sensor Systems; Test Equipment; Wireless Communications; And More! Access to the three-day exposition is free! Register now at www.ofcconference.org/exhibitpass for your complimentary Exhibits Pass PLUS to attend all of the OFC 2014 Exposition and programming detailed in this brochure. Exhibit Pass Plus Gives You Access to 14 Educational Sessions Covering: Industry Trends; FTTX; Product Showcases; 100 G and Beyond; SDN; Silicon Photonics; Cloud Computing; Packet Optical Convergence; Optical Networks; And More! Reserve Your Hotel at the Guaranteed Lowest Rate: Book through Travel Planners to get the guaranteed lowest rates and special perks at hotels near the convention center in San Francisco.

Sino-Danish Photonics Workshop

Sino-Danish Photonics Workshop, 4 September 2012, Shenzhen, China. The Sino-Danish Photonics Workshop is a research workshop that showcases Chinese and Danish strongholds and focus areas and will allow for matchmaking and networking. The ambition is that the workshop will spur and stimulate future Sino-Danish collaborations within academia and industry. Programme: 9.00-9.10 Welcome and introduction, Science Consul Lars Christensen, Innovation Centre Denmark. 9.10-10.20 Session 1 – Photonic Communications: Photonic Research and International Collaboration at DTU Fotonik, Professor Idelfonso Tafur Monroy, Technical University of Denmark; Photonics Research at JORCEP (Sino-Swedish Joint Research Center of Photonics of the Royal Institute of Technology, Lund University and Zhejiang University), Professor Sailing He and associate professor Erik Forsberg, Zhejiang University; High Order Modulation Formats for High Capacity Optical Communication Systems, Professor Chao Lu, The Hong Kong Polytechnic University. 10.20-10.50 [...] Linear Variable Filters for Applications in Spectroscopy and Fluorescence Diagnostics, Business Development Manager Oliver Pust, DELTA. 12.30-13.30 Lunch and networking. 13.30-15.20 Session 3 – Wireless Communication and Radio over Fiber: Challenges and Solutions for Next Generation Metro and Access Networks, Assistant professor Jesper Bevensee Jensen, Technical University of Denmark; Novel Convergent PON based on OFDM, Professor Xiangjun Xin, Beijing University for Posts & Communications; High Capacity Hybrid Fiber-Wireless Transmission at the 75-110GHz

Switch-IB™ EDR Switch Silicon 36-Port Switch Device Provides Leading EDR 100Gb/s InfiniBand Throughput Per Port, Total of 7.2Tb/s Throughput

SWITCH SILICON PRODUCT BRIEF. Switch-IB™ EDR Switch Silicon: 36-port switch device provides leading EDR 100Gb/s InfiniBand throughput per port, total of 7.2Tb/s throughput. Mellanox continues its interconnect leadership by providing the highest performing server and storage system interconnect solution for Enterprise Data Centers, Cloud Computing, High-Performance Computing, and embedded environments. Switch-IB, the seventh generation switching IC from Mellanox, further improves the company’s tradition of leading bandwidth, low-latency, and scalability of InfiniBand switching. The industry-leading integration of 144 SerDes, which are flexible enough to run 1 Gb/s to 25 Gb/s speeds per lane, independent of network protocol, makes Switch-IB an obvious choice for OEMs that must address end-user requirements for faster and more robust applications. Reduced power and footprint and a fully integrated PHY … HIGHLIGHTS: Industry-leading cut-through latency; Low-cost solution (single-chip implementation); Fully integrated PHY (backplane and cable support; 1, 2 and 4 lanes). EDR: EDR InfiniBand technology uses the efficient 64/66 encoding while increasing the per lane signaling rate to 25Gb/s. By using EDR 4X-ports and Mellanox ConnectX® family of InfiniBand adapters, users can increase end-to-end bandwidth up to 78% over previous FDR solutions. InfiniBand Interconnect: Switch-IB InfiniBand devices enable industry standard networking, clustering, storage, and management protocols to seamlessly operate over a single “one-wire” converged network.
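
The headline figures in the Switch-IB excerpt above can be cross-checked with a little arithmetic. The following is a minimal sketch, not anything taken from the product brief: the function names are hypothetical, the 4-lane port width comes from the "EDR 4X-ports" wording, the FDR baseline of 56 Gb/s per port comes from the "FDR 56Gb/s InfiniBand" figure quoted in the Mellanox overview excerpt, and counting both directions of each port to reach 7.2 Tb/s is an assumption about how the aggregate is quoted.

```python
# Figures quoted in the excerpts; names below are illustrative only.
PORTS = 36            # ports on the switch device
LANES_PER_PORT = 4    # "4X" port width
EDR_LANE_GBPS = 25    # per-lane signaling rate quoted for EDR
FDR_PORT_GBPS = 56    # per-port rate quoted for FDR


def port_rate_gbps(lanes: int, lane_gbps: float) -> float:
    """Nominal port rate: number of lanes times per-lane rate."""
    return lanes * lane_gbps


def total_serdes(ports: int, lanes: int) -> int:
    """One SerDes per lane, so ports times lanes per port."""
    return ports * lanes


def aggregate_tbps(ports: int, port_gbps: float, directions: int = 2) -> float:
    """Aggregate throughput in Tb/s.

    Assumption: the quoted total counts both directions of every port.
    """
    return ports * port_gbps * directions / 1000


def gain_percent(new_gbps: float, old_gbps: float) -> float:
    """Relative bandwidth increase of new over old, in percent."""
    return (new_gbps / old_gbps - 1) * 100


if __name__ == "__main__":
    edr_port = port_rate_gbps(LANES_PER_PORT, EDR_LANE_GBPS)
    print("EDR port rate (Gb/s):", edr_port)
    print("SerDes count:", total_serdes(PORTS, LANES_PER_PORT))
    print("Aggregate (Tb/s):", aggregate_tbps(PORTS, edr_port))
    print("Gain over FDR (%):", round(gain_percent(edr_port, FDR_PORT_GBPS), 1))
```

Under these assumptions the numbers line up with the excerpt: 4 lanes at 25 Gb/s give the 100 Gb/s port rate, 36 ports of 4 lanes give the 144 SerDes, and 100 Gb/s against 56 Gb/s is an increase of a little over 78%.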