Chapter.2.2.2.18 Copy.Pdf

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Using Concrete Scales: a Practical Framework for Effective Visual Depiction of Complex Measures Fanny Chevalier, Romain Vuillemot, Guia Gali

Using Concrete Scales: A Practical Framework for Effective Visual Depiction of Complex Measures. Fanny Chevalier, Romain Vuillemot, and Guia Gali. To cite this version: Fanny Chevalier, Romain Vuillemot, Guia Gali. Using Concrete Scales: A Practical Framework for Effective Visual Depiction of Complex Measures. IEEE Transactions on Visualization and Computer Graphics, Institute of Electrical and Electronics Engineers, 2013, 19 (12), pp.2426-2435. 10.1109/TVCG.2013.210. hal-00851733v1. HAL Id: hal-00851733, https://hal.inria.fr/hal-00851733v1. Submitted on 8 Jan 2014 (v1), last revised 8 Jan 2014 (v2). HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Fig. 1. Illustrates popular representations of complex measures: (a) US Debt (Oto Godfrey, Demonocracy.info, 2011) explains the gravity of a 115 trillion dollar debt by progressively stacking 100 dollar bills next to familiar objects like an average-sized human, sports fields, or iconic New York City buildings [15]; (b) Sugar stacks (adapted from SugarStacks.com) compares caloric counts contained in various foods and drinks using sugar cubes [32]; and (c) How much water is on Earth? (Jack Cook, Woods Hole Oceanographic Institution and Howard Perlman, USGS, 2010) shows the volume of oceans and rivers as spheres whose sizes can be compared to that of Earth [38].

Charles and Ray Eames's Powers of Ten As Memento Mori

Chapter 2. Charles and Ray Eames’s Powers of Ten as Memento Mori. In a pantheon of potential documentaries to discuss as memento mori, Powers of Ten (1968/1977) stands out as one of the most prominent among them. As one of the definitive works of Charles and Ray Eames’s many successes, Powers reveals the Eameses as masterful designers of experiences that communicate compelling ideas. Perhaps unexpectedly even for many familiar with their work, one of those ideas has to do with memento mori. The film Powers of Ten: A Film Dealing with the Relative Size of Things in the Universe and the Effect of Adding Another Zero (1977) is a revised and updated version of an earlier film, Rough Sketch of a Proposed Film Dealing with the Powers of Ten and the Relative Size of Things in the Universe (1968). Both were made in the United States and produced by the Eames Office. They are widely available on DVD as Volume 1: Powers of Ten in the collection entitled The Films of Charles & Ray Eames, which includes several volumes and many short films, and also online through the Eames Office and on YouTube (http://www.eamesoffice.com/the-work/powers-of-ten/, accessed 27 May 2016). The 1977 version of Powers is in color, runs about nine minutes, and is the primary focus for the discussion that follows. Ralph Caplan (1976) writes that “[Powers of Ten] is an ‘idea film’ in which the idea is so compellingly objectified as to be palpably understood in some way by almost everyone” (36).

Biophilia, Gaia, Cosmos, and the Affectively Ecological

vital reenchantments. Before you start to read this book, take this moment to think about making a donation to punctum books, an independent non-profit press, at https://punctumbooks.com/support/. If you’re reading the e-book, you can click on the image below to go directly to our donations site. Any amount, no matter the size, is appreciated and will help us to keep our ship of fools afloat. Contributions from dedicated readers will also help us to keep our commons open and to cultivate new work that can’t find a welcoming port elsewhere. Our adventure is not possible without your support. Vive la Open Access. Fig. 1. Hieronymus Bosch, Ship of Fools (1490–1500). vital reenchantments: biophilia, gaia, cosmos, and the affectively ecological. Copyright © 2019 by Lauren Greyson. This work carries a Creative Commons BY-NC-SA 4.0 International license, which means that you are free to copy and redistribute the material in any medium or format, and you may also remix, transform and build upon the material, as long as you clearly attribute the work to the authors (but not in a way that suggests the authors or punctum books endorses you and your work), you do not use this work for commercial gain in any form whatsoever, and that for any remixing and transformation, you distribute your rebuild under the same license. http://creativecommons.org/licenses/by-nc-sa/4.0/ First published in 2019 by punctum books, Earth, Milky Way. https://punctumbooks.com ISBN-13: 978-1-950192-07-6 (print). ISBN-13: 978-1-950192-08-3 (ePDF). LCCN: 2018968577. Library of Congress Cataloging Data is available from the Library of Congress. Editorial team: Casey Coffee and Eileen A.

Urantia, a Cosmic View of the Architecture of the Universe

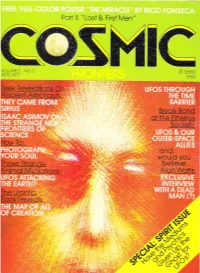

Cosmic Frontiers, Volume 2, Number 2, April 1977. $1.50. Part II: "Last & First Men". CONTENTS. REGULAR FEATURES: Editorial; Transmissions; Saucer Waves; Astronomy Lesson; Audio-Visual Scanners; Cosmic Print-Outs. COSMIC SPOTLIGHTS: They Came From Sirius (Marc Vito); Jack, The Animal Mutilator (Steve Erdmann); The Urantia Book: Architecture of the Universe; Interview with Alan Watts; Kirlian Photography of the Human Aura; UFOs and Time Travel: Doing the Cosmic Wobble (John Green). CF SERIAL: "Last & First Men," Part 2 (Olaf Stapledon). Cosmic Frontiers is published bi-monthly by Cosmic Publications, Inc., New York, New York 10017. Copyright © 1976 by Cosmic Publications, Inc. All rights reserved, including the right of reproduction in whole or in part. Single copy price: $1.50. Editor: Arthur Catti. Art Director: Vincent Priore. Associate Art Director: Frank DeMarco. Contributing Editors: John Green, Connie MacNamee, Charles Lane, Wm. Lansing Brown, Sasho Katz, A. A. Zachow, Joseph Belvedere. Inside Covers: Rico Fonseca. THE URANTIA BOOK: The Cosmic View of the ARCHITECTURE of the UNIVERSE and How Your Spirit Evolves, by A. A. Zachow. Why was mankind created? Does each of us have a pur

Detection of PAH and Far-Infrared Emission from the Cosmic Eye

Accepted for publication in ApJ. Preprint typeset using LaTeX style emulateapj v. 08/22/09. DETECTION OF PAH AND FAR-INFRARED EMISSION FROM THE COSMIC EYE: PROBING THE DUST AND STAR FORMATION OF LYMAN BREAK GALAXIES. B. Siana1, Ian Smail2, A. M. Swinbank2, J. Richard2, H. I. Teplitz3, K. E. K. Coppin2, R. S. Ellis1, D. P. Stark4, J.-P. Kneib5, A. C. Edge2. ABSTRACT: We report the results of a Spitzer infrared study of the Cosmic Eye, a strongly lensed, $L^{*}_{\mathrm{UV}}$ Lyman Break Galaxy (LBG) at $z = 3.074$. We obtained Spitzer IRS spectroscopy as well as MIPS 24 and 70 µm photometry. The Eye is detected with high significance at both 24 and 70 µm and, when including a flux limit at 3.5 mm, we estimate an infrared luminosity of $L_{\mathrm{IR}} = 8.3^{+4.7}_{-4.4} \times 10^{11}\,L_{\odot}$ assuming a magnification of $28 \pm 3$. This $L_{\mathrm{IR}}$ is eight times lower than that predicted from the rest-frame UV properties assuming a Calzetti reddening law. This has also been observed in other young LBGs, and indicates that the dust reddening law may be steeper in these galaxies. The mid-IR spectrum shows strong PAH emission at 6.2 and 7.7 µm, with equivalent widths near the maximum values observed in star-forming galaxies at any redshift. The $L_{\mathrm{PAH}}$-to-$L_{\mathrm{IR}}$ ratio lies close to the relation measured in local starbursts. Therefore, $L_{\mathrm{PAH}}$ or $L_{\mathrm{MIR}}$ may be used to estimate $L_{\mathrm{IR}}$, and thus the star formation rate, of LBGs, whose fluxes at longer wavelengths are typically below current confusion limits.

Close-Up View of a Luminous Star-Forming Galaxy at z = 2.95

Close-up view of a luminous star-forming galaxy at z = 2.95. S. Berta, A. J. Young, P. Cox, R. Neri, B. M. Jones, A. J. Baker, A. Omont, L. Dunne, A. Carnero Rosell, L. Marchetti, et al. To cite this version: S. Berta, A. J. Young, P. Cox, R. Neri, B. M. Jones, et al. Close-up view of a luminous star-forming galaxy at z = 2.95. Astronomy and Astrophysics - A&A, EDP Sciences, 2021, 646, pp.A122. 10.1051/0004-6361/202039743. hal-03147428. HAL Id: hal-03147428, https://hal.archives-ouvertes.fr/hal-03147428. Submitted on 19 Feb 2021. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. A&A 646, A122 (2021), https://doi.org/10.1051/0004-6361/202039743, © S. Berta et al. 2021, Astronomy & Astrophysics. S. Berta1, A. J. Young2, P. Cox3, R. Neri1, B. M. Jones4, A. J. Baker2, A. Omont3, L. Dunne5, A. Carnero Rosell6,7, L. Marchetti8,9,10, M. Negrello5, C. Yang11, D. A. Riechers12,13, H. Dannerbauer6,7, I.

A Biblical View of the Cosmos the Earth Is Stationary

A BIBLICAL VIEW OF THE COSMOS: THE EARTH IS STATIONARY. Dr Willie Marais. Publisher: Deon Roelofse, Postnet Suite 132, Private Bag x504, Sinoville, 0129. E-mail: [email protected] Tel: 012-548-6639. First Print: March 2010. ISBN NR: 978-1-920290-55-9. All rights reserved. No part of this publication may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopy, recording or any information storage and retrieval system, without permission in writing from the publisher. Cover design: Anneette Genis. Editing: Deon & Sonja Roelofse. Printing and binding by: Groep 7 Printers and Publishers CK [email protected] CONTENTS: Acknowledgements; Introduction; Recommendations; 1. The creation of heaven and earth; 2. Is Genesis 2 a second telling of creation?; 3. The length of the days of creation; 4. The cause of the seasons on earth; 5. The reason for the creation of heaven and earth; 6. Are there other solar systems?; 7. The shape of the earth; 8. The pillars of the earth; 9. The sovereignty of the heaven over the earth; 10. The long day of Joshua; 11. The size of the cosmos; 12. The age of the earth and of man; 13. Is the cosmic view of the bible correct?; 14.

NCSA Access Magazine

CONTACTS NCSA Contacts Directory http://www.ncsa.uiuc.edu/General/NCSAContacts.html Allocations Education & Outreach Division Orders for Publications, http://www.ncsa.uiuc.edu/General/Allocations/ApplyTop.html http://www.ncsa.uiuc.edu/edu/EduHome.html NCSA Software, and Multimedia Radha Nandkumar John Ziebarth, Associate Director http://www.ncsa.uiuc.edu/Pubs/ 217-244-0650 217-244-1961 TechResCatalog/TRC.TOC.html [email protected] [email protected] Debbie Shirley [email protected] Applications Division/Faculty Program Education http://www.ncsa.uiuc.edu/Apps/AppsIntro.html Scott Lathrop Public Information Office Melanie Loots, Associate Director 217-244-1099 http://www.ncsa.uiuc.edu/General/ 217-244-2921 [email protected] PIO/NCSAinfo.html [email protected] John Melchi Outreach 217-244-3049 Allison Clark (information) Alaina Kanfer 217-244-8195 fax 217-244-0768 217-244-0876 [email protected] [email protected] [email protected] Publications Group Visitors Program Training http://www.ncsa.uiuc.edu/Pubs/ Jean Soliday http://www.ncsa.uiuc.edu/General/ PubsIntro.html 217-244-1972 Training/training_homepage.html Melissa Johnson [email protected] Mary Bea Walker 217-244-0645 217-244-9883 melissaj@ncsa.uiuc.edu Computing & Communications Division mbwalker@ncsa.uiuc.edu http://www.ncsa.uiuc.edu/General/CC/CCHome.html Software Development Division Charles Catlett, Associate Director Industrial Program http://www.ncsa.uiuc.edu/SDG/SDGintro.html 217-333-1163 http://www.ncsa.uiuc.edu/General/IndusProg/ Joseph Hardin, Associate Director [email protected] IndProg.html 217-244-7802 John Stevenson, Corporate Officer hardin@ncsa.uiuc.edu Ken Sartain (information) 217-244-0474 217-244-0103 [email protected] Jae Allen (information) sartain@ncsa.uiuc.edu 217-244-3364 Marketing Communications Division [email protected] Consulting Services http://www.ncsa.uiuc.edu/General/MarComm/ http://www.ncsa.

Basic Statistics Range, Mean, Median, Mode, Variance, Standard Deviation

Kirkup Chapter 1 Lecture Notes

- SEF Workshop presentation: Science Fair Data Analysis_Sohl.pptx
- EDA: Exploratory Data Analysis
- Aim of an experiment: experiments should have a goal. Make note of interesting issues for later. Be careful not to get side-tracked.
- Designing an experiment: put effort where it makes sense; do a preliminary fractional error analysis to determine where you need to improve.
- Units analysis and equation checking; units website
- Writing down values: scientific notation and SI prefixes (http://en.wikipedia.org/wiki/Metric_prefix)
- Significant figures
- Histograms in Excel
- Bin size, reasonable round numbers: 15-16, not 14.8-16.1
- Bin size, reasonable quantity: rule of thumb is number of bins = $\sqrt{n}$, where $n$ is the number of data points.
- Basic statistics: range, mean, median, mode, variance, standard deviation
- Population, sample
- Cool trick for small sample sizes. Estimate this works for n < 12 or so. √
- Random error vs. systematic error

Metric prefixes

| Prefix | Symbol | 1000^m | 10^n | Decimal | Short scale | Long scale | Since |
|---|---|---|---|---|---|---|---|
| yotta | Y | 1000^8 | 10^24 | 1000000000000000000000000 | septillion | quadrillion | 1991 |
| zetta | Z | 1000^7 | 10^21 | 1000000000000000000000 | sextillion | trilliard | 1991 |
| exa | E | 1000^6 | 10^18 | 1000000000000000000 | quintillion | trillion | 1975 |
| peta | P | 1000^5 | 10^15 | 1000000000000000 | quadrillion | billiard | 1975 |
| tera | T | 1000^4 | 10^12 | 1000000000000 | trillion | billion | 1960 |
| giga | G | 1000^3 | 10^9 | 1000000000 | billion | milliard | 1960 |
| mega | M | 1000^2 | 10^6 | 1000000 | million | million | 1960 |
| kilo | k | 1000^1 | 10^3 | 1000 | thousand | thousand | 1795 |
| hecto | h | 1000^(2/3) | 10^2 | 100 | hundred | hundred | 1795 |
| deca | da | 1000^(1/3) | 10^1 | 10 | ten | ten | 1795 |
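
The basic statistics and the square-root bin rule named in these notes can be sketched in a few lines of Python. This is a minimal illustration, not part of the lecture notes: the function names `summarize` and `sqrt_rule_bins`, the sample readings, and the choice to round the square root to the nearest integer are all assumptions made for the example. Only the standard-library `statistics` and `math` modules are used.

```python
import math
import statistics


def summarize(data):
    """Return the basic statistics named in the notes for a list of numbers."""
    values = sorted(data)
    n = len(values)
    return {
        "n": n,
        "range": values[-1] - values[0],
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "mode": statistics.mode(values),
        # Sample statistics divide by n - 1; population statistics divide by n.
        "sample_variance": statistics.variance(values),
        "sample_stdev": statistics.stdev(values),
        "population_variance": statistics.pvariance(values),
        "population_stdev": statistics.pstdev(values),
    }


def sqrt_rule_bins(n):
    """Rule-of-thumb bin count: sqrt(n), rounded (an assumption), at least 1."""
    return max(1, round(math.sqrt(n)))


# Hypothetical measurements, invented for this sketch.
readings = [15, 16, 15, 17, 18, 15, 16, 19, 20]
summary = summarize(readings)
bins = sqrt_rule_bins(summary["n"])
print(summary)
print("suggested bins:", bins)
```

Reporting both the sample and the population variance mirrors the "Population, sample" distinction in the notes; which one applies depends on whether the data are the whole population or a sample drawn from it.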

Review On: Fractal Antenna Design Geometries and Its Applications

www.ijecs.in International Journal Of Engineering And Computer Science ISSN:2319-7242 Volume 3, Issue 9, September 2014, Page No. 8270-8275. Review On: Fractal Antenna Design Geometries and Its Applications. Ankita Tiwari1, Dr. Munish Rattan2, Isha Gupta3. 1GNDEC University, Department of Electronics and Communication Engineering, Gill Road, Ludhiana 141001, India [email protected] 2GNDEC University, Department of Electronics and Communication Engineering, Gill Road, Ludhiana 141001, India [email protected] 3GNDEC University, Department of Electronics and Communication Engineering, Gill Road, Ludhiana 141001, India [email protected] Abstract: In this review paper, we provide a comprehensive review of developments in the field of fractal antenna engineering. First we give a brief introduction to fractal antennas and then proceed with their design geometries along with their applications in different fields. It will be shown how to quantify the space filling abilities of fractal geometries, and how this correlates with miniaturization of fractal antennas. Keywords: Fractals, self-similar, space filling, multiband. 1. Introduction. Modern telecommunication systems require antennas with wider bandwidths and smaller dimensions as compared to the conventional antennas. This was the beginning of antenna research in various directions; use of fractal shaped antenna elements was one of them. Some of these geometries have been particularly useful in reducing the size of the antenna, while others exhibit multi-band characteristics. Several antenna configurations based on fractal geometries have been reported irrespective of various extremely irregular curves or shapes that repeat themselves at any scale on which they are examined. The term “Fractal” means linguistically “broken” or “fractured” from the Latin “fractus.” The term was coined by Benoit Mandelbrot, a French mathematician, about 20 years ago in his book “The fractal geometry of Nature” [5].

Spatial Accessibility to Amenities in Fractal and Non Fractal Urban Patterns Cécile Tannier, Gilles Vuidel, Hélène Houot, Pierre Frankhauser

Spatial accessibility to amenities in fractal and non fractal urban patterns. Cécile Tannier, Gilles Vuidel, Hélène Houot, Pierre Frankhauser. To cite this version: Cécile Tannier, Gilles Vuidel, Hélène Houot, Pierre Frankhauser. Spatial accessibility to amenities in fractal and non fractal urban patterns. Environment and Planning B: Planning and Design, SAGE Publications, 2012, 39 (5), pp.801-819. 10.1068/b37132. hal-00804263. HAL Id: hal-00804263, https://hal.archives-ouvertes.fr/hal-00804263. Submitted on 14 Jun 2021. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. TANNIER C., VUIDEL G., HOUOT H., FRANKHAUSER P. (2012), Spatial accessibility to amenities in fractal and non fractal urban patterns, Environment and Planning B: Planning and Design, vol. 39, n°5, pp. 801-819. EPB 137-132: Spatial accessibility to amenities in fractal and non fractal urban patterns. Cécile TANNIER* ([email protected]), corresponding author; Gilles VUIDEL* ([email protected]); Hélène HOUOT* ([email protected]); Pierre FRANKHAUSER* ([email protected]). * ThéMA, CNRS - University of Franche-Comté, 32 rue Mégevand, F-25 030 Besançon Cedex, France. Tel: +33 381 66 54 81. Fax: +33 381 66 53 55. Abstract: One of the challenges of urban planning and design is to come up with an optimal urban form that meets all of the environmental, social and economic expectations of sustainable urban development.

Archons (Commanders) [NOTICE: They Are NOT Alien Parasites], and Then, in a Mirror Image of the Great Emanations of the Pleroma, Hundreds of Lesser Angels

ARCHONS: HIDDEN RULERS THROUGH THE AGES. WATCH THIS IMPORTANT VIDEO: UFOs, Aliens, and the Question of Contact. MUST-SEE: THE OCCULT REASON FOR PSYCHOPATHY. Organic Portals: Aliens and Psychopaths. KNOWLEDGE THROUGH GNOSIS: Boris Mouravieff, GNOSIS. IN THE BEGINNING ... The Gnostic core belief was a strong dualism: that the world of matter was deadening and inferior to a remote nonphysical home, to which an interior divine spark in most humans aspired to return after death. This led them to an absorption with the Jewish creation myths in Genesis, which they obsessively reinterpreted to formulate allegorical explanations of how humans ended up trapped in the world of matter. The basic Gnostic story, which varied in details from teacher to teacher, was this: In the beginning there was an unknowable, immaterial, and invisible God, sometimes called the Father of All and sometimes by other names. “He” was neither male nor female, and was composed of an implicitly finite amount of a living nonphysical substance. Surrounding this God was a great empty region called the Pleroma (the fullness). Beyond the Pleroma lay empty space. The God acted to fill the Pleroma through a series of emanations, a squeezing off of small portions of his/its nonphysical energetic divine material. In most accounts there are thirty emanations in fifteen complementary pairs, each getting slightly less of the divine material and therefore being slightly weaker. The emanations are called Aeons (eternities) and are mostly named personifications in Greek of abstract ideas.